Introduction

Kernel regression is a non parametric estimation technique to fit your data. The idea is putting a set of identical weighted functions called kernel local to each observational data point. This is similar to K-nearest neighbor, so it does not assume any underlying distribution to estimate the regression function. In the image below you have a set of raw data points. As you can see, it is not possible to use linear regression to fit your data in your function.

The easiest way of doing this is to use K-nearest Neighbor.

K-nearest neighbor algorithm (KNN) is part of supervised learning that has been used in many applications in the field of data mining, statistical pattern recognition and many others.

KNN is a method for classifying objects based on closest training examples in the feature space.

An object is classified by a majority vote of its neighbors. K is always a positive integer. The neighbors are taken from a set of objects for which the correct classification is known.

It is usual to use the Euclidean distance, though other distance measures such as the Manhattean distance could in principle be used instead.

The algorithm on how to compute the K-nearest neighbors is as follows:

- Determine the parameter K = number of nearest neighbors beforehand. This value is all up to you.

- Calculate the distance between the query-instance and all the training samples. You can use any distance algorithm.

- Sort the distances for all the training samples and determine the nearest neighbor based on the K-th minimum distance.

- Since this is supervised learning, get all the Categories of your training data for the sorted value which fall under K.

- Use the majority of nearest neighbors as the prediction value.

For a tutorial and implementation of the different distances, please refer to my tutorial on quantitative variable distances.

Background

In a project on education and relating to how students and novice programmers learn to program, in several classes of novice first year students, an assignment is given to all the students to perform in a certain time period. Each compilation of the student is captured, this means all code and content and different file classes. This is a "snap-shot" of each program. So for the purposes of this tutorial, I will use a sample data of number of compiler errors; time in performing the exercise, and the number of compiles. Each of these students have a final grade for the course. This will be used to determine how well they have done on a particular assignment.

Using the Code

The following is a sample data which is being used as the training data. Again supervised learning requires you to know what the "category" of each training data is and to what category they belong to. In terms of this project, my categories are the grades of the course from A, A-, B+, B, B-, C, F.

double[] StudentA = new double[] { 9, 32, 65.1 };

double[] StudentB = new double[] { 12, 65, 86.1 };

double[] StudentC = new double[] { 19, 54, 45.1 };

The following code places each student in a list so as to be called later for a comparison of each student to the queried data.

List<double[]> TrainingSet = new List<double[]>();

TrainingSet.Add(StudentA);

TrainingSet.Add(StudentB);

TrainingSet.Add(StudentC);

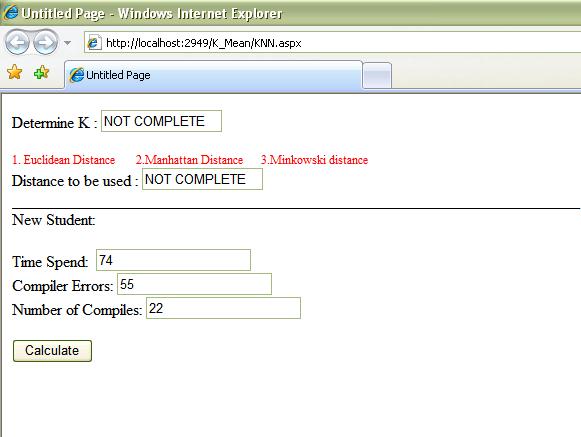

The following code grabs the attributes of a new student and places it as the queried data to be compared to each student in the training data.

List<double> newPlayer = new List<double>();

newPlayer.Add(Convert.ToDouble(TextBox1.Text));

newPlayer.Add(Convert.ToDouble(TextBox2.Text));

newPlayer.Add(Convert.ToDouble(TextBox3.Text));

double[] newSudent = (double[])newPlayer.ToArray();

Finally, the training data (each student) is compared to the queried data based on the distances of each. This means you get the minimum distance of your queried data to each of the students attributes. Example: Student A and the new student, then student B and new student.

double distance = 0.0;

for (int i = 0; i < TrainingSet.Count; i++)

{

Response.Write("<hr/>");

distance = KNNs.EuclideanDistance(newSudent, TrainingSet[i]);

Response.Write("<br/>Euclidean Distance New Student : " + distance);

distance = KNNs.ChebyshevDistance(newSudent, TrainingSet[i]);

Response.Write("<br/>Chebyshev Distance New Student : " + distance);

distance = KNNs.ManhattanDistance(newSudent, TrainingSet[i]);

Response.Write("<br/>Manhattan Distance New Student : " + distance);

}

The shortest distance is the category your queried data will fall on based on the K. This means that if you have K=2, you select the 2 shortest distances and compare the categories.

Advantages

- Robust to noisy training data (especially if we use inverse square of weighted distance as the “distance”)

- Effective if the training data is large

Disadvantages

- Need to determine value of parameter K (number of nearest neighbors)

- Distance based learning is not clear which type of distance to use and which attribute to use to produce the best results. Shall we use all attributes or certain attributes only?

- Computation cost is quite high because we need to compute distance of each query instance to all training samples. Some indexing (e.g. K-D tree) may reduce this computational cost.

References

- WIKIPEDIA

- Teknomo, Kardi. K-Nearest Neighbors Tutorial. http:\\people.revoledu.com\kardi\ tutorial\KNN\

History

- Feb 1 2009: Initial version

My name : Aresh Saharkhiz.

Origin: Unknown

Education : BS Computer Science

MS Computer Science

Interests : Genetic Programming

Neural Networks

Game AI

Programming: (language == statementEnd(semicolon)

http://sites.google.com/site/docaresh

Skill:

Flash

Carrara 3D

PHP,ASP,ASP.NET

J2SE

General

General  News

News  Suggestion

Suggestion  Question

Question  Bug

Bug  Answer

Answer  Joke

Joke  Praise

Praise  Rant

Rant  Admin

Admin