Introduction

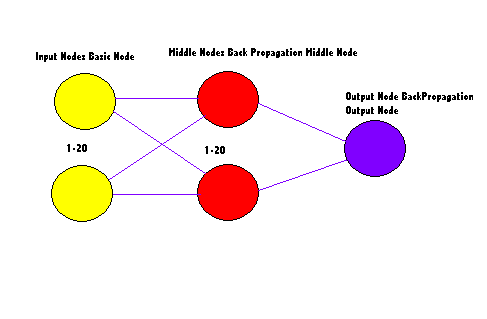

The Back Propagation Word Network is a continuation of the idea developed in the Adaline Word Network, and is set up so that it can learn to tell the difference between words in a given text. The exact words are not really important for testing the network, nor is the text that the network trains or runs against. I am currently using the words "and" and "the" in the demonstration program provided here, and the text I am using is The Origin Of the Species by Charles Darwin. The aim of the program is to extend the idea of the XOR sample shown in the previous demonstration by giving the network two words to learn. The network must then learn to differentiate not only between the two words, but between the two words and all the other words in the given text. I do this by training the network with a prepared file that contains the first chapter of The Origin Of the Species. Once the network has been trained, it runs against the full text of the book and extracts every sentence that contains either "and" or "the", but not both and not the same word twice.
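The rule in the last sentence behaves like an XOR over the two words. As a minimal sketch, assuming that a sentence's target output is 1 when its first twenty words contain exactly one occurrence of "and" or "the" in total (compared case-sensitively) and 0 otherwise, the expected output for a pattern could be computed as below. `TargetRule` and `Target` are hypothetical names and not part of the demonstration program, whose exact rule may differ in detail.

```csharp
using System;
using System.Linq;

// Hypothetical helper illustrating one reading of the training target:
// 1.0 when exactly one occurrence of wordA or wordB appears among the first
// maxWords words, 0.0 otherwise (neither word, both words, or one word twice).
public static class TargetRule
{
    static readonly char[] Separators = { ' ', ',', ';', ':', '\t', '\r', '\n' };

    public static double Target(string sentence, string wordA = "and",
                                string wordB = "the", int maxWords = 20)
    {
        int hits = sentence
            .Split(Separators, StringSplitOptions.RemoveEmptyEntries)
            .Take(maxWords)
            // Ordinal comparison keeps the match case-sensitive: "The" != "the".
            .Count(w => string.Equals(w, wordA, StringComparison.Ordinal)
                     || string.Equals(w, wordB, StringComparison.Ordinal));

        return hits == 1 ? 1.0 : 0.0;
    }
}
```

For example, "the cat and the dog" yields 0 because it contains three matches, while "The cat sat" also yields 0 because "The" is not "the".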

The BackPropagation Word Network

There is surprisingly little change made to get from the standard BackPropagation Network to the BackPropagation Word Network, with the only required change being for the pattern code. Everything else is as it was in the previous code.

The BackPropagation Word Pattern Class

The BackPropagation Word Pattern Class inherits from the Adaline Word Pattern Class and overrides the constructors and the class name and data functions. It would technically have been possible to extend the Adaline Word Pattern class to include the extra constructor which is used for the demonstration code, but I thought it would be better to keep it separate for now.

Training

Training for the BackPropagation Word Network is almost identical to the BackPropagation Network sample apart from the fact that we are dealing with a pattern class that has words instead of numbers.

Once again, the Run function reuses the original Adaline node Run code from earlier, and the BackPropagation Network class calls it with:

```csharp
for( int i=nFirstMiddleNode; i<this.Nodes.Count; i++ )
{
    ( ( AdalineNode )this.Nodes[ i ] ).Run( Values.NodeValue );
}
```

which cycles through the nodes starting at the first middle node and calls the AdalineNode class Run function.
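To show what that loop amounts to, here is a minimal, self-contained sketch of a forward pass through a fully connected 20-20-1 network, using the node numbering of the saved file shown later (inputs 0 to 19, middle nodes 20 to 39, output node 40). The logistic activation, the array-based weight storage and the names `ForwardPass`, `weights` and `bias` are assumptions for illustration; the library itself keeps its weights in link objects.

```csharp
using System;

// Sketch of a forward pass through a fully connected 20-20-1 network.
// Node numbering follows the saved file: inputs 0-19, middle 20-39, output 40.
// The logistic activation and the array-based weight storage are assumptions.
public static class ForwardPass
{
    public const int Inputs = 20;
    public const int Middle = 20;
    public const int FirstMiddleNode = Inputs;          // 20
    public const int FirstOutputNode = Inputs + Middle; // 40
    public const int NodeCount = FirstOutputNode + 1;   // 41

    static double Sigmoid(double x) => 1.0 / (1.0 + Math.Exp(-x));

    // values:  one entry per node; entries 0-19 must already hold the inputs.
    // weights: weights[node][k] is the weight from the k-th node of the previous layer.
    // bias:    bias[node] is the weight applied to a constant bias input of 1.
    public static void Run(double[] values, double[][] weights, double[] bias)
    {
        // As in the loop above: start at the first middle node and visit every
        // later node in order, so the middle layer is finished before the output.
        for (int i = FirstMiddleNode; i < NodeCount; i++)
        {
            int prevStart = i < FirstOutputNode ? 0 : FirstMiddleNode;
            int prevCount = i < FirstOutputNode ? Inputs : Middle;

            double sum = bias[i] * 1.0;
            for (int k = 0; k < prevCount; k++)
                sum += weights[i][k] * values[prevStart + k];

            values[i] = Sigmoid(sum);
        }
    }
}
```

Visiting the nodes in index order is what makes a single loop sufficient: every middle node has its value before the output node reads it.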

The code for the BackPropagation Word Network training loop is:

```csharp
while( nGood < patterns.Count )
{
    nGood = 0;

    for( int i=0; i<patterns.Count; i++ )
    {
        // Load the twenty word values for this sentence into the input nodes
        for( int n=0; n<20; n++ )
        {
            ( ( BasicNode )bpNetwork.Nodes[ n ] ).SetValue( Values.NodeValue,
                ( ( BackPropagationWordPattern )patterns[ i ] ).GetInSetAt( n ) );
        }

        // Forward pass, output error and weight update for this pattern
        bpNetwork.Run();
        bpNetwork.SetOutputError( ( BackPropagationWordPattern )patterns[ i ] );
        bpNetwork.Learn();

        dTemp = bpNetwork.GetOutputValue( 0 );
        dCalculatedTemp = bpNetwork.GetCalculatedOutputValue( 0,
            ( ( BackPropagationWordPattern )patterns[ i ] ).OutputValue( 0 ) );

        strTemp.Remove( 0, strTemp.Length );

        netWorkText.AppendText( "Absolute output value = "
            + dCalculatedTemp.ToString() + "\n" );

        // A result is counted as good if it falls within the tolerance of 0 or of 1
        if( dCalculatedTemp < dBackPropagationWordTolerance )
        {
            strTemp.Append( "Output value is within tolerance level of "
                + dBackPropagationWordTolerance.ToString() + " of 0 " );
            nGood++;
        }
        else if( dCalculatedTemp > ( 1 - dBackPropagationWordTolerance ) )
        {
            strTemp.Append( "Output value is within tolerance level of "
                + dBackPropagationWordTolerance.ToString() + " of 1 " );
            nGood++;
        }
        else
        {
            strTemp.Append( "Output value is outside tolerance levels " );
        }

        strTemp.Append( " " );

        // Echo the words of the sentence that was tested
        for( int n=0; n<
            ( ( BackPropagationWordPattern )patterns[ i ] ).InputSize(); n++ )
        {
            strTemp.Append(
                ( ( BackPropagationWordPattern )patterns[ i ] ).InputValue( n )
                + " " );
        }

        strTemp.Append( "\n Output Value = "
            + ( ( BackPropagationWordPattern )patterns[ i ] )
            .OutputValue( Values.NodeValue ) );

        netWorkText.AppendText( "Test = "
            + dCalculatedTemp.ToString() + " Tolerance = "
            + dBackPropagationWordTolerance.ToString() + " "
            + strTemp.ToString() + "\n" );
    }

    netWorkText.AppendText( "\nNetwork iteration "
        + nIteration.ToString() + " produced "
        + nGood.ToString() + " good results out of "
        + patterns.Count.ToString() + "\n" );

    nIteration++;
}
```

This starts from the same basic premise as the previous networks: we loop through all the patterns stored in the Pattern class object until an iteration returns a 100% good count. The first difference is that we are now graduating to whole sentences, or at least to sentences that are no more than twenty words long. The values from the pattern are loaded into the node values with the code:

```csharp
for( int n=0; n<20; n++ )
{
    ( ( BasicNode )bpNetwork.Nodes[ n ] ).SetValue( Values.NodeValue,
        ( ( BackPropagationWordPattern )patterns[ i ] ).GetInSetAt( n ) );
}
```

This cycles through the twenty input nodes on the network and sets each node's value to the value stored in the pattern. Remember, the GetInSetAt function returns the linearly separable numerical value for the word, not the word itself. The Run, SetOutputError and Learn functions are then called exactly as they are in the previous BackPropagation example.
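As an illustration of how a sentence becomes twenty input values, here is a sketch under stated assumptions: words are split on spaces, only the first twenty are used, unused slots stay at 0.0, and `WordValue` is a hypothetical placeholder for the Adaline Word pattern's real word-to-number mapping, which is not reproduced here. `InputBuilder` is likewise a hypothetical name.

```csharp
using System;

// Hypothetical sketch of turning one sentence into the 20 input node values.
public static class InputBuilder
{
    public const int InputNodes = 20;

    // Placeholder mapping (an assumption, not the library's): sums the character
    // codes and scales into [0, 1). It is case-sensitive ("The" != "the"), but
    // anagrams collide, which a real mapping would need to avoid.
    public static double WordValue(string word)
    {
        int sum = 0;
        foreach (char c in word) sum += c;
        return (sum % 10000) / 10000.0;
    }

    // Only the first 20 words are used; short sentences leave trailing slots at 0.0.
    public static double[] Build(string sentence)
    {
        var inputs = new double[InputNodes];
        string[] words = sentence.Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries);
        for (int n = 0; n < InputNodes && n < words.Length; n++)
            inputs[n] = WordValue(words[n]);
        return inputs;
    }
}
```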

The rest of the code within the loop is merely confirming if the answer is correct or not by seeing if the final value returned from the output node of the network is the same value as the one that was entered into the pattern output value.
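The tolerance test in the loop can be isolated into a small function. This sketch, with the hypothetical names `ToleranceCheck`, `Classify` and `CountGood`, mirrors the two bands used in the listing: anything below the tolerance counts as 0 and anything above 1 minus the tolerance counts as 1. With the tolerance of 0.4 used in the logs, values between 0.4 and 0.6 fall outside both bands. The listing counts a result as good if it lands in either band; `CountGood` goes one step further and, as an assumption, only counts a result when its band matches the pattern's target output.

```csharp
// Sketch of the tolerance test from the training loop (hypothetical names).
public static class ToleranceCheck
{
    // Returns 0 or 1 when the output lies inside the tolerance band around
    // that value, or -1 when it lies outside both bands.
    public static int Classify(double output, double tolerance)
    {
        if (output < tolerance) return 0;
        if (output > 1.0 - tolerance) return 1;
        return -1;
    }

    // Stricter variant (an assumption): a result is good only when the band
    // it falls in matches the target output of its pattern.
    public static int CountGood(double[] outputs, int[] targets, double tolerance)
    {
        int good = 0;
        for (int i = 0; i < outputs.Length; i++)
            if (Classify(outputs[i], tolerance) == targets[i]) good++;
        return good;
    }
}
```

Training would then stop once `CountGood` equals the number of patterns, which is the same stopping condition as `nGood < patterns.Count` in the loop.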

Saving And Loading

The saving and loading for the BackPropagation Word Network work the same way as in the previous example, although the saved file is considerably longer. Because of its length, I'll only show a portion of it here.

```xml
<?xml version="1.0" encoding="utf-8"?>
<BackPropagationNetwork>
  <NumberOfLayers>3</NumberOfLayers>
  <FirstMiddleNode>20</FirstMiddleNode>
  <FirstOutputNode>40</FirstOutputNode>
  <Momentum>0.9</Momentum>
  <Layers>
    <Layer0>20</Layer0>
    <Layer1>20</Layer1>
    <Layer2>1</Layer2>
  </Layers>
  <BasicNode>
    <Identifier>0</Identifier>
    <NodeValue>0.1074</NodeValue>
    <NodeError>0</NodeError>
    <Bias>
      <BiasValue>1</BiasValue>
    </Bias>
  </BasicNode>
  <!-- through to -->
  <BasicNode>
    <Identifier>19</Identifier>
    <NodeValue>0.2406</NodeValue>
    <NodeError>0</NodeError>
    <Bias>
      <BiasValue>1</BiasValue>
    </Bias>
  </BasicNode>
  <BackPropagationMiddleNode>
    <BackPropagationOutputNode>
      <AdalineNode>
        <BasicNode>
          <Identifier>20</Identifier>
          <NodeValue>0.029124473721815</NodeValue>
          <NodeValue>0.45</NodeValue>
          <NodeValue>0.9</NodeValue>
          <NodeError>-0.00154046436077283</NodeError>
          <Bias>
            <BiasValue>1</BiasValue>
          </Bias>
        </BasicNode>
      </AdalineNode>
    </BackPropagationOutputNode>
  </BackPropagationMiddleNode>
  <!-- through to -->
  <BackPropagationMiddleNode>
    <BackPropagationOutputNode>
      <AdalineNode>
        <BasicNode>
          <Identifier>39</Identifier>
          <NodeValue>0.0668105589475829</NodeValue>
          <NodeValue>0.45</NodeValue>
          <NodeValue>0.9</NodeValue>
          <NodeError>-0.00126610484536625</NodeError>
          <Bias>
            <BiasValue>1</BiasValue>
          </Bias>
        </BasicNode>
      </AdalineNode>
    </BackPropagationOutputNode>
  </BackPropagationMiddleNode>
  <BackPropagationOutputNode>
    <AdalineNode>
      <BasicNode>
        <Identifier>40</Identifier>
        <NodeValue>0.312017133076152</NodeValue>
        <NodeValue>0.45</NodeValue>
        <NodeValue>0.9</NodeValue>
        <NodeError>-0.0669783596518058</NodeError>
        <Bias>
          <BiasValue>1</BiasValue>
        </Bias>
      </BasicNode>
    </AdalineNode>
  </BackPropagationOutputNode>
  <BackPropagationLink>
    <BasicLink>
      <Identifier>41</Identifier>
      <LinkValue>0.76319456394861</LinkValue>
      <LinkValue>-7.44506425561508E-05</LinkValue>
      <InputNodeID>0</InputNodeID>
      <OutputNodeID>20</OutputNodeID>
    </BasicLink>
  </BackPropagationLink>
  <!-- through to -->
  <BackPropagationLink>
    <BasicLink>
      <Identifier>460</Identifier>
      <LinkValue>0.303193748484268</LinkValue>
      <LinkValue>-0.00201368774057822</LinkValue>
      <InputNodeID>39</InputNodeID>
      <OutputNodeID>40</OutputNodeID>
    </BasicLink>
  </BackPropagationLink>
</BackPropagationNetwork>
```
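The saved file is ordinary XML, so it can be produced with `System.Xml.XmlWriter`. The following sketch writes a single input node in the same element layout as the `BasicNode` entries shown; `NodeXml`, `WriteBasicNode` and `ToXmlString` are hypothetical names, and this is not the library's own save routine.

```csharp
using System.Globalization;
using System.IO;
using System.Xml;

// Hypothetical sketch: writes one input node in the saved file's element layout.
public static class NodeXml
{
    public static void WriteBasicNode(XmlWriter writer, int id, double value,
                                      double error, double biasValue)
    {
        // Invariant culture keeps "." as the decimal separator on every machine.
        CultureInfo inv = CultureInfo.InvariantCulture;
        writer.WriteStartElement("BasicNode");
        writer.WriteElementString("Identifier", id.ToString(inv));
        writer.WriteElementString("NodeValue", value.ToString(inv));
        writer.WriteElementString("NodeError", error.ToString(inv));
        writer.WriteStartElement("Bias");
        writer.WriteElementString("BiasValue", biasValue.ToString(inv));
        writer.WriteEndElement(); // </Bias>
        writer.WriteEndElement(); // </BasicNode>
    }

    // Convenience wrapper that returns the indented XML for one node as a string.
    public static string ToXmlString(int id, double value, double error, double biasValue)
    {
        var settings = new XmlWriterSettings { OmitXmlDeclaration = true, Indent = true };
        var sw = new StringWriter();
        using (XmlWriter w = XmlWriter.Create(sw, settings))
        {
            WriteBasicNode(w, id, value, error, biasValue);
        }
        return sw.ToString();
    }
}
```

Loading works the same way in reverse, for example by walking the elements with `XmlReader` or `XmlDocument`.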

Testing

The testing portions of the code are located under the Run menu of the Neural Net Tester program. The test for this program is the "Load And Run BackPropagation 2" menu option, which loads a file that resembles the one above. I say "resembles" because the link values will not be exactly the same on any two training runs.

The menu option will load and run the origins-of-species.txt file and generate the log file Neural Network Tester Load And Run BackPropagation OneLoad And Run BackPropagation 2.xml which can be viewed using the LogViewer that is part of the neural net tester program.

The display shows all the sentences in the text of the book that match the two words entered through the options dialog, or the default words "and" and "the". When the network has finished working, it prints a message saying that the test has finished.

The quick guide is:

- Menu :- Run/Load And Run BackPropagation 2 :- Loads the saved BackPropagation network from the disk and then runs it against the full text of the Origins of the Species.

- Menu :- Train/Train BackPropagation 2 :- Trains the network from scratch using the sample file, which contains the introduction and the first chapter of the Origins of the Species.

- Menu :- Options/BackPropagation 2 Options :- Brings up a dialog that allows you to set certain parameters for the running of the network.

Options

The options dialog for the BackPropagation Word, or BackPropagation 2, Network starts with the Tolerance, which is the amount by which the value returned by the network may differ from 0 or from 1 and still be accepted as that answer. The Momentum is an additional driving parameter that the network uses to try to prevent it from getting stuck in what is called a local minimum before it is able to reach a correct answer. Without momentum, each weight change is only a fraction of the current error, so before the network reaches equilibrium (that is, before all its outputs match the required outputs passed into the pattern output parameters) the weight changes can get ever smaller. They could become so small that the network never reaches the correct solution, or at least takes so long that people would just give up. Momentum counters this by adding a fraction of the previous weight change to each new change, which keeps the weights moving.
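One common form of the weight update with momentum (the generalised delta rule) is Δw(t) = η·δ·x + α·Δw(t−1), where η is the learning rate, α the momentum, δ the error term of the receiving node and x the value of the sending node. The sketch below assumes that form; `MomentumLink`, `Weight`, `PreviousDelta` and `Update` are hypothetical names, and the library may store and apply the previous change differently.

```csharp
// Sketch of a single link updated with the generalised delta rule plus momentum.
public sealed class MomentumLink
{
    public double Weight;
    public double PreviousDelta; // the change applied on the previous update

    // learningRate = eta, momentum = alpha, nodeError = delta of the receiving node,
    // input = value of the sending node.
    public void Update(double learningRate, double momentum, double nodeError, double input)
    {
        double delta = learningRate * nodeError * input + momentum * PreviousDelta;
        Weight += delta;
        PreviousDelta = delta;
    }
}
```

With a learning rate of 0.45 and a momentum of 0.9, a first update with an error of 1 and an input of 1 moves the weight by 0.45; a second update with zero error still moves it by 0.9 × 0.45 = 0.405. That carried-over movement is what helps the network cross flat regions where the error alone would barely change the weights.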

The Learning Rate is the same value as we have seen before, and so is the Bias. The new options on the list are the two words that the network searches the text for. Note that if you change these values you will have to retrain the network, as it is trained to find these specific words and is neither designed for nor capable of finding arbitrary words that are entered without training. You should also choose words that actually appear in the text you are training the network against. For example, it would be pointless to look for Marvin the Paranoid Android in a copy of The Origin Of The Species. In fact, I'd be surprised if the name Marvin showed up anywhere in the book; you'd be far better off searching the text of a Douglas Adams book for that one. This is why the last two options are included: they are the names of the training file and the run file, which are located in the same directory as the executable. There are no restrictions on which files you search. Although the samples provided are texts from The Origin Of The Species, you could replace them with anything you wish.

This is not to say that you shouldn't train the network against the provided sample text and then run it against a different text in the run section of the program. It is merely pointing out that the context should be borne in mind.

Understanding The Output

Training

Setting pattern to, With plants which are temporarily propagated by cuttings, buds, &c

Setting pattern to, the importance of crossing is immense; for the cultivator may here disregard the extreme variability both of hybrids and of mongrels, and the sterility of hybrids; but plants not propagated by seed are of little importance to us, for their endurance is only temporary

Setting pattern to, Over all these causes of Change, the accumulative action of Selection, whether applied methodically and quickly, or unconsciously and slowly but more efficiently, seems to have been the predominant Power

The Back Propagation Word Network begins by setting the patterns the network will be trained with. These are complete sentences, which the program then breaks up into individual words, using no more than the first twenty words of each sentence.

Absolute output value = 0.110635431553925

Test = 0.110635431553925 Tolerance = 0.4 Output value is within tolerance level of 0.4 of 0 1859 THE ORIGIN OF SPECIES by Charles Darwin 1859 INTRODUCTION INTRODUCTION WHEN on board H

Output Value = 0

Absolute output value = 0.00769773879206108

Test = 0.00769773879206108 Tolerance = 0.4 Output value is within tolerance level of 0.4 of 0 HM

Output Value = 0

The network then trains on the pattern array, reading the twenty input nodes and producing an absolute output value that should indicate whether the sentence contains "and" or "the" but not both, with 1 being a positive indicator. The absolute value is tested against the tolerance levels to see if it fits, and the final output value of the test is shown. As with the previous Back Propagation Network, learning takes place on every pass through the loop, so no separate call to Learn appears in the output.

Training Done - Reloading Network and Testing against the training set

Building Network

Loading Network

Testing Network

Loading Back Propagation Training File please wait ...

Setting pattern to, 1859 THE ORIGIN OF SPECIES by Charles Darwin 1859 INTRODUCTION INTRODUCTION WHEN on board H

Upon successful training, the network saves itself and loads the saved file into a new network object. It then reloads the training file (not strictly necessary) and begins to test whether the network learnt its task correctly.

Absolute output value = 0.835661935490439

Test = 0.835661935490439 Tolerance = 0.4 Output value is within tolerance level of 0.4 of 1 The greater or less force of inheritance and reversion, determine whether variations shall endure

Output Value = 1

Absolute output value = 0.83858866554289

Test = 0.83858866554289 Tolerance = 0.4 Output value is within tolerance level of 0.4 of 1 Variability is governed by many unknown laws, of which correlated growth is probably the most important

Output Value = 1

Absolute output value = 0.929080538571364

Test = 0.929080538571364 Tolerance = 0.4 Output value is within tolerance level of 0.4 of 1 Some, perhaps a great, effect may be attributed to the increased use or disuse of parts

Output Value = 1

Test Finished

The results of the test are output to the screen when the test is positive, that is, when we have found a sentence that contains either "and" or "the" but not both.

Running

Setting pattern to, Beagle as naturalist, I was much struck with certain facts in the distribution of the organic beings inhabiting South America, and in the geological relations of the present to the past inhabitants of that continent.

Setting pattern to, These facts, as will be seen in the latter chapters of this volume, seemed to throw some light on the origin of species- that mystery of mysteries, as it has been called by one of our greatest philosophers

Setting pattern to, On my return home, it occurred to me, in 1837, that something might perhaps be made out on this question by patiently accumulating and reflecting on all sorts of facts which could possibly have any bearing on it.

As in the training section, the Back Propagation Word Network first loads the data into the patterns array and then runs it, printing out the same kind of data as above.

Absolute output value = 0.915116784210617

Test = 0.915116784210617 Tolerance = 0.4 Output value is within tolerance level of 0.4 of 1 As several of the reasons which have led me to this belief are in some degree applicable in other cases, I will here briefly give them

Output Value = 1

Absolute output value = 0.660441295449701

Test = 0.660441295449701 Tolerance = 0.4 Output value is within tolerance level of 0.4 of 1 Hence it must be assumed not only that half-civilised man succeeded in thoroughly domesticating several species, but that he intentionally or by chance picked out extraordinarily abnormal species; and further, that these very species have since all become extinct or unknown

Output Value = 1

Fun And Games

The BackPropagation Word Network is the first example of a more complicated network, in that it has a large number of input nodes (twenty, to be exact), and this is where the first compromise between the program and strict accuracy is made. Some of the sentences in the text contain many more than twenty words, but for the sake of the example only the first twenty words of any sentence are counted. Of course, there are also many sentences shorter than twenty words, but the network copes fine with an absence of data in some of the nodes. The final compromise concerns the sentences themselves. In the sample code, the network simply looks for a full stop, and not every full stop in an English sentence marks the end of the sentence. This means that, in some cases, the given sentence for a test can be one letter long. This doesn't have a detrimental effect on the performance of the network, so I've left the code as it is. I have yet to notice any sentences that have fallen through the gaps because of these compromises, but in theory it is possible that the network will not perform with one hundred percent accuracy.
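The naive splitting described above can be sketched as follows, assuming the text is split on every full stop and each piece is cut down to its first twenty words. `SentenceSplitter` is a hypothetical name, and the program's own splitting may differ in detail (its log, for instance, shows a pattern "HM").

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Sketch of naive sentence splitting: every full stop ends a "sentence",
// empty pieces are dropped, and at most maxWords words are kept per sentence.
public static class SentenceSplitter
{
    static readonly char[] Whitespace = { ' ', '\t', '\r', '\n' };

    public static List<string> Split(string text, int maxWords = 20)
    {
        var sentences = new List<string>();
        foreach (string piece in text.Split('.'))
        {
            string[] words = piece.Split(Whitespace, StringSplitOptions.RemoveEmptyEntries);
            if (words.Length == 0) continue; // e.g. the empty piece after a final full stop
            sentences.Add(string.Join(" ", words.Take(maxWords)));
        }
        return sentences;
    }
}
```

Splitting "WHEN on board H.M.S. Beagle as naturalist." this way gives four pieces: "WHEN on board H", "M", "S" and "Beagle as naturalist", which is exactly the one-letter sentence effect described above.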

Because of the way the linear separability is achieved between the words, the words are case sensitive, which is to say that the word "The" is not the same as the word "the" as far as the network is concerned.

History

- 3 July 2003 :- Initial release.

- 1 December 2003 :- Review and edit for CP conformance.


Thanks

Special thanks go to anyone involved in TortoiseCVS for version control.

All UML diagrams were generated using Metamill version 2.2.
