Download:

You can download the app for Ultrabook(TM) from the Intel AppUp store.

Introduction

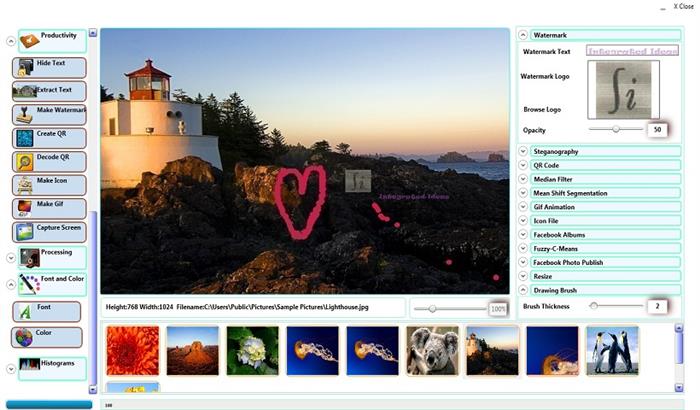

When I started to think about the Ultrabook, I found the device and the concept quite tricky. It is a hybrid of tablet and laptop with plenty of sensors preloaded. When I looked at the apps that people are building, I realized that they are mainly either entertainment-centric or solve a problem that a plain desktop or tablet could solve just as well. Having used tablets and laptops for a long time, the main flaw I see in tablets is how little they offer for productivity. Most people use them for surfing, video, and reading e-books. I wanted to come up with a hybrid app that adds productivity value and at the same time brings entertainment value to the Ultrabook. So the app needed to exploit the programming and processing capabilities alongside the touches and nudges.

So I am presenting a powerful image utility app, which I have named ImageGrassy. What does the app do? It gives you some cool tools to have fun with your images, it gives you tools to analyze them, and it lets you write your own custom XML routine to build an image processing batch application.

So why do we need another image processing tool when there are plenty of good tools like Photoshop and OpenCV already available?

Image editing tools like Photoshop are difficult to use for image analysis, and tools like Matlab are difficult to adapt for entertainment and image editing. OpenCV needs coding.

So here is the hybrid that does all of them (well, to an extent) while also utilizing the power of various sensors.

Background

So you get the basic idea of how the system is intended to function. The heart of the system is a gallery: the user's photo gallery, together with a gallery config that stores the image tags and other information. You can manually add files to and remove files from the gallery.

When you start the application, the gallery is loaded; if you do not have one, the app prompts you to create one. The images in the gallery are loaded as thumbnails. You can select any image from the gallery and import it into the main viewer, which renders the image.

Users can take advantage of the many utility and fun applications that will ship with this app to play with their images. They can also use a tagging system that associates features extracted from an image with a tag, so that the app remembers a type of image. A user can supply an image as a search query, and the app finds all the related images in the gallery through feature matching.

The system will also support template matching. You create small thumbnails of templates, such as a face or another object, and the system should be able to locate the part of an image that matches the template.

That is all from a user's perspective. The app will also ship with a developer's interface. Here you specify the operations serially, and the system processes your algorithm as a batch and produces the desired result.

Here is a sample 'CodeSnippet' of the new algorithm-level program that is introduced through the app.

start;

I=input of type jpg;

Ig=convert I to GRAY;

Ige=equilize Ig through Histogram;

Ib= convert Ige to BINARY;

Ibr=resize Ib to 128,128;

Ibr=process Ibr with DILATION with 3,3;

M=process Ibr with BLOB_DETECTION;

M=combine M with I;

save M as "rslt.jpg";

end

The basic idea is that the language hides looping and other heavy programming constructs, so programmers can work purely at the level of algorithms by combining and merging operations.

We have not tested the control statements yet.
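To make the statement structure of the sample script concrete, here is a minimal sketch of how such a script could be split into statements. It is not the app's actual parser: the `ScriptParser` class and its `Parse` method are hypothetical names, and the sketch only assumes what the sample shows, namely that statements end with `;` and that an assignment has the form `target=expression`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the app: splits a batch script into statements.
public static class ScriptParser
{
    // Returns (target, expression) pairs in script order.
    // Statements without '=' (such as "start" or "save ...") get an empty target.
    public static List<KeyValuePair<string, string>> Parse(string script)
    {
        var result = new List<KeyValuePair<string, string>>();
        foreach (string raw in script.Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries))
        {
            string stmt = raw.Trim();
            if (stmt.Length == 0) continue;           // skip whitespace-only fragments
            int eq = stmt.IndexOf('=');
            string target = eq < 0 ? "" : stmt.Substring(0, eq).Trim();
            string expr = eq < 0 ? stmt : stmt.Substring(eq + 1).Trim();
            result.Add(new KeyValuePair<string, string>(target, expr));
        }
        return result;
    }
}
```

Each pair could then be dispatched to the matching image operation, with the target name used as the key under which the intermediate image is stored.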

Sensors

One of the planned features is adjusting the brightness of the viewer according to the light intensity reported by the ambient light sensor. The size and orientation of the viewing pane can be changed with touch input, and operations will be offered through a context menu. The pane can be resized, rotated, and moved to a different part of the screen using touch.

Image cropping will be supported through the stylus.

A. Ambient Light Sensor

int changed = 0;
var brightnessSensor = Windows.Devices.Sensors.LightSensor.GetDefault();
if (brightnessSensor != null)
{
    // Note: ReadingChanged may be raised off the UI thread; marshal to the
    // Dispatcher before touching Image1 if that is the case on your device.
    brightnessSensor.ReadingChanged += (sender, eventArgs) =>
    {
        // Refresh only once per 100 events so the image does not flicker.
        if (changed >= 100)
        {
            double mc = WpfImageProcessing.GetMeanContrast(iSrc);
            Image1.Source = WpfImageProcessing.ContrastStretch(
                iSrc, .5 - (mc - eventArgs.Reading.IlluminanceInLux) / MaxLux);
            iSrc = Bitmap.BitmapImageFromBitmapSource(Image1.Source as BitmapSource);
            changed = 0;
        }
        else
            changed++;
    };
}

The logic is to trap the light change event. My long career with Arduino, the 16F84, and all sorts of sensors tells me that such an event might fire very frequently if you are outdoors (say, in a railway station). Adjusting the main image brightness directly in this event handler may therefore cause flicker. Hence I added a counter to limit the number of refreshes.

The contrast stretching logic is as below:
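A counter limits refreshes per number of events, so the actual refresh rate still depends on how often the sensor fires. As an alternative, here is a minimal sketch of a time-based limit. The `RefreshThrottle` class is a hypothetical helper and not part of the app; the caller passes in the current time, which keeps the class easy to test.

```csharp
using System;

// Hypothetical helper, not part of the app: accepts at most one event per interval.
public sealed class RefreshThrottle
{
    private readonly TimeSpan interval;
    private DateTime? last;   // time of the last accepted event, null before the first one

    public RefreshThrottle(TimeSpan interval)
    {
        this.interval = interval;
    }

    // Returns true when at least one full interval has passed since the last accepted event.
    public bool ShouldRefresh(DateTime now)
    {
        if (last.HasValue && now - last.Value < interval)
            return false;
        last = now;
        return true;
    }
}
```

Inside the event handler, the image would be updated only when `ShouldRefresh(DateTime.UtcNow)` returns true. The sensor's own `ReportInterval` property may also help to reduce how often the event is raised in the first place.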

public static BitmapSource ContrastStretch(BitmapImage srcBmp, double pc )

{

if (pc == .5)

{

return srcBmp;

}

bool bright = true;

pc = .5 - pc;

if (pc < 0)

{

bright = false;

}

pc = Math.Abs(pc);

Bitmap bmp = new Bitmap(srcBmp);

Bitmap contrast = new Bitmap(srcBmp); PixelColor c ;

for (int i = 0; i < bmp.NumRow; i++)

{

for (int j = 0; j < bmp.NumCol; j++)

{

c = bmp.GetPixel(j, i); int rd = c.Red; int gr = c.Green; int bl = c.Blue;

if (bright)

{

rd = rd + (int)((double)rd * pc);

gr = gr + (int)((double)gr * pc);

bl = bl + (int)((double)bl * pc);

if (rd > 255)

rd = 255;

if (gr > 255)

gr = 255;

if (bl > 255)

bl = 255;

}

else
{
    // Darken: scale every channel down and clamp at 0.
    rd = rd - (int)((double)rd * pc);
    gr = gr - (int)((double)gr * pc);
    bl = bl - (int)((double)bl * pc);
    if (rd < 0)
        rd = 0;
    if (gr < 0)
        gr = 0;
    if (bl < 0)
        bl = 0;
}

PixelColor c2 =new PixelColor(rd, gr, bl,c.Alpha);

contrast.SetPixel(j, i, c2);

}

}

contrast.Finalize();

return contrast.Image;

}

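As you can see, the method treats the current level as 50% (pc = 0.5 returns the image unchanged) and scales each channel linearly from there: a pc below 0.5 brightens the image by up to 50%, and a pc above 0.5 darkens it by up to 50%.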
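The per-channel arithmetic can be checked in isolation. The following is a small sketch of the same linear mapping applied to a single channel value; `ChannelScale.Adjust` is a hypothetical name used only for this illustration.

```csharp
using System;

// Hypothetical helper for illustration: the linear mapping applied to one channel.
public static class ChannelScale
{
    // pc = 0.5 leaves the value unchanged; pc < 0.5 brightens, pc > 0.5 darkens.
    public static byte Adjust(byte value, double pc)
    {
        double factor = 0.5 - pc;                  // ranges from +0.5 to -0.5
        int scaled = value + (int)(value * factor);
        return (byte)Math.Max(0, Math.Min(255, scaled));  // clamp to the byte range
    }
}
```

For example, a channel value of 100 becomes 150 at pc = 0 and 50 at pc = 1, while a value of 200 saturates at 255 when brightened by 50%.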

B. GPS Sensor and Compass

Now this is quite interesting. Chris pointed out in my post that 'GPS is a great tool for Image Processing'. I must admit that at first I did not understand his point. But then I went back to my drawing board and realized: wow, GPS! You visit a place, you take images, you create a gallery, and you add a tag with the GPS location. As you move around, the gallery gets updated and shows you the images corresponding to your location. But is that of any use? Have you ever walked the busy streets of an Asian city, say in Malaysia, or Delhi, Mumbai, or Kolkata in India? The streets look the same and are crowded, which makes it difficult to locate your hotel or other landmarks. So use your Ultrabook to track them.

As you have already understood, the app uses XML gallery data that includes several elements such as Tag, Path, IsFacePresent, FaceLocation, and Features. We add another element called GPS and populate the gallery based on it.

What is more, we have the compass, which keeps us updated with the direction of motion. So from the current location and direction, we calculate which other GPS points along the way have images in our gallery and show them as an overlay.

The utility could get better with Bing Maps integration. However, I have not tested this integration yet.
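The "points on the way" calculation needs two ingredients: the distance from the current position to a tagged image, and whether that image lies roughly in the direction of motion. The sketch below uses the standard haversine distance and initial-bearing formulas. The `GeoMath` class, its method names, and the idea of a half-angle cone around the heading are assumptions for illustration, not the app's implementation.

```csharp
using System;

// Hypothetical helper for illustration; coordinates are in decimal degrees.
public static class GeoMath
{
    private const double EarthRadiusMeters = 6371000.0;   // mean Earth radius

    private static double ToRad(double deg) { return deg * Math.PI / 180.0; }

    // Great-circle distance between two points (haversine formula).
    public static double DistanceMeters(double lat1, double lon1, double lat2, double lon2)
    {
        double dLat = ToRad(lat2 - lat1), dLon = ToRad(lon2 - lon1);
        double a = Math.Sin(dLat / 2) * Math.Sin(dLat / 2)
                 + Math.Cos(ToRad(lat1)) * Math.Cos(ToRad(lat2))
                 * Math.Sin(dLon / 2) * Math.Sin(dLon / 2);
        return 2 * EarthRadiusMeters * Math.Asin(Math.Sqrt(a));
    }

    // Initial bearing from point 1 to point 2, in degrees clockwise from north.
    public static double BearingDegrees(double lat1, double lon1, double lat2, double lon2)
    {
        double p1 = ToRad(lat1), p2 = ToRad(lat2), dLon = ToRad(lon2 - lon1);
        double y = Math.Sin(dLon) * Math.Cos(p2);
        double x = Math.Cos(p1) * Math.Sin(p2) - Math.Sin(p1) * Math.Cos(p2) * Math.Cos(dLon);
        return (Math.Atan2(y, x) * 180.0 / Math.PI + 360.0) % 360.0;
    }

    // True when the bearing lies within halfAngle degrees of the compass heading.
    public static bool IsAhead(double heading, double bearing, double halfAngle)
    {
        double diff = Math.Abs(heading - bearing) % 360.0;
        if (diff > 180.0) diff = 360.0 - diff;      // take the shorter way around the circle
        return diff <= halfAngle;
    }
}
```

A gallery entry would then be shown in the overlay when its distance is below some chosen limit and `IsAhead` is true for the current compass heading.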

C. Webcam

I know, you will argue that it is not really a sensor! But wait: ours is an image processing app that allows image manipulation, search, adjustment, sharing, and what not. So what better sensor than a capture from your Ultrabook? Chris pointed out that the Ultrabook is thin enough that he can twist and rotate it easily. So I will assume that it can also be carried outdoors and to events. You may well want the image processing framework to work on images captured from the webcam.

We have actually integrated this part. Note that WPF does not support webcam access directly, and you need a framework like Microsoft Expression Encoder to do it. I tested it and did not find any distinct advantage. So I used DirectX with System.Drawing to capture a System.Drawing.Bitmap (yeah, I know it is dirty coding, but it works!) and then converted it to a WPF BitmapImage. The rest of the processing uses our WpfImageProcessing library, and the result is overlaid on the captured image through an ImageBrush.

Using the code

The viewer is basically a WPF Image control, so its source must be a BitmapImage. We hold the actual image in a BitmapImage and assign that as the source of the Image control.

Now coming to the processing part. WPF does not give you pixel access the way GDI or System.Drawing.Imaging does. It provides classes like WriteableBitmap, BitmapFrame, and BitmapImage, which are used along with streams or byte arrays to process the image. Streams and arrays are linear memory, but image processing needs matrix operations for many tasks. So we first create a Bitmap class that abstracts the whole image, so that we can treat the image the same way a System.Drawing.Bitmap object is used.

Here is the Bitmap class.

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using System.Windows;

using System.Windows.Controls;

using System.Windows.Data;

using System.Windows.Documents;

using System.Windows.Input;

using System.Windows.Media;

using System.Windows.Media.Imaging;

using System.Windows.Navigation;

using System.Windows.Shapes;

using Microsoft.Win32;


using System.IO;

namespace ImageApplications

{

public struct PixelColor

{

public byte Blue;

public byte Green;

public byte Red;

public byte Alpha;

public PixelColor(int r, int g, int b, int a)

{

this.Red = (byte)r;

this.Green = (byte)g;

this.Blue = (byte)b;

this.Alpha = (byte)a;

}

}

public class Bitmap

{

public BitmapSource Image;

private BitmapImage iSrc;

private byte []array;

private Int32Rect rect;

public int Height, Width;

public int NumCol, NumRow;

public Bitmap(string fileName)

{

iSrc = new BitmapImage(new Uri(fileName));

Image = iSrc;

array = new byte[iSrc.PixelWidth * iSrc.PixelHeight * 4];

rect = new Int32Rect(0, 0, iSrc.PixelWidth, iSrc.PixelHeight);

iSrc.CopyPixels(rect, array, iSrc.PixelWidth * 4, 0);

NumRow = iSrc.PixelHeight;

NumCol = iSrc.PixelWidth;

Height = NumRow;

Width = NumCol;

}

public Bitmap(BitmapImage bmpSrc)

{

iSrc = bmpSrc;

Image = iSrc;

array = new byte[iSrc.PixelWidth * iSrc.PixelHeight * 4];

rect = new Int32Rect(0, 0, iSrc.PixelWidth, iSrc.PixelHeight);

iSrc.CopyPixels(rect, array, iSrc.PixelWidth * 4, 0);

NumRow = iSrc.PixelHeight;

NumCol = iSrc.PixelWidth;

Height = NumRow;

Width = NumCol;

}

private BitmapImage CropImage(ImageSource source, int width, int height, int startx,int starty)

{

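// Draw the source at its natural size, shifted so that the crop origin lands at (0, 0);
// the width x height render target below then keeps only the requested region.
var rect = new Rect(-startx, -starty, source.Width, source.Height);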

var group = new DrawingGroup();

RenderOptions.SetBitmapScalingMode(group, BitmapScalingMode.HighQuality);

group.Children.Add(new ImageDrawing(source, rect));

var drawingVisual = new DrawingVisual();

using (var drawingContext = drawingVisual.RenderOpen())

drawingContext.DrawDrawing(group);

var resizedImage = new RenderTargetBitmap(

width, height,

96, 96,

PixelFormats.Default);

resizedImage.Render(drawingVisual);

BitmapSource bf= BitmapFrame.Create(resizedImage);

BitmapImage bi = BitmapImageFromBitmapSource(bf);

return bi;

}

public void CropTheImage(int startx, int starty, int width, int height)

{

Image = CropImage(Image, width, height, startx, starty);

}

public void Resize(int rRows,int rCols)

{

TransformedBitmap tb = new System.Windows.Media.Imaging.TransformedBitmap(

Image, new ScaleTransform((double)rCols / iSrc.PixelWidth, (double)rRows / iSrc.PixelHeight));

Image = tb;

iSrc = BitmapImageFromBitmapSource(Image);

array = new byte[iSrc.PixelWidth * iSrc.PixelHeight * 4];

rect = new Int32Rect(0, 0, iSrc.PixelWidth, iSrc.PixelHeight);

iSrc.CopyPixels(rect, array, iSrc.PixelWidth * 4, 0);

NumRow = iSrc.PixelHeight;

NumCol = iSrc.PixelWidth;

Height = NumRow;

Width = NumCol;

}

public Bitmap(int Height,int Width)

{

WriteableBitmap wb = new System.Windows.Media.Imaging.WriteableBitmap(

Width, Height, 96, 96, PixelFormats.Bgra32, null);

Image = wb;

iSrc = BitmapImageFromBitmapSource(Image);

array = new byte[iSrc.PixelWidth * iSrc.PixelHeight * 4];

rect = new Int32Rect(0, 0, iSrc.PixelWidth, iSrc.PixelHeight);

iSrc.CopyPixels(rect, array, iSrc.PixelWidth * 4, 0);

NumRow = iSrc.PixelHeight;

NumCol = iSrc.PixelWidth;

this.Height = NumRow;

this.Width = NumCol;

}

#region Image Processing Stuff

public void Finalize()

{

Image=WriteableBitmap.Create(iSrc.PixelWidth, iSrc.PixelHeight,

96, 96, PixelFormats.Bgra32, null, array, iSrc.PixelWidth * 4);

}

private PixelColor GetPixelValue(int x, int y, byte[] rawpixel, int width, int hight)

{

PixelColor pointpixel;

int offset = y * width * 4 + x * 4;

pointpixel.Blue = rawpixel[offset + 0];

pointpixel.Green = rawpixel[offset + 1];

pointpixel.Red = rawpixel[offset + 2];

pointpixel.Alpha = rawpixel[offset + 3];

return pointpixel;

}

public PixelColor GetPixel(int x,int y)

{

return(GetPixelValue( x, y, array, Width, Height));

}

public void SetPixel(int x,int y, PixelColor color)

{

array=PutPixel(array, Width, Height, color, x, y);

}

private byte[] PutPixel(byte[] rawimagepixel, int width, int hight, PixelColor pixels, int x, int y)

{

int offset = y * width * 4 + x * 4;

rawimagepixel[offset + 0] = pixels.Blue;

rawimagepixel[offset + 1] = pixels.Green;

rawimagepixel[offset + 2] = pixels.Red;

rawimagepixel[offset + 3] = pixels.Alpha;

return rawimagepixel;

}

public static BitmapImage BitmapImageFromBitmapSource(BitmapSource src)

{

BitmapSource bitmapSource = src;

JpegBitmapEncoder encoder = new JpegBitmapEncoder();

MemoryStream memoryStream = new MemoryStream();

BitmapImage bImg = new BitmapImage();

encoder.Frames.Add(BitmapFrame.Create(bitmapSource));

encoder.Save(memoryStream);

bImg.BeginInit();

bImg.StreamSource = new MemoryStream(memoryStream.ToArray());

bImg.EndInit();

memoryStream.Close();

return bImg;

}

public void Save(string filePath)

{

var image = Image;

using (var fileStream = new FileStream(filePath, FileMode.Create, FileAccess.Write))

{

BitmapEncoder encoder = new JpegBitmapEncoder();

encoder.Frames.Add(BitmapFrame.Create(image));

encoder.Save(fileStream);

}

}

#endregion

}

}

We work with four color channels: R, G, B, and A. Hence we first declare a structure called PixelColor to represent the color of every pixel.

The Bitmap class provides three constructors: one that reads a file name and initializes a Bitmap object, one that creates a blank object from only width and height parameters, and one that uses a BitmapImage to initialize a Bitmap object. We introduce two functions called GetPixel and SetPixel so that we can access pixels the same way we do with a System.Drawing.Bitmap object. These functions call the private GetPixelValue and PutPixel methods, which access the pixels directly in the 'array' variable. The constructor of the Bitmap class fills this array with the pixels of the BitmapImage using the CopyPixels method. A conversion utility from BitmapSource to BitmapImage is also included so that operation results can be returned as either BitmapImage or BitmapSource as needed.
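The mapping from matrix coordinates to the linear byte array is the core of this class, so it is worth seeing on its own. This sketch mirrors the offset calculation used in GetPixelValue and PutPixel, assuming four bytes per pixel in B, G, R, A order as in the listing; the `PixelIndex` class name is hypothetical.

```csharp
using System;

// Hypothetical helper for illustration: (x, y) addressing over a linear BGRA buffer.
public static class PixelIndex
{
    public const int BytesPerPixel = 4;   // stored as Blue, Green, Red, Alpha

    // Byte offset of the pixel in column x, row y, for an image that is 'width' pixels wide.
    public static int Offset(int x, int y, int width)
    {
        return (y * width + x) * BytesPerPixel;
    }

    // Reads the red channel, which is the third byte of the pixel.
    public static byte GetRed(byte[] buffer, int x, int y, int width)
    {
        return buffer[Offset(x, y, width) + 2];
    }
}
```

For an image 10 pixels wide, the pixel at column 3 of row 2 starts at byte (2 * 10 + 3) * 4 = 92.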

Class support:

As it stands now, the class and all other functions are optimized for the JPEG image type.

Size: the algorithm has been tested with 6 MB, 4000x4000 pixel images.

We also provide a Save option for saving the Bitmap object as an image in your file system.

So now we have a basic image class named Bitmap and also a PixelColor structure. Let us start with the image manipulation stuff.

Preprocessing

Preprocessing is essentially any operation that is carried out before the major image processing.

1. Resize:

WPF's BitmapImage has no resize method of its own, so we took advantage of a scale transform to resize the images. Scaling both axes by the same factor keeps the aspect ratio; scaling to an exact target size may not.

The support is provided in the Bitmap class itself through the Resize method.

TransformedBitmap tb = new System.Windows.Media.Imaging.TransformedBitmap(

Image, new ScaleTransform((double)rCols / iSrc.PixelWidth, (double)rRows / iSrc.PixelHeight));

The method that calls Resize specifies the exact target size, which is converted to relative scale factors, and the image is resized through ScaleTransform.

See the resizing of an image to 64x32 size.
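The difference between an exact-size resize and an aspect-preserving one comes down to how the scale factors are chosen. Here is a minimal sketch of both choices; `ResizeMath` and its methods are hypothetical names, and the resulting factors would be passed to ScaleTransform as the X and Y scale.

```csharp
using System;

// Hypothetical helper for illustration: choosing scale factors for a resize.
public static class ResizeMath
{
    // Independent factors: the result has exactly the target size, but may be distorted.
    public static void ExactScale(int srcW, int srcH, int dstW, int dstH,
                                  out double scaleX, out double scaleY)
    {
        scaleX = (double)dstW / srcW;
        scaleY = (double)dstH / srcH;
    }

    // One shared factor: the result fits inside the target box and keeps the aspect ratio.
    public static double UniformScale(int srcW, int srcH, int dstW, int dstH)
    {
        return Math.Min((double)dstW / srcW, (double)dstH / srcH);
    }
}
```

Resizing a 128x128 image to 64x32 gives exact factors of 0.5 and 0.25, while the uniform factor is 0.25, which yields a 32x32 image.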

2. Gray Scale Conversion

This has nothing to do with native Bitmap support. It is core image processing, so we put the method in the WpfImageProcessing class.

public static BitmapSource GrayConversion(BitmapImage srcImage)

{

Bitmap bmp = new Bitmap(srcImage);

for (int i = 0; i < bmp.NumRow; i++)

{

for (int j = 0; j < bmp.NumCol; j++)

{

PixelColor c = bmp.GetPixel(j, i);

int rd = c.Red; int gr = c.Green; int bl = c.Blue;

double d1 = 0.2989 * (double)rd + 0.5870 * (double)gr + 0.1140 * (double)bl;

int c1 = (int)Math.Round(d1);

PixelColor c2 = new PixelColor(c1, c1, c1, c.Alpha);

bmp.SetPixel(j, i, c2);

}

}

bmp.Finalize();

return bmp.Image;

}

As you can see, we have used the well-known luminance formula to read the RGB values and convert them to gray.

double d1 = 0.2989 * (double)rd + 0.5870 * (double)gr + 0.1140 * (double)bl;

Observe the Finalize call at the end of the method. It works like a Lock and Unlock pair. Rather than rewriting the bits of the BitmapImage iSrc of the Bitmap class on every pixel change, we do this only once, after the operation is complete. This results in faster operation. As WPF does not support modifying a BitmapImage in place, we take the help of WriteableBitmap to create a new BitmapSource from the array that was modified by the image operation, and finally assign it to the Image variable of the Bitmap class.

Image=WriteableBitmap.Create(iSrc.PixelWidth, iSrc.PixelHeight,

96, 96, PixelFormats.Bgra32, null, array, iSrc.PixelWidth * 4);

Here is the result of Gray Conversion
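To see what the weights do, the formula can be applied to single colors. This sketch uses the same coefficients as the listing; `GrayMath.Luminance` is a hypothetical name for illustration.

```csharp
using System;

// Hypothetical helper for illustration: weighted gray value of one RGB pixel.
public static class GrayMath
{
    public static byte Luminance(byte r, byte g, byte b)
    {
        double y = 0.2989 * r + 0.5870 * g + 0.1140 * b;
        return (byte)Math.Min(255, (int)Math.Round(y));   // round, then guard the upper bound
    }
}
```

Pure red maps to 76, pure green to 150, and pure blue to 29, which reflects that green contributes most to perceived brightness and blue least.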

3. Binary Conversion

A binary image is essentially an image whose pixels are either '0' or '1'. But we want to stay consistent in the way images are handled and viewed, so we represent 1 with 255. Our binary image is therefore also a 3-channel image, with values 255 and 0. Any gray-scale value greater than the threshold becomes 255, and it becomes 0 otherwise.

public static BitmapSource Gray2Binary(BitmapImage grayImage,double threshold)

{

if (threshold > 1)

{

throw new ApplicationException("Threshold must be between 0 and 1");

}

if (threshold < 0)

{

threshold = System.Math.Abs(threshold);

}

threshold = 255 * threshold;

Bitmap bmp = new Bitmap(grayImage);

for (int i = 0; i < bmp.NumRow; i++)

{

for (int j = 0; j < bmp.NumCol; j++)

{

PixelColor c = bmp.GetPixel(j, i);

int rd = c.Red;

double d1 = 0;

if (rd > threshold)

{

d1 = 255;

}

int c1 = (int)Math.Round(d1);

PixelColor c2 = new PixelColor(c1, c1, c1, c.Alpha);

bmp.SetPixel(j, i, c2);

}

}

bmp.Finalize();

return bmp.Image;

}

Here is the sample output of the operation.

The important thing here is that the routine expects its input to be a gray image. Once you have the result of the gray conversion in Image1, the main image renderer, you must also update the source BitmapImage iSrc as:

iSrc = Bitmap.BitmapImageFromBitmapSource(Image1.Source as BitmapSource);

Dilation and erosion are two major operations that are needed on binary images. Dilation is the process of filling the nearby pixels of a white pixel with white, and erosion is the reverse. To keep the article short, I am omitting the description of these simple methods.
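For readers who want a starting point, here is a minimal sketch of both operations on a plain two-dimensional array of 0 and 255 values with a square neighbourhood. It is not the app's implementation: the `Morphology` class is hypothetical, and positions outside the image are simply ignored, which is one of several possible border policies.

```csharp
using System;

// Hypothetical helper for illustration; img[row, col] holds 0 (black) or 255 (white).
public static class Morphology
{
    // A pixel becomes white if any in-range neighbour within 'radius' is white.
    public static byte[,] Dilate(byte[,] img, int radius) { return Apply(img, radius, true); }

    // A pixel stays white only if every in-range neighbour within 'radius' is white.
    public static byte[,] Erode(byte[,] img, int radius) { return Apply(img, radius, false); }

    private static byte[,] Apply(byte[,] img, int radius, bool dilate)
    {
        int rows = img.GetLength(0), cols = img.GetLength(1);
        var result = new byte[rows, cols];
        for (int r = 0; r < rows; r++)
        {
            for (int c = 0; c < cols; c++)
            {
                bool anyWhite = false, allWhite = true;
                for (int dr = -radius; dr <= radius; dr++)
                {
                    for (int dc = -radius; dc <= radius; dc++)
                    {
                        int rr = r + dr, cc = c + dc;
                        if (rr < 0 || rr >= rows || cc < 0 || cc >= cols) continue;
                        if (img[rr, cc] == 255) anyWhite = true; else allWhite = false;
                    }
                }
                result[r, c] = (dilate ? anyWhite : allWhite) ? (byte)255 : (byte)0;
            }
        }
        return result;
    }
}
```

Writing into a separate result array matters here: updating the input in place would let already-dilated pixels spread further within the same pass.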

Another color manipulation commonly used in image operations is color inversion. The logic is pretty simple: every channel value is replaced by (max - value). So if the red component of a pixel is 10, the inverted value will be 255 - 10 = 245.

Here is a sample output.

Transform

Transforms are mathematical mappings of data from one domain to another that expose hidden behaviour of the data. We use different types of transforms in image processing, such as the wavelet transform, the DCT, and so on.

We have already implemented the most common transforms in this studio. For continuity of the discussion, I will show how we have used the wavelet transform.

Wavelet Transform

A wavelet transform basically gives a multi-resolution image. When you pass an image through one level of the transform, you get four sub-images: LL, LH, HL, and HH. LL is the low-pass (averaged) component, which retains a half-size approximation of the actual image. LH, HL, and HH are the three detail sub-images that hold the differences along rows, columns, and diagonals. The wavelet transform is a great means of extracting edges and other features that are essential for pattern recognition.

Our wavelet transform takes three inputs: the image, a function value that can be either 0 or 1, and a threshold. 0 returns the edges extracted from the wavelet-transformed image, and 1 returns all the sub-images combined into a single image. Detail coefficients whose magnitude is at or below the threshold are set to zero before reconstruction.

public static BitmapSource WaveletTransform(BitmapImage bmpSource, int function,int m_threshold)

{

int[,] orgred;

int[,] orgblue;

int[,] orggreen;

int[,] rowred;

int[,] rowblue;

int[,] rowgreen;

int[,] colred;

int[,] colblue;

int[,] colgreen;

int[,] scalered;

int[,] scaleblue;

int[,] scalegreen;

int[,] recrowred;

int[,] recrowblue;

int[,] recrowgreen;

int[,] recorgred;

int[,] recorgblue;

int[,] recorggreen;

Bitmap bitmap = new Bitmap(bmpSource);

orgred = new int[bitmap.Height + 1, bitmap.Width + 1];

orgblue = new int[bitmap.Height + 1, bitmap.Width + 1];

orggreen = new int[bitmap.Height + 1, bitmap.Width + 1];

rowred = new int[bitmap.Height + 1, bitmap.Width + 1];

rowblue = new int[bitmap.Height + 1, bitmap.Width + 1];

rowgreen = new int[bitmap.Height + 1, bitmap.Width + 1];

colred = new int[bitmap.Height + 1, bitmap.Width + 1];

colblue = new int[bitmap.Height + 1, bitmap.Width + 1];

colgreen = new int[bitmap.Height + 1, bitmap.Width + 1];

scalered = new int[bitmap.Height + 1, bitmap.Width + 1];

scaleblue = new int[bitmap.Height + 1, bitmap.Width + 1];

scalegreen = new int[bitmap.Height + 1, bitmap.Width + 1];

recrowred = new int[bitmap.Height + 1, bitmap.Width + 1];

recrowblue = new int[bitmap.Height + 1, bitmap.Width + 1];

recrowgreen = new int[bitmap.Height + 1, bitmap.Width + 1];

recorgred = new int[bitmap.Height + 1, bitmap.Width + 1];

recorgblue = new int[bitmap.Height + 1, bitmap.Width + 1];

recorggreen = new int[bitmap.Height + 1, bitmap.Width + 1];

{

for (int i = 0; i < bitmap.Height; i++)

{

for (int j = 0; j < bitmap.Width; j++)

{

orgred[i, j] = bitmap.GetPixel(j,i).Red;

orggreen[i, j] = bitmap.GetPixel(j, i).Green;

orgblue[i, j] = bitmap.GetPixel(j, i).Blue;

}

}

}

for (int r = 0; r < bitmap.Height; r++)

{

int k = 0;

for (int p = 0; p < bitmap.Width; p = p + 2)

{

rowred[r, k] = (int)((double)(orgred[r, p] + orgred[r, p + 1]) / 2);

rowred[r, k + (bitmap.Width / 2)] = (int)((double)(orgred[r, p] - orgred[r, p + 1]) / 2);

rowgreen[r, k] = (int)((double)(orggreen[r, p] + orggreen[r, p + 1]) / 2);

rowgreen[r, k + (bitmap.Width / 2)] = (int)((double)(orggreen[r, p] - orggreen[r, p + 1]) / 2);

rowblue[r, k] = (int)((double)(orgblue[r, p] + orgblue[r, p + 1]) / 2);

rowblue[r, k + (bitmap.Width / 2)] = (int)((double)(orgblue[r, p] - orgblue[r, p + 1]) / 2);

k++;

}

}

for (int c = 0; c < bitmap.Width; c++)

{

int k = 0;

for (int p = 0; p < bitmap.Height; p = p + 2)

{

colred[k, c] = (int)((double)(rowred[p, c] + rowred[p + 1, c]) / 2);

colred[k + bitmap.Height / 2, c] = (int)((double)(rowred[p, c] - rowred[p + 1, c]) / 2);

colgreen[k, c] = (int)((double)(rowgreen[p, c] + rowgreen[p + 1, c]) / 2);

colgreen[k + bitmap.Height / 2, c] = (int)((double)(rowgreen[p, c] - rowgreen[p + 1, c]) / 2);

colblue[k, c] = (int)((double)(rowblue[p, c] + rowblue[p + 1, c]) / 2);

colblue[k + bitmap.Height / 2, c] = (int)((double)(rowblue[p, c] - rowblue[p + 1, c]) / 2);

k++;

}

}

for (int r = 0; r < bitmap.Height; r++)

{

for (int c = 0; c < bitmap.Width; c++)

{

if (r >= 0 && r < bitmap.Height / 2 && c >= 0 && c < bitmap.Width / 2)

{

scalered[r, c] = colred[r, c];

scalegreen[r, c] = colgreen[r, c];

scaleblue[r, c] = colblue[r, c];

}

else

{

scalered[r, c] = Math.Abs((colred[r, c] - 127));

scalegreen[r, c] = Math.Abs((colgreen[r, c] - 127));

scaleblue[r, c] = Math.Abs((colblue[r, c] - 127));

}

}

}

// Zero the low-pass (top-left) quadrant; the arrays are indexed [row, column].
for (int r = 0; r < bitmap.Height / 2; r++)

{

for (int c = 0; c < bitmap.Width / 2; c++)

{

colred[r, c] = 0;

colgreen[r, c] = 0;

colblue[r, c] = 0;

}

}

for (int r = 0; r < bitmap.Height; r++)

{

for (int c = 0; c < bitmap.Width; c++)

{

if (!(r >= 0 && r < bitmap.Height / 2 && c >= 0 && c < bitmap.Width / 2))

{

if (Math.Abs(colred[r, c]) <= m_threshold)

{

colred[r, c] = 0;

}

else

{

}

if (Math.Abs(colgreen[r, c]) <= m_threshold)

{

colgreen[r, c] = 0;

}

else

{

}

if (Math.Abs(colblue[r, c]) <= m_threshold)

{

colblue[r, c] = 0;

}

else

{

}

}

}

}

for (int c = 0; c < bitmap.Width; c++)

{

int k = 0;

for (int p = 0; p < bitmap.Height; p = p + 2)

{

recrowred[p, c] = (int)((colred[k, c] + colred[k + bitmap.Height / 2, c]));

recrowred[p + 1, c] = (int)((colred[k, c] - colred[k + bitmap.Height / 2, c]));

recrowgreen[p, c] = (int)((colgreen[k, c] + colgreen[k + bitmap.Height / 2, c]));

recrowgreen[p + 1, c] = (int)((colgreen[k, c] - colgreen[k + bitmap.Height / 2, c]));

recrowblue[p, c] = (int)((colblue[k, c] + colblue[k + bitmap.Height / 2, c]));

recrowblue[p + 1, c] = (int)((colblue[k, c] - colblue[k + bitmap.Height / 2, c]));

k++;

}

}

for (int r = 0; r < bitmap.Height; r++)

{

int k = 0;

for (int p = 0; p < bitmap.Width; p = p + 2)

{

recorgred[r, p] = (int)((recrowred[r, k] + recrowred[r, k + (bitmap.Width / 2)]));

recorgred[r, p + 1] = (int)((recrowred[r, k] - recrowred[r, k + (bitmap.Width / 2)]));

recorggreen[r, p] = (int)((recrowgreen[r, k] + recrowgreen[r, k + (bitmap.Width / 2)]));

recorggreen[r, p + 1] = (int)((recrowgreen[r, k] - recrowgreen[r, k + (bitmap.Width / 2)]));

recorgblue[r, p] = (int)((recrowblue[r, k] + recrowblue[r, k + (bitmap.Width / 2)]));

recorgblue[r, p + 1] = (int)((recrowblue[r, k] - recrowblue[r, k + (bitmap.Width / 2)]));

k++;

}

}

Bitmap imgPtr = new Bitmap(bmpSource);

{

int k = 0;

for (int i = 0; i < bitmap.Height; i++)

{

for (int j = 0; j < bitmap.Width; j++)

{

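// Start from an opaque pixel; a default PixelColor has Alpha = 0 (fully transparent).
PixelColor pc = new PixelColor(0, 0, 0, 255);
if (function == 0)
{
    // Edge image reconstructed from the thresholded detail coefficients.
    pc.Red = (byte)Math.Min(255, Math.Abs(recorgred[i, j]));
    pc.Green = (byte)Math.Min(255, Math.Abs(recorggreen[i, j]));
    pc.Blue = (byte)Math.Min(255, Math.Abs(recorgblue[i, j]));
}
else
{
    // Multi-resolution view: all four sub-images in one picture.
    pc.Red = (byte)Math.Min(255, scalered[i, j]);
    pc.Green = (byte)Math.Min(255, scalegreen[i, j]);
    pc.Blue = (byte)Math.Min(255, scaleblue[i, j]);
}
imgPtr.SetPixel(j, i, pc);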

}

}

}

imgPtr.Finalize();

return imgPtr.Image;

}

Here is the result of Multi resolution image.

Above is the result from wavelet Edge detection.
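The row and column passes in the listing both apply the same one-dimensional step: each pair of neighbours is replaced by its average and half its difference, and the inverse adds and subtracts them again. Here is that step in isolation, as a sketch. The `Haar` class is a hypothetical name, and it uses doubles so that reconstruction is exact, whereas the listing works with truncated integers.

```csharp
using System;

// Hypothetical helper for illustration: one level of the 1-D Haar step.
public static class Haar
{
    // First half of the output: pair averages. Second half: pair half-differences.
    public static double[] Forward(double[] x)
    {
        if (x.Length % 2 != 0) throw new ArgumentException("Length must be even.");
        int half = x.Length / 2;
        var y = new double[x.Length];
        for (int k = 0; k < half; k++)
        {
            y[k] = (x[2 * k] + x[2 * k + 1]) / 2;          // low-pass part
            y[k + half] = (x[2 * k] - x[2 * k + 1]) / 2;   // high-pass (detail) part
        }
        return y;
    }

    // Rebuilds each pair as (average + difference, average - difference).
    public static double[] Inverse(double[] y)
    {
        if (y.Length % 2 != 0) throw new ArgumentException("Length must be even.");
        int half = y.Length / 2;
        var x = new double[y.Length];
        for (int k = 0; k < half; k++)
        {
            x[2 * k] = y[k] + y[k + half];
            x[2 * k + 1] = y[k] - y[k + half];
        }
        return x;
    }
}
```

The row {10, 6, 4, 8} transforms to {8, 6, 2, -2}: the averages 8 and 6 form the smoothed half, and the details 2 and -2 are large only where neighbouring values differ, which is why thresholded details highlight edges.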

Filtering

Filtering is one of the most essential operations on any image. It is used to remove noise from the image or to increase its visibility or sharpness. A linear filter is defined as the convolution of a kernel with each image block. So you basically define a kernel, or filter core, as an m x n matrix.

Example:

A linear filter multiplies the kernel values with the pixels underneath, sums the products, and replaces the central pixel with the sum. Not every filter is linear, though: one of the most prominent filters is the median filter. Let us see how the median filter works in our app.

public static BitmapSource MedianFilter(BitmapImage srcBmp, int []Kernel)

{

List<int> rd = new List<int>();

List<int> gr = new List<int>();

List<int> bl = new List<int>();

List<int> alp = new List<int>();

int xdir = Kernel[0];

int ydir = Kernel[1];

Bitmap bmp = new Bitmap(srcBmp);

Bitmap median = new Bitmap(srcBmp);

PixelColor c;

for (int i = 0; i < bmp.NumRow; i++)

{

for (int j = 0; j < bmp.NumCol; j++)

{

rd = new List<int>();

gr = new List<int>();

bl = new List<int>();

alp = new List<int>();

int ind = 0;

PixelColor pc=new PixelColor();

for (int i1 = i-ydir; i1 <= i+ydir; i1++)

{

for (int j1 = j-xdir; j1 <= j+xdir; j1++)

{

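// Skip only out-of-range positions and the center pixel itself.
if ((j1 < bmp.NumCol) && (i1 < bmp.NumRow) &&
    (j1 >= 0) && (i1 >= 0) && !((i1 == i) && (j1 == j)))
{
    // Read from the untouched copy so that earlier results do not feed later ones.
    pc = bmp.GetPixel(j1, i1);
    rd.Add(pc.Red);
    gr.Add(pc.Green);
    bl.Add(pc.Blue);
    alp.Add(pc.Alpha);
    ind++;
}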

}

}

if (rd.Count > 0)

{

int red = (int)GetMedian(rd.ToArray());

int green = (int)GetMedian(gr.ToArray());

int blue = (int)GetMedian(bl.ToArray());

int alpha = (int)GetMedian(alp.ToArray());

PixelColor pc2 = new PixelColor(red, green, blue, alpha);

median.SetPixel(j, i, pc2);

}

}

}

median.Finalize();

return median.Image;

}

It basically loops through the image, takes a pixel, extracts all the pixels around it, and finds their median. The central pixel is replaced with the median of its neighbours.

Although the window size is predefined, pixels at the edges do not have as many neighbours as pixels in the interior. So we use a List rather than a fixed array to collect the neighbours.

The GetMedian method returns the median of a list.

The median is the center element of a sorted array. If the array length is even, it is calculated as the mean of the two center values.
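The GetMedian helper is not listed in this article. The following is a minimal sketch of what such a helper might look like, under the definition given above; the `Stats.MedianOf` name is hypothetical.

```csharp
using System;

// Hypothetical helper for illustration: median of an integer array.
public static class Stats
{
    public static double MedianOf(int[] values)
    {
        if (values == null || values.Length == 0)
            throw new ArgumentException("At least one value is required.");
        int[] sorted = (int[])values.Clone();   // sort a copy, leave the input untouched
        Array.Sort(sorted);
        int mid = sorted.Length / 2;
        return sorted.Length % 2 == 1
            ? sorted[mid]                               // odd length: the center element
            : (sorted[mid - 1] + sorted[mid]) / 2.0;    // even length: mean of the two centers
    }
}
```

For {5, 1, 3} the median is 3, and for {4, 1, 3, 2} it is 2.5. A single outlier such as a noise pixel never becomes the median of its neighbourhood, which is why this filter removes salt-and-pepper noise well.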

Here is the result of Median Filtering

Talking about filtering, what would we do without a proper convolution filter that allows the user to perform kernel-based processing? One of the simplest forms of filter is the 3x3 filter depicted above. Let us explore a 3x3 filter for edge detection. We perform convolution with the kernel {-5 0 0, 0 0 0, 0 0 5} to detect diagonal edges.

Here is the code for convolution:

public static BitmapSource ConvFilter(BitmapImage b, int [,]Kernel)

{

Bitmap bmp = new Bitmap(b);

Bitmap rslt = new Bitmap(b);

int nRows = Kernel.GetUpperBound(0);

int nCols = Kernel.GetUpperBound(1);

double sumR = 0, sumG = 0, sumB = 0,sumAlpha=0;

int weight = 0;

int r = 0, c = 0;

int tot = 0;

string s = "";

for (int i = nRows/2; i < bmp.NumRow-nRows/2; i++)

{

for (int j = nCols/2; j < bmp.NumCol-nCols/2; j++)

{

sumR = sumG = sumB = sumAlpha=0;

r = 0;

c = 0;

PixelColor pc = bmp.GetPixel(j, i);

for (int i1 = -nRows/2; i1 <=nRows/2 ; i1++)

{

for (int j1 = -nCols/2; j1 <=nCols/2; j1++)

{

pc = bmp.GetPixel(j1+j, i1+i);

double red = (double)pc.Red;

sumR = sumR+red * (double)Kernel[r, c];

double green = (double)pc.Green;

double blue = pc.Blue;

sumG = sumG + green * (double)Kernel[r, c];

sumB = sumB + blue* (double)Kernel[r, c];

tot++;

c++;

}

r++;

c = 0;

}

// Clamp each channel sum to the valid byte range [0, 255].

if (sumR > 255)

{

sumR = 255;

}

if (sumR < 0)

{

sumR = 0;

}

if (sumG > 255)

{

sumG = 255;

}

if (sumG < 0)

{

sumG = 0;

}

if (sumB < 0)

{

sumB = 0;

}

if (sumB > 255)

{

sumB = 255;

}

pc.Red = (byte)(int)sumR;

pc.Green = (byte)sumG;

pc.Blue = (byte)sumB;

rslt.SetPixel(j, i, pc);

}

}

rslt.Finalize();

return rslt.Image;

}

One of the problems with a convolution filter is that the multiplication needs to be performed in the float or, better, the double domain, whereas the pixel values are bytes. So the system needs multiple typecasts. We are looking for a possible fix for this problem.

Here is the result of edge detection with the 3x3 diagonal mask mentioned above.
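To follow the arithmetic of the diagonal mask, it helps to run it on a tiny single-channel image. The sketch below applies a kernel to a two-dimensional integer array; the `Conv` class is a hypothetical name. Like the listing above, it multiplies the kernel with the window as-is, without flipping it. For kernels with integer weights, plain int arithmetic is enough, which is one possible way around the typecasting concern.

```csharp
using System;

// Hypothetical helper for illustration; img[row, col] holds gray values 0..255.
public static class Conv
{
    public static int[,] Apply(int[,] img, int[,] kernel)
    {
        int rows = img.GetLength(0), cols = img.GetLength(1);
        int kr = kernel.GetLength(0) / 2, kc = kernel.GetLength(1) / 2;
        var result = (int[,])img.Clone();          // border pixels keep their original value
        for (int r = kr; r < rows - kr; r++)
        {
            for (int c = kc; c < cols - kc; c++)
            {
                int sum = 0;
                for (int i = -kr; i <= kr; i++)
                    for (int j = -kc; j <= kc; j++)
                        sum += img[r + i, c + j] * kernel[i + kr, j + kc];
                result[r, c] = Math.Max(0, Math.Min(255, sum));   // clamp to the byte range
            }
        }
        return result;
    }
}
```

With the mask {-5 0 0, 0 0 0, 0 0 5}, a flat region gives -5v + 5v = 0, while a window whose top-left value is 10 and bottom-right value is 50 gives -50 + 250 = 200, so only diagonal intensity changes survive.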

Segmentation

Segmentation is the process of clubbing the colors and normalize them to a nearest color values so that overall number of colors

in the image is reduced. This helps in object detection in images. There are several color segmentation, but this App will support Men-Shift Segmentation and Face segmentation out of the box.

Mean-Shift Segmentation

The fundamental of this technique is very simple. First collect NxN neighbor pixels of a pixel (x,y). Calculate the mean and then check if the center is sufficiently closed to the mean. If so, replace the center pixel with the mean value. But this approach has certain limitations, With new high definitions cameras, there are huge color depths. So variation from one color to another color is huge. Hence I modified the algorithm in following way:

1) Calculate the HSV components from the RGB components

2) Run the mean shift algorithm on the HSV components

3) If the center pixel qualifies for replacement, then write the RGB mean into the RGB image and the HSV mean into the HSV image. The HSV image is thus clustered along with the RGB image. Once the process is complete, return the RGB image.
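
Step 1 can be sketched as follows. This is the textbook RGB to HSV conversion, not necessarily what ConvertImageFromRGB2HSV does internally; the `HsvSketch` class is hypothetical, and how the App scales H, S and V into byte channels is not shown in the article.

```csharp
using System;

public static class HsvSketch
{
    // Converts 8-bit RGB to HSV: h in degrees [0, 360), s and v in [0, 1].
    public static void RgbToHsv(byte r, byte g, byte b,
        out double h, out double s, out double v)
    {
        double rd = r / 255.0, gd = g / 255.0, bd = b / 255.0;
        double max = Math.Max(rd, Math.Max(gd, bd));
        double min = Math.Min(rd, Math.Min(gd, bd));
        double delta = max - min;

        v = max;
        s = max == 0 ? 0 : delta / max;

        if (delta == 0) h = 0;                                  // gray: hue undefined, use 0
        else if (max == rd) h = 60 * (((gd - bd) / delta) % 6);
        else if (max == gd) h = 60 * ((bd - rd) / delta + 2);
        else h = 60 * ((rd - gd) / delta + 4);
        if (h < 0) h += 360;                                    // wrap negative hues
    }
}
```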

Here is the code for mean shift algorithm:

public static BitmapSource MeanShiftSegmentation(BitmapImage src, double ThresholdDistance,int radious)

{

Bitmap rgb = new Bitmap(src);

Bitmap hsv = new Bitmap(Bitmap.BitmapImageFromBitmapSource(ConvertImageFromRGB2HSV(src)));

for (int i = 0; i < rgb.NumRow; i++)

{

for (int j = 0; j < rgb.NumCol; j++)

{

double tot=0;

double valsH = 0;

double valsS = 0;

double valsB = 0;

double valsR = 0;

double valsG = 0;

double valsBl = 0;

for (int i1 = -radious; i1 < radious; i1++)

{

for (int j1 = -radious; j1 < radious; j1++)

{

if (((i1 + i) >= 0) && ((i1 + i) < rgb.NumRow) &&

((j1 + j) >= 0) && ((j1 + j) < rgb.NumCol))

{

PixelColor pcHSV = hsv.GetPixel(j1 + j, i1 + i);

valsH+=(double)(pcHSV.Red);

valsS += (double)(pcHSV.Green);

valsB+=(double)(pcHSV.Blue);

PixelColor pcRGB = rgb.GetPixel(j1 + j, i1 + i);

valsR += (double)(pcRGB.Red);

valsG += (double)(pcRGB.Green);

valsBl += (double)(pcRGB.Blue);

tot++;

}

}

}

double mH = valsH/tot;

double mS =valsS/tot;

double mV = valsB/tot;

byte mR =(byte) (valsR/tot);

byte mG = (byte)(valsG/tot);

byte mB = (byte)(valsBl/tot);

PixelColor pcv = hsv.GetPixel( j, i);

PixelColor pcR = new PixelColor();

double avgColor = (Math.Abs(pcv.Red - mH) +

Math.Abs(pcv.Green - mS) + Math.Abs(pcv.Blue - mV)) / 3;

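// Replace the center pixel only when it is close enough to the neighborhood mean in HSV space.
if (avgColor < ThresholdDistance)

{

pcR = new PixelColor(mR, mG, mB, 255);

rgb.SetPixel(j, i, pcR);

// Step 3: keep the HSV image in sync with the RGB image.
hsv.SetPixel(j, i, new PixelColor((byte)mH, (byte)mS, (byte)mV, 255));

}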

}

}

rgb.Finalize();

return rgb.Image;

}

Here is the result of segmentation. You can see that the prominent regions are filled with their nearest color and variations are minimal, while edges are retained. By changing the distance and radius parameters, you can change the behavior of the segmentation process.

Here is the result of Face Detection, which is based on color thresholding in the YCbCr color space followed by morphological processing.
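
The thresholding step can be sketched like this. The conversion uses the full-range BT.601 (JPEG) coefficients, and the limits 77 to 127 for Cb and 133 to 173 for Cr are a commonly quoted skin range; both are assumptions here, since the article does not state the values the App uses. The `SkinSketch` class is hypothetical, and the morphological clean-up is not shown.

```csharp
public static class SkinSketch
{
    // Returns true when the pixel's chroma falls inside the assumed skin range.
    public static bool IsSkin(byte r, byte g, byte b)
    {
        // Full-range BT.601 chroma components; luma is ignored on purpose
        // so that the test is less sensitive to brightness.
        double cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b;
        double cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b;
        return cb >= 77 && cb <= 127 && cr >= 133 && cr <= 173;
    }
}
```

Running `IsSkin` over every pixel yields a binary mask, which is what the morphological processing would then clean up.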

Fun

What is an Ultrabook App without a fun element? People use Apps for entertainment, and there is a lot of fun to be had with images.

We have already integrated three applications out of the box.

- Inpainting

- Face Collage

- Anaglyph

Inpainting is a method by which you can remove undesired objects from an image. Say you have a nice picture with your former girlfriend in which you are looking damn smart, but you are getting married to someone else and you don't want to delete the photo. So? Use inpainting and remove your ex from the image. Or you have a beautiful snap with some irritating electric wires hanging across it: use inpainting to remove them.

Read my article on inpainting for more details: <<Here>>

Face Collage

Say you have an album of many friends and you want to create a single photo by tiling all the photos such that the tiled result itself depicts a picture.

Here is a Collage of my 18 month old Son.

Here goes the code

public static BitmapSource MakeCollage(BitmapImage srcBmp,Bitmap[]allBmp,int BlockSizeRow,int BlockSizeCol)

{

Bitmap Rslt = new Bitmap(srcBmp);

Bitmap src = new Bitmap(srcBmp);

int NumRow = src.Height;

int numCol = src.Width;

Bitmap srcBlock = new Bitmap(BlockSizeRow, BlockSizeCol);

for (int i = 0; i < NumRow - BlockSizeRow; i += BlockSizeRow)

{

for (int j = 0; j < numCol - BlockSizeCol; j += BlockSizeCol)

{

srcBlock = new Bitmap(BlockSizeRow, BlockSizeCol);

for (int i1 = 0; i1 < (BlockSizeRow); i1++)

{

for (int j1 = 0; j1 < (BlockSizeCol); j1++)

{

srcBlock.SetPixel(j1, i1, Rslt.GetPixel(j + j1, i + i1));

}

}

srcBlock.Finalize();

double dst = double.MaxValue; // smallest block distance found so far

int small = -1;

for (int k = 0; k < allBmp.Length; k++)

{

double d = 0;

for (int i1 = 0; i1 < BlockSizeRow; i1++)

{

for (int j1 = 0; j1 < BlockSizeCol; j1++)

{

PixelColor c1 = srcBlock.GetPixel(j1, i1);

int rd1 = c1.Red;

int gr1 = c1.Green;

int bl = c1.Blue;

PixelColor c2 = allBmp[k].GetPixel(j1, i1);

int rd2 = c2.Red;

int gr2 = c2.Green;

int bl2 = c2.Blue;

d = d + Math.Abs(rd1 - rd2) + Math.Abs(gr1 - gr2) + Math.Abs(bl - bl2);

}

}

d = d / (double)(BlockSizeRow * BlockSizeCol);

if (d < dst)

{

dst = d;

small = k;

}

}

for (int i1 = 0; i1 < BlockSizeRow; i1++)

{

for (int j1 = 0; j1 < BlockSizeCol; j1++)

{

PixelColor c1 = allBmp[small].GetPixel(j1, i1);

// (j1 + j, i1 + i) always lies inside Rslt because of the outer loop bounds,
// so no exception handling is needed here.
Rslt.SetPixel(j1 + j, i1 + i, c1);

}

}

}

}

Rslt.Finalize();

return Rslt.Image;

}

More description about the technique shown here for collage can be found <here>

Anaglyph

Anaglyphs are red-cyan channel images which, when viewed through 3D glasses with one red and one cyan lens, give a 3D impression. This is the primary interface for the 3D vision that is planned for a future version of the App.

Here is an Anaglyph generated from a Pair of Stereoscopic images.

Here are the source stereo images

And Here is the 3d View that we would get

This image will be generated by mapping the depth Map of the Anaglyph image with the Left view image. Depth map is the visual disparity associated with the stereoscopic view.
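
A red-cyan anaglyph is usually composed by taking the red channel from the left view and the green and blue channels from the right view. The sketch below shows that composition on plain arrays. The `AnaglyphSketch` class and the [row, col, channel] layout are assumptions for illustration, and the article does not show which composition the App itself uses.

```csharp
using System;

public static class AnaglyphSketch
{
    // left and right are same-sized images stored as [row, col, channel]
    // with the channels ordered R, G, B.
    public static byte[,,] Compose(byte[,,] left, byte[,,] right)
    {
        if (left.GetLength(0) != right.GetLength(0) ||
            left.GetLength(1) != right.GetLength(1))
            throw new ArgumentException("Stereo images must have the same size.");

        int rows = left.GetLength(0), cols = left.GetLength(1);
        var result = new byte[rows, cols, 3];
        for (int i = 0; i < rows; i++)
        {
            for (int j = 0; j < cols; j++)
            {
                result[i, j, 0] = left[i, j, 0];   // red from the left eye
                result[i, j, 1] = right[i, j, 1];  // green from the right eye
                result[i, j, 2] = right[i, j, 2];  // blue from the right eye
            }
        }
        return result;
    }
}
```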

Steganography

To add to the fun part, we are also ready with a unique image steganography process that allows you to hide an image behind another image of the same size. Yes, you got it right: source and payload are of the same size, which eliminates the need for the extra pixels that are typical of steganography.

Here is the Algorithm:

Encoding process:

- Input the image to be hidden (img_hide)

- Take wavelet transform with HAAR wavelet for img_hide and call the components: cA, cH, cV, cD respectively.

- Make redundant values in the img_hide as 0.

- Find the maximum of all the components and normalize each sub image by dividing it with the maximum values.

- DEC is the data that contains normalized wavelet decomposed values of img_hide

- Decompose the cover image im, into cA1,cV1,cH1,cD1 components using HAAR wavelet

- Store the cA size, M1, M2, M3, M4 in the first four values of cH1.

- Store normalized img_hide data dec in cV1 and cD1.

- Take IDWT of DEC1 which is nothing but idwt(cA1,cH1,cV1,cD1) and call it as S.

- While saving the image S directly there may be loss during conversion so we normalize S.

- Convert S to 16 bit format with the value M stored as the first pixel value. Where M=maximum (absolute(S)).

Decoding process:

- Read the stego image to S1.

- Extract the normalization value M from the first pixel.

- Set the first pixel value as second pixel for compensating for the losses that will incur otherwise.

- Convert S1 from uint16 scale to the original scale.

- Apply the wavelet transform to S1 and call the components cA1, cV1, cH1, cD1 respectively.

- Extract the data from cH1.

- Denormalize the values.

- Extract dec from the combined data of cV1 and cD1.

- Denormalize dec.

- Take IDWT of set {cA,cD,cV,cH} and call it as rec.

- It is the recovered image
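
The Haar decomposition that both the encoding and the decoding steps rely on can be sketched as follows. This is a single-level, orthonormal 2D Haar transform on a double array with even dimensions. The `HaarSketch` class is hypothetical, and assigning the names cH and cV to the row-difference and column-difference bands is an assumption; the payload embedding and the 16-bit conversion are not shown.

```csharp
using System;

public static class HaarSketch
{
    // Single-level 2D Haar transform. img must have even height and width.
    public static void Forward(double[,] img,
        out double[,] cA, out double[,] cH, out double[,] cV, out double[,] cD)
    {
        int rows = img.GetLength(0) / 2, cols = img.GetLength(1) / 2;
        cA = new double[rows, cols];
        cH = new double[rows, cols];
        cV = new double[rows, cols];
        cD = new double[rows, cols];
        for (int i = 0; i < rows; i++)
        {
            for (int j = 0; j < cols; j++)
            {
                // One 2x2 block:  a b
                //                 c d
                double a = img[2 * i, 2 * j], b = img[2 * i, 2 * j + 1];
                double c = img[2 * i + 1, 2 * j], d = img[2 * i + 1, 2 * j + 1];
                cA[i, j] = (a + b + c + d) / 2;   // approximation
                cH[i, j] = (a + b - c - d) / 2;   // top row minus bottom row
                cV[i, j] = (a - b + c - d) / 2;   // left column minus right column
                cD[i, j] = (a - b - c + d) / 2;   // diagonal detail
            }
        }
    }

    // Inverse of Forward: rebuilds the image from the four sub-bands.
    public static double[,] Inverse(double[,] cA, double[,] cH, double[,] cV, double[,] cD)
    {
        int rows = cA.GetLength(0), cols = cA.GetLength(1);
        var img = new double[2 * rows, 2 * cols];
        for (int i = 0; i < rows; i++)
        {
            for (int j = 0; j < cols; j++)
            {
                double A = cA[i, j], H = cH[i, j], V = cV[i, j], D = cD[i, j];
                img[2 * i, 2 * j] = (A + H + V + D) / 2;
                img[2 * i, 2 * j + 1] = (A + H - V - D) / 2;
                img[2 * i + 1, 2 * j] = (A - H + V - D) / 2;
                img[2 * i + 1, 2 * j + 1] = (A - H - V + D) / 2;
            }
        }
        return img;
    }
}
```

Because the transform is invertible, anything written into the detail bands of the cover image survives the inverse transform up to rounding, which is why the algorithm normalizes before saving in 16-bit format.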

Utilities

These are small applications that ship with the main application and are often needed for business and educational purposes.

The utilities that are almost ready to ship with the application are:

- Gif Movie maker

- Visible and Invisible Watermarking

- Dicom Viewer and Jpeg Exporter

Content Based Image Search and Retrieval

The first part of content search, searching through a template, is ready and is explained below. For the learning machine and content-based image search, however, you will need to come back again.

Search a template within an Image

Template matching is a technique where you select one (or more) small image, called the template t, and search for it over a large image called f. Template matching works on the principle of cross-correlation, which measures how close the values of f within a block the size of t are to the values of t.

In the simplest terms, the process can be explained by the following formula:
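
Written out in the form that the TemplateMatch method below computes (a reconstruction from that code, with symbol names chosen here), the correlation score at offset (u, v) is:

```latex
\gamma(u,v) \;=\; \frac{1}{n}\sum_{x,y}
\frac{\bigl(f(x+u,\,y+v)-\mu_f\bigr)\,\bigl(t(x,y)-\mu_t\bigr)}{\sigma_f\,\sigma_t}
```

Here n is the number of pixels in the template, the sum runs over all template coordinates (x, y), \mu_f and \sigma_f are the mean and standard deviation of the whole image f, and \mu_t and \sigma_t are those of the template t. The best match is the offset with the largest |\gamma(u,v)|.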

Software like OpenCV provides a good template matching technique. However, with the current framework my primary goal was to eliminate any third-party or GDI dependency, and even pointers, so that the library could be rewritten in Java with ease. So here is the correlation code that I used.

public static Int32Rect TemplateMatch(BitmapImage mainImage, BitmapImage templateImage)

{

Bitmap f=new Bitmap(mainImage);

Bitmap t=new Bitmap(templateImage);

int BlockSizeRow = t.NumRow;

int BlockSizeCol = t.NumCol;

int NumRow = f.NumRow;

int numCol = f.NumCol;

double dst = 0;

int smallX = 0,smallY=0;

double miuF = CalculateMean(f.GetImageAsList());

double stdF = CalculateStdDev(f.GetImageAsList());

double miuT = CalculateMean(t.GetImageAsList());

double stdT = CalculateStdDev(t.GetImageAsList());

for (int i = 0; i < NumRow - BlockSizeRow; i ++)

{

for (int j = 0; j < numCol - BlockSizeCol; j ++)

{

double sm = 0;

for (int i1 = 0; i1 < (BlockSizeRow); i1++)

{

for (int j1 = 0; j1 < (BlockSizeCol); j1++)

{

PixelColor fpc = f.GetPixel(j1 + j, i1 + i);

PixelColor tpc = t.GetPixel(j1, i1);

sm =sm+ ((double)fpc.Red - miuF) * ((double)tpc.Red - miuT) / (stdF * stdT);

}

}

sm=sm/(double)(BlockSizeCol*BlockSizeRow);

sm = Math.Abs(sm);

if (sm > dst)

{

dst = sm;

// i indexes rows (y) and j indexes columns (x).
smallX = j;

smallY = i;

}

}

}

return new Int32Rect(smallX, smallY, (int)templateImage.Width, (int)templateImage.Height);

}

In simple words, the above method implements the formula I mentioned. It finds the block of f that best matches the template and returns a rectangle of the template's size starting at the coordinates of the best match.

But wait, before you conclude that this is easy, I would like to emphasize a few facts. Template matching here is performed in gray scale and the method is spatial, so the chance of a mismatch is quite high. The remedy is to convert spatial template matching into spectral template matching by taking the DCT or wavelet transform of both the template and f and then running the same matching on the transforms. I have already added the wavelet technique. DCT will be covered in the next update.

Here is the result of template matching process with a screenshot of the simplest interface that I am using to test the algorithms.

I am using a small template, seen at the bottom left corner, to match against one of the images in my gallery. To overlay the rectangle on the image, I am using a canvas. The MainImage source is assigned as the source of an ImageBrush. One rectangle is filled with the source image and added as a child of the canvas. Another rectangle draws the result returned by the TemplateMatch method and is added as another child of the canvas.

Here is the overlaying code.

Rectangle exampleRectangle = new Rectangle();

exampleRectangle.TranslatePoint(new Point(r.X, r.Y), Image1);

exampleRectangle.Width = 128;

exampleRectangle.Height = 128;

ImageBrush myBrush = new ImageBrush();

myBrush.ImageSource = Image1.Source;

exampleRectangle.Fill = myBrush;

canvas.Children.Insert(0, exampleRectangle);

Rectangle exampleRectangle1 = new Rectangle();

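// TranslatePoint only converts coordinates and does not move the element,
// so position the rectangle on the canvas explicitly.
Canvas.SetLeft(exampleRectangle1, r.X + 30);

Canvas.SetTop(exampleRectangle1, r.Y + 30);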

exampleRectangle1.Width = r.Width;

exampleRectangle1.Height = r.Height;

exampleRectangle1.Stroke = Brushes.Red;

exampleRectangle1.StrokeThickness = 4;

canvas.Children.Insert(1, exampleRectangle1);

Communication

When I showed the App to my wife and asked her to evaluate it, she bluntly said: "If I were to choose your app, I would not. Yes, it gives many processing, image searching and modification capabilities, but I cannot share anything! And that sucks big time!"

I was stunned and thought: yeah, right. Anything cool we do, we want to show and share, and we want to do it from the App. So I decided to add a communication framework alongside the image processing framework for easy sharing of photos.

The framework gives two fetching and sharing options out of the box: Facebook and Bluetooth.

First, we can download images straight from our own and our friends' Facebook albums, and we can publish the photo shown in the Image1 main image pane directly to Facebook.

A. Facebook Integration

1. Obtaining API Key

For any Facebook application, the first thing you need is an App approved by Facebook (the App name cannot contain the word Face).

So open https://developers.facebook.com

Click on Apps and then on Create Apps. Give the App a name and obtain an API key. Observe the following screenshots; the fields marked in green are important.

2. Configuring your App with API details

Open the FacebookingTest solution in Visual Studio 2010. At the application level, the first requirement is that the App is authenticated by Facebook.

If you are distributing the App, whoever uses it must agree to let the application fetch or access their Facebook data (called the Graph).

So while connecting to Facebook, the application must prove that it is a valid App.

After opening the project, you will see App.config in Solution Explorer. Open the file.

Save the code. The first step the App must perform is to call the Facebook authorization service, passing your App ID as the client_id that requests Facebook to authenticate the App. If the authentication is successful, you will be redirected to http://www.facebook.com/connect/login_success.html with a session token that confirms the successful login.
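
The authorization round trip can be sketched as below, assuming the client-side OAuth dialog flow in which the token comes back in the URL fragment of the redirect. The `FacebookLoginSketch` class, its method names and the scope value passed by the caller are hypothetical; check the permission names and the dialog URL against the current Facebook documentation before relying on them.

```csharp
using System;

public static class FacebookLoginSketch
{
    public const string SuccessUrl = "http://www.facebook.com/connect/login_success.html";

    // Builds the URL of the login dialog that the App opens in a browser control.
    public static string BuildLoginUrl(string appId, string scope)
    {
        return "https://www.facebook.com/dialog/oauth"
             + "?client_id=" + Uri.EscapeDataString(appId)
             + "&redirect_uri=" + Uri.EscapeDataString(SuccessUrl)
             + "&response_type=token"
             + "&scope=" + Uri.EscapeDataString(scope);
    }

    // Returns the access token from the fragment of the redirect URL,
    // or null when the URL carries no token.
    public static string ExtractAccessToken(string redirectedUrl)
    {
        int hash = redirectedUrl.IndexOf('#');
        if (hash < 0) return null;
        foreach (string pair in redirectedUrl.Substring(hash + 1).Split('&'))
        {
            string[] kv = pair.Split(new[] { '=' }, 2);
            if (kv.Length == 2 && kv[0] == "access_token")
                return Uri.UnescapeDataString(kv[1]);
        }
        return null;
    }
}
```

A typical use is to navigate a browser control to `BuildLoginUrl(appId, "user_photos")` and call `ExtractAccessToken` on each navigated URL until it returns a token.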

Then you need to create a picture-fetching method.

When you fetch details such as the friend list or your friends' status updates, you work with the 'Get' type of API, and when you want to post something, you work with the 'Post' API.

This page gives the details of all APIs:

https://developers.facebook.com/docs/reference/api/

https://developers.facebook.com/docs/ref.../api/post/ gives the details of the parameters of the Post API.

me/feed gives your own feed. You need to look into the Post API fields to understand what you can pass.

Observe that the result is stored in a dynamic data object, so it can consume different formats of data. The result of any Facebook Graph API call is a JSON stream. The dynamic data type can parse the stream and enumerate the data objects easily.

The call sends you all pictures from your own and your friends' lists.

B. Bluetooth Integration

We use the InTheHand namespace of the 32feet Bluetooth library to search for devices and send the image. A SelectBluetoothDeviceDialog first lets the user list the Bluetooth devices in proximity and select the device to which the image in Image1 is transferred.

We first save the image of Image1 using the Save utility of our Bitmap class and then create an ObexWebRequest with a Uri that combines the selected device address and the filename. We read the file into the request and ask for the response, which writes the image to the selected Bluetooth device. Once successful, we delete the local image.

Here is the sample code:

private void sendfile()

{

SelectBluetoothDeviceDialog dialog = new SelectBluetoothDeviceDialog();

dialog.ShowAuthenticated = true;

dialog.ShowRemembered = true;

dialog.ShowUnknown = true;

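// Stop if the user closes the dialog without choosing a device.
if (dialog.ShowDialog() != System.Windows.Forms.DialogResult.OK)

{

return;

}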

BitmapImage iSrc = Bitmap.BitmapImageFromBitmapSource(Image1.Source as BitmapSource);

Bitmap bmp = new Bitmap(iSrc);

bmp.Save("my.jpg");

System.Uri uri = new Uri("obex://" +

dialog.SelectedDevice.DeviceAddress.ToString() + "/" + "my.jpg");

ObexWebRequest request = new ObexWebRequest(uri);

request.ReadFile("my.jpg");

ObexWebResponse response = (ObexWebResponse)request.GetResponse();

MessageBox.Show(response.StatusCode.ToString());

response.Close();

}

Gallery Management and Content based Image Search

The idea behind Gallery Management is very simple. A user can have multiple galleries (like albums in Facebook). The main config file stores the details of each gallery.

<Gallery>

<Name> My Wedding</Name>

<ConfigLocation>MyWedding.xml</ConfigLocation>

<Message> Photos of My Wedding</Message>

<Date>dd.mm.yyyy</Date>

<GeoLocation>

<Latitude>LT</Latitude>

<Longitude>LN</Longitude>

</GeoLocation>

</Gallery>

Each gallery is stored as a node with this structure in the main config file. The user may fill in the attributes or leave them blank. As seen from the configuration file, it stores the location of each independent gallery. The structure of an independent gallery is as follows:

<Picture>

<location>c:\Photos\wed1.jpg</location>

<NumFaces>1</NumFaces>

<Face>

<Name>Rupam</Name>

<Rectangle> 20 30 80 110</Rectangle>

<FacePca>1.7 3.49 6.66 2.23 8.41 9.92 9.99 17.6</FacePca>

</Face>

<StandardFeatures>

<ColorFeatures>MR MG MB SR SG SB</ColorFeatures>

<TextureFeatures>MH MS MV SH SS SV</TextureFeatures>

<ShapeFeatures>

<Zernike>

ZM1_M ZM2_M ZM3_M ZM4_M

</Zernike>

</ShapeFeatures>

</StandardFeatures>

</Picture>
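
Reading the face tags back from a Picture element like the one above can be sketched with LINQ to XML. This assumes well-formed XML with the element names shown; the `FaceTag` and `GallerySketch` types are hypothetical and are not the App's own classes.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Xml.Linq;

public class FaceTag
{
    public string Name;
    public int[] Rectangle;   // the four integers stored in <Rectangle>
    public double[] Pca;      // the values stored in <FacePca>
}

public static class GallerySketch
{
    // Parses one <Picture> element and returns its <Face> entries.
    public static List<FaceTag> ReadFaces(string pictureXml)
    {
        XElement picture = XElement.Parse(pictureXml);
        var sep = new[] { ' ' };
        return picture.Elements("Face").Select(face => new FaceTag
        {
            Name = ((string)face.Element("Name") ?? "").Trim(),
            Rectangle = ((string)face.Element("Rectangle") ?? "")
                .Split(sep, StringSplitOptions.RemoveEmptyEntries)
                .Select(s => int.Parse(s, CultureInfo.InvariantCulture))
                .ToArray(),
            Pca = ((string)face.Element("FacePca") ?? "")
                .Split(sep, StringSplitOptions.RemoveEmptyEntries)
                .Select(s => double.Parse(s, CultureInfo.InvariantCulture))
                .ToArray()
        }).ToList();
    }
}
```

Parsing with the invariant culture keeps values such as 3.49 readable on machines whose regional settings use a decimal comma.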

Every picture is treated as an element in the gallery. Every picture can have multiple faces, detected with skin segmentation and connected components as explained above. We extract 8 dominant PCA components from the Eigenfaces. When you browse an image, the App tries to locate the faces automatically and marks them. The PCA of each face rectangle is extracted and normalized. The user can also select a face using the mouse or a stylus. As the database grows, more information about the faces becomes available. When new photos are loaded, template matching with PCA is used to search for the faces, and the system becomes really accurate at locating them.

With the Facebook imaging system, you need to tag faces manually. With this App, it is done automatically: faces are auto-tagged after detection using PCA-based face matching. As you would expect, the misdetection rate is very high at the beginning because of the low number of training faces, so the user can re-tag the faces with the correct name. As your gallery expands, recognition accuracy increases.

Once faces are extracted, the rest of the image is treated as background. We extract global features from the image: the mean of the red, green and blue color components (MR, MG, MB), the mean of the HSV texture components (MH, MS, MV), the standard deviation of the color components (SR, SG, SB) and the standard deviation of the texture components (SH, SS, SV). We also incorporate global shape information for proper matching of flowers, animals, food, etc.
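
The color part of this feature vector can be sketched as follows, assuming each channel is available as a flat byte array and using the population standard deviation. The `FeatureSketch` class is hypothetical; the same helper would apply to the H, S and V channels for the texture features.

```csharp
using System;

public static class FeatureSketch
{
    // Returns { mean, standard deviation } of one channel (population std).
    public static double[] MeanAndStd(byte[] values)
    {
        if (values.Length == 0) throw new ArgumentException("Channel is empty.");
        double sum = 0;
        foreach (byte v in values) sum += v;
        double mean = sum / values.Length;

        double squares = 0;
        foreach (byte v in values) squares += (v - mean) * (v - mean);
        return new[] { mean, Math.Sqrt(squares / values.Length) };
    }

    // Produces the values in the MR MG MB SR SG SB order of <ColorFeatures>.
    public static double[] ColorFeatures(byte[] red, byte[] green, byte[] blue)
    {
        double[] r = MeanAndStd(red), g = MeanAndStd(green), b = MeanAndStd(blue);
        return new[] { r[0], g[0], b[0], r[1], g[1], b[1] };
    }
}
```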

Shape features are extracted from Zernike moments. A Zernike moment is a moment extracted from the polar harmonics of the image. Initially we restrict ourselves to 4th-order Zernike moments. Other shape features that I am trying to integrate are the Polar Histogram and Shape Context.

Once you load an image and search, the search is performed across all your galleries and a temporary XML is created listing the files that match the search. The user can save the search result as another gallery.

Here is a sample screenshot of the tested algorithm.

Left is the Query image, right is the matched image. Lower listbox gives all the matches in order of closeness.
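
Ordering the matches by closeness can be sketched as a nearest-neighbor ranking over the stored feature vectors. The Euclidean distance used here is an assumption, since the article does not name the metric, and the `SearchSketch` class is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class SearchSketch
{
    // Euclidean distance between two feature vectors of equal length.
    public static double Distance(double[] a, double[] b)
    {
        if (a.Length != b.Length) throw new ArgumentException("Vectors differ in length.");
        double sum = 0;
        for (int i = 0; i < a.Length; i++) sum += (a[i] - b[i]) * (a[i] - b[i]);
        return Math.Sqrt(sum);
    }

    // Returns the picture locations ordered from closest to farthest match.
    public static List<string> Rank(double[] query, IDictionary<string, double[]> gallery)
    {
        return gallery
            .OrderBy(entry => Distance(query, entry.Value))
            .Select(entry => entry.Key)
            .ToList();
    }
}
```

Because color, texture and shape features live on different scales, each feature would normally be normalized before the distance is taken so that no single group dominates the ranking.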

Summary of Features and Usage

User Mode

1) User can manage multiple Galleries

2) Faces are Automatically extracted and Tagged

3) Pictures can be Imported through Bluetooth and Facebook

4) Pictures can be published to Facebook or shared through Bluetooth

5) Pictures can be searched based on Geo Location, Features, Faces. Search input can be a simple tag or it can be an Image itself. Result of Search can also be saved as a Gallery

6) User can Create a Face Collage of a Face from all the pictures in a Gallery

7) User can remove unwanted objects from Images using Inpainting Technique

8) Users can hide an Image behind another Image

9) User can Hide text behind the Images

10) User can create Anaglyph image from Stereoscopic Pair of Images

11) Depth map extraction and 3-d Rendering of the images

12) GPS based automatic rendering of inter Gallery Pictures

13) Extract Still images from webcam and load into gallery

14) Ambient Light based Brightness adjustment

15) Stylus and Touch based contour Selection

16) Cool Presentation mode with slideshow and slideshow effects.

Developer Mode

1) Use the framework and Simple Algo-Language to develop own image processing routine

3) Easy design of Filters

4) Import the techniques and apply on video

5) Develop face Biometric Solutions

6) Create Custom CBIR System for any contents

In short you can manage Images as contents and explore the great power of image processing.

How it is different from OpenCV or Matlab?

Matlab and OpenCV need coding to implement techniques and are mainly meant for developing algorithms. No other software (at least to my knowledge) gives you the option of having fun with images while building your own techniques at the same time. So if you do not have ImageGrassy, you have probably never known how powerful image processing can be!

Points of Interest

Final integration testing is underway for getting the App approved for the AppStore. Having cleared the first round, the next target is to offer users an exclusive and fun experience with their images. So I am introducing another section, "Product Tour", where you will see the initial screens and the functioning of the entire system. Your suggestions are valuable. Don't forget to leave your comments!

Disclaimer: None of the interfaces or designs are final yet. I am keeping the Product Tour section updated to let you know about current development and to get valuable suggestions from you!

Why Ultrabook?

You know it! When people visit an Ultrabook App store, they discover that the Apps here are productive tools like any other Microsoft product and not just stripped-down and deformed versions of one of those thousands of Apple Apps. Second, the design, the hybrid programming model and cool applications need a cool platform; on Windows 7 the App would look a bit like a clumsy editor into which somebody has clubbed thousands of routines.

Where is the Code?

I have no plan to release this as another freeware like the many others that I have made and distributed. It is a pure WPF model that abstracts the WPF powers and gives wings to your conventional programming skills. As it is planned and written as a commercial App, it is difficult to release the code. But wait, all the snippets are functional and you can build your own App with them or use them in your project. All of them work!

Why not other sensors?

Too many cooks spoil the party. So I will limit the App's sensor usage to touch, stylus and light sensing. For God's sake, it is more of a laptop and you are not going to twist it in your hand (I take my words back).

Suggestions for any other cool application that you think is possible with the framework are also welcome. Don't forget to drop a comment about features you think are absolutely necessary in the App.

Which Category?

At first I thought of Metro, but when I started testing the App on a monotonous-color Metro interface, it looked silly. Because the App provides many image operations and manipulations, there are several colors and variations that ultimately take away the Metro look and feel. So I would rather go for a desktop App in the Education/Entertainment category.

References

The following links, concepts and code snippets have been quite helpful, and I am thankful to the writers of all the following articles and snippets:

[1] http://www.codeproject.com/Articles/340859/Metro-Style-Lightweight-Image-Processing

[2] http://www.codeproject.com/Articles/354702/WPF-Slideshow

[3] http://social.msdn.microsoft.com/forums/en-US/wpf/thread/580fca03-d15b-48b2-9fb3-2e6fec8a2c68/

[4] http://langexplr.blogspot.in/2007/06/creating-fractal-images-using-c-30.html

[5] http://stackoverflow.com/questions/5275115/add-a-median-method-to-a-list

[6] http://stackoverflow.com/questions/3141692/c-sharp-standard-deviation-of-generic-list

[7] http://www.8bitavenue.com/2012/04/wavelet-edge-detector-in-c/

[8] http://www.codeproject.com/Articles/93642/Canny-Edge-Detection-in-C

[9] http://www.codeproject.com/Articles/99457/Edge-Based-Template-Matching

[10] http://www.c-sharpcorner.com/uploadfile/raj1979/dynamic-and-static-rectangle-in-wpf/

History

1. Article without CBIS and Template matching is released on 10.10.2012

2. Convolution and Edge detection with diagonal filter explained 11.10.2012

3. Template Matching with Normalized cross correlation Explained 11.10.2012

4. Sensors, Webcam, CommunicationFramework integrated and elaborated on 13.10.2012

gasshopper.iics is a group of like minded programmers and learners in codeproject. The basic objective is to keep in touch and be notified while a member contributes an article, to check out with technology and share what we know. We are the "students" of codeproject.

This group is managed by Rupam Das, an active author here. Other Notable members include Ranjan who extends his helping hands to invaluable number of authors in their articles and writes some great articles himself.

Rupam Das is the mentor of Grasshopper Network and the founder and CEO of Integrated Ideas Consultancy Services, a research consultancy firm in India. He has been part of projects in several technologies including Matlab, C#, Android, OpenCV, Drupal, Omnet++, legacy C, vb, gcc, NS-2, Arduino, Raspberry-PI. Of late he has made peace with the fact that he loves C# more than anything else but is still stuck in a legacy style of coding.

Rupam loves algorithm and prefers Image processing, Artificial Intelligence and Bio-medical Engineering over other technologies.

He is frustrated with his poor writing and "grammer" skills but happy that coding polishes these frustrations.
