Introduction

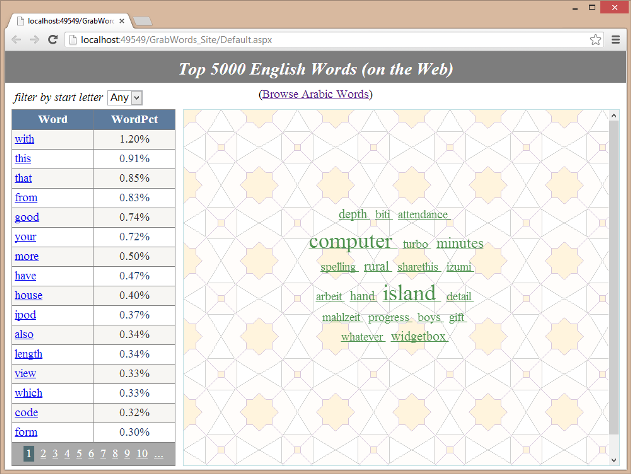

The purpose of this ASP.NET project is to collect and report the most widely used English (or Arabic) words found in random web pages.

The associated web site can be accessed from here.

The project uses several practical techniques: web crawling, database storage and retrieval, and a technique for starting and monitoring a long-running task. The last of these was described in a previous article by the author.

The project was initially developed to find and report the most widely used English words on the web. Since I had prior experience developing bilingual software, I decided to extend it to handle Arabic content. This is done without duplicating the application's web forms; more information is given in a later section of this write-up.

How the Application Works

The application is a website project (Microsoft Visual Studio 2010) consisting of three ASPX pages (web forms) and their C# code-behind classes.

The application consists of two independent parts:

Part 1: This part (FindWords.aspx) crawls the web collecting words and saves them to "Words.MDB" (Microsoft Access database).

This part uses an auxiliary class, GrabWords (in "GrabWords.cs" in the App_Code folder).

Part 2: This part (Default.aspx) uses a GridView object to query and display words from "Words.MDB".

The design of the "Default.aspx" page includes an iframe whose source is set to "WordCloud.aspx", which displays a set of randomly selected words as a cloud (i.e., each word's font size is proportional to its relative frequency). The frame also serves as the target for the hyperlinked words (it hosts content from wordreference.com).
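The code of WordCloud.aspx is not listed in this article. As a hedged sketch of the sizing idea, each word's font size can be made proportional to its count relative to the most frequent word shown, with a lower bound so rare words stay readable. The class name, method name, and the 10 px / 40 px limits are illustrative assumptions, not the project's actual values.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper (not from the project): maps word counts to font sizes.
public static class WordCloudSizing
{
    // Font size is proportional to count / maxCount, scaled to maxPx and
    // clamped below at minPx so that rare words remain visible.
    public static Dictionary<string, int> FontSizes(
        IDictionary<string, int> counts, int minPx = 10, int maxPx = 40)
    {
        var sizes = new Dictionary<string, int>();
        if (counts.Count == 0) return sizes;

        int maxCount = counts.Values.Max();
        foreach (var pair in counts)
        {
            int px = (int)Math.Round(maxPx * (double)pair.Value / maxCount);
            sizes[pair.Key] = Math.Max(minPx, px);
        }
        return sizes;
    }
}
```

The resulting sizes could then be emitted as inline `font-size` styles on the hyperlinked words.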

A good starting point for understanding the code of each web form is its Page_Load() method, which is executed every time the client (browser) requests the page.

Next, I will briefly explain some key parts from the code.

Finding Words

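```csharp
private void RunTask()
{
    int MaxWords = 20000;      // stop once at least this many distinct words are collected
    bool LangAr = false;       // language passed to GrabWords: false = English, true = Arabic
    int MinWordLength = 4;
    int MaxWordLength = 10;

    try
    {
        GrabWords gw = new GrabWords(DBPath, LangAr);
        Hashtable dtWords = null;
        int CurrentWordCount = 0;

        while (CurrentWordCount < MaxWords)
        {
            if (Cancel)
            {
                CompletionStatus = "Canceled";
                return;
            }

            // Report progress as the percentage of the target word count reached so far.
            int ProgressPct = (int)(100 * CurrentWordCount / (double)MaxWords);
            ProgressChanged(this, ProgressPct);

            // Each call crawls a few pages and returns the (growing) word table.
            dtWords = gw.BuildWordTable(MinWordLength, MaxWordLength);
            CurrentWordCount = dtWords.Count;
        }

        // Copy the collected words and their counts to the database.
        gw.FillDB(dtWords);
        ProgressChanged(this, 100);
    }
    catch (Exception ex)
    {
        CompletionStatus = ex.ToString();
        ProgressChanged(this, -1);   // -1 reports an error to the monitoring code
    }
}
```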

The preceding procedure is the server-side task that crawls the web (through the call to gw.BuildWordTable()). The words found are stored in the hash table dtWords, which is maintained as an instance member of the GrabWords object (gw) and returned by each call.

The method BuildWordTable() runs for only a short time so that the caller can report progress between calls. It is called repeatedly until at least MaxWords words have been collected.
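The technique for starting and monitoring the task comes from the author's earlier article and is not reproduced here. As a minimal stand-in, assuming a plain background thread, a volatile cancel flag, and a progress field that a page can poll, a loop shaped like RunTask() can run off the request thread as sketched below. The class CrawlTask, its members, and the delegate standing in for BuildWordTable() are hypothetical.

```csharp
using System;
using System.Threading;

// Hypothetical stand-in for the object that owns RunTask(); not the author's technique.
public class CrawlTask
{
    private volatile bool cancel;            // set from another request to stop the loop
    public volatile int Progress;            // last reported percentage; -1 means failure
    public string CompletionStatus = "";

    private readonly int maxWords;
    private readonly Func<int> buildWordTable;   // one short crawl step; returns the word count

    public CrawlTask(int maxWords, Func<int> buildWordTable)
    {
        this.maxWords = maxWords;
        this.buildWordTable = buildWordTable;
    }

    public void Cancel() => cancel = true;

    // Starts the loop on a background thread and returns immediately.
    public Thread Start()
    {
        var t = new Thread(Run) { IsBackground = true };
        t.Start();
        return t;
    }

    private void Run()
    {
        try
        {
            int current = 0;
            while (current < maxWords)
            {
                if (cancel) { CompletionStatus = "Canceled"; return; }
                Progress = (int)(100 * current / (double)maxWords);
                current = buildWordTable();
            }
            Progress = 100;
            CompletionStatus = "Done";
        }
        catch (Exception ex)
        {
            CompletionStatus = ex.ToString();
            Progress = -1;
        }
    }
}
```

A monitoring page would call Start() once, keep the CrawlTask instance (for example in application state), and read Progress on each poll.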

Note: The program variable MaxWords is set to 20000, so the hash table dtWords (and the corresponding table in the database) will have at least that many rows. To limit the displayed data to the top 5000 words (or fewer when filtering by a letter), the application executes a "select ... from qWords", where "qWords" is a query object stored in the database.
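The definition of the qWords query is not given. Assuming it orders words by descending count and keeps the first 5000 (optionally only those starting with a chosen letter), an in-memory equivalent over the dtWords hash table could be written with LINQ as follows. The class and method names are hypothetical; the real selection happens in the Access query.

```csharp
using System.Collections;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory analogue of the qWords query.
public static class TopWords
{
    // Returns the `limit` most frequent words, optionally only those whose
    // first character equals `firstLetter`; ties are broken alphabetically.
    public static List<KeyValuePair<string, int>> Select(
        Hashtable dtWords, int limit = 5000, char? firstLetter = null)
    {
        return dtWords.Cast<DictionaryEntry>()
            .Select(e => new KeyValuePair<string, int>((string)e.Key, (int)e.Value))
            .Where(p => firstLetter == null || (p.Key.Length > 0 && p.Key[0] == firstLetter))
            .OrderByDescending(p => p.Value)
            .ThenBy(p => p.Key, System.StringComparer.Ordinal)
            .Take(limit)
            .ToList();
    }
}
```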

Following the completion of the while loop, we execute the call to gw.FillDB(dtWords) to copy the content of the hash table dtWords to a database table.
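FillDB() itself is not listed. One way to prepare the hash table for a bulk insert, sketched here under the assumption of a two-column table whose column names (Word, Freq) are hypothetical, is to copy it into a System.Data.DataTable; because the new rows are in the Added state, an OleDbDataAdapter with a suitable InsertCommand could then write them to the Access table.

```csharp
using System;
using System.Collections;
using System.Data;

// Hypothetical helper (not the project's FillDB): Hashtable -> DataTable.
public static class WordTableExport
{
    public static DataTable ToDataTable(Hashtable dtWords)
    {
        var table = new DataTable("Words");
        table.Columns.Add("Word", typeof(string));   // assumed column name
        table.Columns.Add("Freq", typeof(int));      // assumed column name
        table.PrimaryKey = new[] { table.Columns["Word"] };   // each word appears once

        // Rows added this way have RowState == Added until AcceptChanges() is called.
        foreach (DictionaryEntry entry in dtWords)
        {
            table.Rows.Add((string)entry.Key, (int)entry.Value);
        }
        return table;
    }
}
```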

The BuildWordTable() method is shown below:

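```csharp
public Hashtable BuildWordTable(int MinWordLength, int MaxWordLength)
{
    // Once enough words are collected, reseed the search terms from the words found so far.
    if ((dtWords.Count > 1000) && (searchWords.Count < 100))
    {
        searchWords = new ArrayList(dtWords.Keys);
    }

    string searchWord = (string)searchWords[rand.Next(searchWords.Count)];

    // Stage 1: query the search engine for a random word, starting at a random result offset.
    // (A Google query, paged with "&start=" + rand.Next(200), can be used instead.)
    // The word is URL-encoded so that Arabic search terms are sent correctly.
    string SearchUrl = "http://www.bing.com/search?q=" +
        Uri.EscapeDataString(searchWord) + "&first=" + rand.Next(200);

    string searchData = FetchURL(SearchUrl);
    if (searchData.StartsWith("Error"))
    {
        return dtWords;
    }

    List<string> urlSet = GrabURLs(searchData);
    if (urlSet.Count == 0)
    {
        return dtWords;   // nothing to crawl; indexing an empty list would throw
    }

    // Stage 2: visit up to four randomly chosen, not-yet-visited result pages.
    int count = 4;
    for (int i = 0; i < count; i++)
    {
        int randLoc = rand.Next(urlSet.Count);
        string url = urlSet[randLoc];
        if (visitedURLs.Contains(url)) continue;
        visitedURLs.Add(url);

        searchData = FetchURL(url);
        if (!searchData.StartsWith("Error"))
        {
            // msg is null on success, or the exception text on failure.
            string msg = GetWords(searchData, dtWords, MinWordLength, MaxWordLength);
        }
    }

    return dtWords;
}
```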

In BuildWordTable(), we access various web sites and fetch HTML content by calling the auxiliary method FetchURL(url).

The process has two stages. In the first stage, the call FetchURL(SearchUrl) fetches the results page for a search query sent to Bing (or Google). The returned content (searchData) is then processed by GrabURLs(searchData), which extracts a set of URLs (urlSet). In the second stage (the for-loop), up to four randomly chosen URLs from urlSet that have not been visited before are fetched, again via FetchURL(url). This time, however, the content is passed to GetWords(), which extracts words of the selected language and adds them to the dtWords hash table.
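GrabURLs() is not shown in the article. A minimal regex-based sketch, assuming result links appear as absolute href="http..." attributes and that links back to the search engine itself should be skipped, might look like this. The class name, method name, and filtering rule are assumptions; a parser-based version could instead select the <a> nodes with HtmlAgilityPack.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical stand-in for GrabURLs(): extracts result links from a search page.
public static class UrlExtractor
{
    // Matches absolute http/https URLs inside href attributes (double or single quotes).
    private static readonly Regex HrefRegex = new Regex(
        @"href\s*=\s*[""'](https?://[^""'\s]+)[""']",
        RegexOptions.IgnoreCase | RegexOptions.Compiled);

    // Returns distinct URLs in page order, skipping hosts that contain excludeHost.
    public static List<string> GrabUrlsSketch(string html, string excludeHost = "bing.com")
    {
        var urls = new List<string>();
        var seen = new HashSet<string>();
        foreach (Match m in HrefRegex.Matches(html))
        {
            // Attribute values are HTML-encoded (e.g. "&amp;"), so decode them first.
            string url = System.Net.WebUtility.HtmlDecode(m.Groups[1].Value);
            if (!Uri.TryCreate(url, UriKind.Absolute, out Uri uri)) continue;
            if (uri.Host.Contains(excludeHost)) continue;
            if (seen.Add(url)) urls.Add(url);
        }
        return urls;
    }
}
```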

It is also worth saying a few words about the GetWords() method, given below:

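```csharp
string GetWords(string htmlData, Hashtable dtWords, int MinWordLength, int MaxWordLength)
{
    try
    {
        htmlData = htmlData.ToLower();

        // Remove <style> and <script> blocks so their contents are not counted as words.
        // stripTag() is assumed to return the stripped HTML; its result must be assigned
        // back because .NET strings are immutable.
        htmlData = stripTag(htmlData, "style");
        htmlData = stripTag(htmlData, "script");

        htmlDoc.LoadHtml(htmlData);
        HtmlAgilityPack.HtmlNode body = htmlDoc.DocumentNode.SelectSingleNode("//body");
        string Cont = (body ?? htmlDoc.DocumentNode).InnerText;   // fall back if there is no <body>

        // Word separators; whitespace is included because InnerText keeps line breaks and tabs.
        char[] sep = { ' ', '\t', '\r', '\n', '"', '\\', '>', '<', '\'', ':', '/', '.', ',', ')', ';', '(' };
        string[] words = Cont.Split(sep, StringSplitOptions.RemoveEmptyEntries);

        for (int i = 0; i < words.Length; i++)
        {
            string word = words[i];
            if (word.Length < MinWordLength || word.Length > MaxWordLength) continue;

            // Keep only words made entirely of letters of the selected language.
            if (!IsLanguageWord(word)) continue;

            if (LangAr)
            {
                // Remove the Arabic definite article "ال" ("al") and re-check the length.
                if (word.StartsWith("ال", StringComparison.Ordinal))
                {
                    word = word.Substring(2);
                    if (word.Length < MinWordLength) continue;
                }
            }
            else if (badWords.Contains(word))
            {
                continue;   // skip words on the English exclusion list
            }

            // Increment the word's count, or add it with a count of 1.
            if (dtWords.ContainsKey(word))
                dtWords[word] = 1 + (int)dtWords[word];
            else
                dtWords.Add(word, 1);
        }

        return null;   // null means success
    }
    catch (Exception e)
    {
        return e.ToString();
    }
}

// Replaces the original goto-based check: true if every character lies in the letter range
// of the selected language ('a'..'z' for English, 'أ' U+0623 .. 'ي' U+064A for Arabic).
bool IsLanguageWord(string word)
{
    foreach (char c in word)
    {
        if (LangAr ? (c < 'أ' || c > 'ي') : (c < 'a' || c > 'z')) return false;
    }
    return true;
}
```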

The object htmlDoc is of type HtmlAgilityPack.HtmlDocument. It is used mainly to get the text of the HTML page (the InnerText of all tags within the <body> tag), using the code:

```csharp
htmlDoc.LoadHtml(htmlData);
string Cont = htmlDoc.DocumentNode.SelectSingleNode("//body").InnerText;
```
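Before parsing, GetWords() calls stripTag() to drop <style> and <script> content, but stripTag() itself is not listed. A hedged, regex-based sketch that returns a new string (rather than modifying its argument, which .NET strings do not allow) is shown below. The name StripTagSketch is hypothetical, and a regex is only an approximation of what an HTML parser would do.

```csharp
using System.Text.RegularExpressions;

// Hypothetical stand-in for stripTag().
public static class HtmlCleanup
{
    // Removes every <tag ...>...</tag> element, including its content, replacing it
    // with a space so that the words on either side do not run together.
    public static string StripTagSketch(string html, string tag)
    {
        string t = Regex.Escape(tag);
        string pattern = "<" + t + @"\b[^>]*>.*?</" + t + @"\s*>";
        return Regex.Replace(html, pattern, " ",
            RegexOptions.IgnoreCase | RegexOptions.Singleline);
    }
}
```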

Arabization

The approach taken here is to make minimal modifications to the program code and the external data sources.

To start, we simply duplicated "Words.mdb" (with no design changes) as "Words_Ar.mdb" to store Arabic words. As a result, little or no change is needed in the program code that inserts and fetches data.

As stated earlier, the project consists of two distinct parts: finding words (FindWords.aspx) and displaying results (Default.aspx). Both web forms use a flag, LangAr. The flag is also passed on to the GrabWords class (as a constructor argument, as seen in RunTask()) and made available to WordCloud.aspx.cs.

The following code fragment (in Page_Load() of Default.aspx) shows how the proper database is chosen and how the gridView object is set up for RTL (right-to-left) display and a right-floated position.

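```csharp
string DBPath = Server.MapPath("") + "/Words.mdb";
if (LangAr)
{
    DBPath = Server.MapPath("") + "/Words_Ar.mdb";   // Arabic words are kept in a copy of the database
    gridView.Attributes.Add("dir", "rtl");           // lay out the grid right-to-left
    gridView.CssClass = "gridFloatRight";            // CSS class for the floated position
}
```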

The language flag is also used to control the text (Arabic or English) of certain design elements of the web page such as the page header and the caption of the drop-down list. The flag is also used to control the CSS direction property for certain HTML div tags, as in:

<div <% if (LangAr) { %> style="direction:rtl;" <% } %> >

...

</div>

The following summarizes some other issues relevant to the Arabic language.

- Building the letter-filter drop-down list:

This is handled by the following code fragment in the Page_Load() method of the Default.aspx page.

```csharp
if (!Page.IsPostBack)
{
    if (LangAr)
    {
        lstLetter.Items.Add("الكل");   // "All"
        for (int i = 0; i < AR_letterList.Length; i++)
            lstLetter.Items.Add(AR_letterList[i].ToString());
    }
    else
    {
        lstLetter.Items.Add("Any");
        for (int i = 0; i < letterList.Length; i++)
            lstLetter.Items.Add(char.ToUpper(letterList[i]).ToString());
    }
}
```

- Arabizing column headers of the gridView object:

This is handled by the following code fragment in the gridView_RowDataBound() method.

```csharp
protected void gridView_RowDataBound(object sender, GridViewRowEventArgs e)
{
    if (e.Row.RowType == DataControlRowType.Header)
    {
        e.Row.Cells[0].Width = 120;
        if (LangAr)
        {
            e.Row.Cells[0].Text = "الكلمة";   // "Word"
            e.Row.Cells[1].Text = "النسبة";   // "Ratio"
        }
    }
}
```

- Other language considerations:

In Arabic text, the definite article "al" ("ال", meaning "the") is written joined to the word that follows it. The procedure that collects words therefore includes a step that removes a leading "al". This is not a perfect solution, because in some words "al" is an intrinsic part of the word. A similar problem arises with words beginning with the letter "Baa" ("ب"), which can be either an intrinsic part of the word or an attached preposition. These problems, which this project does not resolve, could be addressed by looking up words in an Arabic lexicon.
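The lexicon lookup suggested above is not implemented in the project. A minimal sketch, assuming a set of known Arabic words is available (the lexicon, the class name, and the prefix list are hypothetical), removes "ال" or "ب" only when the word as written is not in the lexicon but the remaining stem is.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical lexicon-based prefix removal; not part of the original project.
public static class ArabicPrefix
{
    // Prefixes that may or may not belong to the word: the article "al" and the letter "baa".
    private static readonly string[] Prefixes = { "ال", "ب" };

    public static string Normalize(string word, ISet<string> lexicon)
    {
        if (lexicon.Contains(word)) return word;   // known as written: keep the prefix
        foreach (string p in Prefixes)
        {
            if (word.StartsWith(p, StringComparison.Ordinal) && word.Length > p.Length)
            {
                string stem = word.Substring(p.Length);
                if (lexicon.Contains(stem)) return stem;   // prefix was detachable
            }
        }
        return word;   // unknown word: leave unchanged
    }
}
```

Words such as "بيت" ("house"), where the initial "ب" is intrinsic, survive because they are found in the lexicon as written.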

Another consideration relates to filtering by first letter. In Arabic, the letter "alif" appears in several forms (with or without hamza, for example "ا", "أ", "إ"). In the current implementation, the "alif" filter generates an OR search criterion covering only two of these forms.
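One way to cover every alif form is to build the OR criterion from a list of variants. The sketch below assumes a text column named Word and OLE DB positional (?) parameters with % as the LIKE wildcard; the column name, class and method names, and the exact set of forms are assumptions rather than the project's code.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical builder for the first-letter filter.
public static class LetterFilter
{
    // Bare alif plus the hamza-above, hamza-below, and madda forms.
    private static readonly char[] AlifForms = { 'ا', 'أ', 'إ', 'آ' };

    // Returns "(Word LIKE ? OR ...)" and one parameter value per letter form.
    public static (string Clause, List<string> Values) FirstLetterCriterion(char letter)
    {
        char[] forms = AlifForms.Contains(letter) ? AlifForms : new[] { letter };
        string clause = "(" + string.Join(" OR ", forms.Select(_ => "Word LIKE ?")) + ")";
        List<string> values = forms.Select(f => f + "%").ToList();
        return (clause, values);
    }
}
```

Passing the values as parameters, rather than concatenating them into the SQL text, also avoids quoting problems with user-selected letters.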

History

- 10th June, 2013: Version 1.0
