Glossary

- SO(F)M - Self-Organizing (Feature) Maps

- (A)NN – (Artificial) Neural Network

Introduction

Self-Organizing Feature maps are competitive neural networks in which neurons are organized in a two-dimensional grid (in the most simple case) representing the feature space. According to the learning rule, vectors that are similar to each other in the multidimensional space will be similar in the two-dimensional space. SOFMs are often used just to visualize an n-dimensional space, but its main application is data classification.

Data classification

Suppose we have a set of n-dimensional vectors describing some objects (for example, cars). Each vector element is a parameter of an object (in the case with cars, for instance – width, height, weight, the type of the engine, power, gasoline tank volume, and so on). Each of such parameters is different for different objects. If you need to determine the type of a car by looking only on such vectors, then using SOFMs, you can do it easily.

The architecture of SOFM

The SOFM NN consists of two layers of neurons (Fig. 1). The first layer is not actually a neurons layer, it only receives the input data and transfers it to the second layer. Let us consider the simplest case, when neurons of the second layer are combined into a two-dimensional grid. Other structures, like three-dimensional spheres, cylinders, etc., are out of the scope of this article. Each neuron of the third layer connects with each neuron of the second layer. The number of neurons in the second layer can be chosen arbitrarily, and differs from task to task. Each neuron of the second layer has its own weights vector whose dimension is equal to the dimension of the input layer. The neurons are connected to adjacent neurons by a neighborhood relation, which dictates the topology or structure of the map. Such a neighborhood relation is assigned by a special function called a topological neighborhood (see below).

Fig. 1. The architecture of an SOFM NN

Learning rule

In the beginning of the functioning, all weights vectors of the second layer’s neurons are set to random values. After that, some input-vector from the set of learning vectors is selected and set to the input of the NN. At this step, the differences between the input vector and all neurons vectors are calculated as follows:

Where, i and j are the indices of neurons in the output layer. After that, the NN chooses the winner-neuron, i.e., the neuron whose weights vector is the most similar to the input vector:

Here, k1 and k2 are indices of the winner-neuron. Now, we need to make a correction of the weights vectors of the winner and all the adjacent neurons. The neighborhood of a neuron is determined by a topological neighborhood function, as follows:

Here, r is the distance to the winner-neuron:

s is a function dictating the space of the neighborhood. In the beginning of the functioning, it involves almost the whole space of the grid, but with time, the value of s decreases. As the attraction function equal to 1, r equals to zero. Shown here is the simplest form of a topological neighborhood function, but in real tasks, its modifications are often used:

| - "Mexican hat"; |

| - "French hat" - a is a priory assumed constant |

At the next step, after calculating the topological neighborhood function for each neuron, the weights of all the neurons are updated as follows:

Here a(t) is a learning rate function that also decreases with time. If a neuron is a winner or adjacent to the winner, then its weight vector is updated, or remains unchanged otherwise. On each step, the NN determines the neuron whose weights vector is the most similar to the input vector, and corrects it and its neighbors’ weights vectors to make them closer to the input vector (Fig. 2).

Fig. 2. Updating the winner-neuron and its neighbors towards the input vector marked with ×. The solid and dashed lines correspond to the situations before and after updating, respectively

Often, each input vector from the training set is presented to the NN, and learning continues either some fixed number of cycles or while the difference between an input and the weights vectors reach some epsilon value. The difference between adjacent neurons decreases with time, and hence they organize into groups (maps) which correspond to one of the classes from the learning set.

Playing with the demo

Before we start, I need to say a few words about data that can be handled by the demo project. It must be tabled data. Each row of the table represents an object. The items on the row are variables of the data set. The important thing is that every data sample has the same set of variables. Thus, each column of the table holds all the values for a variable. The last variable in the row corresponds to the class name; if you do not know it, just add an additional space character. So, let us begin.

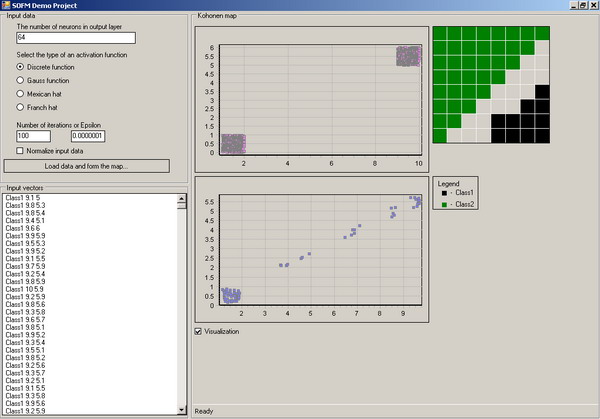

- Run the demo project – SOFMTest.exe.

- Set number of neurons in the second layer equal to 100. Set the number of iterations to 10. The value of the epsilon must be less than 0.01. Select the discrete topology neighborhood function and leave the "Normalize input data" checkbox unchecked.

- First of all, let’s consider the two-classes example. Press the "Load data and form the map" button and select the 2classtest.data file.

- The map building will be in progress. You can see the data distribution on the top graph (Fig. 3a), and the process of neuron weights modification during the learning process (Fig. 3b).

- When learning is finished, you will see the color map (Fig. 4) which shows how the classification process was realized.

Note: You can uncheck the visualization checkbox to accelerate the calculations.

Fig. 3. The two-classes example. Each object from the data set consists of two parameters; the x-axis corresponds to the first parameter and the y-axis corresponds to the second one. a) Input data distribution. b) Weights changing process.

Fig. 4. Map obtained for the two-clasess example. Each rectangle corresponds to a neuron from the second layer. Black neurons – first class, green neurons – second class.

Additionally, you can try how SOFM works on a standard testing data set – the well-know Fisher’s Iris data set. The data set consists of measurements from 150 Iris flowers: 50 Iris-Setosa, 50 Iris-Versicolor, and 50 Iris-Virginica (Fig. 5.).

Fig. 5. The Irises. a) Setosa b) Versicolor c) Virginica.

Set the number of second layer neurons to 64, set the "Normalize input data" checkbox to checked position (all other parameters remain same), and press the "Load data and form map" button. Then, choose the iris.data file. After some calculations (you can uncheck the "Visualization" checkbox to perform them faster), the map will be constructed (Fig. 6). Each color on it corresponds to one of the Iris classes.

Fig. 6. Map obtained for the Iris data example. Each rectangle corresponds to a neuron from the second layer. Black neurons – first class (Setosa), red neurons – second class (Versicolor), blue neurons – third class (Virginica).

Diving into the code

Let’s examine how it works. There are two main classes in the object model of SOFM (Fig. 7): Neuron and NeuralNetwork.

Fig. 7. The class diagram. SOFM.Neuron and SOFM.NeuralNetwork.

Each Neuron class has a Coordinate field, which shows the neuron’s position in two-dimensinal greed. The Weights field is a weights vector of generic type, and contains some double values. The Neuron class can perform the ModifyWeights operation, which modifies the weights vector of a neuron according to the selected topological neighborhood function (h) type, distance to the winner, and the number of the current iteration.

The NeuralNetwork class contains a two-dimensional grid of neurons, and can perform a number of operations:

public NeuralNetwork(int m, int numberOfIterations, double epsilon, Functions f)

public void ReadDataFromFile(string inputDataFileName)

public void StartLearning()

All patterns from a learning set presents to NN input numberOfIterations times or while the weights vector modification is minor (currentEpsilon <= Epsilon).

public void StartLearning()

{

int iterations = 0;

while (iterations<=numberOfIterations && currentEpsilon > epsilon)

{

List <List<double>>patternsToLearn = new List<List<double>>(numberOfPatterns);

foreach (List<double> pArray in patterns)

patternsToLearn.Add(pArray);

Random randomPattern = new Random();

List<double> pattern = new List<double>(inputLayerDimension);

for (int i = 0; i < numberOfPatterns; i++)

{

pattern = patternsToLearn[randomPattern.Next(numberOfPatterns - i)];

StartEpoch(pattern);

patternsToLearn.Remove(pattern);

}

iterations++;

OnEndIterationEvent(new EventArgs());

}

}

The private StartEpoch() method responds for finding the winner neuron and for starting the process of weights modification through all the outputLayer neurons.

private void StartEpoch(List<double> pattern)

{

Neuron Winner = this.FindWinner(pattern);

currentEpsilon = 0;

for (int i = 0; i < outputLayerDimension; i++)

for (int j = 0; j < outputLayerDimension; j++)

{

currentEpsilon +=

outputLayer[i, j].ModifyWights(pattern, Winner.Coordinate,

currentIteration, function);

}

currentIteration++;

currentEpsilon = Math.Abs(currentEpsilon /

(outputLayerDimension * outputLayerDimension));

EndEpochEventArgs e = new EndEpochEventArgs();

OnEndEpochEvent(e);

}

So, an epoch is a process of presenting one of the patterns to an NN input, and an iteration is a set of epochs when all patterns from a training set are presented to an NN input. Each time an epoch or iteration ends, the corresponding event is raised.

protected virtual void OnEndEpochEvent(EndEpochEventArgs e)

{

if (EndEpochEvent != null)

EndEpochEvent(this, e);

}

protected virtual void OnEndIterationEvent(EventArgs e)

{

if (EndIterationEvent != null)

EndIterationEvent(this, e);

}

The ColorSOFM() method returns the colored matrix, where each element corresponds to a neuron from an output layer. Colors for map painting are choosen randomly.

How to use an SOFM

To use SOFM classes in your program, first of all, you have to add a reference to the sofm.dll assembly. Then, you need to add the following line to the using section:

using SOFM;

Next, you have to create an instance of the SOFM.NeuralNetwork class like:

SOFM.NeuralNetwork nn = new NeuralNetwork(NumberOfCards,

NumberOfIterations, Epsilon, function);

Then, add subscribers to the NeuralNetwork events (if needed):

nn.EndEpochEvent += new EndEpochEventHandler(nn_EndEpochEvent);

nn.EndIterationEvent += new EndIterationEventHandler(nn_EndIterationEvent);

And, define the Normalize property of instance (ether to normalize or not input data). Then, read data from the file:

nn.ReadDataFromFile(ofd.FileName);

And start the learning process:

nn.StartLearning();

After learning is finished, the colored map can be obtained like follows:

System.Drawing.Color[,] colorMatrix = nn.ColorSOFM();

That’s all.

Last word

An SOFM can divide the input data set to classes, only in cases when input vectors from different classes are not highly correlated (are not too similar). Only the basic concepts of SOFMs are provided here. For more detailed description please refer to one of the books from references section.

References

The Steema TeeChart control was used in the demo project for graphs plotting. It is a great control migrated to .NET from Delphi, the lite version is for free. It can be downloaded from the Steema Software site.

There are some links for additional study on SOFMs:

General

General  News

News  Suggestion

Suggestion  Question

Question  Bug

Bug  Answer

Answer  Joke

Joke  Praise

Praise  Rant

Rant  Admin

Admin

Shame on me

Shame on me  . Sorry.

. Sorry.