In this article, we will learn how to translate Elastic statements into a fully operational C# CRUD app.

Introduction

In the first part, we learned how to set up, configure, and run a set of Elastic statements. Now it’s time to translate them into a fully operational C# CRUD app. Let’s get it done.

Creating a Demo App

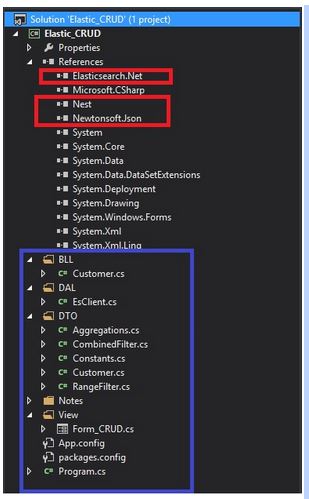

First step, create a new Windows Form solution. It is available for download here, and it looks like this:

The references highlighted in red are the most important ones, and you can get them via NuGet. As the names suggest, the NEST and Elasticsearch DLLs are the .NET abstraction for Elasticsearch.

At the time I wrote this article, the official documentation was visibly outdated. Either way, you can access it at http://nest.azurewebsites.net/.

The blue area shows how I decided to organize the project. Pretty standard: BLL (Business Logic Layer) holds the business rules, DAL is the Data Access Layer, DTO contains the entities and View holds our Windows Form.

Connecting with Elastic via NEST

Following the structure shown above, our data access layer is as simple as this:

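```csharp
using System;
using Nest;

namespace Elastic_CRUD.DAL
{
    public class EsClient
    {
        private const string ES_URI = "http://localhost:9200";

        private ConnectionSettings _settings;

        // The NEST client used by the BLL for every CRUD call.
        public ElasticClient Current { get; set; }

        public EsClient()
        {
            var node = new Uri(ES_URI);

            _settings = new ConnectionSettings(node);
            // Index used whenever a request does not name one explicitly.
            _settings.SetDefaultIndex(DTO.Constants.DEFAULT_INDEX);
            // Maps the Customer class to the "Customer_Info" type.
            _settings.MapDefaultTypeNames(m => m.Add(typeof(DTO.Customer),
                DTO.Constants.DEFAULT_INDEX_TYPE));

            Current = new ElasticClient(_settings);
            // Puts the mapping built from the [ElasticType]/[ElasticProperty] attributes.
            Current.Map<DTO.Customer>(m => m.MapFromAttributes());
        }
    }
}
```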

The property called “Current” is the abstraction of the Elastic REST client, and all CRUD commands go through it. Another important part is the settings, so I have grouped all the configuration keys into a simple class:

```csharp
public static class Constants
{
    public const string DEFAULT_INDEX = "crud_sample";
    public const string DEFAULT_INDEX_TYPE = "Customer_Info";
    public const string BASIC_DATE = "yyyyMMdd";
}
```

As you can see, all the names refer to the storage that we have created in the first part of this article.
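Under the hood, NEST talks to Elasticsearch over HTTP, and in the Elasticsearch 1.x era a single document is addressed as /{index}/{type}/{id}. The sketch below uses a hypothetical EsPaths helper (not part of NEST or the downloadable project) to show where each constant ends up in that path; it assumes the default localhost:9200 endpoint used by EsClient.

```csharp
using System;

// Hypothetical helper, not part of NEST or the sample project: shows where the
// constants land in the Elasticsearch 1.x document path /{index}/{type}/{id}.
public static class EsPaths
{
    public const string EsUri = "http://localhost:9200";
    public const string DefaultIndex = "crud_sample";       // Constants.DEFAULT_INDEX
    public const string DefaultIndexType = "Customer_Info"; // Constants.DEFAULT_INDEX_TYPE

    // URL of a single document (the target of GET, PUT and DELETE requests).
    public static Uri Document(string id)
    {
        var baseUri = new Uri(EsUri);
        // Escape the id so characters such as '/' cannot break the path.
        string path = $"{DefaultIndex}/{DefaultIndexType}/{Uri.EscapeDataString(id.Trim())}";
        return new Uri(baseUri, path);
    }
}
```

With these values, EsPaths.Document("42") points at http://localhost:9200/crud_sample/Customer_Info/42, which is the kind of document the Index() and Delete() calls in the next section work on.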

Group 1 (Index, Update and Delete)

We are going to replicate the Elastic statements that we learned previously in this WinForms app. To organize it, I ended up with one tab for each group of features, five in total.

The first tab is responsible for adding, updating and deleting a customer. Given that, the customer entity is a very important part, and it must be properly mapped using NEST attributes, as shown below:

```csharp
using Nest;

// Maps this class to the "Customer_Info" type and each property to its field.
[ElasticType(Name = "Customer_Info")]
public class Customer
{
    [ElasticProperty(Name = "_id", NumericType = NumberType.Long)]
    public int Id { get; set; }

    [ElasticProperty(Name = "name", Index = FieldIndexOption.NotAnalyzed)]
    public string Name { get; set; }

    [ElasticProperty(Name = "age", NumericType = NumberType.Integer)]
    public int Age { get; set; }

    [ElasticProperty(Name = "birthday", Type = FieldType.Date, DateFormat = "basic_date")]
    public string Birthday { get; set; }

    [ElasticProperty(Name = "hasChildren")]
    public bool HasChildren { get; set; }

    [ElasticProperty(Name = "enrollmentFee", NumericType = NumberType.Double)]
    public double EnrollmentFee { get; set; }

    [ElasticProperty(Name = "opinion", Index = FieldIndexOption.NotAnalyzed)]
    public string Opinion { get; set; }
}
```

Now that we have the REST connection and our customer entity fully mapped, it’s time to write some logic. Adding and updating a record use almost the same logic: Elastic is clever enough to decide whether it’s a new record or an update by checking whether the given ID already exists.

```csharp
public bool Index(DTO.Customer customer)
{
    // Creates the document if the ID is new, otherwise replaces it.
    var response = _EsClientDAL.Current.Index(customer,
        c => c.Type(DTO.Constants.DEFAULT_INDEX_TYPE));

    // Created is false for an update, so only treat it as a failure
    // when the server also reported an error.
    if (!response.Created && response.ServerError != null)
        throw new Exception(response.ServerError.Error);

    return true;
}
```

The method responsible for that in the API is called Index() because, when saving a document into a Lucene store, the correct term is “indexing”.

Note that we are using our constant index type (“Customer_Info”) to tell NEST where the customer will be added or updated. Roughly speaking, this index type is our table in Elastic’s world.
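To make the create-or-update behaviour concrete, here is a small in-memory analogy. InMemoryIndexType<T> is a hypothetical class standing in for one index type (the “table”): indexing an unknown ID creates a document, while indexing a known ID replaces it and bumps a version counter. That create/update distinction is what the Created check in Index() relies on. The sketch does not talk to Elasticsearch.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in for one index type, illustrating the
// create-or-replace semantics of indexing a document by ID.
public class InMemoryIndexType<T>
{
    private readonly Dictionary<string, T> _docs = new Dictionary<string, T>();
    private readonly Dictionary<string, long> _versions = new Dictionary<string, long>();

    // Returns true when the ID was new (a "create") and false when an existing
    // document was replaced (an "update"), mirroring the Created flag.
    public bool Index(string id, T document)
    {
        bool created = !_docs.ContainsKey(id);
        _docs[id] = document;
        // Versions start at 1 and grow by one on every replacement.
        _versions[id] = created ? 1 : _versions[id] + 1;
        return created;
    }

    public T Get(string id) => _docs[id];

    public long Version(string id) => _versions[id];
}
```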

Another thing you will see throughout NEST is lambda notation; nearly all NEST API methods work through it. Nowadays, using lambdas is far from breaking news, but it’s not as straightforward as regular C# notation.

If, while reading, you find the syntax confusing, I strongly suggest a quick search here in the Code Project community; there are plenty of tutorials on how to use lambdas.

Deleting is the simplest one:

```csharp
public bool Delete(string id)
{
    // Found is false when no document with that ID existed.
    return _EsClientDAL.Current
        .Delete(new Nest.DeleteRequest(DTO.Constants.DEFAULT_INDEX,
                                       DTO.Constants.DEFAULT_INDEX_TYPE,
                                       id.Trim()))
        .Found;
}
```

It is quite similar to the Index() method, but here only the customer ID is required. And, of course, it calls the Delete() method.

Group 2 (Standard Queries)

As I mentioned previously, Elastic is really resourceful when it comes to querying, so it’s not possible to cover all the advanced queries here. However, after playing with the following samples, you will understand the basics and can then start writing queries for your own use cases.

The second tab holds three queries:

- Search by ID: it takes a valid ID and uses only that as the search criterion:

```csharp
public List<DTO.Customer> QueryById(string id)
{
    // Term query on the document ID.
    QueryContainer queryById = new TermQuery() { Field = "_id", Value = id.Trim() };

    var hits = _EsClientDAL.Current
        .Search<DTO.Customer>(s => s.Query(q => q.MatchAll() && queryById))
        .Hits;

    List<DTO.Customer> typedList = hits.Select(hit => ConvertHitToCustumer(hit)).ToList();

    return typedList;
}

private DTO.Customer ConvertHitToCustumer(IHit<DTO.Customer> hit)
{
    // The ID comes back as hit metadata, so copy it into the entity.
    hit.Source.Id = Convert.ToInt32(hit.Id);
    return hit.Source;
}
```

Let’s take it slow here.

First, it’s necessary to create a NEST QueryContainer object, specifying the field we want to use as the search criterion. In this case, the customer ID.

This query object is used as the parameter of the Search() method in order to get the Hits (the result set returned from Elastic).

The last step is to convert the Hits into our known Customer entity via the ConvertHitToCustumer method.

I could have done all of it in one single method, but I have decided to split it up instead. The reason is to demonstrate that you guys have several options to organize your code, other than putting it all together in an unreadable Lambda statement.

- Querying using all fields, with the “AND” operator to combine them:

```csharp
public List<DTO.Customer> QueryByAllFieldsUsingAnd(DTO.Customer customer)
{
    IQueryContainer query = CreateSimpleQueryUsingAnd(customer);

    var hits = _EsClientDAL.Current
        .Search<DTO.Customer>(s => s.Query(query))
        .Hits;

    List<DTO.Customer> typedList = hits.Select(hit => ConvertHitToCustumer(hit)).ToList();

    return typedList;
}

private IQueryContainer CreateSimpleQueryUsingAnd(DTO.Customer customer)
{
    // Starts empty; each &= adds one more term clause that must match.
    QueryContainer queryContainer = null;

    queryContainer &= new TermQuery() { Field = "_id", Value = customer.Id };
    queryContainer &= new TermQuery() { Field = "name", Value = customer.Name };
    queryContainer &= new TermQuery() { Field = "age", Value = customer.Age };
    queryContainer &= new TermQuery() { Field = "birthday", Value = customer.Birthday };
    queryContainer &= new TermQuery() { Field = "hasChildren", Value = customer.HasChildren };
    queryContainer &= new TermQuery() { Field = "enrollmentFee", Value = customer.EnrollmentFee };

    return queryContainer;
}
```

Same idea as the ID search, but now our query object is created by the CreateSimpleQueryUsingAnd method. It receives a customer entity and converts it into a NEST QueryContainer object.

Note that we are concatenating all fields using “&=”: NEST overloads the & operator on QueryContainer, so each &= adds another clause that must match (“AND”).

- It follows the previous example, but combines the fields with the OR operator (“|=”) instead:

```csharp
public List<DTO.Customer> QueryByAllFieldsUsingOr(DTO.Customer customer)
{
    IQueryContainer query = CreateSimpleQueryUsingOr(customer);

    var hits = _EsClientDAL.Current
        .Search<DTO.Customer>(s => s.Query(query))
        .Hits;

    List<DTO.Customer> typedList = hits.Select(hit => ConvertHitToCustumer(hit)).ToList();

    return typedList;
}

private IQueryContainer CreateSimpleQueryUsingOr(DTO.Customer customer)
{
    // Starts empty; each |= adds one more alternative term clause.
    QueryContainer queryContainer = null;

    queryContainer |= new TermQuery() { Field = "_id", Value = customer.Id };
    queryContainer |= new TermQuery() { Field = "name", Value = customer.Name };
    queryContainer |= new TermQuery() { Field = "age", Value = customer.Age };
    queryContainer |= new TermQuery() { Field = "birthday", Value = customer.Birthday };
    queryContainer |= new TermQuery() { Field = "hasChildren", Value = customer.HasChildren };
    queryContainer |= new TermQuery() { Field = "enrollmentFee", Value = customer.EnrollmentFee };

    return queryContainer;
}
```
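The “&=” and “|=” lines accumulate clauses one at a time, starting from a null container. The hypothetical PredicateComposer below reproduces that accumulation pattern on plain Func<T, bool> predicates rather than NEST QueryContainer objects, so it can be run without a cluster; it is an analogy for the matching logic, not NEST's implementation.

```csharp
using System;

// Hypothetical analogy of the "&=" / "|=" accumulation, on plain predicates.
public static class PredicateComposer
{
    // A null accumulator means "no clause yet", so the first clause is taken as-is.
    public static Func<T, bool> And<T>(Func<T, bool> acc, Func<T, bool> next) =>
        acc == null ? next : x => acc(x) && next(x);

    // Same pattern, but any single matching clause is enough.
    public static Func<T, bool> Or<T>(Func<T, bool> acc, Func<T, bool> next) =>
        acc == null ? next : x => acc(x) || next(x);
}
```

In practice, this is why the AND query only returns a customer whose every field matches, while the OR query returns any customer matching at least one field.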

Group 3 (Combining Queries)

The third tab shows how to combine filters using a bool query. The available clauses are “must”, “must_not” and “should”. Although this may look strange at first sight, it’s not far from what other databases offer:

- must: The clause (query) must appear in matching documents.

- must_not: The clause (query) must not appear in the matching documents.

- should: The clause (query) should appear in the matching document. In a boolean query with no “must” clauses, one or more should clauses must match a document. The minimum number of should clauses to match can be set using the minimum_should_match parameter.
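The three rules above can be modelled in a few lines of C#. BoolClauses is a hypothetical helper that only decides match or no match (relevance scoring is ignored), and its default for minimumShouldMatch follows the rule just quoted: one should clause is required when there are no must clauses, none otherwise.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory model of the must / must_not / should rules.
public static class BoolClauses
{
    public static bool Matches<T>(
        T doc,
        IReadOnlyList<Func<T, bool>> must,
        IReadOnlyList<Func<T, bool>> mustNot,
        IReadOnlyList<Func<T, bool>> should,
        int? minimumShouldMatch = null)
    {
        // Every must clause has to match.
        if (!must.All(c => c(doc))) return false;

        // No must_not clause may match.
        if (mustNot.Any(c => c(doc))) return false;

        // With no must clauses, at least one should clause is required;
        // otherwise should clauses are optional unless a minimum is given.
        int required = minimumShouldMatch ?? (must.Count == 0 && should.Count > 0 ? 1 : 0);
        return should.Count(c => c(doc)) >= required;
    }
}
```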

Translating it into our C# app, we will get:

```csharp
public List<DTO.Customer> QueryUsingCombinations(DTO.CombinedFilter filter)
{
    // "should" clauses: one term filter per age supplied.
    FilterContainer[] agesFiltering = new FilterContainer[filter.Ages.Count];
    for (int i = 0; i < filter.Ages.Count; i++)
    {
        FilterDescriptor<DTO.Customer> clause = new FilterDescriptor<DTO.Customer>();
        agesFiltering[i] = clause.Term("age", int.Parse(filter.Ages[i]));
    }

    // "must_not" clauses: one term filter per name supplied.
    FilterContainer[] nameFiltering = new FilterContainer[filter.Names.Count];
    for (int i = 0; i < filter.Names.Count; i++)
    {
        FilterDescriptor<DTO.Customer> clause = new FilterDescriptor<DTO.Customer>();
        nameFiltering[i] = clause.Term("name", filter.Names[i]);
    }

    var hits = _EsClientDAL.Current.Search<DTO.Customer>(s => s
        .Query(q => q
            .Filtered(fq => fq
                .Query(qq => qq.MatchAll())
                .Filter(ff => ff
                    .Bool(b => b
                        .Must(m1 => m1.Term("hasChildren", filter.HasChildren))
                        .MustNot(nameFiltering)
                        .Should(agesFiltering)
                    )
                )
            )
        )
    ).Hits;

    List<DTO.Customer> typedList = hits.Select(hit => ConvertHitToCustumer(hit)).ToList();

    return typedList;
}
```

Here, you can see the first loop creating the "should" filters collection for the given "ages", and the next one in turn building the "must not clause" list for the "names" provided.

The "must" clause will be applied just upon the “hasChildren” field, so no need for a collection here.

With all filter objects filled in, it's just a matter of passing them as parameters to the Search() lambda.
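For reference, the lambda above describes roughly the following request body. The hypothetical CombinedFilterJson helper builds it by hand with System.Text.Json.Nodes (.NET 6 or later assumed), using the Elasticsearch 1.x filtered query syntax; NEST's own serialization may differ in details such as whether a single clause is sent as an object or an array. Note that the filtered query was deprecated in Elasticsearch 2.0 and removed in 5.0.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json.Nodes;

// Hypothetical helper: hand-built equivalent of the combined bool filter search.
public static class CombinedFilterJson
{
    // { "term": { field: value } }
    static JsonObject Term(string field, JsonNode value) =>
        new JsonObject { ["term"] = new JsonObject { [field] = value } };

    public static string Build(bool hasChildren, IEnumerable<string> names, IEnumerable<int> ages)
    {
        var body = new JsonObject
        {
            ["query"] = new JsonObject
            {
                ["filtered"] = new JsonObject
                {
                    ["query"] = new JsonObject { ["match_all"] = new JsonObject() },
                    ["filter"] = new JsonObject
                    {
                        ["bool"] = new JsonObject
                        {
                            ["must"] = new JsonArray(Term("hasChildren", hasChildren)),
                            ["must_not"] = new JsonArray(names.Select(n => (JsonNode)Term("name", n)).ToArray()),
                            ["should"] = new JsonArray(ages.Select(a => (JsonNode)Term("age", a)).ToArray())
                        }
                    }
                }
            }
        };
        return body.ToJsonString();
    }
}
```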

Group 4 (Range Queries)

In the fourth tab, we will be talking about the range query (very same idea as the 'between', 'greater than', 'less than' etc. operators from SQL).

In order to reproduce that, we will be combining two range queries.

Our BLL has a method to compose this query and run it:

```csharp
public List<DTO.Customer> QueryUsingRanges(DTO.RangeFilter filter)
{
    FilterContainer[] ranges = new FilterContainer[2];

    // Enrollment fee above the start value and below the end value.
    FilterDescriptor<DTO.Customer> clause1 = new FilterDescriptor<DTO.Customer>();
    ranges[0] = clause1.Range(r => r.OnField(f => f.EnrollmentFee)
        .Greater(filter.EnrollmentFeeStart)
        .Lower(filter.EnrollmentFeeEnd));

    // Birthday after the given date, in the index's yyyyMMdd format.
    FilterDescriptor<DTO.Customer> clause2 = new FilterDescriptor<DTO.Customer>();
    ranges[1] = clause2.Range(r => r.OnField(f => f.Birthday)
        .Greater(filter.Birthday.ToString(DTO.Constants.BASIC_DATE)));

    var hits = _EsClientDAL.Current
        .Search<DTO.Customer>(s => s
            .Query(q => q
                .Filtered(fq => fq
                    .Query(qq => qq.MatchAll())
                    .Filter(ff => ff
                        .Bool(b => b
                            .Must(ranges)
                        )
                    )
                )
            )
        ).Hits;

    List<DTO.Customer> typedList = hits.Select(hit => ConvertHitToCustumer(hit)).ToList();

    return typedList;
}
```

Looking at the method in detail, it creates a FilterContainer array with two items:

The first one holds the “EnrollmentFee” range, applying the “Greater” and “Lower” operators to it. The second covers values greater than whatever the user supplies for the “Birthday” field.
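A plain-C# reading of the two clauses follows. It assumes, as the names suggest, that NEST's Greater and Lower produce exclusive bounds (gt and lt), unlike SQL's inclusive BETWEEN. RangeChecks is a hypothetical helper, and both checks are combined with AND because both ranges sit in the same must array.

```csharp
using System;

// Hypothetical helper mirroring the two range clauses on in-memory values.
public static class RangeChecks
{
    // Exclusive bounds on both sides; SQL BETWEEN would use >= and <=.
    public static bool FeeInRange(double fee, double start, double end) =>
        fee > start && fee < end;

    // Both values are yyyyMMdd strings, so ordinal comparison follows date order.
    public static bool BornAfter(string birthday, string threshold) =>
        string.CompareOrdinal(birthday, threshold) > 0;

    // Both ranges are "must" clauses, so a document has to satisfy both.
    public static bool Matches(double fee, string birthday, double start, double end, string threshold) =>
        FeeInRange(fee, start, end) && BornAfter(birthday, threshold);
}
```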

Note that we need to stick with the date format used since this storage was created (see the first article).
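Formatting the date is where this can quietly go wrong. DateTime.ToString with a custom pattern uses the current culture's calendar, which is not Gregorian everywhere (th-TH, for instance, defaults to the Thai Buddhist calendar), so the hypothetical BasicDate helper below pins the invariant culture when producing and parsing yyyyMMdd strings.

```csharp
using System;
using System.Globalization;

// Hypothetical helper for the index's basic_date (yyyyMMdd) format.
public static class BasicDate
{
    public const string Format = "yyyyMMdd"; // same pattern as Constants.BASIC_DATE

    // InvariantCulture keeps the Gregorian calendar regardless of the machine's culture.
    public static string ToBasicDate(DateTime date) =>
        date.ToString(Format, CultureInfo.InvariantCulture);

    public static DateTime FromBasicDate(string value) =>
        DateTime.ParseExact(value, Format, CultureInfo.InvariantCulture);
}
```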

With everything set, just send it as a parameter to Search().

Group 5 (Aggregations)

At last, the fifth tab shows the coolest feature (in my opinion), the aggregations.

As I stated in my previous article, this functionality is especially useful to quantify data and thus make sense of it.

The first combo box holds all available fields and the second one the aggregation options. For the sake of simplicity, I'm showing here the most popular aggregations:
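To see what each aggregation returns, here are LINQ equivalents over an in-memory list. LocalAggregations is a hypothetical helper; Elasticsearch computes the same figures server-side across the matching documents. Terms mirrors the “Count” option: one bucket per distinct value with its document count, ordered by count descending, which is the terms aggregation's default order.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical LINQ equivalents of the aggregations used in the fifth tab.
public static class LocalAggregations
{
    public static double Sum(IEnumerable<double> values) => values.Sum();

    // Average, Max and Min throw on an empty sequence;
    // Elasticsearch reports a missing (null) value instead.
    public static double Average(IEnumerable<double> values) => values.Average();
    public static double Max(IEnumerable<double> values) => values.Max();
    public static double Min(IEnumerable<double> values) => values.Min();

    // One bucket per distinct value with its document count, most frequent first.
    public static List<KeyValuePair<string, long>> Terms(IEnumerable<string> values) =>
        values.GroupBy(v => v)
              .Select(g => new KeyValuePair<string, long>(g.Key, g.LongCount()))
              .OrderByDescending(kv => kv.Value)
              .ToList();
}
```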

Sum

```csharp
private void ExecuteSumAggregation(DTO.Aggregations filter, Dictionary<string, double> list, string agg_nickname)
{
    var response = _EsClientDAL.Current
        .Search<DTO.Customer>(s => s
            .Aggregations(a => a
                .Sum(agg_nickname, st => st
                    .Field(filter.Field)
                )
            )
        );

    list.Add(filter.Field + " Sum", response.Aggs.Sum(agg_nickname).Value.Value);
}
```

Average

```csharp
private void ExecuteAvgAggregation(DTO.Aggregations filter, Dictionary<string, double> list, string agg_nickname)
{
    var response = _EsClientDAL.Current
        .Search<DTO.Customer>(s => s
            .Aggregations(a => a
                .Average(agg_nickname, st => st
                    .Field(filter.Field)
                )
            )
        );

    list.Add(filter.Field + " Average", response.Aggs.Average(agg_nickname).Value.Value);
}
```

Count

```csharp
private void ExecuteCountAggregation(DTO.Aggregations filter, Dictionary<string, double> list, string agg_nickname)
{
    // A terms aggregation: one bucket (with its document count) per distinct value.
    var response = _EsClientDAL.Current
        .Search<DTO.Customer>(s => s
            .Aggregations(a => a
                .Terms(agg_nickname, st => st
                    .Field(filter.Field)
                    .Size(int.MaxValue)
                    .ExecutionHint(TermsAggregationExecutionHint.GlobalOrdinals)
                )
            )
        );

    foreach (var item in response.Aggs.Terms(agg_nickname).Items)
    {
        list.Add(item.Key, item.DocCount);
    }
}
```

Min/Max

```csharp
private void ExecuteMaxAggregation(DTO.Aggregations filter, Dictionary<string, double> list, string agg_nickname)
{
    var response = _EsClientDAL.Current
        .Search<DTO.Customer>(s => s
            .Aggregations(a => a
                .Max(agg_nickname, st => st
                    .Field(filter.Field)
                )
            )
        );

    // Read the Max result (not Sum). Min works the same way with .Min(...) and Aggs.Min(...).
    list.Add(filter.Field + " Max", response.Aggs.Max(agg_nickname).Value.Value);
}
```

Conclusion

As most developers are used to relational databases, working with non-relational storage may be challenging, even strange. At least, that has been true for me.

I have worked with most of the well-known relational databases in several projects, and their concepts and standards are really stamped into my mind.

So, getting in touch with this emerging storage technology is transforming my perception, which, I assume, is happening worldwide with other IT professionals.

Over time, you may realise that it really opens up a wide range of possible solutions.

I hope you guys have enjoyed it!

Cheers!

History

- 28th September, 2015: Initial version

Software developer. I've been designing and coding several .NET solutions over the past 12 years.

Brazilian, living in Australia, currently working with non-relational search engines and BI.
