Contents in Focus:

- What is Cognitive Services?

- What is Face API?

- Sign Up for Face API

- Create ASP.Net MVC Sample Application

- Add AngularJS

- Install & Configure the Face API

- Upload images to detect faces

- Mark faces in the image

- List detected faces with face information

- Summary

Cognitive Services (Project Oxford) :

Microsoft Cognitive Services, formerly known as Project Oxford, is a set of machine-learning application programming interfaces (REST APIs), SDKs and services that help developers build smarter applications by adding intelligent features such as emotion and video detection; facial, speech and vision recognition; and speech and language understanding. Get more details from the website: https://staging.www.projectoxford.ai

Face API:

Microsoft Cognitive Services has four main components:

- Face recognition: recognizes faces in photos, groups faces that look alike and verifies whether two faces are the same,

- Speech processing: recognize speech and translate it into text, and vice versa,

- Visual tools: analyze visual content to look for things like inappropriate content or a dominant color scheme, and

- Language Understanding Intelligent Service (LUIS): understand what users mean when they say or type something using natural, everyday language.

Get more details from Microsoft blog: http://blogs.microsoft.com/next/2015/05/01/microsofts-project-oxford-helps-developers-build-more-intelligent-apps/#sm.00000qcvfxlefheczxed59b9u8jna

We will use the Face API in our sample application. So what is Face API? Face API is a cloud-based service that provides advanced face algorithms to detect and recognize human faces in images.

Face API has:

- Face Detection

- Face Verification

- Similar Face Searching

- Face Grouping

- Face Identification

Get detailed overview here: https://www.microsoft.com/cognitive-services/en-us/face-api/documentation/overview

Face Detection: In this post we focus on detecting faces, so before we deal with the sample application let’s take a closer look at the API Reference (Face API - V1.0). To enable the service we need an authorization key (API Key), which we get by signing up for the service for free. Go to this link to sign up: https://www.microsoft.com/cognitive-services/en-us/sign-up
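To make the role of the API Key concrete, here is a minimal sketch of what a raw detect request could look like if we called the REST API directly instead of going through the SDK. The helper name `buildDetectRequest` is hypothetical, and the endpoint URL, query parameters and header name are assumptions based on the Face API V1.0 reference as it stood when this post was written, so please check the current API Reference before relying on them. The function only describes the request; it does not send anything.

```javascript
// Hypothetical helper: describes a Face API v1.0 detect call without sending it.
// Assumption: the endpoint and the subscription-key header name follow the v1.0 REST reference.
function buildDetectRequest(apiKey, attributes) {
  var query = [
    'returnFaceId=true',
    'returnFaceLandmarks=false',
    'returnFaceAttributes=' + attributes.join(',')
  ].join('&');

  return {
    method: 'POST',
    url: 'https://api.projectoxford.ai/face/v1.0/detect?' + query,
    headers: {
      // The image bytes are sent as the raw request body.
      'Content-Type': 'application/octet-stream',
      // The key obtained from the subscription page authorizes the call.
      'Ocp-Apim-Subscription-Key': apiKey
    }
  };
}

var request = buildDetectRequest('your-api-key', ['age', 'gender', 'smile', 'glasses']);
console.log(request.url);
```

In the sample application the SDK builds this request for us, so the key only needs to be passed to the client object.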

Sign Up for Face API:

Sign up with any one of the following accounts by clicking on it:

- Microsoft account

- GitHub

- LinkedIn

After signing up successfully you will be redirected to the subscriptions page. Request a new trial for any of the products by selecting its checkbox.

Process: Click on Request new trials > Face - Preview > Agree Term > Subscribe

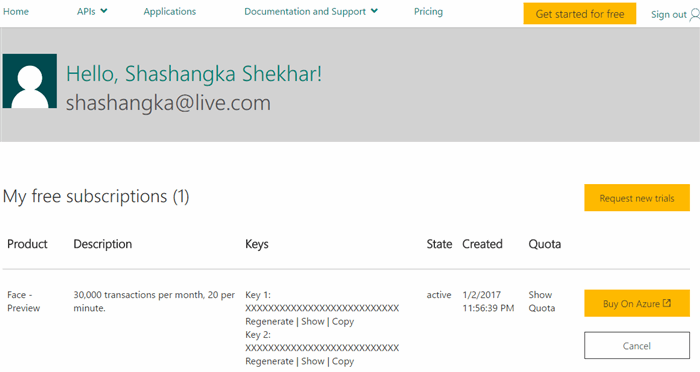

Here you can see a screenshot of my subscription. In the Keys column, next to Key 1, click “Show” to preview the API Key and click “Copy” to copy the key for further use. The key can be regenerated by clicking “Regenerate”.

So far we are done with the subscription process; now let’s get started with the ASP.Net MVC sample application.

Create Sample Application:

Before starting, make sure Visual Studio 2015 is installed on the development machine. Open Visual Studio 2015 and, from the File menu, click New > Project.

Select ASP.Net Web Application and name it as you like; I named it “FaceAPI_MVC”. Click OK to proceed to the next step. Choose the Empty template for the sample application, select the “MVC” check box, then click OK.

In our empty template, let’s now create an MVC controller and generate views by scaffolding.

Add AngularJS:

We need to add packages to our sample application. To do that, go to Solution Explorer, right-click the project, and choose Manage NuGet Packages.

In the Package Manager, search for “angularjs”, select the package, then click Install.

After installing the “angularjs” package, we need to reference it in our layout page and define the app root using the “ng-app” directive.

If you are new to AngularJS, you can get a basic overview of using it with an MVC application here: http://shashangka.com/2016/01/17/asp-net-mvc-5-with-angularjs-part-1

Install & Configure the Face API:

We need to add the “Microsoft.ProjectOxford.Face” library to our sample application. Search for it in the NuGet Package Manager, then select it and click Install.

Web.Config: In the application's Web.Config, add a new configuration setting in the appSettings section with our previously generated API Key.

<add key="FaceServiceKey" value="xxxxxxxxxxxxxxxxxxxxxxxxxxx" />

Finally, the complete appSettings section:

<appSettings>
  <add key="webpages:Version" value="3.0.0.0" />
  <add key="webpages:Enabled" value="false" />
  <add key="PreserveLoginUrl" value="true" />
  <add key="ClientValidationEnabled" value="true" />
  <add key="UnobtrusiveJavaScriptEnabled" value="true" />
  <add key="FaceServiceKey" value="xxxxxxxxxxxxxxxxxxxxxxxxxxx" /> <!--replace with API Key-->
</appSettings>

MVC Controller: This is where we perform the main operation. First of all, get the FaceServiceKey value from web.config through ConfigurationManager.AppSettings.

private static string ServiceKey = ConfigurationManager.AppSettings["FaceServiceKey"];

The MVC controller has two main methods that perform the face detection operation. One is an HttpPost method, which uploads the image file to a folder; the other is an HttpGet method, which reads the uploaded image and detects faces by calling the API service. Both methods are called from the client script when an image is uploaded for detection. Let’s go through them step by step.

Image Upload: This method is responsible for uploading images.

[HttpPost]
public JsonResult SaveCandidateFiles()
{
    // Body shown in the full controller below.
}

Image Detect: This method is responsible for detecting the faces from uploaded images.

[HttpGet]
public async Task<dynamic> GetDetectedFaces()
{
    // Body shown in the full controller below.
}

This is the code snippet where the Face API is called to detect faces in the uploaded image.

// Open the uploaded image as a stream; dispose it when the call has finished.
using (var fStream = System.IO.File.OpenRead(FullImgPath))
{
    var faceServiceClient = new FaceServiceClient(ServiceKey);

    // Arguments: image stream, return face IDs, return face landmarks, attributes to analyze.
    Face[] faces = await faceServiceClient.DetectAsync(fStream, true, true, new FaceAttributeType[] { FaceAttributeType.Gender, FaceAttributeType.Age, FaceAttributeType.Smile, FaceAttributeType.Glasses });
}

Create & Save Cropped Detected Face Images.

// Each cropped face is saved under a generated file name.
var croppedImg = Guid.NewGuid() + ".jpeg";
var croppedImgPath = directory + '/' + croppedImg;
var croppedImgFullPath = Path.Combine(Server.MapPath(directory), croppedImg);

// Dispose both bitmaps so the uploaded file is not left locked on disk.
using (var sourceImage = (Bitmap)Image.FromFile(FullImgPath))
using (Bitmap CroppedFace = CropBitmap(
    sourceImage,
    face.FaceRectangle.Left,
    face.FaceRectangle.Top,
    face.FaceRectangle.Width,
    face.FaceRectangle.Height))
{
    CroppedFace.Save(croppedImgFullPath, ImageFormat.Jpeg);
}

This method crops the image according to the face rectangle values.

public Bitmap CropBitmap(Bitmap bitmap, int cropX, int cropY, int cropWidth, int cropHeight)

{

}
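One thing worth keeping in mind when cropping: `Bitmap.Clone` throws if the requested rectangle falls outside the source bitmap. The sketch below, written in JavaScript to match the client side of this sample, shows one way such a rectangle could be clamped to the image bounds before cropping. The function name `clampCropRect` and the property names are hypothetical, and whether the service ever returns a rectangle that crosses the image edge is an assumption; treat this as a defensive illustration, not as part of the original controller.

```javascript
// Hypothetical helper: clamps a face rectangle to the image bounds.
// Returns null when nothing of the rectangle lies inside the image.
function clampCropRect(rect, imageWidth, imageHeight) {
  var left = Math.max(0, rect.left);
  var top = Math.max(0, rect.top);
  var right = Math.min(imageWidth, rect.left + rect.width);
  var bottom = Math.min(imageHeight, rect.top + rect.height);

  if (right <= left || bottom <= top) {
    return null; // the rectangle is completely outside the image
  }

  return { left: left, top: top, width: right - left, height: bottom - top };
}

// A rectangle that starts left of the image and runs past its bottom edge.
console.log(clampCropRect({ left: -10, top: 5, width: 50, height: 50 }, 100, 40));
```

The same arithmetic could be applied in `CropBitmap` before building the `Rectangle`.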

Finally, the full MVC controller:

using System;
using System.Collections.ObjectModel;
using System.Configuration;
using System.Drawing;
using System.Drawing.Imaging;
using System.IO;
using System.Threading.Tasks;
using System.Web;
using System.Web.Mvc;
using Microsoft.ProjectOxford.Face;
using Microsoft.ProjectOxford.Face.Contract;

public class FaceDetectionController : Controller
{
    private static string ServiceKey = ConfigurationManager.AppSettings["FaceServiceKey"];
    private static string directory = "../UploadedFiles";

    // Note: a static field is shared by every request, so this sample assumes one user at a time.
    private static string UplImageName = string.Empty;

    private ObservableCollection<vmFace> _detectedFaces = new ObservableCollection<vmFace>();
    private ObservableCollection<vmFace> _resultCollection = new ObservableCollection<vmFace>();

    public ObservableCollection<vmFace> DetectedFaces
    {
        get { return _detectedFaces; }
    }

    public ObservableCollection<vmFace> ResultCollection
    {
        get { return _resultCollection; }
    }

    // Display width (in pixels) of the query image in the view.
    public int MaxImageSize
    {
        get { return 450; }
    }

    public ActionResult Index()
    {
        return View();
    }

    [HttpPost]
    public JsonResult SaveCandidateFiles()
    {
        string message = string.Empty, fileName = string.Empty;
        bool flag = false;

        HttpFileCollectionBase fileRequested = Request.Files;
        if (fileRequested != null)
        {
            CreateDirectory();
            ClearDirectory();

            for (int i = 0; i < fileRequested.Count; i++)
            {
                var file = fileRequested[i];

                // Save under a generated name so uploads never collide.
                fileName = Guid.NewGuid() + Path.GetExtension(file.FileName);
                try
                {
                    file.SaveAs(Path.Combine(Server.MapPath(directory), fileName));
                    message = "File uploaded successfully";
                    UplImageName = fileName;
                    flag = true;
                }
                catch (Exception)
                {
                    message = "File upload failed! Please try again";
                }
            }
        }

        return new JsonResult
        {
            Data = new
            {
                Message = message,
                UplImageName = fileName,
                Status = flag
            }
        };
    }

    [HttpGet]
    public async Task<dynamic> GetDetectedFaces()
    {
        ResultCollection.Clear();
        DetectedFaces.Clear();

        var DetectedResultsInText = "Detecting...";
        var FullImgPath = Path.Combine(Server.MapPath(directory), UplImageName);
        var QueryFaceImageUrl = directory + '/' + UplImageName;

        if (UplImageName != "")
        {
            CreateDirectory();
            try
            {
                using (var fStream = System.IO.File.OpenRead(FullImgPath))
                {
                    var imageInfo = UIHelper.GetImageInfoForRendering(FullImgPath);

                    // Send the image to the Face API and request the listed attributes.
                    var faceServiceClient = new FaceServiceClient(ServiceKey);
                    Face[] faces = await faceServiceClient.DetectAsync(fStream, true, true, new FaceAttributeType[] { FaceAttributeType.Gender, FaceAttributeType.Age, FaceAttributeType.Smile, FaceAttributeType.Glasses });
                    DetectedResultsInText = string.Format("{0} face(s) has been detected!!", faces.Length);

                    // Load the source image once and dispose it afterwards so the file is not left locked.
                    using (var sourceImage = (Bitmap)Image.FromFile(FullImgPath))
                    {
                        foreach (var face in faces)
                        {
                            var croppedImg = Guid.NewGuid() + ".jpeg";
                            var croppedImgPath = directory + '/' + croppedImg;
                            var croppedImgFullPath = Path.Combine(Server.MapPath(directory), croppedImg);

                            using (Bitmap croppedFace = CropBitmap(
                                sourceImage,
                                face.FaceRectangle.Left,
                                face.FaceRectangle.Top,
                                face.FaceRectangle.Width,
                                face.FaceRectangle.Height))
                            {
                                croppedFace.Save(croppedImgFullPath, ImageFormat.Jpeg);
                            }

                            DetectedFaces.Add(new vmFace()
                            {
                                ImagePath = FullImgPath,
                                FileName = croppedImg,
                                FilePath = croppedImgPath,
                                Left = face.FaceRectangle.Left,
                                Top = face.FaceRectangle.Top,
                                Width = face.FaceRectangle.Width,
                                Height = face.FaceRectangle.Height,
                                FaceId = face.FaceId.ToString(),
                                Gender = face.FaceAttributes.Gender,
                                Age = string.Format("{0:#} years old", face.FaceAttributes.Age),
                                // Smile is an intensity between 0 and 1; 0.5 is an arbitrary cut-off for this sample.
                                IsSmiling = face.FaceAttributes.Smile >= 0.5 ? "Smile" : "Not Smile",
                                Glasses = face.FaceAttributes.Glasses.ToString(),
                            });
                        }
                    }

                    // Scale the face rectangles to the size the image is displayed at.
                    var rectFaces = UIHelper.CalculateFaceRectangleForRendering(faces, MaxImageSize, imageInfo);
                    foreach (var face in rectFaces)
                    {
                        ResultCollection.Add(face);
                    }
                }
            }
            catch (FaceAPIException ex)
            {
                // Report the service error to the client instead of silently swallowing it.
                DetectedResultsInText = string.Format("Face API error: {0}", ex.ErrorMessage);
            }
        }

        return new JsonResult
        {
            Data = new
            {
                QueryFaceImage = QueryFaceImageUrl,
                MaxImageSize = MaxImageSize,
                FaceInfo = DetectedFaces,
                FaceRectangles = ResultCollection,
                DetectedResults = DetectedResultsInText
            },
            JsonRequestBehavior = JsonRequestBehavior.AllowGet
        };
    }

    // Returns a copy of the given region of the bitmap; the caller disposes the result.
    public Bitmap CropBitmap(Bitmap bitmap, int cropX, int cropY, int cropWidth, int cropHeight)
    {
        Rectangle rect = new Rectangle(cropX, cropY, cropWidth, cropHeight);
        return bitmap.Clone(rect, bitmap.PixelFormat);
    }

    public void CreateDirectory()
    {
        var path = Server.MapPath(directory);
        if (!Directory.Exists(path))
        {
            try
            {
                Directory.CreateDirectory(path);
            }
            catch (Exception ex)
            {
                System.Diagnostics.Trace.TraceError(ex.ToString());
            }
        }
    }

    // Removes the files left over from the previous upload.
    public void ClearDirectory()
    {
        DirectoryInfo dir = new DirectoryInfo(Server.MapPath(directory));
        var files = dir.GetFiles();
        if (files.Length > 0)
        {
            try
            {
                // Release any image handles that are still waiting for finalization.
                GC.Collect();
                GC.WaitForPendingFinalizers();

                foreach (FileInfo fi in files)
                {
                    fi.Delete();
                }
            }
            catch (Exception ex)
            {
                System.Diagnostics.Trace.TraceError(ex.ToString());
            }
        }
    }
}

UI Helper:

using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Windows.Media.Imaging; // requires references to PresentationCore and WindowsBase

internal static class UIHelper
{
    #region Methods

    // Scales the detected face rectangles from image pixels to the width used for display.
    public static IEnumerable<vmFace> CalculateFaceRectangleForRendering(IEnumerable<Microsoft.ProjectOxford.Face.Contract.Face> faces, int maxSize, Tuple<int, int> imageInfo)
    {
        var imageWidth = imageInfo.Item1;

        // The image size could not be read, so there is nothing meaningful to scale.
        if (imageWidth <= 0)
        {
            yield break;
        }

        float scale = (float)maxSize / imageWidth;

        foreach (var face in faces)
        {
            yield return new vmFace()
            {
                FaceId = face.FaceId.ToString(),
                Left = (int)(face.FaceRectangle.Left * scale),
                Top = (int)(face.FaceRectangle.Top * scale),
                Height = (int)(face.FaceRectangle.Height * scale),
                Width = (int)(face.FaceRectangle.Width * scale),
            };
        }
    }

    // Returns the pixel width and height of the image, or (0, 0) if it cannot be read.
    public static Tuple<int, int> GetImageInfoForRendering(string imageFilePath)
    {
        try
        {
            using (var s = File.OpenRead(imageFilePath))
            {
                // BitmapDecoder.Create picks the decoder from the file content, so non-JPEG uploads also work.
                BitmapDecoder decoder = BitmapDecoder.Create(s, BitmapCreateOptions.None, BitmapCacheOption.None);
                var frame = decoder.Frames.First();
                return new Tuple<int, int>(frame.PixelWidth, frame.PixelHeight);
            }
        }
        catch
        {
            return new Tuple<int, int>(0, 0);
        }
    }

    #endregion Methods
}
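The scaling that `CalculateFaceRectangleForRendering` performs is simple arithmetic, and it can be illustrated on the client side as well. The sketch below assumes faces shaped like the Face API JSON response (`faceId` and `faceRectangle` with `left`, `top`, `width`, `height`); the function name `scaleFaceRectangles` is hypothetical and is not part of the sample application.

```javascript
// Hypothetical client-side counterpart of CalculateFaceRectangleForRendering.
// Scales rectangles from image pixels to the width the image is displayed at.
function scaleFaceRectangles(faces, maxSize, imageWidth) {
  if (!imageWidth) {
    return []; // unknown image width: nothing meaningful to scale
  }

  var scale = maxSize / imageWidth;

  return faces.map(function (face) {
    var r = face.faceRectangle;
    return {
      FaceId: face.faceId,
      // Math.trunc mirrors the (int) cast used in the C# helper.
      Left: Math.trunc(r.left * scale),
      Top: Math.trunc(r.top * scale),
      Width: Math.trunc(r.width * scale),
      Height: Math.trunc(r.height * scale)
    };
  });
}

// A 900-pixel-wide image shown at 450 pixels halves every coordinate.
var sampleFaces = [{ faceId: 'a', faceRectangle: { left: 100, top: 50, width: 200, height: 300 } }];
console.log(scaleFaceRectangles(sampleFaces, 450, 900));
```

Because the image is displayed at a fixed width and the browser keeps the aspect ratio, a single scale factor is enough for both axes.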

MVC View:

@{
    ViewBag.Title = "Face Detection";
}

<div ng-controller="faceDetectionCtrl">
    <h3>{{Title}}</h3>
    <div class="loadmore">
        <div ng-show="loaderMoreupl" ng-class="result">
            <img src="~/Content/ng-loader.gif" /> {{uplMessage}}
        </div>
    </div>
    <div class="clearfix"></div>
    <table style="width:100%">
        <tr>
            <th><h4>Select Query Face</h4></th>
            <th><h4>Detection Result</h4></th>
        </tr>
        <tr>
            <td style="width:60%" valign="top">
                <form novalidate name="f1">
                    <input type="file" name="file" accept="image/*" onchange="angular.element(this).scope().selectCandidateFileforUpload(this.files)" required />
                </form>
                <div class="clearfix"></div>
                <div class="loadmore">
                    <div ng-show="loaderMore" ng-class="result">
                        <img src="~/Content/ng-loader.gif" /> {{faceMessage}}
                    </div>
                </div>
                <div class="clearfix"></div>
                <div class="facePreview_thumb_big" id="faceCanvas"></div>
            </td>
            <td style="width:40%" valign="top">
                <p>{{DetectedResultsMessage}}</p>
                <hr />
                <div class="clearfix"></div>
                <div class="facePreview_thumb_small">
                    <div ng-repeat="item in DetectedFaces" class="col-sm-12">
                        <div class="col-sm-3">
                            <img ng-src="{{item.FilePath}}" width="100" />
                        </div>
                        <div class="col-sm-8">
                            <ul>
                                @*<li>FaceId: {{item.FaceId}}</li>*@
                                <li>Age: {{item.Age}}</li>
                                <li>Gender: {{item.Gender}}</li>
                                <li>{{item.IsSmiling}}</li>
                                <li>{{item.Glasses}}</li>
                            </ul>
                        </div>
                        <div class="clearfix"></div>
                    </div>
                </div>
                <div ng-hide="DetectedFaces.length">No face detected!!</div>
            </td>
        </tr>
    </table>
</div>

@section NgScript{
    <script src="~/ScriptsNg/FaceDetectionCtrl.js"></script>
}

Angular Controller:

angular.module('myFaceApp', [])
.controller('faceDetectionCtrl', function ($scope, FileUploadService) {
    $scope.Title = 'Microsoft FaceAPI - Face Detection';
    $scope.DetectedResultsMessage = 'No result found!!';
    $scope.SelectedFileForUpload = null;
    $scope.UploadedFiles = [];
    $scope.SimilarFace = [];
    $scope.FaceRectangles = [];
    $scope.DetectedFaces = [];

    // Called from the file input's onchange handler: uploads the file, then starts detection.
    $scope.selectCandidateFileforUpload = function (file) {
        $scope.SelectedFileForUpload = file;
        $scope.loaderMoreupl = true;
        $scope.uplMessage = 'Uploading, please wait....!';
        $scope.result = "color-red";

        var uploaderUrl = "/FaceDetection/SaveCandidateFiles";
        var fileSave = FileUploadService.UploadFile($scope.SelectedFileForUpload, uploaderUrl);

        fileSave.then(function (response) {
            if (response.data.Status) {
                $scope.GetDetectedFaces();

                // Reset the file input so the same file can be selected again.
                angular.forEach(angular.element("input[type='file']"), function (inputElem) {
                    angular.element(inputElem).val(null);
                });
                $scope.f1.$setPristine();
            }

            // Show the server message and hide the loader whether or not the upload succeeded.
            $scope.uplMessage = response.data.Message;
            $scope.loaderMoreupl = false;
        },
        function (error) {
            $scope.loaderMoreupl = false;
            console.warn("Error: ", error);
        });
    };

    // Asks the server to detect faces in the uploaded image and draws the result.
    $scope.GetDetectedFaces = function () {
        $scope.loaderMore = true;
        $scope.faceMessage = 'Preparing, detecting faces, please wait....!';
        $scope.result = "color-red";

        var fileUrl = "/FaceDetection/GetDetectedFaces";
        var fileView = FileUploadService.GetUploadedFile(fileUrl);

        fileView.then(function (response) {
            $scope.QueryFace = response.data.QueryFaceImage;
            $scope.DetectedResultsMessage = response.data.DetectedResults;
            $scope.DetectedFaces = response.data.FaceInfo;
            $scope.FaceRectangles = response.data.FaceRectangles;
            $scope.loaderMore = false;

            // Remove the previous image and rectangles before drawing the new ones.
            $('#faceCanvas_img').remove();
            $('.divRectangle_box').remove();

            var canvas = document.getElementById('faceCanvas');
            var elemImg = document.createElement("img");
            elemImg.setAttribute("src", $scope.QueryFace);
            elemImg.setAttribute("width", response.data.MaxImageSize);
            elemImg.id = 'faceCanvas_img';
            // appendChild is used instead of append because it is supported by older browsers.
            canvas.appendChild(elemImg);

            // Draw one absolutely positioned box per detected face on top of the image.
            angular.forEach($scope.FaceRectangles, function (imgs, i) {
                var divRectangle = document.createElement('div');
                divRectangle.className = 'divRectangle_box';
                divRectangle.style.width = imgs.Width + 'px';
                divRectangle.style.height = imgs.Height + 'px';
                divRectangle.style.position = 'absolute';
                divRectangle.style.top = imgs.Top + 'px';
                divRectangle.style.left = imgs.Left + 'px';
                divRectangle.style.zIndex = '999';
                divRectangle.style.border = '1px solid #fff';
                divRectangle.style.margin = '0';
                divRectangle.id = 'divRectangle_' + (i + 1);
                canvas.appendChild(divRectangle);
            });
        },
        function (error) {
            $scope.loaderMore = false;
            console.warn("Error: ", error);
        });
    };
})
.factory('FileUploadService', function ($http, $q) {
    var fact = {};

    // Posts the selected files as multipart form data.
    fact.UploadFile = function (files, uploaderUrl) {
        var formData = new FormData();
        angular.forEach(files, function (f) {
            formData.append("file", f);
        });

        return $http({
            method: "post",
            url: uploaderUrl,
            data: formData,
            withCredentials: true,
            // Leaving Content-Type undefined lets the browser set the multipart boundary.
            headers: { 'Content-Type': undefined },
            transformRequest: angular.identity
        });
    };

    fact.GetUploadedFile = function (fileUrl) {
        return $http.get(fileUrl);
    };

    return fact;
});

Upload images to detect faces:

Browse for an image in a local folder, then upload it to detect faces.
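Before uploading, it can be useful to reject files the service is unlikely to accept. The following is a minimal sketch of such a check; the function name `checkImageFile` is hypothetical, and the list of formats (JPEG, PNG, GIF, BMP) and the 4 MB size limit are assumptions recalled from the Face API V1.0 documentation, so verify them against the current API Reference.

```javascript
// Assumed limits for the Face API v1.0 detect call; verify against the API reference.
var ALLOWED_TYPES = ['image/jpeg', 'image/png', 'image/gif', 'image/bmp'];
var MAX_BYTES = 4 * 1024 * 1024;

// Hypothetical helper: checks a File-like object ({ type, size }) before upload.
function checkImageFile(file) {
  if (ALLOWED_TYPES.indexOf(file.type) === -1) {
    return { ok: false, reason: 'Unsupported image type: ' + file.type };
  }
  if (file.size > MAX_BYTES) {
    return { ok: false, reason: 'Image is larger than 4 MB' };
  }
  return { ok: true, reason: '' };
}

console.log(checkImageFile({ type: 'image/jpeg', size: 250000 }));
console.log(checkImageFile({ type: 'application/pdf', size: 1000 }));
```

In the Angular controller, a check like this could run at the start of `selectCandidateFileforUpload` on each selected file, showing `reason` to the user instead of starting the upload.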

Mark faces in the image:

Detected faces are marked with a white rectangle.
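The marking itself is just an absolutely positioned box per face. As a small sketch, the styles applied to each box can be collected in one function; the name `rectangleStyle` is hypothetical, and the input is assumed to be one entry of the `FaceRectangles` list returned by the controller.

```javascript
// Hypothetical helper: builds the inline styles for one face rectangle.
// Expects an object with Left, Top, Width and Height in display pixels.
function rectangleStyle(rect) {
  return {
    position: 'absolute',
    top: rect.Top + 'px',
    left: rect.Left + 'px',
    width: rect.Width + 'px',
    height: rect.Height + 'px',
    border: '1px solid #fff',
    zIndex: '999',
    margin: '0'
  };
}

console.log(rectangleStyle({ Left: 50, Top: 25, Width: 100, Height: 150 }));

// In a browser the styles could be applied with:
//   Object.assign(divRectangle.style, rectangleStyle(rect));
```

Note that absolute positioning is relative to the nearest positioned ancestor, so the container holding the image needs `position: relative` (or similar) in its CSS for the boxes to line up with the faces.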

List detected faces with face information:

Each detected face is listed separately with its detailed face information.
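To show how the listed information relates to what the service returns, here is a minimal sketch that turns one face from a detect response into the display fields used in the list. The function name `describeFace` is hypothetical, the input is assumed to follow the Face API JSON shape (`faceAttributes` with `age`, `gender`, `smile`, `glasses`), and the 0.5 smile cut-off is an assumption chosen for illustration.

```javascript
// Hypothetical helper: maps Face API attributes to the strings shown in the list.
function describeFace(face, smileThreshold) {
  var attrs = face.faceAttributes;
  // smile is an intensity between 0 and 1; the default cut-off of 0.5 is an assumption.
  var threshold = smileThreshold === undefined ? 0.5 : smileThreshold;

  return {
    Age: Math.round(attrs.age) + ' years old',
    Gender: attrs.gender,
    IsSmiling: attrs.smile > threshold ? 'Smile' : 'Not Smile',
    Glasses: attrs.glasses
  };
}

var sampleFace = {
  faceAttributes: { age: 27.4, gender: 'female', smile: 0.9, glasses: 'NoGlasses' }
};
console.log(describeFace(sampleFace));
```

In the sample application this mapping happens on the server, where the controller fills a `vmFace` object for each detected face.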

Summary:

You have just seen how to call the Face API to detect faces in an image. I hope this helps you make your applications smarter and more intelligent 🙂.
