This is a copy of the post I made on the Intel site here. For the duration of the contest, I am posting a weekly blog digest of my progress with the Perceptual Computing items. This week's post shows how Huda has evolved from the application that was created at the end of the fourth week. My fellow competitors are planning to build some amazing applications, so I'd suggest that you head over to the main Ultimate Coder site and follow their progress as well. I'd like to take this opportunity to wish them good luck.

Bring Me the Bacon

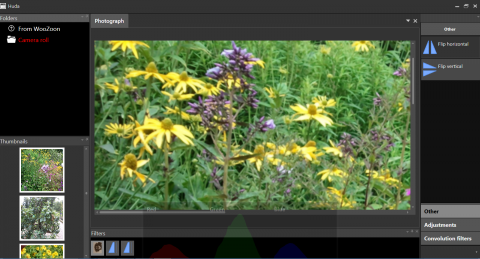

Rather than doing a day-by-day update, I've waited until near the end of the week to cover the progress I've made. The timing couldn't be better, given Steve's update this week: I'd reached many of the same conclusions he did about gesture performance and about the layout of the interface (a point he raised elsewhere). Hopefully, a bit of video footage will showcase where we are with Huda right now.

The first part of this week revolved around finalising the core saving and loading of photographs. As I revealed last week, the filters aren't applied directly to the image; rather, they are associated with the image in a separate file. The code I showed last week demonstrated that each filter is responsible for serialising itself, so the code that serialises the whole graph should be relatively trivial.

```csharp
using AForge.Imaging.Filters;
using GalaSoft.MvvmLight.Messaging;
using Huda.Messages;
using Huda.Transformations;
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Runtime.Serialization.Formatters.Binary;
using System.Text;
using System.Threading.Tasks;

namespace Huda.Model
{
    public sealed class PhotoManager
    {
        // Maps the path of each original image to the filters applied to it.
        private Dictionary<string, List<IImageTransform>> savedImageList =
            new Dictionary<string, List<IImageTransform>>();
        private readonly object SyncLock = new object();
        private string savedirectory;
        private string path;

        public PhotoManager()
        {
        }

        public List<IImageTransform> GetFilters(string originalImage)
        {
            // Double-checked so that an image seen for the first time gets an empty filter list.
            if (!savedImageList.ContainsKey(originalImage))
            {
                lock (SyncLock)
                {
                    if (!savedImageList.ContainsKey(originalImage))
                    {
                        savedImageList.Add(originalImage, new List<IImageTransform>());
                        Save();
                    }
                }
            }
            return savedImageList[originalImage];
        }

        private void AddFilter(string key, IImageTransform filter)
        {
            var filters = GetFilters(key);
            lock (SyncLock)
            {
                filters.Add(filter);
                Save();
            }
        }

        // Writes the whole image-to-filters map; both callers hold SyncLock.
        private void Save()
        {
            if (savedImageList == null || savedImageList.Count == 0) return;
            using (FileStream fs = new FileStream(path, FileMode.Create, FileAccess.Write))
            {
                BinaryFormatter formatter = new BinaryFormatter();
                formatter.Serialize(fs, savedImageList);
            }
        }

        // Called once at startup: resolves the save location, subscribes to
        // filter updates and reloads any previously saved filters.
        public void Load()
        {
            savedirectory = Path.Combine(Environment.GetFolderPath(
                Environment.SpecialFolder.CommonApplicationData), @"Huda");
            if (!Directory.Exists(savedirectory))
            {
                Directory.CreateDirectory(savedirectory);
            }
            path = Path.Combine(savedirectory, @"Huda.hda");
            Messenger.Default.Register<FilterUpdatedMessage>(this, FilterUpdated);
            if (!File.Exists(path)) return;
            using (FileStream fs = new FileStream(path, FileMode.Open, FileAccess.Read))
            {
                BinaryFormatter formatter = new BinaryFormatter();
                savedImageList = (Dictionary<string, List<IImageTransform>>)formatter.Deserialize(fs);
            }
        }

        private void FilterUpdated(FilterUpdatedMessage filter)
        {
            if (filter == null) throw new ArgumentNullException("filter");
            AddFilter(filter.Key, filter.Filter);
        }
    }
}
```

While I could have created a custom format to wrap the Dictionary there, it would have been overkill for what I was trying to achieve. The reason I'm using a Dictionary in this class is that it's a great way to see whether an item is already present, which helps satisfy our requirement to load the filters that we previously applied to the photo.

PhotoManager subscribes to the messages that Huda raises in relation to filters, which means that we can easily save the state of the filters as and when they are updated. A key tenet of MVVM is that we shouldn't introduce knowledge of one ViewModel into another ViewModel, so we use messaging as an abstraction to pass information around. The big advantage of this is that we can hook into the same messages here in the model, so we don't have to worry about which ViewModel is going to update the model. In MVVM, it's okay to have a link between the ViewModel and the Model, but as we already have this messaging mechanism in place, it's straightforward to just use it here.
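To make the decoupling concrete, here is a minimal sketch of a type-keyed messenger. It is *not* MVVM Light's API: Huda uses `Messenger.Default`, which does considerably more than this. `SimpleMessenger` and `FilterAdded` are hypothetical names for illustration. The point is that the publisher and each subscriber share only the message type, so a model and a ViewModel can both react to the same message without referencing each other.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical messenger: handlers are keyed by message type, so senders
// and receivers never hold references to each other.
public sealed class SimpleMessenger
{
    private readonly Dictionary<Type, List<Delegate>> handlers = new Dictionary<Type, List<Delegate>>();
    private readonly object syncLock = new object();

    public void Register<TMessage>(Action<TMessage> handler)
    {
        lock (syncLock)
        {
            if (!handlers.TryGetValue(typeof(TMessage), out var list))
            {
                list = new List<Delegate>();
                handlers.Add(typeof(TMessage), list);
            }
            list.Add(handler);
        }
    }

    public void Send<TMessage>(TMessage message)
    {
        Delegate[] snapshot;
        lock (syncLock)
        {
            // Nobody registered for this message type: nothing to do.
            if (!handlers.TryGetValue(typeof(TMessage), out var list)) return;
            snapshot = list.ToArray();
        }
        // Invoke outside the lock so a handler can safely send further messages.
        foreach (var handler in snapshot)
        {
            ((Action<TMessage>)handler)(message);
        }
    }
}

// Hypothetical stand-in for Huda's FilterUpdatedMessage.
public sealed class FilterAdded
{
    public FilterAdded(string key, string filterName) { Key = key; FilterName = filterName; }
    public string Key { get; }
    public string FilterName { get; }
}
```

A model that persists filters and a ViewModel that refreshes the display can both call `Register<FilterAdded>`, and whichever part of the UI adds a filter just calls `Send`.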

Saving and loading the actual photo and filter information is accomplished using a simple BinaryFormatter. As the filters are explicitly serializing themselves, the performance should be acceptable as the formatter doesn’t have to rely on reflection to work out what it needs to save. That’s Pete’s big serializer hint for you this week – it may be easy to let the formatter work out what to serialize, but when your file gets bigger, the load takes longer.
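The idea of filters writing their own state can be sketched without BinaryFormatter at all, which matters if you're following along on a later .NET, where BinaryFormatter has been deprecated. This is a swapped-in technique, not Huda's code: each hypothetical transform writes its parameters with a `BinaryWriter` and is read back by a type id, so nothing has to discover fields by reflection. `ISelfSerializingTransform`, `BrightnessTransform` and `FilterFile` are illustrative names, and the file layout is an assumption.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;

// Hypothetical contract: each transform writes its own state explicitly.
public interface ISelfSerializingTransform
{
    string TypeId { get; }
    void Write(BinaryWriter writer);
}

// Hypothetical transform with a single parameter.
public sealed class BrightnessTransform : ISelfSerializingTransform
{
    public BrightnessTransform(int adjustment) { Adjustment = adjustment; }
    public int Adjustment { get; }
    public string TypeId => "brightness";
    public void Write(BinaryWriter writer) => writer.Write(Adjustment);
    public static BrightnessTransform Read(BinaryReader reader) => new BrightnessTransform(reader.ReadInt32());
}

public static class FilterFile
{
    // Layout: image count; per image: key, filter count; per filter: type id, then its own payload.
    public static void Save(Stream stream, Dictionary<string, List<ISelfSerializingTransform>> images)
    {
        using (var writer = new BinaryWriter(stream, Encoding.UTF8, leaveOpen: true))
        {
            writer.Write(images.Count);
            foreach (var pair in images)
            {
                writer.Write(pair.Key);
                writer.Write(pair.Value.Count);
                foreach (var filter in pair.Value)
                {
                    writer.Write(filter.TypeId);
                    filter.Write(writer);
                }
            }
        }
    }

    public static Dictionary<string, List<ISelfSerializingTransform>> Load(Stream stream)
    {
        var images = new Dictionary<string, List<ISelfSerializingTransform>>();
        using (var reader = new BinaryReader(stream, Encoding.UTF8, leaveOpen: true))
        {
            int imageCount = reader.ReadInt32();
            for (int i = 0; i < imageCount; i++)
            {
                string key = reader.ReadString();
                int filterCount = reader.ReadInt32();
                var filters = new List<ISelfSerializingTransform>(filterCount);
                for (int f = 0; f < filterCount; f++)
                {
                    string typeId = reader.ReadString();
                    switch (typeId)
                    {
                        case "brightness": filters.Add(BrightnessTransform.Read(reader)); break;
                        default: throw new InvalidDataException("Unknown filter type: " + typeId);
                    }
                }
                images.Add(key, filters);
            }
        }
        return images;
    }
}
```

The trade-off is that every new filter type needs a case in `Load`, but in return the file format is explicit and versionable.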

You may wonder why I'm only guarding the Save routine with a lock (note that the lock is actually taken in the AddFilter method). The Load routine is only called once, at application startup, and it has to finish before the application can continue. I run it on a thread from the Startup routine, and the splash screen doesn't disappear until all the information is loaded.
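One caveat on the double-checked lookup in GetFilters: `Dictionary<TKey, TValue>` is only safe for concurrent reads while nothing is writing to it, so a `ContainsKey` outside the lock can race with an `Add` inside it. A simpler alternative, sketched below under the assumption that uncontended locks are cheap enough here, is to take the lock for the whole get-or-add. `FilterStore` and its `onChanged` callback (standing in for Save) are hypothetical; returning a copy from `GetFilters` is a design choice so that callers can't modify the list outside the lock.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified stand-in for PhotoManager's bookkeeping.
// Every read and write of the dictionary happens under the same lock.
public sealed class FilterStore<TFilter>
{
    private readonly Dictionary<string, List<TFilter>> filtersByImage = new Dictionary<string, List<TFilter>>();
    private readonly object syncLock = new object();
    private readonly Action onChanged;

    public FilterStore(Action onChanged)
    {
        if (onChanged == null) throw new ArgumentNullException(nameof(onChanged));
        this.onChanged = onChanged;
    }

    // Returns a snapshot copy so callers can't mutate the stored list unlocked.
    public List<TFilter> GetFilters(string imageKey)
    {
        lock (syncLock)
        {
            return new List<TFilter>(GetOrAdd(imageKey));
        }
    }

    public void AddFilter(string imageKey, TFilter filter)
    {
        lock (syncLock)
        {
            GetOrAdd(imageKey).Add(filter);
            onChanged(); // e.g. persist to disk while still holding the lock
        }
    }

    // Must only be called with syncLock held.
    private List<TFilter> GetOrAdd(string imageKey)
    {
        if (!filtersByImage.TryGetValue(imageKey, out var filters))
        {
            filters = new List<TFilter>();
            filtersByImage.Add(imageKey, filters);
        }
        return filters;
    }
}
```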

Okay, that’s the core of the photo management in place. Now it’s just a matter of maintaining the filter lists, and that’s accomplished through applying the filters.

You may recall from last week's code that some of the filters had to convert from BitmapSource to Bitmap (needed for AForge support). What wasn't apparent was that we also need to convert to a pixel format that the filters support (24bpp RGB here). Knowing this, I decided to simplify my life by introducing the following helper code:

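```csharp
// Extension methods like this one must be declared in a static class.
public static Bitmap To24BppBitmap(this BitmapSource source)
{
    return ToBitmap(source, System.Drawing.Imaging.PixelFormat.Format24bppRgb);
}

// Converts to a Bitmap (via the single-argument ToBitmap overload, not shown here),
// then clones it into the requested pixel format. The intermediate Bitmap is
// disposed; the caller owns the returned clone.
public static Bitmap ToBitmap(BitmapSource source, System.Drawing.Imaging.PixelFormat format)
{
    using (Bitmap bmp = ToBitmap(source))
    {
        return AForge.Imaging.Image.Clone(bmp, format);
    }
}
```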

This enabled me to simplify my filter calls quite considerably. So, the Sepia transformation code, for instance, became this:

```csharp
public System.Windows.Media.Imaging.BitmapSource Transform(
    System.Windows.Media.Imaging.BitmapSource source)
{
    using (Bitmap bitmap = source.To24BppBitmap())
    {
        using (Bitmap bmp = new Sepia().Apply(bitmap))
        {
            return ImageSourceHelpers.FromBitmap(bmp);
        }
    }
}
```

Another thing that should be apparent in that code is that I'm wrapping Bitmap allocations inside using statements. This is because Huda was leaking memory all over the place: Bitmap objects were being created and never released, and this needed taking care of. Fortunately, that's a very simple fix; all it required was wrapping the Bitmap allocations in using statements.
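The guarantee that makes this fix work can be shown without any imaging code. In this sketch, `TrackedResource` is a hypothetical stand-in for `Bitmap` that just logs when it is disposed (the point at which a real Bitmap frees its unmanaged memory). Returning from inside nested `using` blocks still disposes both objects, innermost first; the only rule is that the value you return must not depend on the objects being disposed.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for System.Drawing.Bitmap that records its disposal.
public sealed class TrackedResource : IDisposable
{
    private readonly List<string> log;
    public TrackedResource(string name, List<string> log) { Name = name; this.log = log; }
    public string Name { get; }
    public void Dispose() => log.Add("disposed " + Name);
}

public static class TransformSketch
{
    // Same shape as the Sepia Transform: convert, filter, return a result.
    public static string Transform(List<string> log)
    {
        using (var converted = new TrackedResource("converted", log))
        using (var filtered = new TrackedResource("filtered", log))
        {
            // The return value is computed first; then both Dispose calls run,
            // innermost (filtered) before outermost (converted).
            return converted.Name + "+" + filtered.Name;
        }
    }
}
```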

I realised after I posted last week's article that you might like to see the code I'm using to make gestures work with the tree. It's incredibly simple and very similar to the proof-of-concept (POC) sample I showed last week. So, if you want to hook the gesture code in, all you need is this behavior:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Windows;
using System.Windows.Interactivity;
using System.Windows.Input;
using System.Windows.Controls;
using System.Diagnostics;
using System.Timers;
using System.Windows.Media;
using System.Windows.Threading;
using Goldlight.Perceptual.Sdk;

namespace Huda.Behaviors
{
    // Selects a TreeViewItem once the tracked hand has hovered over it for two seconds.
    public class TreeviewGestureItemBehavior : Behavior<TreeView>
    {
        private bool inBounds;
        private Timer selectedTimer = new Timer();
        private TreeViewItem selectedTreeview;
        private TreeViewItem previousTreeView;
        // Bounds of the item currently under the hand. Rect is a struct, so it can
        // never be null; Rect.Empty is used instead because it contains no points.
        private Rect selectedRectangle = Rect.Empty;

        protected override void OnAttached()
        {
            PipelineManager.Instance.Gesture.HandMoved += Gesture_HandMoved;
            selectedTimer.Interval = 2000; // dwell time in milliseconds
            selectedTimer.AutoReset = true;
            selectedTimer.Elapsed += selectedTimer_Elapsed;
            base.OnAttached();
        }

        protected override void OnDetaching()
        {
            PipelineManager.Instance.Gesture.HandMoved -= Gesture_HandMoved;
            selectedTimer.Stop();
            selectedTimer.Elapsed -= selectedTimer_Elapsed;
            base.OnDetaching();
        }

        // System.Timers.Timer fires on a thread-pool thread, so marshal to the UI thread.
        void selectedTimer_Elapsed(object sender, ElapsedEventArgs e)
        {
            selectedTimer.Stop();
            Dispatcher.Invoke(DispatcherPriority.Normal,
                (Action)delegate()
                {
                    // The hand may have left the item before this delegate runs.
                    if (selectedTreeview == null) return;
                    if (previousTreeView != null)
                    {
                        previousTreeView.IsSelected = false;
                    }
                    previousTreeView = selectedTreeview;
                    selectedTreeview.IsSelected = true;
                });
        }

        void Gesture_HandMoved(object sender, Goldlight.Perceptual.Sdk.Events.GesturePositionEventArgs e)
        {
            Dispatcher.Invoke((Action)delegate()
            {
                Point pt = new Point(e.X - ((MainWindow)App.Current.MainWindow).GetWidth() / 2,
                    e.Y - ((MainWindow)App.Current.MainWindow).GetHeight() / 2);
                Rect rect = AssociatedObject.RenderTransform.TransformBounds(
                    new Rect(0, 0, AssociatedObject.ActualWidth, AssociatedObject.ActualHeight));
                if (rect.Contains(pt))
                {
                    inBounds = true;
                    // Only hit-test again once the hand leaves the current item.
                    if (!selectedRectangle.Contains(pt))
                    {
                        GetElement(pt);
                    }
                }
                else if (inBounds)
                {
                    selectedTimer.Stop();
                    inBounds = false;
                }
            }, DispatcherPriority.Normal);
        }

        private void GetElement(Point pt)
        {
            IInputElement element = AssociatedObject.InputHitTest(pt);
            if (element == null) return;
            TreeViewItem t = FindUpVisualTree<TreeViewItem>((DependencyObject)element);
            if (t != null)
            {
                if (selectedTreeview != t)
                {
                    // A new item is under the hand: restart the dwell timer.
                    selectedTimer.Stop();
                    selectedTreeview = t;
                    selectedRectangle = selectedTreeview.RenderTransform.TransformBounds(
                        new Rect(0, 0, t.ActualWidth, t.ActualHeight));
                    selectedTimer.Start();
                }
            }
            else
            {
                selectedTimer.Stop();
                selectedTreeview = null;
                selectedRectangle = Rect.Empty;
            }
        }

        // Walks up the visual tree until it reaches an element of type T (or a subclass).
        private T FindUpVisualTree<T>(DependencyObject initial) where T : DependencyObject
        {
            DependencyObject current = initial;
            while (current != null && !(current is T))
            {
                current = VisualTreeHelper.GetParent(current);
            }
            return current as T;
        }
    }
}
```

Of course, this is only useful if you have the gesture handling code that I use as well. Being a nice guy, here it is:

```csharp
using Goldlight.Perceptual.Sdk.Events;
using Goldlight.Windows8.Mvvm;
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Text;
using System.Threading;
using System.Threading.Tasks;

namespace Goldlight.Perceptual.Sdk
{
    public class GesturePipeline : AsyncPipelineBase
    {
        private WeakEvent<GesturePositionEventArgs> gestureRaised =
            new WeakEvent<GesturePositionEventArgs>();
        private WeakEvent<MultipleGestureEventArgs> multipleGesturesRaised =
            new WeakEvent<MultipleGestureEventArgs>();
        private WeakEvent<GestureRecognizedEventArgs> gestureRecognised =
            new WeakEvent<GestureRecognizedEventArgs>();

        public event EventHandler<GesturePositionEventArgs> HandMoved
        {
            add { gestureRaised.Add(value); }
            remove { gestureRaised.Remove(value); }
        }

        public event EventHandler<MultipleGestureEventArgs> FingersMoved
        {
            add { multipleGesturesRaised.Add(value); }
            remove { multipleGesturesRaised.Remove(value); }
        }

        public event EventHandler<GestureRecognizedEventArgs> GestureRecognized
        {
            add { gestureRecognised.Add(value); }
            remove { gestureRecognised.Remove(value); }
        }

        public GesturePipeline()
        {
            EnableGesture();
            searchPattern = GetSearchPattern();
        }

        private int ScreenWidth = 1024;
        private int ScreenHeight = 960;

        public void SetBounds(int width, int height)
        {
            this.ScreenWidth = width;
            this.ScreenHeight = height;
        }

        public override void OnGestureSetup(ref PXCMGesture.ProfileInfo pinfo)
        {
            pinfo.activationDistance = 70;
            base.OnGestureSetup(ref pinfo);
        }

        // Forwards each recognised gesture's label to GestureRecognized subscribers.
        public override void OnGesture(ref PXCMGesture.Gesture gesture)
        {
            var handler = gestureRecognised;
            if (handler != null)
            {
                handler.Invoke(new GestureRecognizedEventArgs(gesture.label.ToString()));
            }
            base.OnGesture(ref gesture);
        }

        // Called for each frame: queries the tracked hand node and raises HandMoved.
        public override bool OnNewFrame()
        {
            try
            {
                if (!IsDisconnected())
                {
                    var gesture = QueryGesture();
                    PXCMGesture.GeoNode singleNode;
                    searchPattern = PXCMGesture.GeoNode.Label.LABEL_BODY_HAND_PRIMARY;
                    var status = gesture.QueryNodeData(0, GetSearchPattern(), out singleNode);
                    if (status >= pxcmStatus.PXCM_STATUS_NO_ERROR)
                    {
                        var handler = gestureRaised;
                        if (handler != null)
                        {
                            // -85 and -75 are hard-coded offsets applied to the image-space position.
                            handler.Invoke(new GesturePositionEventArgs(singleNode.positionImage.x - 85,
                                singleNode.positionImage.y - 75,
                                singleNode.body.ToString(),
                                ScreenWidth,
                                ScreenHeight));
                        }
                    }
                }
                Sleep(20);
            }
            catch (Exception ex)
            {
                Log(ex);
            }
            // Returning true keeps the frame loop running.
            return true;
        }

        private static readonly object SyncLock = new object();

        // Waits on a lock that nothing pulses, so this is effectively a short sleep.
        private void Sleep(int time)
        {
            lock (SyncLock)
            {
                Monitor.Wait(SyncLock, time);
            }
        }

        private PXCMGesture.GeoNode.Label searchPattern;

        private PXCMGesture.GeoNode.Label GetSearchPattern()
        {
            return PXCMGesture.GeoNode.Label.LABEL_BODY_HAND_PRIMARY |
                PXCMGesture.GeoNode.Label.LABEL_FINGER_INDEX |
                PXCMGesture.GeoNode.Label.LABEL_FINGER_MIDDLE |
                PXCMGesture.GeoNode.Label.LABEL_FINGER_PINKY |
                PXCMGesture.GeoNode.Label.LABEL_FINGER_RING |
                PXCMGesture.GeoNode.Label.LABEL_FINGER_THUMB |
                PXCMGesture.GeoNode.Label.LABEL_HAND_FINGERTIP;
        }
    }
}
```

In order to use this, you’ll need my AsyncPipelineBase class:

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Text;
using System.Threading;
using System.Threading.Tasks;

namespace Goldlight.Perceptual.Sdk
{
    public abstract class AsyncPipelineBase : UtilMPipeline
    {
        private readonly object SyncLock = new object();

        // Runs the frame loop on a background task for the lifetime of the application.
        public async void Run()
        {
            try
            {
                await Task.Run(() =>
                {
                    while (true)
                    {
                        // LoopFrames returns if the camera isn't connected...
                        this.LoopFrames();
                        // ...so wait a second before trying again.
                        lock (SyncLock)
                        {
                            Monitor.Wait(SyncLock, 1000);
                        }
                    }
                });
            }
            finally
            {
                this.Dispose();
            }
        }
    }
}
```

If the gesture camera isn't connected to the computer, the call to LoopFrames drops out, which means that we have to maintain a loop for the lifetime of the application. I'm simply wrapping this in a while loop that waits for a second whenever LoopFrames drops out. This seems to be a reasonable interval for the application to wait before it tries again.
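Because the loop in AsyncPipelineBase is `while (true)`, its `finally` block (and so `Dispose`) only runs if something throws. If you want a clean shutdown, one option is to drive the retry loop with a `CancellationToken`, as in this sketch. `PipelineRunner` is hypothetical and `runFrames` stands in for `LoopFrames`; waiting on the token's `WaitHandle` gives the same one-second pause, but wakes immediately on cancellation.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class PipelineRunner
{
    // Keeps a frame loop alive: whenever runFrames returns (for example because
    // no camera is connected), wait retryDelay and try again until cancelled.
    // 'runFrames' is a hypothetical stand-in for UtilMPipeline.LoopFrames.
    public static Task RunAsync(Action runFrames, TimeSpan retryDelay, CancellationToken token)
    {
        return Task.Run(() =>
        {
            while (!token.IsCancellationRequested)
            {
                runFrames();
                // WaitOne returns true as soon as cancellation is requested,
                // so shutdown doesn't have to wait out the full delay.
                if (token.WaitHandle.WaitOne(retryDelay)) break;
            }
        });
    }
}
```

The caller can then dispose the pipeline after awaiting the returned task, rather than relying on a `finally` that never runs.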

Another big change for me this week was dropping the carousel. I know it seems as though I'm chopping and changing the interface constantly, but that's what happens in development: you try something out, refine it, and drop it if there's a better way to do things. There were three main reasons for dropping the carousel:

- Selecting a filter from elsewhere in the carousel also triggered the action associated with that filter. This could have been overcome, but the way it looked would have been compromised.

- I put the carousel in place to give me a chance to use some of the pre-canned gestures. Unfortunately, I found using them to be far too cumbersome and “laggy”. I grew impatient waiting for it to cycle through the commands.

- Using gestures with this just didn’t feel natural or intuitive. This was the biggest issue as far as I’m concerned.

Fortunately, the modular design of Huda allowed me to take the items that were associated with the carousel and just drop them into another container with no effort. Two minutes later, I had the filters laid out in a different way. The downside is that Huda is becoming less and less like a Perceptual application as the interface favours touch more and more. Hmmmm, okay, I'll revisit that statement. Huda feels less and less like an application that is trying too hard to be Perceptual. Over the last couple of weeks of development, I'll be augmenting the touch interface with complementary gestures. For instance, I'd like to experiment with using a pinch gesture to trigger a 2x zoom. As the core infrastructure is in place, all I would really need to do is hook the pinch recognition into the zoom command by passing a message. Now you see why I've gone with MVVM and message passing.
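As a rough illustration of that pinch-to-zoom idea, this sketch routes recognised gesture labels (like the label string that `GestureRecognizedEventArgs` carries) to actions. `GestureCommandRouter`, `ZoomState` and the `"pinch"` label are hypothetical placeholders, not the SDK's real gesture label names. In Huda, the mapped action would send a message rather than call a ViewModel directly.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical zoom state that a view model might own.
public sealed class ZoomState
{
    public double Factor { get; private set; } = 1.0;
    public void ZoomBy(double multiplier) => Factor *= multiplier;
}

// Hypothetical router: the gesture pipeline only knows labels, never
// which part of the application reacts to them.
public sealed class GestureCommandRouter
{
    private readonly Dictionary<string, Action> commands =
        new Dictionary<string, Action>(StringComparer.OrdinalIgnoreCase);

    public void Map(string gestureLabel, Action command) => commands[gestureLabel] = command;

    // Returns false for gestures nobody has mapped; they are simply ignored.
    public bool OnGestureRecognized(string gestureLabel)
    {
        if (!commands.TryGetValue(gestureLabel, out var command)) return false;
        command();
        return true;
    }
}
```

Usage: `router.Map("pinch", () => zoom.ZoomBy(2.0));` and then call `router.OnGestureRecognized(label)` from the GestureRecognized handler.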

Part of the reason for the change in the interface came about because of something Steve talked about this week regarding “UserLaziness”. Originally, the left and right hand sides of the screen held the operations that the user can perform to open photographs and to manipulate the lists, but this just didn't feel quite right. I expect the user to reorder the filters far less frequently than they add them, so it seems to me that we should rearrange the interface a bit: move the filters to the bottom of the screen and put the filter selection at the right hand side, where it sits more naturally under the right thumb. This also means that the toolbar has become superfluous; its commands should be in that same location, minimizing the movements you have to make.

One thing that is easy to overlook is that this is an Ultrabook application first and foremost, so it really should make use of Ultrabook features. As a test, I hooked the accelerometer up to the filters, so shaking the Ultrabook adds, appropriately enough, a blur filter.
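The post doesn't say how a shake is detected, so here is one assumed approach rather than Huda's actual code. Treat a reading whose magnitude strays from 1 g (gravity at rest) by more than a threshold as a jolt, and count a shake when enough jolts land within a short window. `ShakeDetector` is hypothetical and the thresholds are placeholders to tune on real hardware. It's also worth checking whether the platform's sensor API already offers a shake notification before rolling your own.

```csharp
using System;

// Hypothetical shake detector fed with accelerometer readings in g.
public sealed class ShakeDetector
{
    private readonly double threshold;   // deviation from 1 g that counts as a jolt
    private readonly int requiredJolts;  // jolts needed within the window
    private readonly TimeSpan window;
    private int joltCount;
    private DateTime firstJolt;

    public ShakeDetector(double threshold, int requiredJolts, TimeSpan window)
    {
        this.threshold = threshold;
        this.requiredJolts = requiredJolts;
        this.window = window;
    }

    // Returns true when this reading completes a shake.
    public bool AddReading(double x, double y, double z, DateTime timestamp)
    {
        double magnitude = Math.Sqrt(x * x + y * y + z * z);
        if (Math.Abs(magnitude - 1.0) < threshold) return false; // roughly at rest

        if (joltCount == 0 || timestamp - firstJolt > window)
        {
            // Start a new window with this jolt.
            joltCount = 1;
            firstJolt = timestamp;
        }
        else
        {
            joltCount++;
        }

        if (joltCount >= requiredJolts)
        {
            joltCount = 0; // reset so one shake adds exactly one filter
            return true;
        }
        return false;
    }
}
```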

This Week's Music

- AC/DC – For Those About To Rock

- AC/DC – Back In Black

- Bon Jovi – What About Now

- Eric Johnson – Ah Via Musicom

- Wolfmother – Cosmic Egg

- Airbourne – Runnin Wild

- DeeExpus – The King Of Number 33

- Dream Theater – A Dramatic Turn Of Events

Status Update

So where are we now? Well, Huda feels like an Ultrabook app to me first and foremost. When I start it up, I don't really need to use the mouse or keyboard at all; the desktop side of it isn't as necessary because the Ultrabook makes it easy to use touch to accomplish pretty much everything. I'm not too happy with the pinch zoom and touch drag though, as they do very, very odd things. I'll sort that out so that they behave much closer to what you'd expect from a photo editing package.

There's one point of design that I'd like to touch upon. Nicole raised an interesting point about not saving the filtered version of the photo in the same location as the original. My intention was always to have a separate Export directory in which to store the images, but I still haven't decided where that's going to be. So, Nicole, here's your chance to really shape an application to do what you want: where would you like to see exported images reside?

Finally, while playing around with adding filters, it quickly becomes apparent that it's possible to add them far faster than they can be applied and rendered. There are things I can do to help with this, but I'm making the tough call that the most important thing for me to concentrate on right now is adding more gestures and making sure that Huda is as robust and well tested as I can possibly make it. If there's time, and it doesn't impact stability, I will speed up filter application. The issue stems from the Bitmap and BitmapSource conversions that happen in a lot of the filters. As the filters are reapplied to the base image every time, these conversions can happen on every render. I could cache earlier image conversions and just apply the new filter, but reordering the filters would still incur this overhead. As I say, I'll assess how best to fit this in.
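The caching idea in that paragraph can be sketched generically: keep the result after each filter, so appending a filter costs one application, and reordering only recomputes from the first position that changed. `FilterChainCache` is hypothetical, and `TImage` would be a cached Bitmap or BitmapSource in practice (which costs memory per cached step, and Bitmaps would need disposing when evicted). Here it's left generic so the logic can be checked with plain integers.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical cache of intermediate results for a filter chain:
// cache[i] is the base image with filters[0..i] applied.
public sealed class FilterChainCache<TImage>
{
    private readonly TImage baseImage;
    private readonly List<Func<TImage, TImage>> filters = new List<Func<TImage, TImage>>();
    private readonly List<TImage> cache = new List<TImage>();

    public FilterChainCache(TImage baseImage) { this.baseImage = baseImage; }

    // Number of filter applications performed so far (to show the savings).
    public int Applications { get; private set; }

    // Appending never invalidates earlier results.
    public void Append(Func<TImage, TImage> filter) => filters.Add(filter);

    // Reordering invalidates everything from the first affected position onwards.
    public void Move(int from, int to)
    {
        var filter = filters[from];
        filters.RemoveAt(from);
        filters.Insert(to, filter);
        int firstChanged = Math.Min(from, to);
        if (firstChanged < cache.Count) cache.RemoveRange(firstChanged, cache.Count - firstChanged);
    }

    public TImage Render()
    {
        // Resume from the last cached result, or from the base image.
        TImage current = cache.Count > 0 ? cache[cache.Count - 1] : baseImage;
        for (int i = cache.Count; i < filters.Count; i++)
        {
            current = filters[i](current);
            cache.Add(current);
            Applications++;
        }
        return current;
    }
}
```

With three filters, appending a fourth costs one application, while moving the last filter to the front re-runs all of them, which matches the reordering overhead described above.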

Well, that’s me for this week. Thanks for reading and if there’s anything you need to know, please let me know.

A developer for over 30 years, I've been lucky enough to write articles and applications for Code Project as well as the Intel Ultimate Coder - Going Perceptual challenge. I live in the North East of England with 2 wonderful daughters and a wonderful wife.

I am not the Stig, but I do wish I had Lotus Tuned Suspension.
