Introduction

First of all, let's clear up the term serialization:

In computer science, in the context of data storage and transmission, serialization is the process of converting a data structure or object state into a format that can be stored (for example, in a file or memory buffer, or transmitted across a network connection link) and "resurrected" later in the same or another computer environment. (Wikipedia)

Serialization is the process of converting the state of an object into a form that can be persisted or transported. The complement of serialization is deserialization, which converts a stream into an object. (MSDN)

Serialization techniques may roughly be divided into two types: human-readable and binary. The .NET Framework provides the BinaryFormatter class for binary serialization and the XmlSerializer class for the human-readable kind.

Most sources recommend using BinaryFormatter instead of XmlSerializer when the serialized data needs to be smaller. I almost never use XmlSerializer, because it imposes some restrictions on your classes. BinaryFormatter, however, is used rather often, and I have something to say about it here.

Background

I started using BinaryFormatter when .NET Framework 2.0 was released. There was no WCF yet, and binary serialization was used by Remoting.

We were developing an application that needed its own message-based protocol, and we were free to choose how the messages were encoded. We decided to try BinaryFormatter, and it worked OK. For some time. When we tried sending a thousand messages in a loop, we ran into the TCP zero window condition, which meant that the receiving side could not deserialize the messages fast enough.

Examining the network traffic, I was extremely surprised to find that each message was about 1,000 bytes long, while encoding it manually, byte by byte, would have taken at most 70 bytes.

BinaryFormatter and manual approach

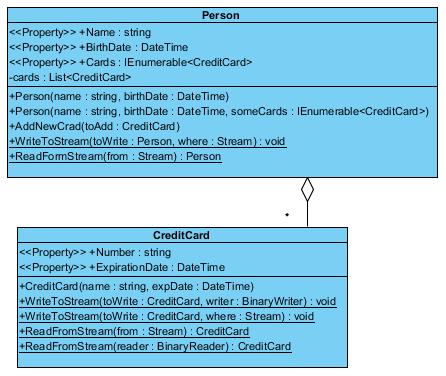

In the example application, we've got two "useful" classes - Person aggregating CreditCard, like it's shown in the picture:

We're going to serialize objects of the Person class. To serialize them with BinaryFormatter, we need to add the Serializable attribute to the Person class definition and to every class that Person aggregates. Then we're allowed to write:

    BinaryFormatter formatter = new BinaryFormatter();
    formatter.Serialize(stream, toSerialize);

where stream and toSerialize are variables of type Stream and Person, respectively.

Things become a bit harder when implementing manual serialization. Instead of adding a Serializable attribute, we have to provide methods of our own that do the writing and reading. In the example, these are the static members WriteToStream and ReadFromStream of the serialized classes. Then we write:

    Person.WriteToStream(toSerialize, stream);

and that's it.
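The bodies of WriteToStream and ReadFromStream are not reproduced in the text, so here is a minimal sketch of what such a pair might look like, assuming BinaryWriter/BinaryReader and a plain field-by-field layout. The property names (Name, BirthDate, Number, ValidTo, Cards) and the BinaryWriter-based overloads on CreditCard are assumptions made for illustration; the code in the download may differ.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;

// Hypothetical stand-ins for the article's classes.
public class CreditCard
{
    public string Number { get; }
    public DateTime ValidTo { get; }

    public CreditCard(string number, DateTime validTo)
    {
        Number = number;
        ValidTo = validTo;
    }

    public static void WriteToStream(CreditCard card, BinaryWriter writer)
    {
        writer.Write(card.Number);              // length-prefixed UTF-8 string
        writer.Write(card.ValidTo.ToBinary());  // DateTime as a 64-bit integer
    }

    public static CreditCard ReadFromStream(BinaryReader reader)
    {
        // Fields must be read in exactly the order they were written.
        string number = reader.ReadString();
        DateTime validTo = DateTime.FromBinary(reader.ReadInt64());
        return new CreditCard(number, validTo);
    }
}

public class Person
{
    private readonly List<CreditCard> cards = new List<CreditCard>();

    public string Name { get; }
    public DateTime BirthDate { get; }
    public IReadOnlyList<CreditCard> Cards => cards;

    public Person(string name, DateTime birthDate, IEnumerable<CreditCard> cards)
    {
        Name = name;
        BirthDate = birthDate;
        this.cards.AddRange(cards);
    }

    public void AddNewCard(CreditCard card) => cards.Add(card);

    public static void WriteToStream(Person person, Stream stream)
    {
        // leaveOpen: true, so the caller keeps ownership of the stream.
        using (var writer = new BinaryWriter(stream, Encoding.UTF8, true))
        {
            writer.Write(person.Name);
            writer.Write(person.BirthDate.ToBinary());
            writer.Write(person.cards.Count);   // element count precedes the elements
            foreach (CreditCard card in person.cards)
                CreditCard.WriteToStream(card, writer);
        }
    }

    public static Person ReadFromStream(Stream stream)
    {
        using (var reader = new BinaryReader(stream, Encoding.UTF8, true))
        {
            string name = reader.ReadString();
            DateTime birthDate = DateTime.FromBinary(reader.ReadInt64());
            int count = reader.ReadInt32();
            var cards = new List<CreditCard>(count);
            for (int i = 0; i < count; ++i)
                cards.Add(CreditCard.ReadFromStream(reader));
            return new Person(name, birthDate, cards);
        }
    }
}
```

With this layout, a Person named "Kate" holding one card whose number is a GUID string takes 5 + 8 + 4 + 37 + 8 = 62 bytes: nothing is written except the field values, the string length prefixes, and the card count.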

Using the code

Now that we have briefly explored the two techniques, let's examine the testing code.

    static byte[] getBytesWithBinaryFormatter(Person toSerialize)
    {
        MemoryStream stream = new MemoryStream();
        BinaryFormatter formatter = new BinaryFormatter();
        formatter.Serialize(stream, toSerialize);
        return stream.ToArray();
    }

    static byte[] getBytesWithManualWrite(Person toSerialize)
    {
        MemoryStream stream = new MemoryStream();
        Person.WriteToStream(toSerialize, stream);
        return stream.ToArray();
    }

    static void testSize(Person toTest)
    {
        byte[] fromBinaryFormatter = getBytesWithBinaryFormatter(toTest);
        Console.WriteLine("\tWith binary formatter: {0} bytes",
            fromBinaryFormatter.Length);

        byte[] fromManualWrite = getBytesWithManualWrite(toTest);
        Console.WriteLine("\tManual serializing: {0} bytes", fromManualWrite.Length);
    }

    static void testTime(Person toTest, int timesToTest)
    {
        // The serialized arrays are kept so that they can be deserialized afterwards.
        List<byte[]> binaryFormatterResultArrays = new List<byte[]>();
        List<byte[]> manualWritingResultArrays = new List<byte[]>();

        // 1. Serializing with BinaryFormatter.
        DateTime start = DateTime.Now;
        for (int i = 0; i < timesToTest; ++i)
            binaryFormatterResultArrays.Add(getBytesWithBinaryFormatter(toTest));
        TimeSpan binaryFormatterSerializingTime = DateTime.Now - start;
        Console.WriteLine("\tBinary formatter serializing: {0} seconds",
            binaryFormatterSerializingTime.TotalSeconds);

        // 2. Serializing manually.
        start = DateTime.Now;
        for (int i = 0; i < timesToTest; ++i)
            manualWritingResultArrays.Add(getBytesWithManualWrite(toTest));
        TimeSpan manualSerializingTime = DateTime.Now - start;
        Console.WriteLine("\tManual serializing: {0} seconds",
            manualSerializingTime.TotalSeconds);

        // 3. Deserializing with BinaryFormatter.
        BinaryFormatter formatter = new BinaryFormatter();
        start = DateTime.Now;
        foreach (var next in binaryFormatterResultArrays)
            formatter.Deserialize(new MemoryStream(next));
        TimeSpan binaryFormatterDeserializingTime = DateTime.Now - start;
        Console.WriteLine("\tBinaryFormatter desrializing: {0} seconds",
            binaryFormatterDeserializingTime.TotalSeconds);

        // 4. Deserializing manually.
        start = DateTime.Now;
        foreach (var next in manualWritingResultArrays)
            Person.ReadFromStream(new MemoryStream(next));
        TimeSpan manualDeserializingTime = DateTime.Now - start;
        Console.WriteLine("\tManual desrializing: {0} seconds",
            manualDeserializingTime.TotalSeconds);
    }

    static void addCards(Person where, int howMany)
    {
        for (int i = 0; i < howMany; ++i)
        {
            where.AddNewCard(new CreditCard(Guid.NewGuid().ToString(),
                new DateTime(2012, 12, 24)));
        }
    }

    static void Main(string[] args)
    {
        Person aPerson = new Person("Kate", new DateTime(1986, 2, 1),
            new CreditCard[] { new CreditCard(Guid.NewGuid().ToString(),
                new DateTime(2012, 12, 24)) });

        Console.WriteLine("Size test: 1 card");
        testSize(aPerson);

        Console.WriteLine("Time test: 1 card, 100 000 times");
        testTime(aPerson, 100000);

        addCards(aPerson, 10000);

        Console.WriteLine("Size test: 10 000 cards");
        testSize(aPerson);

        Console.WriteLine("Time test: 10 000 cards, 100 times");
        testTime(aPerson, 100);

        Console.ReadKey();
    }

First, size and time tests are performed on a Person with one CreditCard object. Then the same tests are performed on a Person with 10,000 credit cards :).

And here is the output from my machine:

    Size test: 1 card
        With binary formatter: 896 bytes
        Manual serializing: 62 bytes
    Time test: 1 card, 100 000 times
        Binary formatter serializing: 4,24 seconds
        Manual serializing: 0,28 seconds
        BinaryFormatter desrializing: 4,57 seconds
        Manual desrializing: 0,169 seconds
    Size test: 10 000 cards
        With binary formatter: 640899 bytes
        Manual serializing: 450062 bytes
    Time test: 10 000 cards, 100 times
        Binary formatter serializing: 4,713 seconds
        Manual serializing: 0,527 seconds
        BinaryFormatter desrializing: 4,519 seconds
        Manual desrializing: 0,57 seconds

Based on these results and on the knowledge we have, let's compare the two techniques.

Size

Serializing a single message with BinaryFormatter is expensive because of the metadata it writes along with the data. Manual serialization gives a real benefit here: in the example, the resulting byte array is about 14 times smaller (896 vs. 62 bytes). However, when we serialize an array of objects, the difference in size is not so large: the manually serialized array is about 1.4 times smaller in our example (640,899 vs. 450,062 bytes).
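To see why the gap shrinks, it helps to split each result into a fixed part and a per-card part. The sketch below only does arithmetic on the figures from the output above; the class and member names are made up for this illustration.

```csharp
using System;

// Arithmetic on the sizes reported in the test output (hypothetical helper).
public static class SizeArithmetic
{
    const double FormatterOneCard = 896, ManualOneCard = 62;
    const double FormatterManyCards = 640899, ManualManyCards = 450062;
    const int ExtraCards = 10000; // cards added between the two size tests

    // Overall ratios: about 14.45 for one card, about 1.42 for many cards.
    public static double RatioOneCard => FormatterOneCard / ManualOneCard;
    public static double RatioManyCards => FormatterManyCards / ManualManyCards;

    // Cost of each additional card: about 64 bytes vs. exactly 45 bytes.
    public static double FormatterBytesPerCard =>
        (FormatterManyCards - FormatterOneCard) / ExtraCards;
    public static double ManualBytesPerCard =>
        (ManualManyCards - ManualOneCard) / ExtraCards;
}
```

So each extra card costs BinaryFormatter roughly 64 bytes against 45 for the manual format. My reading is that most of the 896 bytes is a one-time cost that stops mattering once the payload is large, but that is an inference from these numbers, not something I verified in the serialized stream.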

Speed

We get a nice speed benefit for both a single object and an array of objects. For one Person with one CreditCard object, manual serialization is 4.24 / 0.28 ≈ 15 times faster. For a Person with 10,000 CreditCard objects, the manual approach is still almost 9 times faster (4.713 / 0.527 ≈ 8.9). Deserialization shows a similar picture: about 27 times faster for the single object (4.57 / 0.169) and about 8 times faster for the large one (4.519 / 0.57).

Speed tests usually call for the machine parameters and a table showing the dependencies. You are welcome to run the tests yourself and draw such a table. I'm not trying to give precise results, only the overall picture.
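If you do rerun the tests, System.Diagnostics.Stopwatch is usually a better clock for this than DateTime.Now, because it is meant for measuring elapsed time. Here is a small sketch of a timing helper; the Timing class and the Measure method are names I made up for this illustration.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper for repeating a measurement.
public static class Timing
{
    // Calls the action once without timing it (so that JIT compilation is
    // not counted), then times `iterations` further calls.
    public static TimeSpan Measure(Action action, int iterations)
    {
        action(); // warm-up call, not measured

        Stopwatch watch = Stopwatch.StartNew();
        for (int i = 0; i < iterations; ++i)
            action();
        watch.Stop();

        return watch.Elapsed;
    }
}
```

It could be called like `Timing.Measure(() => getBytesWithManualWrite(aPerson), 100000)`, printing `TotalSeconds` of the result as the test code does.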

Versioning

Protocols change, and your initial message format may be very different from the one you end up with three years later. Fields get added and removed. Because we are looking at serialization in a networking context, we should keep in mind that all peers have to cope gracefully with changes in the message format.

With the manual approach we have two options: either update all peers whenever the format changes, or write our own logic for handling messages of other versions.
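One common way to do the latter is to start every message with a version number and let the reader branch on it. The sketch below assumes a hypothetical Message class whose Priority field was added in version 2; none of these names come from the example project.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical message type used only to illustrate a version prefix.
public class Message
{
    public const byte CurrentVersion = 2;

    public string Text { get; set; }
    public int Priority { get; set; } // added in version 2

    public static void WriteToStream(Message message, Stream stream)
    {
        using (var writer = new BinaryWriter(stream, Encoding.UTF8, true))
        {
            writer.Write(CurrentVersion); // a single byte, always written first
            writer.Write(message.Text);
            writer.Write(message.Priority);
        }
    }

    public static Message ReadFromStream(Stream stream)
    {
        using (var reader = new BinaryReader(stream, Encoding.UTF8, true))
        {
            byte version = reader.ReadByte();
            if (version == 0 || version > CurrentVersion)
                throw new NotSupportedException("Unknown message version: " + version);

            var message = new Message { Text = reader.ReadString() };
            // Version 1 messages end here, so fall back to a default value.
            message.Priority = version >= 2 ? reader.ReadInt32() : 0;
            return message;
        }
    }
}
```

This lets a new reader accept old messages. An old reader still cannot understand a newer format, so that direction has to be rejected or handled in some other agreed way.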

In .NET, new fields can be marked as optional (with the OptionalField attribute), so they may or may not be present in the message. Of course, that doesn't solve all problems: a new-version host still has to handle both the presence and the absence of optional fields when it receives a message.
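As a sketch of what that looks like in code, here is a second version of a hypothetical class with one field added later. The class, its fields, and the "unknown" default are invented for the illustration; OptionalField and OnDeserializing are the standard attributes from System.Runtime.Serialization.

```csharp
using System;
using System.Runtime.Serialization;

// Hypothetical second version of a serializable class.
[Serializable]
public class PersonV2
{
    public string Name;

    // Added in version 2: streams written by version 1 do not contain it,
    // and the attribute tells the formatter not to treat that as an error.
    [OptionalField(VersionAdded = 2)]
    public string Email;

    // Runs before the fields are filled in, so the value set here survives
    // only when the stream has no Email field.
    [OnDeserializing]
    private void SetDefaults(StreamingContext context)
    {
        Email = "unknown";
    }
}
```

Handling the absence of the field then comes down to choosing a sensible default like this one, or checking for it after deserialization.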

Development speed

Manual serialization takes time, both to develop and to test. If you have version-handling logic, then it will take even more time. The standard .NET methods save you all this time.

Technologies

BinaryFormatter is used in WCF when you're using the TCP binding. Theoretically it is possible to plug in your own formatter; however, I've never heard of any good implementation.

Structure complexity impact

BinaryFormatter is capable of serializing an object of any complexity. This means that even if the objects in your system form a graph with cycles, it will be serialized correctly. Likewise, serializing (and deserializing!) a leaf class from a class hierarchy is not a problem.

Things are not so rosy with manual serialization. A class that doesn't aggregate any others is the trivial case. When you have a tree of objects (a Person with 100 CreditCard objects inside is a tree), things get more complicated. A class hierarchy brings even more complexity, and having to cope with cycles makes your code really hard to follow. Personally, I've never used manual serialization for object graphs that might contain cycles.
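For the curious, one way cycles could be handled by hand is to number the objects first and then write references as numbers. This is only a sketch under my own assumptions (a hypothetical Node class with non-null names), not something taken from the example project.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;

// Hypothetical graph node; Name must not be null.
public class Node
{
    public string Name;
    public List<Node> Links = new List<Node>();
}

public static class GraphSerializer
{
    public static void Write(Node root, Stream stream)
    {
        // Pass 1: give every reachable node an id. The dictionary compares
        // nodes by reference, so a cycle cannot make us loop forever.
        var ids = new Dictionary<Node, int>();
        var order = new List<Node>();
        var pending = new Stack<Node>();
        ids[root] = 0;
        order.Add(root);
        pending.Push(root);
        while (pending.Count > 0)
        {
            foreach (Node next in pending.Pop().Links)
            {
                if (ids.ContainsKey(next)) continue; // already numbered
                ids[next] = order.Count;
                order.Add(next);
                pending.Push(next);
            }
        }

        // Pass 2: write the node data, then the links as ids.
        using (var writer = new BinaryWriter(stream, Encoding.UTF8, true))
        {
            writer.Write(order.Count);
            foreach (Node node in order)
                writer.Write(node.Name);
            foreach (Node node in order)
            {
                writer.Write(node.Links.Count);
                foreach (Node link in node.Links)
                    writer.Write(ids[link]);
            }
        }
    }

    public static Node Read(Stream stream)
    {
        using (var reader = new BinaryReader(stream, Encoding.UTF8, true))
        {
            // Create all nodes first so that links can point anywhere.
            var nodes = new Node[reader.ReadInt32()];
            for (int i = 0; i < nodes.Length; ++i)
                nodes[i] = new Node { Name = reader.ReadString() };

            foreach (Node node in nodes)
            {
                int linkCount = reader.ReadInt32();
                for (int j = 0; j < linkCount; ++j)
                    node.Links.Add(nodes[reader.ReadInt32()]);
            }
            return nodes[0]; // the root was given id 0
        }
    }
}
```

Even this small version needs two passes and an id table, which gives an idea of how much bookkeeping BinaryFormatter is doing for you.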

Summary

Pros for BinaryFormatter:

- ability to use WCF or other technologies
- no need to spend time implementing the serialization mechanism
- versioning support is built in
- objects of any structural complexity can be processed

Pros for manual serializing:

- the smallest size can be achieved
- the best performance can be achieved

Points of interest

Manual serializing: leaf class serializing

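Serializing a leaf class of a hierarchy raises several questions in the serializing and especially the deserializing code. For instance, the reader has to find out which concrete class to create before it can read any fields.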
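One possible answer is to write a small type tag in front of each object and let a single read method dispatch on it. The Packet hierarchy below is hypothetical and exists only to show the idea.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical hierarchy; each concrete class owns one tag value.
public abstract class Packet
{
    protected abstract byte TypeTag { get; }
    protected abstract void WriteBody(BinaryWriter writer);

    public static void WriteToStream(Packet packet, Stream stream)
    {
        using (var writer = new BinaryWriter(stream, Encoding.UTF8, true))
        {
            writer.Write(packet.TypeTag); // tells the reader which class follows
            packet.WriteBody(writer);
        }
    }

    public static Packet ReadFromStream(Stream stream)
    {
        using (var reader = new BinaryReader(stream, Encoding.UTF8, true))
        {
            byte tag = reader.ReadByte();
            switch (tag)
            {
                case TextPacket.Tag: return new TextPacket(reader.ReadString());
                case PingPacket.Tag: return new PingPacket(reader.ReadInt64());
                default: throw new InvalidDataException("Unknown packet type tag: " + tag);
            }
        }
    }
}

public sealed class TextPacket : Packet
{
    public const byte Tag = 1;
    public string Text { get; }
    public TextPacket(string text) { Text = text; }
    protected override byte TypeTag => Tag;
    protected override void WriteBody(BinaryWriter writer) => writer.Write(Text);
}

public sealed class PingPacket : Packet
{
    public const byte Tag = 2;
    public long Ticks { get; }
    public PingPacket(long ticks) { Ticks = ticks; }
    protected override byte TypeTag => Tag;
    protected override void WriteBody(BinaryWriter writer) => writer.Write(Ticks);
}
```

The price is that the base class has to know every leaf class, so each new class means touching the switch as well.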

Manual serializing: where to locate serialization code?

Actually, this is a continuation of the previous point of interest. In this example I put the code into static methods of the respective classes. This approach can be justified by the Information Expert GRASP pattern. Everything looks neat in the example project because there is no class hierarchy, but the approach with static methods gets dirty once a class hierarchy appears. Maybe this point could be covered in the next article.

protobuf-net: http://protobuf-net.googlecode.com. An interesting project and really hard work. I ran the same tests with its serializer and got the same size characteristics as for the manual approach! However, in a Debug build its time consumption is even worse than BinaryFormatter's (thanks to Sam Cragg for the remark about the build configuration). You can download the code (92.5 KB), which is the same project as in the example plus the protobuf-net check, to see that for yourself. A Release build of the protobuf-net tests is also available (86.6 KB). The Release build showed 3-5 times better timings than BinaryFormatter, and worse timings than the manual approach.

Why is BinaryFormatter so slow and space-consuming?

History

- 10.01.2012 - First upload.

- 13.01.2012 - An update to reflect remarks from feedback.
