In my previous article, I demonstrated how you can access the 2D positions of the eyes, nose, and mouth, using Microsoft’s Kinect Face API. The Face API provides us with some basic, yet impressive, functionality: we can detect the X and Y coordinates of 4 eye points and identify a few facial expressions using just a few lines of C# code. This is pretty cool for basic applications, like Augmented Reality games, but what if you need more advanced functionality from your app?

Recently, we decided to extend our Kinetisense project with advanced facial capabilities. More specifically, we needed to access more facial points, including lips, jaw and cheeks. Moreover, we needed the X, Y and Z position of each point in the 3D space. Kinect Face API could not help us, since it was very limited for our scope of work.

Thankfully, Microsoft has implemented a second Face API within the latest Kinect SDK v2. This API is called HD Face and is designed to blow your mind!

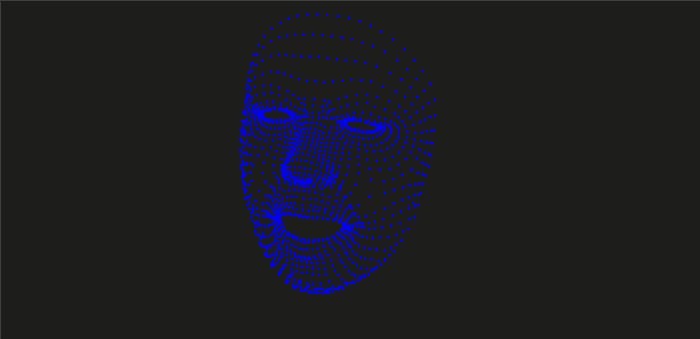

At the time of writing, HD Face is the most advanced face tracking library out there. Not only does it detect the human face, but it also allows you to access over 1,000 facial points in the 3D space. Real-time. Within a few milliseconds. Not convinced? I developed a basic program that displays all of these points. Creepy, huh?!

In this article, I am going to show you how to access all these points and display them on a canvas. I’ll also show you how to use Kinect HD Face efficiently and get the most out of it.

Prerequisites

Source Code

Tutorial

Although Kinect HD Face is truly powerful, you’ll notice that it’s badly documented, too. Insufficient documentation makes it hard to understand what’s going on inside the API. Part of the reason is that HD Face is supposed to provide advanced, low-level functionality: it gives us access to raw facial data. We, the developers, are responsible for properly interpreting the data and using it in our applications. Let me guide you through the whole process.

Step 1: Create a New Project

Let’s start by creating a new project. Launch Visual Studio and select File -> New Project. Select C# as your programming language and choose either the WPF or the Windows Store app template. Give your project a name and start coding.

Step 2: Import the Required Assemblies

To use Kinect HD Face, we need to import 2 assemblies: Microsoft.Kinect.dll and Microsoft.Kinect.Face.dll. Right-click your project name and select “Add Reference”. Navigate to the Extensions tab and select those assemblies.

If you are using WinRT, Microsoft.Kinect is called WindowsPreview.Kinect.

Step 3: XAML

The user interface is pretty simple. Open your MainWindow.xaml or MainPage.xaml file and place a drawing canvas within your grid. Preferably, you should add the canvas within a Viewbox element. The Viewbox element will let your Canvas scale proportionally as the window size changes. No additional effort from your side.

    <Viewbox Grid.Row="1">
        <Canvas Name="canvas" Width="512" Height="424" />
    </Viewbox>

Step 4: Declare the Kinect HD Face Objects

After typing the XAML code, open the corresponding C# file (MainWindow.xaml.cs or MainPage.xaml.cs) and import the Kinect namespaces.

For .NET 4.5, import the following:

    using Microsoft.Kinect;
    using Microsoft.Kinect.Face;

For WinRT, import the following:

    using WindowsPreview.Kinect;
    using Microsoft.Kinect.Face;

So far, so good. Now, let’s declare the required objects. Like Kinect Face Basics, we need to define the proper body source, body reader, HD face source, and HD face reader:

    private KinectSensor _sensor = null;

    private BodyFrameSource _bodySource = null;
    private BodyFrameReader _bodyReader = null;

    private HighDefinitionFaceFrameSource _faceSource = null;
    private HighDefinitionFaceFrameReader _faceReader = null;

    private FaceAlignment _faceAlignment = null;
    private FaceModel _faceModel = null;

    private List<Ellipse> _points = new List<Ellipse>();

Step 5: Initialize Kinect and body/face Sources

As usual, we’ll first need to initialize the Kinect sensor, as well as the frame readers. HD Face works just like any ordinary frame: we need a face source and a face reader. The face reader is initialized using the face source. The reason we need a Body source/reader is that each face corresponds to a specific body. You can’t track a face without tracking its body first. The FrameArrived event will fire whenever the sensor has new face data to give us.

    _sensor = KinectSensor.GetDefault();

    if (_sensor != null)
    {
        // Body tracking: needed to find the body that owns the face.
        _bodySource = _sensor.BodyFrameSource;
        _bodyReader = _bodySource.OpenReader();
        _bodyReader.FrameArrived += BodyReader_FrameArrived;

        // HD Face tracking: the reader is created from the face source.
        _faceSource = new HighDefinitionFaceFrameSource(_sensor);
        _faceReader = _faceSource.OpenReader();
        _faceReader.FrameArrived += FaceReader_FrameArrived;

        _faceModel = new FaceModel();
        _faceAlignment = new FaceAlignment();

        _sensor.Open();
    }

Step 6: Connect a Body with a Face

The next step is a little tricky. This is how we connect a body to a face. How do we do this? Simply by setting the TrackingId property of the face source to the TrackingId of the body.

    // Requires "using System.Linq;" for Where and FirstOrDefault.
    private void BodyReader_FrameArrived(object sender, BodyFrameArrivedEventArgs e)
    {
        using (var frame = e.FrameReference.AcquireFrame())
        {
            if (frame != null)
            {
                Body[] bodies = new Body[frame.BodyCount];
                frame.GetAndRefreshBodyData(bodies);

                // Pick the first body that is currently tracked, if any.
                Body body = bodies.Where(b => b.IsTracked).FirstOrDefault();

                // Assign a body only while the face source is not following one.
                if (!_faceSource.IsTrackingIdValid)
                {
                    if (body != null)
                    {
                        _faceSource.TrackingId = body.TrackingId;
                    }
                }
            }
        }
    }
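The handler above simply takes the first tracked body. When several people are in view, you may prefer to follow the one closest to the sensor. Below is a minimal sketch of that selection logic. `BodyInfo` and `BodySelector` are hypothetical stand-ins (not SDK types) so the snippet runs on its own; with the real SDK, the distance would presumably come from `body.Joints[JointType.Head].Position.Z`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the few members of Kinect's Body used here.
public class BodyInfo
{
    public ulong TrackingId;
    public bool IsTracked;
    public float HeadZ; // assumed: distance of the head joint from the sensor, in meters
}

public static class BodySelector
{
    // Returns the tracked body whose head is closest to the sensor,
    // or null when no body is tracked.
    public static BodyInfo Closest(IEnumerable<BodyInfo> bodies)
    {
        BodyInfo closest = null;

        foreach (BodyInfo body in bodies)
        {
            if (body == null || !body.IsTracked) continue;

            if (closest == null || body.HeadZ < closest.HeadZ)
            {
                closest = body;
            }
        }

        return closest;
    }
}
```

The TrackingId of the returned body is what you would assign to the face source.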

So, we have connected a face with a body. Let’s access the face points now.

Step 7: Get and Update the Facial Points!

Dive into the FaceReader_FrameArrived event handler. We need to check two conditions before accessing any data. First, the frame must not be null. Second, the frame must contain a tracked face. Once both conditions hold, we can call the GetAndRefreshFaceAlignmentResult method, which updates the facial points and properties.

The facial points are given as an array of vertices. A vertex is a 3D point (with X, Y, and Z coordinates) that describes the corner of a geometric triangle. We can use vertices to construct a 3D mesh of the face. For the sake of simplicity, we’ll simply draw each vertex as a 2D point. Microsoft’s SDK Browser contains a 3D mesh of the face you can experiment with.

    private void FaceReader_FrameArrived(object sender, HighDefinitionFaceFrameArrivedEventArgs e)
    {
        using (var frame = e.FrameReference.AcquireFrame())
        {
            if (frame != null && frame.IsFaceTracked)
            {
                frame.GetAndRefreshFaceAlignmentResult(_faceAlignment);
                UpdateFacePoints();
            }
        }
    }

    private void UpdateFacePoints()
    {
        if (_faceModel == null) return;

        var vertices = _faceModel.CalculateVerticesForAlignment(_faceAlignment);
    }

As you can see, vertices is a list of CameraSpacePoint objects. CameraSpacePoint is a Kinect-specific structure that contains information about a 3D point.

Hint: we have already used CameraSpacePoints when we performed body tracking.

Step 8: Draw the Points on Screen

And now, the fun part: we have a list of CameraSpacePoint objects and a list of Ellipse objects. We’ll add the ellipses within the canvas and we’ll specify their exact X & Y position.

Caution: The X, Y, and Z coordinates are measured in meters! To properly find the corresponding pixel values, we’ll use Coordinate Mapper. Coordinate Mapper is a built-in mechanism that converts 3D space positions to 2D screen positions.
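To get a feel for why meters cannot be used as pixels directly, here is a rough sketch of a generic pinhole projection, the textbook way of turning a 3D camera-space point into 2D pixel coordinates. It is not how Coordinate Mapper is implemented. The intrinsic values (`FocalX`, `FocalY`, `CenterX`, `CenterY`), the sign conventions, and the `PinholeProjection` class itself are all assumptions made for illustration.

```csharp
using System;

public static class PinholeProjection
{
    // Hypothetical camera intrinsics, in pixels. Real values differ per device.
    public const float FocalX = 365f;
    public const float FocalY = 365f;
    public const float CenterX = 256f;
    public const float CenterY = 212f;

    // Projects a camera-space point (in meters) to pixel coordinates.
    // Returns false when the point is not in front of the camera.
    public static bool TryProject(float x, float y, float z,
                                  out float pixelX, out float pixelY)
    {
        pixelX = float.NegativeInfinity;
        pixelY = float.NegativeInfinity;

        if (z <= 0f) return false;

        // Dividing by depth makes distant points move toward the image center.
        pixelX = CenterX + FocalX * x / z;

        // Assumed convention: camera Y points up, image Y points down.
        pixelY = CenterY - FocalY * y / z;

        return true;
    }
}
```

In the application itself, stick with Coordinate Mapper, as the code that follows does, rather than hand-rolled constants like these.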

    private void UpdateFacePoints()
    {
        if (_faceModel == null) return;

        var vertices = _faceModel.CalculateVerticesForAlignment(_faceAlignment);

        if (vertices.Count > 0)
        {
            // Create one ellipse per vertex the first time we receive data.
            if (_points.Count == 0)
            {
                for (int index = 0; index < vertices.Count; index++)
                {
                    Ellipse ellipse = new Ellipse
                    {
                        Width = 2.0,
                        Height = 2.0,
                        Fill = new SolidColorBrush(Colors.Blue)
                    };

                    _points.Add(ellipse);
                }

                foreach (Ellipse ellipse in _points)
                {
                    canvas.Children.Add(ellipse);
                }
            }

            // Move each ellipse to the depth-space position of its vertex.
            for (int index = 0; index < vertices.Count; index++)
            {
                CameraSpacePoint vertex = vertices[index];
                DepthSpacePoint point = _sensor.CoordinateMapper.MapCameraPointToDepthSpace(vertex);

                // Skip a vertex that cannot be mapped, but keep updating the rest.
                if (float.IsInfinity(point.X) || float.IsInfinity(point.Y)) continue;

                Ellipse ellipse = _points[index];

                Canvas.SetLeft(ellipse, point.X);
                Canvas.SetTop(ellipse, point.Y);
            }
        }
    }

That’s it. Build the application and run it. Stand between 0.5 and 2 meters from the sensor. Here’s the result:

But Wait!

OK, we drew the points on screen. So what? Is there a way to actually understand what each point is? How can we identify where the eyes are? How can we detect the jaw? The API has no built-in mechanism to get a human-friendly representation of the face data. We need to handle over 1,000 points in the 3D space manually!

Don’t worry, though. Each one of the vertices has a specific index number. Knowing the index number, you can easily deduce which part of the face it corresponds to. For example, the vertices numbered 1086, 820, 824, 840, 847, 850, 807, 782, and 755 belong to the left eyebrow.
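One way to work with such index lists is to group them by facial region and average the matching vertices into a single anchor point per feature. The sketch below assumes that approach. `Point3` is a hypothetical stand-in for CameraSpacePoint so the snippet runs without the SDK, and `FaceRegions` and `Centroid` are illustrative names, not part of the Kinect API. The eyebrow indexes are the ones listed above.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for Kinect's CameraSpacePoint (X, Y, Z in meters).
public struct Point3
{
    public float X, Y, Z;

    public Point3(float x, float y, float z)
    {
        X = x;
        Y = y;
        Z = z;
    }
}

public static class FaceRegions
{
    // Vertex indexes of the left eyebrow, as listed in the article.
    public static readonly int[] LeftEyebrow =
        { 1086, 820, 824, 840, 847, 850, 807, 782, 755 };

    // Averages the vertices at the given indexes into a single point.
    // Indexes that fall outside the vertex list are skipped.
    public static Point3 Centroid(IList<Point3> vertices, int[] indexes)
    {
        float x = 0f, y = 0f, z = 0f;
        int count = 0;

        foreach (int index in indexes)
        {
            if (index < 0 || index >= vertices.Count) continue;

            Point3 v = vertices[index];
            x += v.X;
            y += v.Y;
            z += v.Z;
            count++;
        }

        // No usable vertex: return the origin rather than dividing by zero.
        if (count == 0) return new Point3(0f, 0f, 0f);

        return new Point3(x / count, y / count, z / count);
    }
}
```

Calling `FaceRegions.Centroid(vertices, FaceRegions.LeftEyebrow)` on every frame would give you one 3D point that follows the eyebrow, which is easier to reason about than nine separate vertices.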

Similarly, you can find accurate semantics for every point. Just play with the API, experiment with its capabilities and build your own next-gen facial applications!

If you wish, you can use the Color, Depth, or Infrared bitmap generator and display the camera view behind the face. Keep in mind that simultaneous bitmap and face rendering may cause performance issues in your application. So, handle with care and do not over-use your resources.

Source Code

PS: I’ve been quite silent during the past few months. It was not my intention and I really apologize for that. My team was busy developing the Orthosense app for Intel’s International Competition. We won the GRAND PRIZE and we were featured on USA Today. From now on, I promise I’ll be more active in the Kinect community. Please keep sending me your comments and emails.

Till the next time, enjoy Kinecting!

The post How to use Kinect HD Face appeared first on Vangos Pterneas.
