Below is for Visual Studio 2017 integration:

Introduction

I've long been dissatisfied with the parser generator offerings available. Either they are limited and clunky like Cocos/R or they are huge and involved, like the latest renditions of ANTLR. Worse, their grammars can get overly complicated to the point of being unreadable, or they use complex engines like GLR or LL(k) parsing with FA to handle the parsing process, making them both more difficult to implement, and to use.

I also didn't care for how few of them expose a pull parser - something you can loop on and call read to get a chunk at a time. This is essential for parsing huge documents like mountains of JSON data, or even just anything with a big enough parse tree.

Originally, I was going to write a parser generator that allowed you to choose the underlying algorithm to use as a way of solving some of these limitations until I stumbled onto a much better and seemingly uncommon way to use a very simple algorithm - LL(1) - to parse very complex grammars and yield reasonable parse trees. Since it's LL, it allows for simple reading and traversal during the parse.

Background

To follow much of this, you'll probably need to be at least a little familiar with parser generators. If you aren't, I'll try to keep things as boiled down as I can, and the bits that are unfamiliar probably aren't important for using the end result, just understanding the full mechanics behind it.

I'm going to avoid talking code here, and instead talk about grammars. First, let's define what we mean by grammar, in this case, a Context Free grammar (Type-2 on the Chomsky hierarchy if you care)

All a grammar is essentially, is a series of rules. A rule is a left hand side, followed by one or more symbols on the right hand side, *or* epsilon, which can appear once, and by itself.

What is a symbol? It's just a handle. It can be anything, as long as you can get back to it, and find it again. For example, a symbol can be the string "foo" and you can tell if it's the same as another string because the string is the same.

A -> a B

A -> C B d

Here we have two rules, with the same left hand side symbol, and a list of symbols on the right hand side.

A group of rules with the same left hand side are collectively known as a "non-terminal". Here, we have defined the non-terminal A with two rules. We can reference it in any other rule, and we can even make a non-terminal self-referential / recursive. We can also of course define other non-terminals in the same grammar such as:

B -> B A

B -> A

B -> <epsilon>

Epsilon simply means "nothing" or "empty" here.

How do the rules define a parse? Well, we use these rules to get an idea of the hierarchy of the grammar we are parsing. And we've not covered terminals yet, just non-terminals.

Non-terminals are the nodes of the parse tree, terminals are the leaves. A Context Free Grammar can reference terminals by symbol, but does not care how they are defined. Usually terminals are defined using small regular expressions and string literals

Above, we can see that B references A. B also references itself. What this does is define a loop of zero or more times, using substitution. Each iteration, A gets replaced by the definition of A which is parsed, and B gets replaced by yet another instance of B. Luckily, we have the two other rules which define the termination of the loop - either A by itself, or nothing (empty/epsilon)

Let's stop right there. This may work fine for a computer, but it's a downright awful way to define something to parse. So rather than teach you about all the specifics here, let's just say a CFG is that ugly thing.

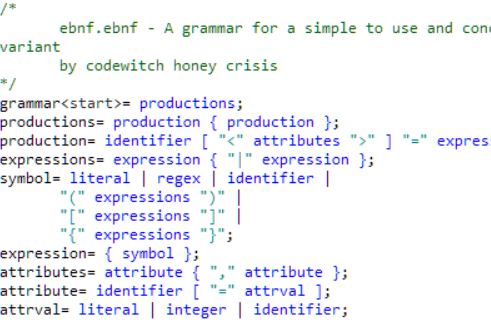

Fortunately, we don't have to make them by hand. We can use something like EBNF to describe expressions which can then produce these grammars. Consider the following self describing EBNF grammar:

grammar<start>=productions;

productions= productions production | production;

production=identifier ["<" attributes ">"] "=" expressions ";";

expressions= expressions "|" expression | expression;

symbol = literal | regex | identifier |

"(" expressions ")" |

"[" expressions "]" |

"{" expressions "}";

expression = { symbol };

attributes= attributes "," attribute | attribute;

attribute= identifier ["=" attrval];

attrval= literal | integer | identifier;

literal = '"([^"\\]|\\.)*"';

regex = '\'([^\'\\]|\\.)*\'';

identifier= '[A-Z_a-z][\-0-9A-Z_a-z]*';

integer='\-?[0-9]+';

whitespace<hidden>= '[ \v\f\t\r\n]';

lineComment<hidden>= '//[^\n]*';

blockComment<hidden,blockEnd="*/">="/*";

Sorry for the lack of syntax highlighting!

As you study this, assuming you know basic regex, it should start to come together. This describes an EBNF grammar in the syntax it describes.

[ ] optionally match whatever occurs between{ } repeatedly match whatever occurs between( ) standard grouping< > attribute sets - special declarators that modify or specify the grammar or parse tree in some way" " string literal' ' regex expression - non-backtracking, basic.| alternation - simple concatenation is implicit just like it is in regex.

This is a much easier way to describe a syntax! We can create a CFG from this. The trouble is, the CFG won't work out of the box with some common parser algorithms, including the one that ships with this article. The grammar is not "LL(1)" which just means there are two rules with the same left hand and same first right hand sides.

The grammar is also left-recursive because of expressions, which means it can create an infinite loop. We don't want that.

Fortunately, there are mathematical workarounds for these issues. They can be solved by what's known as left factoring. Left factoring at Tutorials Point

What we have to do is refactor the CFG grammar in order to make it usable with our little LL(1) parser.

The included project handles this. The problem with left factoring is that it bloats the parse tree. You have to create additional rules to workaround the limitations of the parsing algorithm. The extra rules mean extra non-terminals, which in turn means extra useless nodes on the parse tree. "What's the big deal!" you say. I say, talk to me when you've parsed a C# file that uses 7 nested non-terminals just to start an expression. Also, the deeper the parse tree, the more complicated and resource intensive it is to parse and schlep around.

What I've done is I've allowed the non-terminals and terminals to have attributes associated with them. You'll notice a few in the above EBNF. (Above "hidden", "start", and "blockEnd")

Here are the ones currently supported:

type - must be a literal string representing either a .NET or C# intrinsic type name - used on terminals for coercing the string value from the lexer into the specified type using System.ComponentModel.TypeConverter framework in .NET so you can add your own. See the MS docs.hidden - boolean, used on terminals for telling the parser to throw them away and not report them. This is useful for whitespace and comments, and can be useful in some other contexts too, like CDATA sections in HTML if you don't want to parse themblockEnd - string literal, used on terminals to specify a skip end-point for things like C and HTML comment blocks. These constructs are not easily matchable with a non-backtracking FA, so this is a special mode (but common to parsers) to simply skip until it finds the specified string literal in the output. It then takes that entire text and reports it as a terminal. Specifying "*/" would be useful for C block comments. Specifying "]]>" would be useful for CDATA sections. Specifying "-->" is useful for SGML/XML/HTML comments.

(not used above:)substitute - string literal, used on terminals or non-terminals to indicate a substitute symbol (which must be defined) to report in the place of this symbol. Does not impact the parsing, just the reporting of the parse. The same rules are still followed and internally the symbol still has its underlying identity - it's just never reported.

Another you don't see is one of the most powerful ones the system uses behind the scenes. It's called "collapse" and all it does is replace itself with a list of its children. In other words, it removes itself from the parse tree, and moves its children up the chain to its parent node, so it basically "hides" from the tree, but propagates its children.

Adding "collapse" to any non-terminals as created during left-factoring has yielded parse trees that look exactly like the grammar that generated them would suggest.

If you're familiar with parser generators you know that last bit is a tall order when the EBNF grammars are as expressive as the above.

Using the Code

As I usually do, I will be providing sample code here to do most of the talking. This builds on my regular expression engine.

Please note that while the API is fairly easy once you know how, this is a research project I started, and I haven't done much refactoring or commenting. I decided to submit it as is because I'll be working on it for the next year, and I'm at least two weeks out from a git hub submission, so you fine readers get it first. Vote for Pedro! (or me)

var grammarfile = @"..\..\..\ebnf.ebnf";

EbnfDocument doc = EbnfDocument.ReadFrom(grammarfile);

var cfg = doc.ToCfGrammar();

Console.WriteLine(cfg);

Console.WriteLine("Preparing grammar for LL(1) parsing.");

var msgs = cfg.PrepareLL1();

bool hasError = false;

foreach (var m in msgs) {

if (CfgErrorLevel.Error == m.ErrorLevel)

hasError = true;

Console.WriteLine(m);

}

if (hasError)

return;

Console.WriteLine();

Console.WriteLine("Modified grammar follows:");

Console.WriteLine();

Console.WriteLine(cfg);

Console.WriteLine();

var lexer = doc.ToLexer(cfg);

Console.WriteLine("Generating Table Driven Parser");

try

{

File.Delete(@"..\..\..\EbnfLLTableDrivenParser.Generated.cs");

}

catch { }

using (StreamWriter sw = new StreamWriter

(File.OpenWrite(@"..\..\..\EbnfLLDrivenTableParser.Generated.cs")))

cfg.WriteCSharpTableDrivenLL1ParserClassTo(sw, "EbnfLLTableDrivenParser", null, lexer);

Console.WriteLine("Generating Compiled Parser");

try

{

File.Delete(@"..\..\..\EbnfLLCompiledParser.Generated.cs");

}

catch { }

using (StreamWriter sw = new StreamWriter

(File.OpenWrite(@"..\..\..\EbnfLLCompiledParser.Generated.cs")))

cfg.WriteCSharpCompiledLL1ParserClassTo(sw, "EbnfLLCompiledParser", null, lexer);

LLParser parser = cfg.ToLL1Parser(lexer, null);

Console.WriteLine("Using generated parser.");

parser.Close();

var pc = ParseContext.CreateFromFile(grammarfile);

parser = new EbnfLLTableDrivenParser(pc);

parser.Restart(ParseContext.CreateFromFile(grammarfile));

OutputParseTo(Console.Out, parser, cfg);

parser.Close();

parser.Restart(ParseContext.CreateFromFile(grammarfile));

var tree = parser.ParseSubtree();

parser.Close();

Console.WriteLine(tree.ToString(cfg));

I've included a simple expression evaluator and the "newt" command line utility to generate code.

I've also added the remedial syntax highlighter back.

It's still an early submission - needs about a year of work before I'll be super happy with it but I'm already using it and it's fun, and pretty powerful.

If you still run VS2017 like I do, you may need to install the 4.7.2 or 4.8 targeting packs for Visual Studio to be able to build some of these projects.

The Other Beast, Cauldron

I've included a separate set of binaries for a VSIX package that integrates Newt into Visual Studio as a "custom tool" - the source for the project is too big to distribute here, but I'll offer it on request.

After installing it, what you do is you add a grammar to your project, set the custom tool property of the document in the solution explorer to NewtParserGenerator

Now, whenever you edit that file, and save it, code is generated automatically. The created class is <Input Grammar Filename>Parser so for example Ebnf.ebnf would create Ebnf.cs with the class EbnfParser underneath it. Eventually, I may add an attribute to the grammars that specify its incode representation so you can alter the class name, but for now, this is how it's done.

Cauldron has other generators, which I'll mention briefly here. Use the specified names in the Custom Tool field to instantiate the tool for a given input file. If you have questions about how this works in Visual Studio, use the comments here to ask them.

MergeMinifyPreprocessor takes an input file with a list of filenames in it (file relative path) and produces a single "brick" of minified C# source code you can more easily redistribute. I use it internally for my own projects, when I want to pull a chunk of code out of my massive codebase I've built. You can also specify ;foo to include the line "foo" somewhere in the list as raw text. Put it between files. Or at the top. using ;; will make it always appear at the top.RegexGenerator: I wouldn't use this if I were you. It works, but explaining why you would even use it requires its own article. It's for building hand rolled parsers with partial FA lexers in them. It also doesn't generate classes that support complex error recovery yet.TinyT4Preprocessor: I've run application centric, inproc webservers served by code generated with this little monster. All it is, is a barebones T4 processor that only generates C# and does not require Microsoft's CodeDom nuget package. The only template directive it supports is template but you shouldn't need the others for this. It supports basic T4. It has one major advantage over Microsoft's T4 engine as well - it streams. So you can use it with chunked transfer encoding in HTTP for better performance on large documents. Microsoft's does not stream for some reason. However, it's of primary import not for serving web pages, but for writing C# code generation routines. I more often just use more C# code, not HTML, in between those tags!

Points of Interest

I think this might be very similar to the algorithm used by NorKen Programmar, but I can't be sure because they never disclosed theirs. I didn't try to imitate them, but I do use something similar to their EBNF format because it's the cleanest I've seen.

History

- May 11, 2019: Initial submission

- May 13, 2019: Updated

- Removed the pure compiled parser support because it was too slow, and with it, I removed pre C#7 support.

- The generated table driven parser is just so much faster, I couldn't justify keeping the alternative.

- Added command line tool support and a new sample app. Removed the syntax highlighter since it wasn't a .NET core app. New updates coming shortly, including a .NET Core web based syntax highlighter that accepts your grammars.

- May 14, 2019: Updated

- I've added support back for later versions of the .NET framework. Previous revisions didn't work due to a different dictionary constructor in .NET core. I've also optimized table parser generation some, at the cost of a little more memory.

- I've included an additional sample now that .NET framework is back. The highlighter is back too.

Just a shiny lil monster. Casts spells in C++. Mostly harmless.

General

General  News

News  Suggestion

Suggestion  Question

Question  Bug

Bug  Answer

Answer  Joke

Joke  Praise

Praise  Rant

Rant  Admin

Admin