This fourth article in a series on portable multithreaded programming using OpenCL™ will discuss the OpenCL™ runtime and demonstrate how to perform concurrent computations among the work queues of heterogeneous devices. The previous article (part 3) introduced the OpenCL execution model and discussed how to share data and coordinate computations among the work items in a work group.

The example source code from part 3 will be adapted to utilize multiple command queues to allow experimentation with concurrent kernel execution across both CPU and GPU devices. A complete example will be provided to use multiple queues and provide the reader with a framework to experiment with random number generators (RNGs) in a massively parallel environment. Concurrent execution is initiated succinctly via an OpenMP pragma.

The source code in this article also demonstrates a simple yet useful preprocessor capability to pass C++ template types to an OpenCL kernel. This makes changing atomic types (e.g. float, double, int, long, etcetera) for performance evaluation as easy as editing a template type parameter. For example, changing OclTestClass<float> to OclTestClass<double> will utilize double-precision capability in both the C++ OclTestClass and the OpenCL kernel(s) built by the class!

It is important that OpenCL developers understand the thinking behind the OpenCL runtime model that allows developers to create application binaries that can automatically adjust – without recompilation - to run efficiently on a wide variety of hardware configurations. In short, programmers can use OpenCL command queue execution and events to specify runtime dependencies between arbitrary queued commands – effectively defining the links in a dependency graph that can be optimized by the runtime to best use the available hardware configuration and resources.

As has been discussed in the previous two tutorials, OpenCL provides the ability for developers to specify computational kernels that will recompile at runtime to utilize a wide variety of devices (e.g. CPU, GPU, DSP, Cell, and others). This powerful capability embodies a core aspect of OpenCL portable parallelism by allowing applications to utilize different hardware, even hardware that has not been designed yet, without requiring programmer intervention or even rebuilding the application binary.

However, the ability to run on many heterogeneous devices is of limited use unless the developer can also coordinate the work – again without recompilation or programmer intervention - to best use whatever hardware configuration happens to be available to the application at runtime. Defining dependency graphs among tasks embodies a second core aspect on how the OpenCL design facilitates “portable parallelism” as developers can define critical dependencies while giving the runtime the ability to choose the best order of execution of independent tasks to best exploit a given hardware configuration. In this way, OpenCL applications have the ability to run unchanged on a single CPU/GPU system, systems with multiple GPUs, and even distributed clusters that utilize MPI (Message Passing Interface) or other distributed communications frameworks.

Defining dependencies, distributing data and computation, coordinating tasks, and load-balancing in a hybrid CPU/GPU environment are very active and exciting areas of current research. Assuming the programmer partitions the work into a number of separate tasks, there is much interest in creating easy to use methods and software runtime packages that can:

- Associate and tie data to a computation as well as define efficient data layouts among multiple device memory address spaces.

- Define and enforce data dependencies between tasks.

- Load-balance work in a transparent fashion among multiple heterogeneous computational units.

- Expand the previous capabilities to work in both a threaded and distributed message passing environment.

- Build core libraries similar to the BLAS and LAPACK libraries on top of this infrastructure.

OpenCL offers an important foundation as noted in the paper, “StarPU: a unified platform for task scheduling on heterogeneous multicore architectures”:

[T]he OpenCL initiative is clearly a valuable attempt in providing a common programming interface for CPUs, GPGPUs, and possibly other accelerators. However, the OpenCL API is a very low-level one which basically offers primitives for explicitely [sic] offloading tasks or moving data between coprocessors. It provides no support for task scheduling or global data consistency, and thus cannot be considered as a true “runtime system”, but rather as a virtual device driver.

Much of the research and API specification is happening right now through exciting projects like MAGMA (Matrix Algebra on GPU and Multicore Architectures), which has the potential to become a new standard mathematical library API like BLAS and LAPACK, plus projects like Vancouver that seek to build on the portable parallelism of OpenCL to carry computing into the exascale era. All of the following projects allow users to download and freely use their software and/or read their papers.

- The Dague (Directed Acyclic Graph Environment) provides critical capabilities including a distributed multi-level dynamic scheduler, an asynchronous communication engine and a data dependencies engine. This library can be freely downloaded.

- MOSIX provides an example of an OpenCL distributed capability that is described in the paper “A Package for OpenCL Based Heterogeneous Computing on Clusters with Many GPU Devices”.

- While not strictly OpenCL related, it is worth noting that projects such as the MPC (MultiProcessor Computing) project are working to transition MPI from a process to thread-oriented runtime to reduce overhead and increase performance, which holds promise in moving thread-oriented OpenCL codes to distributed computational clusters.

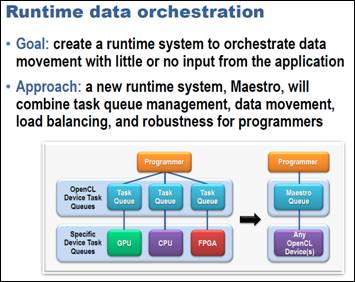

The potential and benefits of a unified runtime system is summarized in the following slide (slide 3) in Benedict Gaster’s October 2010 presentation for the OpenCL Programming Webinar Series:

The OpenCL Runtime

The host program generally implements the glue code that binds computational kernels together to create an application. As noted in part 3, various work-items can be grouped together into work-groups which also allows sharing and synchronization between work-items inside the group. It is assumed that the majority of the computational work will occur in the OpenCL kernels. Thus, the logic of the host application is generally focused on building the kernels; setting up the OpenCL contexts and devices; dispatching work to the devices through one or more command-queues; and coordinating the application workload task synchronization between all the kernels and command queues so that all data dependencies are satisfied – regardless of execution order - while also giving the runtime as much flexibility as possible in scheduling the work.

Both kernels and command-queues operate within a context that contains the state of the environment in which the kernels are executed. As discussed in part 2, OpenCL utilizes a relaxed memory consistency model that requires the programmer ensure that memory is used consistently among concurrent and asynchronous OpenCL operations. This makes the programmer responsible for synchronization of operations within a work-group, between the tasks in a command-queue, and across multiple-command queues.

The command queue is one of two mechanisms by which kernel execution is coordinated and data dependencies are enforced. For example, it would be very bad if a kernel that operates on some set of data were to run before the transfer operation that initializes the data on the device. Program crashes, incorrect and non-deterministic results are obvious consequences of using initialized memory. (The second mechanism is atomic synchronization operations among work items in a work group.)

Previous examples in this tutorial series utilized the simplest in-order, single device, and single queue implementation. This simplified the code as each queued task will be executed sequentially on the device in the order that it was placed in the queue. Since the data transfers are queued first and followed by the kernel invocation(s), the data dependencies are guaranteed to be satisfied without the need for additional code or complexity.

Synchronization is required when there are multiple queues and/or when out-of-order command execution is specified. In these cases, events must be utilized to enforce synchronization within and between queues in a context. (A special case also occurs when tasks are added to a queue asynchronously in a multi-threaded host application as the order of insertion is not guaranteed.)

The type of queue behavior (in-order vs. out-of-order) is specified when the queue is created. By default, queues are created to issue commands in-order with clCreateCommandQueue(). However, specifying CL_QUEUE_OUT_OF_ORDER_EXEC_MODE_ENABLE in

Queue-command properties will create an out-of-order queue. In addition, clSetCommandQueueProperty() can be used to change the queuing behavior after the queue has been created.

While the OpenCL specification allows more than a single queue to be created per device, it does not define how the runtime selects which task will be the next to run or if concurrent execution of kernels is possible on a device – assuming sufficient resources are available. Experienced parallel programmers know that asynchronous and concurrent scheduling of commands is a very good idea as it gives the runtime the greatest opportunity to maximize performance by running multiple tasks concurrently on one or many devices. Effectively, this leverages the scheduling capability of the runtime to optimize performance just as thread level parallelism allows the thread level scheduler on GPGPUs to achieve high performance.

The following host API calls are used to specify wait lists, return events and find out information about events:

- clWaitForEvents(num_events, *event_list): blocks until events are complete.

- clEnqueueMarker(queue, *event): returns an event for a marker that moves through the queue.

- clEnqueueWaitForEvents(queue, num_events, *event_list): inserts a “WaitForEvents” into the queue.

- clGetEventInfo(): gets command type and status as indicated by CL_QUEUED, CL_SUBMITTED,

CL_RUNNING, CL_COMPLETE, or an error code. - clGetEventProfilingInfo(): returns profiling information from the command queue such as submit, start, and end times.

With these API calls, very complex dependency graphs within a context can be specified. As discussed in the introduction, this provides OpenCL developers with an extremely powerful, general capability! Following is a graphic from slide 28 of the AMD tutorial demonstrating how wait events (indicated by arrows) can be used to support dependencies between two contexts – where each context contains two devices:

- C3 from Q1,1 depends on C1 & C2 from Q1,2

- C1 from Q1,4 depends on C2 from Q1,2

- In Q1,4, C3 depends on C2

It is very important to note that section 3.4.3 of the OpenCL specification is very explicit that synchronization can only occur:

- Between work-items in a single work-group.

- Among commands enqueued to command-queue(s) in a single context.(Italics were added to emphasize that synchronization can only occur within a context.)

For more coarse grained synchronization between queues and across contexts, the clFlush() and clFinish() provide a brute force capability to flush and wait for all queued commands to complete.

Derek Gerstmann’s provided an excellent presentation for SIGGRAPH ASIA 2009, “Advanced OpenCl Event Model Usage”, emphasizes the need to synchronize among command-queues in a single context. I highly recommend reviewing his slides as a continuation of this article as he walks through a number of usage models and provides easy to understand code snippets that show how to use events in various use cases including:

- 1x In-Order Queue, 1x Context, 1x Device.

- 1x Out-of-Order Queue, 1x Context, 1x Device.

- 2x In-Order Queues, 2x Separate Contexts, 2x Devices.

- 2x In-Order Queues, 1x Combined Context, 2x Devices.

Caveats and helpful hints:

- It is suggested that multiple heterogeneous devices should not be mixed within a context as noted on slide 6 of this AMD tutorial. Many factors can contribute to the performance drop. For example, some OpenCL implementations use a single thread to support a context. Mixing multiple device types can introduce delays into servicing device requests as the thread can become too slow to respond quickly to a device. Depending on the application, this can cause performance to drop.

- At this time, some OpenCL implementations exhibit poor performance when multiple queues are defined for a single device. For example, Markus Stürmer notes in his blog post, “funny things happen if you want to use multiple host threads to feed a number of OpenCL devices” that running two command-queues per device can adversely affect performance.

- Parallel memory transfers are required to get good streaming performance and can be essential to real-time applications. This thread, “Trying to get asynchronous transfer from SDK 2.3” discusses a number of caveats.

- The thread “SDK 2.3 and multiGPU”, notes that two and even three GPUs can now be used in Linux. Multiple GPUs did not work under Linux prior to SDK 2.3. However, all the GPUs need to be connected to a monitor or a dummy VGA connector.

- Out-of-order execution is not supported by the ATI drivers as of the SDK 2.3 release.

Example

There are unanswered questions about random number generators (RNG) on parallel computers. One of the easiest implementations is to provide a separate RNG per thread on the parallel computer and just use a different seed per thread. As the following article points out, such an implementation may or may not introduce artifacts and unwanted correlations into the random number sequence (link).

To help readers start investigating the interesting world of parallel RNGs, this article provides a simple framework using OpenCL that can run on variety of platforms. George Marsaglia's MWC (multiply with carry) algorithm, as describe on the Code Project, is used as a simple example of a good RNG. A sequential number generator is used as a simple counter example, because sequential numbers are certainly not random! The single-bit entropy test from ENT: A Pseudorandom Number Sequence Test Program is used to evaluate the performance of the RNGs. A more complete test would be Marsaglia's DIEHARD battery of tests.

From a code point of view, this example refactors the source code from part 3 to define a separate queue for both CPU and GPU devices. A new command-line argument, “both” has been added to run on both CPU and GPU resources. Of particular interest for flexibility and performance testing, the C++ template type parameter(s) used in the creation of the OclTest class are passed as preprocessor defines when building the OpenCL kernel(s) inside the class. Simply by changing the OclTest class template type as shown below, the OclTest can be tested using float, double, or other variable types. No change need be made to the OpenCL kernel.

OclTest<unsigned long> test1(…) OclTest<float> test1(…) OclTest<double> test1(…)

Also, OpenMP was utilized to concurrently queue commands in parallel on both devices. This provides a simple and concise way to introduce parallelism via an OpenMP pragma as seen in the code snippet below:

#pragma omp parallel for

for(int i=0; i < contextQueues.size(); i++) {

test[i].initData( contextQueues[i], (i*1000000));

contextQueues[i].enqueueNDRangeKernel(test[i].getKernel(),

cl::NullRange, test[i].getGlobalWorkItems(), test[i].getWorkItemsInWorkGroup(), NULL, test[i].getEventPtr());

contextQueues[i].finish();

}

However, queuing the commands in parallel may not be enough to start concurrent execution as noted in this thread, Using Multiple GPUs in a OpenCL program. On the 2.3 version of the AMD SDK the queue finish was needed to force concurrent execution:

contextQueues[i].finish();

More information on OpenMP can be found on the Internet. One good starting place is the Wikipedia article, OpenMP.

For convenience, the code from part 3 has been split into a main file, testRNG.cpp that handles all the glue code that specifies the queues and assigns work to them. The cl::vector template was used to make the code simple and readable. The OpenCL test class template, OclTest.hpp is included via the line

#include "OclTest1.hpp"

Following is the complete source for testRNG.cpp.

#define PROFILING // Define to see the time the kernel takes

#define __NO_STD_VECTOR // Use cl::vector instead of STL version

#define __CL_ENABLE_EXCEPTIONS // needed for exceptions

#include <CL/cl.hpp>

#include <fstream>

#include <iostream>

using namespace std;

#include "OclTest1.hpp"

void displayPlatformInfo(cl::vector< cl::Platform > platformList,

int deviceType)

{

cout << "Platform number is: " << platformList.size() << endl;

string platformVendor;

platformList[0].getInfo((cl_platform_info)CL_PLATFORM_VENDOR,

&platformVendor);

cout << "device Type "

<< ((deviceType==CL_DEVICE_TYPE_GPU)?"GPU":"CPU") << endl;

cout << "Platform is by: " << platformVendor << endl;

}

int main(int argc, char* argv[])

{

int seed=4;

if( argc < 2) {

cerr << "Use: {cpu|gpu|both} kernelFile" << endl;

exit(EXIT_FAILURE);

}

const string platformName(argv[1]);

cl::vector<int> deviceType;

cl::vector< cl::CommandQueue > contextQueues;

if(platformName.compare("cpu")==0)

deviceType.push_back(CL_DEVICE_TYPE_CPU);

else if(platformName.compare("gpu")==0)

deviceType.push_back(CL_DEVICE_TYPE_GPU);

else if(platformName.compare("both")==0) {

deviceType.push_back(CL_DEVICE_TYPE_GPU);

deviceType.push_back(CL_DEVICE_TYPE_CPU);

} else { cerr << "Invalid device type!" << endl; return(1); }

const char* kernelFile = argv[2];

try {

cl::vector< cl::Platform > platformList;

cl::Platform::get(&platformList);

for(int i=0; i < deviceType.size(); i++) {

displayPlatformInfo(platformList, deviceType[i]);

cl_context_properties cprops[3] =

{CL_CONTEXT_PLATFORM,

(cl_context_properties)(platformList[0])(), 0};

cl::Context context(deviceType[i], cprops);

cl::vector<cl::Device> devices =

context.getInfo<CL_CONTEXT_DEVICES>();

for(int j=0; j < devices.size(); j++ ) {

#ifdef PROFILING

cl::CommandQueue queue(context, devices[j],CL_QUEUE_PROFILING_ENABLE);

#else

cl::CommandQueue queue(context, devices[j],0);

#endif

contextQueues.push_back( queue );

}

}

cl::vector< OclTest<ulong> > test;

for(int i=0; i < contextQueues.size(); i++) {

test.push_back(OclTest<ulong>(contextQueues[i], kernelFile, argc-3, argv+3));

}

#pragma omp parallel for

for(int i=0; i < contextQueues.size(); i++) {

test[i].initData( contextQueues[i], (i*1000000));

contextQueues[i].enqueueNDRangeKernel(test[i].getKernel(),

cl::NullRange, test[i].getGlobalWorkItems(), test[i].getWorkItemsInWorkGroup(), NULL, test[i].getEventPtr());

contextQueues[i].finish(); }

for(int i=0; i < contextQueues.size(); i++) {

if(test[i].goldenTest( contextQueues[i] ) == 0) {

cout << "test passed" << endl;

} else {

cout << "TEST FAILED!" << endl;

}

}

} catch (cl::Error error) {

cerr << "caught exception: " << error.what()

<< '(' << error.err() << ')' << endl;

}

return EXIT_SUCCESS;

}

The C++ template header file, OclTest.hpp, provides a simple use of template specialization to get the typename of a parameter for several atomic types. Use of this method keeps it simple and does not complicate our code with too much C++ wizardry.

template<class T>

struct TypeName {

string getName();

private:

T *t;

};

template<> string TypeName<double>::getName() {return(string("double")); }

template<> string TypeName<float>::getName() {return(string("float")); }

template<> string TypeName<unsigned long>::getName() {return(string("ulong"));}

template<> string TypeName<long>::getName() { return(string("long")); }

template<> string TypeName<unsigned int>::getName() {return(string("uint"));}

template<> string TypeName<int>::getName() {return(string("int")); }

template<> string TypeName<unsigned char>::getName() {return(string("uchar"));}

template<> string TypeName<char>::getName() {return(string("char")); }

Since the kernel is built inside the instantiated class, we can pass the type to the OpenCL kernel build via preprocessor defines.

string buildOptions;

{ char buf[256];

sprintf(buf,"-D TYPE1=%s ", myType.c_str());

buildOptions += string(buf);

}

The kernel then just uses the types in the preprocessor define. Note: double-precision is an OpenCL extension that needs to be enabled in the OpenCL source code. The following pragma enables double-precision when using the AMD SDK.

#pragma OPENCL EXTENSION cl_amd_fp64 : enable

The OclTest class is now a class template that is included in testRNG.cpp.

For simplicity, this code performs one test per thread. A count of the bits containing ‘1’ is returned to the host which is used to calculate the single-bit entropy of the random number generator. It is simple to modify the code to calculate single-byte entropies as well.

Also note that the call to the constructor now only passes the command queue. This transitions the example code further away from the original C-code of Part 1 as all the device and context information can be determined as shown in the code snippet below:

cl::Device queueDevice = queue.getInfo<CL_QUEUE_DEVICE>();

std::string deviceName = queueDevice.getInfo<CL_DEVICE_NAME>();

The full source code for OclTest.hpp follows:

#include <cmath>

#include <algorithm>

template<class T>

struct TypeName {

string getName();

private:

T *t;

};

template<> string TypeName<double>::getName() {return(string("double")); }

template<> string TypeName<float>::getName() {return(string("float")); }

template<> string TypeName<unsigned long>::getName() {return(string("ulong"));}

template<> string TypeName<long>::getName() { return(string("long")); }

template<> string TypeName<unsigned int>::getName() {return(string("uint"));}

template<> string TypeName<int>::getName() {return(string("int")); }

template<> string TypeName<unsigned char>::getName() {return(string("uchar"));}

template<> string TypeName<char>::getName() {return(string("char")); }

template <typename TYPE1>

class OclTest {

private:

cl::Kernel kernel;

cl_int nTests;

cl_int vLen, vSize;

TYPE1 *h_oneCnt;

cl::Buffer d_oneCnt;

string myType;

cl::Event event;

long seed1,seed2;

int seedOffset;

unsigned long nIter;

const static double log2of10=3.32192809488736234787;

inline double rt_log2(double x) {

return log2of10 * log10(x);

}

public:

cl::Event *getEventPtr() { return &event;}

OclTest() {}

OclTest( cl::CommandQueue& queue, const char* kernelFile,

int argc, char *argv[])

{

cl::Device device = queue.getInfo<CL_QUEUE_DEVICE>();

cl::Context context = queue.getInfo<CL_QUEUE_CONTEXT>();

myType= TypeName<TYPE1>().getName();

cout << "My type is " << myType.c_str() << endl;

if(argc < 4) {

cerr << "Ocl kernel use: nTests seed1 seed2 nIterPerTest" << endl;

exit(EXIT_FAILURE);

}

nTests = atol(argv[0]);

seed1 = atol(argv[1])+seedOffset;

seed2 = atol(argv[2])+seedOffset;

nIter = atol(argv[3]);

seedOffset = 0;

vLen = nTests;

vSize = vLen * sizeof(TYPE1);

string buildOptions;

{ char buf[256];

sprintf(buf,"-D TYPE1=%s ", myType.c_str());

buildOptions += string(buf);

}

ifstream file(kernelFile);

string prog(istreambuf_iterator<char>(file),

(istreambuf_iterator<char>()));

cl::Program::Sources source( 1, make_pair(prog.c_str(),

prog.length()+1));

cl::Program program(context, source);

file.close();

try {

cerr << "buildOptions " << buildOptions << endl;

cl::vector<cl::Device> foo;

foo.push_back(device);

program.build(foo, buildOptions.c_str() );

} catch(cl::Error& err) {

cerr << "Build failed! " << err.what()

<< '(' << err.err() << ')' << endl;

cerr << "retrieving log ... " << endl;

cerr

<< program.getBuildInfo<CL_PROGRAM_BUILD_LOG>(device)

<< endl;

exit(-1);

}

string kernelName = string(kernelFile)

.substr(0,string(kernelFile).find(".cl"));

cerr << "specified kernel: " << kernelName << endl;

kernel = cl::Kernel(program, kernelName.c_str());

h_oneCnt = new TYPE1[vLen];

d_oneCnt = cl::Buffer(context, CL_MEM_READ_WRITE, vSize);

kernel.setArg(0, nIter);

kernel.setArg(1, seed1);

kernel.setArg(2, seed2);

kernel.setArg(3, d_oneCnt);

}

inline void initData(cl::CommandQueue& queue, int seed)

{

seedOffset = seed;

}

inline cl::Kernel& getKernel() { return(kernel); }

cl::NDRange getGlobalWorkItems() {

return( cl::NDRange( vLen ) );

}

cl::NDRange getWorkItemsInWorkGroup() {

return( cl::NDRange(1, 1) );

}

inline int goldenTest(cl::CommandQueue& queue)

{

event.wait();

#ifdef PROFILING

cl::Device queueDevice = queue.getInfo<CL_QUEUE_DEVICE>();

std::string deviceName = queueDevice.getInfo<CL_DEVICE_NAME>();

cl_ulong start=

event.getProfilingInfo<CL_PROFILING_COMMAND_START>();

cl_ulong end=

event.getProfilingInfo<CL_PROFILING_COMMAND_END>();

double time = 1.e-9 * (end-start);

double startTime = start * 1.e-9;

double endTime = end * 1.e-9;

cout << "Kernel (start,end) " << startTime << "," << endTime

<< " Time for kernel to execute " << time

<< " device \"" << deviceName << "\"" << endl;

#endif

queue.enqueueReadBuffer(d_oneCnt, CL_TRUE, 0, vSize, h_oneCnt);

double minBitEntropy=10;

double minBitMean=10;

for(int i=0; i < vLen; i++) {

double totalBitCount = 32*nIter;

double bitEntropy=0.;

double bitProb[2];

double bitMean = h_oneCnt[i]/totalBitCount;

bitProb[1] = ((double)h_oneCnt[i])/totalBitCount;

bitProb[0] = 1. - bitProb[1];

if( (bitProb[0] > 0.f) && (bitProb[1] > 0.f) ) {

bitEntropy = bitProb[0] * rt_log2(1./bitProb[0])

+ bitProb[1] * rt_log2(1./bitProb[1]);

}

minBitEntropy = min(minBitEntropy,bitEntropy);

minBitMean = min(minBitMean,bitMean);

}

cout << "Minimum bitEntropy " << minBitEntropy

<< " Minimum bitMean " << minBitMean << endl;

cout << "Optimum compression would reduce "

<< "the size of this random bit sequence by "

<< (1.-minBitEntropy) << '%' << endl;

if(minBitEntropy >= 0.9999) return(0);

else return(1);

}

};

The following commands build and run the code using the recently released AMD version 2.3 SDK:

echo "---------------"

export ATISTREAMSDKROOT=$HOME/AMD/ati-stream-sdk-v2.3-lnx64

export ATISTREAMSDKSAMPLESROOT=$HOME/AMD/ati-stream-sdk-v2.3-lnx64

g++ -I $ATISTREAMSDKROOT/include -fopenmp testRNG.cpp -L $ATISTREAMSDKROOT/lib/x86_64 -lOpenCL -o testRNG

Following is a simple random number generator written in OpenCL, simpleRNG.cl.

inline void SetSeed(unsigned int m_z, unsigned int u, unsigned int m_w, unsigned int v)

{

m_z +=u;

m_w +=v;

}

inline unsigned int GetUint(unsigned int m_z, unsigned int m_w)

{

m_z = 36969 * (m_z & 65535) + (m_z >> 16);

m_w = 18000 * (m_w & 65535) + (m_w >> 16);

return (m_z << 16) + (m_w & 65535);

}

inline

__kernel void simpleRNG(unsigned long n, long seed1,

long seed2,

__global TYPE1* c)

{

unsigned int m_z=521288629;

unsigned int m_w=362436069;

int index = get_global_id(0);

TYPE1 oneCnt=0;

SetSeed(m_z, seed1+index, m_w, seed2+index);

for(unsigned long iter=0; iter < n; iter++) {

unsigned int rnd = GetUint(m_z, m_w);

for(int i=0; i< 32; i++) {

if( (rnd&0x01) ) oneCnt++;

rnd = rnd >> 1;

}

}

c[index] = oneCnt;

}

Note that the two tests run concurrently on the CPU and GPU as seen in the table below. The runs start on both devices before either device completes its task.

| | Start Time | End Time |

| GPU | 42549.7 | 42557.0 |

| CPU | 42548.4 | 42563.1 |

For comparison, we provide a sequential number generator as an example “random” number generator. The code follows for badRNG.cl:

inline

__kernel void badRNG(unsigned long n, long seed1,

long seed2,

__global TYPE1* c)

{

int index = get_global_id(0);

TYPE1 oneCnt=0;

for(unsigned long iter=0; iter < n; iter++) {

unsigned int rnd = iter;

for(int i=0; i< 32; i++) {

if( (rnd&0x01) ) oneCnt++;

rnd = rnd >> 1;

}

}

c[index] = oneCnt;

}

As expected, this produces poor results. Examining the start times shows that these runs also happen concurrently on both devices.

bda$ ./testRNG both badRNG.cl 1024 1 2 1000000

Platform number is: 1

device Type GPU

Platform is by: Advanced Micro Devices, Inc.

Platform number is: 1

device Type CPU

Platform is by: Advanced Micro Devices, Inc.

My type is ulong

buildOptions -D TYPE1=ulong

specified kernel: badRNG

My type is ulong

buildOptions -D TYPE1=ulong

specified kernel: badRNG

Kernel (start,end) 42775.5,42783.1 Time for kernel to execute 7.58799 device "Cypress"

Minimum bitEntropy 0.891907 Minimum bitMean 0.308906

Optimum compresion would reduce the size of this random bit sequence by 0.108093%

TEST FAILED!

Kernel (start,end) 42774.1,42788 Time for kernel to execute 13.8672 device "AMD Phenom(tm) II X6 1055T Processor"

Minimum bitEntropy 0.891907 Minimum bitMean 0.308906

Optimum compresion would reduce the size of this random bit sequence by 0.108093%

TEST FAILED!

Summary

OpenCL advances the concept of “portable parallelism” as it is not just a language to create kernels that can run on CPUs, GPUs, DSPs, and other devices. It also defines a platform API to coordinate heterogeneous parallel computations. While the technical literature is rich with parallel coordination languages and APIs, OpenCL is unique in its ability to facilitate the coordination of kernels concurrently running on multiple heterogeneous devices. In combination, these two core capabilities (portable kernels and the ability to define and enforce data dependencies in a flexible manner) empower developers so they can create applications that can run efficiently on a variety of hardware platforms and configurations.

Key OpenCL coordination concepts include:

- Each device has its own asynchronous work queue.

- Synchronize between OpenCL computations via event handles from different (or same) devices.

- Enables algorithms and systems that use all available computational resources.

- Enqueue “native functions” for integration with C/C++ code. (A native function is one provided by a library or an operating system.)

Additional resources:

Rob Farber is a senior scientist and research consultant at the Irish Center for High-End Computing in Dublin, Ireland and Chief Scientist for BlackDog Endeavors, LLC in the US.

Rob has been on staff at several US national laboratories including Los Alamos National Laboratory, Lawrence Berkeley National Laboratory, and at Pacific Northwest National Laboratory. He also served as an external faculty member at the Santa Fe Institute, co-founded two successful start-ups, and has been a consultant to Fortune 100 companies. His articles have appeared in Dr. Dobb's Journal and Scientific Computing, among others. He recently completed a book teaching massive parallel computing.