Introduction

In this article, I will show you how to write and run a simple app that synchronizes local folders with remote Google Drive folders, using nodeJs and the latest Google Drive JavaScript SDK.

Background

I wanted to prevent specific subfolders (hidden .svn folders) from being backed up. Since Google Drive for Windows does not have such a feature, I decided to write my own solution using the Google Drive API.

NodeJs Application

The structure of our nodeJs application is fairly straightforward; it contains:

- an imports section at the top,

- followed by the main method invocation,

- and finally the main method definition

```javascript
var fs = require('fs');
var readline = require('readline');
var google = require('googleapis');
var OAuth2 = google.auth.OAuth2;
var path = require('path');
var crypto = require('crypto');
var util = require('util');

syncFolder('C:/tmp/drive123', 'dev2/test4');

function syncFolder(localFolderPath, remoteFolderPath) {
    const CREDENTIALS_FILE = "client_secret.json";
    const AUTH_FILE = "auth.json";
    const FOLDER_MIME = "application/vnd.google-apps.folder";
    var drive;

    loadCredentials(function () {
        createRemoteBaseHierarchy('root', function (folderId) {
            syncLocalFolderWithRemoteFolderId(localFolderPath, folderId);
        });
    });

    [...]
}
```

The main method has 3 steps:

- loadCredentials - Retrieves the Google API access token and generates the authorization URL as needed

- createRemoteBaseHierarchy - Creates the Google Drive remote base folder hierarchy

- syncLocalFolderWithRemoteFolderId - Synchronizes local folders with remote folders, recursively

In loadCredentials(), we start by loading the file client_secret.json, which contains both client_id and client_secret (downloaded from your Google API account; see the setup steps at the end). Then we attempt to load the file auth.json, which contains the access tokens for your Google Drive account. If the auth file is not found, the user is prompted to authorize access to the drive and to enter the resulting code at the prompt; the received tokens are saved to disk in auth.json. If the file is found, we initialize the Google Drive API credentials and move on to the next step.

In createRemoteBaseHierarchy(), we ensure that the full remote Google Drive folder hierarchy exists. If it does not, we create folders to match the path as needed. The folder ID of the last/bottom folder is used as input to the third step.

And finally, in syncLocalFolderWithRemoteFolderId(), we scan the local and remote folders and make sure that all files in the local folder exist in the remote folder, recursively. To detect changes to existing files, we compare the MD5 hash of each local file with the md5Checksum property of the matching remote file.

As you can see in the source code, the 3 functions are chained using callbacks because the Google API functions (like fs.readFile()) all run asynchronously. Suppose you tried invoking the 3 functions sequentially instead, like this:

```javascript
loadCredentials();
createRemoteBaseHierarchy('root', remoteFolderPath);
syncLocalFolderWithRemoteFolderId(localFolderPath, folderId);
```

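This does not work: loadCredentials() returns as soon as fs.readFile() has been started, and the credentials are only read later, inside the fs.readFile() callback. By the time createRemoteBaseHierarchy() runs, the drive client has not been created yet, and folderId is not known when syncLocalFolderWithRemoteFolderId() is called.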
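For comparison, here is a minimal sketch of the same chain written with Promises and async/await. It is not part of the article's source. The three step functions are hypothetical stand-ins that finish asynchronously via setImmediate, and syncLocalFolderWithRemoteFolderId is given an extra callback parameter (the original has none) so that the last step can be awaited. The callbacks receive a single result rather than Node's (err, value) pair, so util.promisify does not fit; a small hand-written wrapper, callWithCallback, is used instead.

```javascript
// Hypothetical stand-ins for the article's three steps. Each one completes
// asynchronously (via setImmediate), like the real functions that wait on
// fs.readFile() or the Drive API, and records what it did in `log`.
const log = [];

function loadCredentials(callback) {
  setImmediate(() => {
    log.push('credentials loaded');
    callback();
  });
}

function createRemoteBaseHierarchy(parentId, callback) {
  setImmediate(() => {
    log.push('hierarchy ready under ' + parentId);
    callback('folder-123'); // pretend ID of the last folder segment
  });
}

// Extra callback parameter (not in the original) so this step can be awaited.
function syncLocalFolderWithRemoteFolderId(localFolderPath, remoteFolderId, callback) {
  setImmediate(() => {
    log.push('synced ' + localFolderPath + ' -> ' + remoteFolderId);
    callback();
  });
}

// Turns "fn(...args, callback(result))" into a Promise of result.
// util.promisify expects callback(err, result), which these steps do not use.
function callWithCallback(fn, ...args) {
  return new Promise((resolve) => fn(...args, resolve));
}

// Reads top to bottom, but each await waits for the previous step's callback.
async function syncFolderSequential(localFolderPath) {
  await callWithCallback(loadCredentials);
  const folderId = await callWithCallback(createRemoteBaseHierarchy, 'root');
  await callWithCallback(syncLocalFolderWithRemoteFolderId, localFolderPath, folderId);
  return folderId;
}
```

Calling syncFolderSequential('C:/tmp/drive123') runs the three steps strictly in order. In the original code, errors are only logged and never passed to the callbacks. With this wrapper, a failed step would therefore leave its Promise pending forever, so a real version would also need to reject on error.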

To better understand this, let's take a look at loadCredentials():

```javascript
function loadCredentials(callback) {
    fs.readFile(CREDENTIALS_FILE, function (err, content) {
        if (err) { console.log('File', CREDENTIALS_FILE, 'not found in (', __dirname, ').'); return; }

        var clientSecret = JSON.parse(content);
        var keys = clientSecret.web || clientSecret.installed;
        var oauth2Client = new OAuth2(keys.client_id, keys.client_secret, keys.redirect_uris[0]);
        drive = google.drive({ version: 'v2', auth: oauth2Client });

        fs.readFile(AUTH_FILE, function (err, content) {
            if (err)
                fetchGoogleAuthorizationTokens(oauth2Client);
            else {
                oauth2Client.credentials = JSON.parse(content);
                callback();
            }
        });
    });
}
```

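The callback parameter is invoked only after both CREDENTIALS_FILE and AUTH_FILE have been read asynchronously. If AUTH_FILE does not exist yet, the callback is not invoked at all. Instead, fetchGoogleAuthorizationTokens() starts the authorization flow.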
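fetchGoogleAuthorizationTokens() itself appears only in the full source. Below is one possible implementation; it is an assumption, not the author's code. It relies on two methods of the googleapis OAuth2 client, generateAuthUrl() and getToken(code, callback), and requests offline access so that a refresh_token is returned. The tokens are then saved to the auth file. This hypothetical version differs from the call in loadCredentials() in two ways. It takes a done callback, so the sync could continue right after authorization. It also takes the prompt function (askCode) as a parameter, so the flow can be exercised with a fake client.

```javascript
const fs = require('fs');
const readline = require('readline');

// Full read/write access to the user's Drive files.
const SCOPES = ['https://www.googleapis.com/auth/drive'];

// Default prompt: print the URL, then read the code pasted by the user.
function askCodeOnConsole(authUrl, callback) {
  console.log('Authorize this app by visiting this URL:\n' + authUrl);
  const rl = readline.createInterface({ input: process.stdin, output: process.stdout });
  rl.question('Enter the code from that page here: ', (code) => {
    rl.close();
    callback(code.trim());
  });
}

// Hypothetical sketch. oauth2Client must provide generateAuthUrl(options) and
// getToken(code, callback), as the googleapis OAuth2 client does.
function fetchGoogleAuthorizationTokens(oauth2Client, authFile, done, askCode = askCodeOnConsole) {
  const authUrl = oauth2Client.generateAuthUrl({
    access_type: 'offline', // also request a refresh_token
    scope: SCOPES,
  });
  askCode(authUrl, (code) => {
    oauth2Client.getToken(code, (err, tokens) => {
      if (err) { console.log('Error while retrieving the access token:', err); return; }
      oauth2Client.credentials = tokens;
      fs.writeFileSync(authFile, JSON.stringify(tokens)); // reused on the next run
      done();
    });
  });
}
```

In the app, this would be called as fetchGoogleAuthorizationTokens(oauth2Client, AUTH_FILE, callback) from the branch of loadCredentials() where AUTH_FILE is missing.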

A similar logic is used in createRemoteBaseHierarchy():

```javascript
function createRemoteBaseHierarchy(parentId, callback) {
    var folderSegments = remoteFolderPath.split('/');

    var createSingleRemoteFolder = function (parentId) {
        var remoteFolderName = folderSegments.shift();

        if (remoteFolderName === undefined)
            callback(parentId);
        else {
            // Look for a non-trashed folder with this exact title under parentId.
            var query = "(mimeType='" + FOLDER_MIME + "') and (trashed=false) and (title='"
                + remoteFolderName + "') and ('" + parentId + "' in parents)";

            drive.files.list({
                maxResults: 1,
                q: query
            }, function (err, response) {
                if (err) { console.log('The API returned an error: ' + err); return; }

                if (response.items.length === 1) {
                    // The folder already exists: continue with its ID.
                    var folderId = response.items[0].id;
                    createSingleRemoteFolder(folderId);
                } else {
                    // The folder is missing: create it, then continue with the new ID.
                    drive.files.insert({
                        resource: {
                            title: remoteFolderName,
                            parents: [{ "id": parentId }],
                            mimeType: FOLDER_MIME
                        }
                    }, function (err, response) {
                        if (err) { console.log('The API returned an error: ' + err); return; }
                        var folderId = response.id;
                        console.log('+ /%s', remoteFolderName);
                        createSingleRemoteFolder(folderId);
                    });
                }
            });
        }
    };

    createSingleRemoteFolder(parentId);
}
```

createRemoteBaseHierarchy recursively reads or creates folders on Google Drive until it gets to the last folder segment. It contains an inner function, createSingleRemoteFolder, that handles (reads or creates) a single folder segment under a given folderId. The inner function is invoked recursively until all folder segments have been processed. When this happens, the callback function is invoked with the folderId of the last segment.
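The query is built by concatenating remoteFolderName into a single-quoted string. As a result, a folder name that contains an apostrophe (for example Bob's photos) would produce an invalid query. Here is a minimal sketch of a safer builder; escapeQueryValue and buildFolderQuery are hypothetical names. The Drive query syntax escapes a single quote with a backslash. Escaping backslashes themselves the same way is an assumption of this sketch.

```javascript
const FOLDER_MIME = 'application/vnd.google-apps.folder';

// Escapes a value for use inside a single-quoted Drive query string.
// Backslashes are escaped first so the quote escapes are not doubled.
function escapeQueryValue(value) {
  return String(value).replace(/\\/g, '\\\\').replace(/'/g, "\\'");
}

// Builds the same Drive API v2 query as createRemoteBaseHierarchy():
// a non-trashed folder with an exact title, directly under parentId.
function buildFolderQuery(folderName, parentId) {
  return "(mimeType='" + FOLDER_MIME + "') and (trashed=false)" +
    " and (title='" + escapeQueryValue(folderName) + "')" +
    " and ('" + escapeQueryValue(parentId) + "' in parents)";
}

console.log(buildFolderQuery("Bob's photos", 'root'));
```

The printed query contains title='Bob\'s photos', which keeps the string literal closed where the Drive query parser expects it.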

So with:

```javascript
syncFolder('C:/tmp/drive123', 'dev2/test4');
```

createRemoteBaseHierarchy will ensure that the root folder of your Google Drive has a folder named dev2, creating it if needed. Then it does the same for a folder named test4 under dev2. Finally, the callback is invoked with the folder ID of test4.
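The sketch below isolates the shift-and-recurse pattern by replaying the dev2/test4 example against an in-memory folder tree instead of Google Drive. ensureRemotePath, findFolder and createFolder are hypothetical names: injected functions that answer synchronously replace the two Drive calls (files.list and files.insert). There is one deliberate difference from the snippet above. There, ''.split('/') yields [''], which would be treated as one folder named ''. Here, empty segments are dropped with filter(Boolean), so an empty remote path resolves to the starting folder ('root').

```javascript
// Same logic as createRemoteBaseHierarchy(), with the Drive calls injected:
//   findFolder(parentId, name, cb)   -> cb(existingFolderIdOrNull)
//   createFolder(parentId, name, cb) -> cb(newFolderId)
function ensureRemotePath(remoteFolderPath, parentId, findFolder, createFolder, callback) {
  // filter(Boolean) drops empty segments, e.g. from '' or 'dev2//test4'.
  const segments = remoteFolderPath.split('/').filter(Boolean);
  const step = (currentParentId) => {
    const name = segments.shift();
    if (name === undefined) {
      callback(currentParentId); // all segments handled
      return;
    }
    findFolder(currentParentId, name, (existingId) => {
      if (existingId) step(existingId);
      else createFolder(currentParentId, name, step);
    });
  };
  step(parentId);
}

// Hypothetical in-memory folder tree used in place of Google Drive.
function createMemoryStore() {
  const folders = [];
  let counter = 0;
  return {
    folders,
    findFolder(parentId, name, cb) {
      const hit = folders.find((f) => f.parentId === parentId && f.title === name);
      cb(hit ? hit.id : null);
    },
    createFolder(parentId, name, cb) {
      const id = 'id' + (++counter);
      folders.push({ id, title: name, parentId });
      console.log('+ /%s', name);
      cb(id);
    },
  };
}

const store = createMemoryStore();
ensureRemotePath('dev2/test4', 'root', store.findFolder, store.createFolder, (id) => {
  console.log('test4 folder id:', id);
});
ensureRemotePath('dev2/test4', 'root', store.findFolder, store.createFolder, (id) => {
  console.log('second run reuses:', id);
});
```

The first call prints + /dev2 and + /test4 and reports the ID of test4. The second call finds both folders and creates nothing, which is what happens when the app is run a second time.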

Let's look at the last function in the chain: syncLocalFolderWithRemoteFolderId. Remember that when this function is invoked, the Google Drive credentials are valid, the base folders (dev2/test4) exist, and we know the folderId of the last folder segment (test4).

```javascript
function syncLocalFolderWithRemoteFolderId(localFolderPath, remoteFolderId) {
    retrieveAllItemsInFolder(remoteFolderId, function (remoteFolderItems) {
        processRemoteItemList(localFolderPath, remoteFolderId, remoteFolderItems);
    });
}
```

The first step, retrieveAllItemsInFolder, fetches all items (files and folders) under remoteFolderId (test4). When the full list is populated, we invoke processRemoteItemList. It creates, updates or deletes items on Google Drive as needed, and recursively processes subfolders.

Here is the code for retrieveAllItemsInFolder:

```javascript
function retrieveAllItemsInFolder(remoteFolderId, callback) {
    var query = "(trashed=false) and ('" + remoteFolderId + "' in parents)";

    var retrieveSinglePageOfItems = function (items, nextPageToken) {
        var params = { q: query };
        if (nextPageToken)
            params.pageToken = nextPageToken;

        drive.files.list(params, function (err, response) {
            if (err) {
                invokeLater(err, function () {
                    retrieveAllItemsInFolder(remoteFolderId, callback);
                });
                return;
            }
            items = items.concat(response.items);
            var nextPageToken = response.nextPageToken;
            if (nextPageToken)
                retrieveSinglePageOfItems(items, nextPageToken);
            else
                callback(items);
        });
    };

    retrieveSinglePageOfItems([]);
}
```

The inner function retrieveSinglePageOfItems is used to retrieve all items under remoteFolderId. drive.files.list returns results one page at a time (by default, at most 100 items per page), so the inner function may be invoked many times before all items are returned. When a response no longer contains a nextPageToken, the callback is invoked with the fully populated item list. If a call fails, the whole retrieval is restarted later through invokeLater().
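The same pagination loop can be exercised without the API. In this sketch, retrieveAllPages is a hypothetical generalization that takes the list function as a parameter. It assumes each response looks like a Drive v2 page ({ items, nextPageToken }). The hypothetical fakeList simulates a folder of 250 items served 100 at a time. Unlike the original, the sketch reports errors to its (err, items) callback instead of retrying through invokeLater().

```javascript
// Collects every page from a drive.files.list-style function, called as
// listPage(params, (err, response)) with response = { items, nextPageToken }.
function retrieveAllPages(listPage, query, callback) {
  const fetchPage = (items, pageToken) => {
    const params = { q: query };
    if (pageToken) params.pageToken = pageToken;
    listPage(params, (err, response) => {
      if (err) return callback(err);
      const all = items.concat(response.items);
      if (response.nextPageToken) fetchPage(all, response.nextPageToken);
      else callback(null, all); // no nextPageToken: this was the last page
    });
  };
  fetchPage([]);
}

// Hypothetical fake: 250 items, at most 100 per page; the token is the next offset.
function fakeList(params, cb) {
  const total = 250;
  const start = params.pageToken ? Number(params.pageToken) : 0;
  const items = [];
  for (let i = start; i < Math.min(start + 100, total); i++) items.push({ id: 'f' + i });
  const nextPageToken = start + 100 < total ? String(start + 100) : undefined;
  cb(null, { items, nextPageToken });
}

retrieveAllPages(fakeList, "('root' in parents)", (err, items) => {
  console.log(err, items.length); // three pages: 100 + 100 + 50 items
});
```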

Finally, let's take a look at the last interesting function, processRemoteItemList:

```javascript
function processRemoteItemList(localFolderPath, remoteFolderId, remoteFolderItems) {
    var remoteItemsToRemoveByIndex = [];
    for (var i = 0; i < remoteFolderItems.length; i++)
        remoteItemsToRemoveByIndex.push(i);

    fs.readdirSync(localFolderPath).forEach(function (localItemName) {
        var localItemFullPath = path.join(localFolderPath, localItemName);
        var stat = fs.statSync(localItemFullPath);
        var buffer;
        if (stat.isFile())
            buffer = fs.readFileSync(localItemFullPath);

        var remoteItemExists = false;
        for (var i = 0; i < remoteFolderItems.length; i++) {
            var remoteItem = remoteFolderItems[i];
            if (remoteItem.title === localItemName) {
                if (stat.isDirectory())
                    syncLocalFolderWithRemoteFolderId(localItemFullPath, remoteItem.id);
                else
                    updateSingleFileIfNeeded(buffer, remoteItem);
                remoteItemExists = true;
                remoteItemsToRemoveByIndex =
                    remoteItemsToRemoveByIndex.filter(function (val) { return val != i });
                break;
            }
        }

        if (!remoteItemExists)
            createRemoteItemAndKeepGoingDownIfNeeded(localItemFullPath, buffer, remoteFolderId, stat.isDirectory());
    });

    remoteItemsToRemoveByIndex.forEach(function (index) {
        var remoteItem = remoteFolderItems[index];
        deleteSingleItem(remoteItem);
    });
}
```

The function reads the local files and folders located in localFolderPath and compares them with remoteFolderItems (the items on Google Drive).

For a file, it reads the whole content into a buffer, so it can later compute its MD5 hash and/or upload its contents to Google Drive. For a folder that already exists remotely, it invokes syncLocalFolderWithRemoteFolderId, so the same processing occurs in the subfolder. Local items with no remote counterpart are passed to createRemoteItemAndKeepGoingDownIfNeeded.

The function keeps track of the remote items that were visited. A remote item that was not visited does not exist locally, so it is removed (this was my use case).
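The same bookkeeping can be written as a pure function that only computes a plan, which makes it easy to test. In this sketch, planSync and EXCLUDED_NAMES are hypothetical. The exclusion set is an assumption inspired by the .svn use case from the Background section; the processRemoteItemList() shown above has no such filter. As in the original loop, a local name matches only the first remote item with that title, so any remote duplicates become deletion candidates.

```javascript
// Hypothetical exclusion list (an assumption based on the .svn use case).
const EXCLUDED_NAMES = new Set(['.svn']);

// localNames: entry names from fs.readdirSync(); remoteItems: Drive items with a title.
// Returns the remote items to check or descend into, the local names to create
// remotely, and the remote items to delete. No API call is made.
function planSync(localNames, remoteItems) {
  // Like the original loop, a local name matches the FIRST remote item with that title.
  const firstIndexByTitle = new Map();
  remoteItems.forEach((item, index) => {
    if (!firstIndexByTitle.has(item.title)) firstIndexByTitle.set(item.title, index);
  });

  const visited = new Set();
  const toCheck = [];
  const toCreate = [];
  for (const name of localNames) {
    if (EXCLUDED_NAMES.has(name)) continue; // never uploaded
    const index = firstIndexByTitle.get(name);
    if (index === undefined) {
      toCreate.push(name);
    } else {
      visited.add(index);
      toCheck.push(remoteItems[index]);
    }
  }
  // Anything not visited has no local counterpart (this includes excluded names).
  const toDelete = remoteItems.filter((item, index) => !visited.has(index));
  return { toCheck, toCreate, toDelete };
}

console.log(planSync(['a.txt', 'b.txt', '.svn'], [{ id: '1', title: 'a.txt' }, { id: '2', title: 'c.txt' }]));
```

Using a Map keyed by title avoids rescanning the remote list for every local entry. It also avoids the repeated filter() calls on the index array in the original.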

You may look at the full source code to see how I implemented updateSingleFileIfNeeded, createRemoteItemAndKeepGoingDownIfNeeded and deleteSingleItem.
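For updateSingleFileIfNeeded, the change detection comes down to comparing the local MD5 hash with the remote md5Checksum. The sketch below uses Node's crypto module; needsUpdate is a hypothetical name. It assumes md5Checksum is the lowercase hex digest that Drive reports for uploaded files. Native Google Docs have no md5Checksum, so here they always compare as changed.

```javascript
const crypto = require('crypto');

// True when the local buffer's MD5 differs from the remote md5Checksum
// (or when the remote item has no md5Checksum at all).
function needsUpdate(buffer, remoteItem) {
  const localMd5 = crypto.createHash('md5').update(buffer).digest('hex');
  return localMd5 !== remoteItem.md5Checksum;
}

const local = Buffer.from('hello');
console.log(needsUpdate(local, { md5Checksum: '5d41402abc4b2a76b9719d911017c592' })); // false
console.log(needsUpdate(local, { md5Checksum: '00000000000000000000000000000000' })); // true
```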

Working with the JavaScript Google API

A good way to work with the latest JavaScript API is to download its source code and look at function comments to understand usage and expected parameters.

When many API calls are made in a very short amount of time, the Google API starts returning user rate limit (quota) errors. To deal with this, I created the function invokeLater(), which uses setTimeout to retry the failed call after a random delay.
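The article does not list invokeLater(). Here is a hypothetical sketch of such a helper. It logs the error and uses setTimeout to schedule the retry after a random delay between minDelayMs and maxDelayMs (both bounds are assumptions). The random delay keeps many calls that fail together from all retrying at the same moment. schedule and random are parameters only so that the helper can be tested without waiting.

```javascript
function makeInvokeLater({
  minDelayMs = 1000,
  maxDelayMs = 10000,
  schedule = setTimeout,
  random = Math.random,
} = {}) {
  return function invokeLater(err, retryFn) {
    // Random delay in [minDelayMs, maxDelayMs) to spread retries out.
    const delay = Math.floor(minDelayMs + random() * (maxDelayMs - minDelayMs));
    const reason = err && err.message ? err.message : err;
    console.log('API error (%s), retrying in %d ms', reason, delay);
    schedule(retryFn, delay);
  };
}

// Usage with short delays; in the app, retryFn would repeat the failed API call.
const invokeLater = makeInvokeLater({ minDelayMs: 100, maxDelayMs: 500 });
invokeLater(new Error('User Rate Limit Exceeded'), () => console.log('retrying now'));
```

Google's documentation recommends exponential backoff for rate limit errors, so a natural refinement would be to grow maxDelayMs with each attempt.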

Here is the list of Google API methods that were used:

- oauth2Client.generateAuthUrl - Generates the Google URL that users should follow to request the access token.

- oauth2Client.getToken - Uses the user-entered code to fetch the Google auth tokens.

- drive.files.list - Gets the list of files and folders. It is used with the condition '<parentId>' in parents to fetch the items under a specific folder, and with (trashed=false) to skip trashed items. When a folder has many items (more than 100), the function may need to be invoked several times, until nextPageToken is undefined.

- drive.files.insert - Creates a file or folder under a specific parentId folder. Set the MIME type to 'application/vnd.google-apps.folder' to create a folder. To create a file, populate the request's media property with the file's contents (buffer).

- drive.files.update - Updates the remote file if its md5Checksum property is different from the local file's MD5 hash. As with drive.files.insert(), populate the request's media property with the file's contents (buffer).

- drive.files.delete - Deletes the file or folder with the specified file ID.
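To illustrate the insert, update and delete calls described above, the hypothetical helpers below only build their parameter objects; nothing is sent. The shapes follow the Drive v2 style used in this article: metadata goes in resource and file contents go in media.body. Whether a Buffer is accepted as media.body depends on the googleapis version, so treat that part as an assumption.

```javascript
const FOLDER_MIME = 'application/vnd.google-apps.folder';

// Folder creation: metadata only, with the folder MIME type.
function folderInsertParams(title, parentId) {
  return { resource: { title, parents: [{ id: parentId }], mimeType: FOLDER_MIME } };
}

// File creation: metadata plus the file contents.
function fileInsertParams(title, parentId, buffer) {
  return { resource: { title, parents: [{ id: parentId }] }, media: { body: buffer } };
}

// Content update of an existing file.
function fileUpdateParams(fileId, buffer) {
  return { fileId, media: { body: buffer } };
}

// Deletion of a file or folder.
function deleteParams(fileId) {
  return { fileId };
}

console.log(JSON.stringify(folderInsertParams('test4', 'root')));
```

The objects would then be passed to drive.files.insert(params, callback), drive.files.update(params, callback) or drive.files.delete(params, callback).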

Run the Application

Setup Dependencies

Install nodeJs on your computer; see the steps at:

https://nodejs.org/en/download/package-manager/#windows

Enable the Google Drive API; see the steps at: https://developers.google.com/drive/web/quickstart/nodejs#step_1_enable_the_api_name

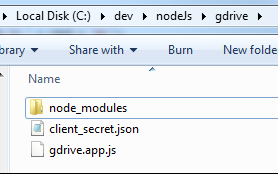

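Create a folder for the gdrive app, for instance c:\dev\nodeJs\gdrive, and copy the file gdrive.app.js into it. Also save into it the client_secret.json file you downloaded when enabling the API (the app reads this file from the current folder).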

Install the Google APIs client library. At the prompt, type:

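```shell
npm install googleapis --save
```

The code in this article was written for an early release of the googleapis package. It uses var google = require('googleapis'), the Drive API v2, and callbacks that receive the response body directly. Recent releases export the client as const { google } = require('googleapis') and put the response body in response.data. Either install an older release of the package or adapt those lines accordingly.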

Now your folder should look like this:

Authorize the App

Run the app:

```shell
node gdrive.app.js
```

Because the app has not been authorized yet, it prints an authorization URL generated by the Google JavaScript SDK. Copy the URL into your browser and allow the request:

The code will be shown either in the browser (see below) or in the query string of the redirect URL. Paste the code at the app's prompt.

Your nodeJs app folder should now look like this: (notice the new auth.json file)

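If refresh_token is missing from auth.json, the most likely reason is that this Google account has already authorized the app; Google usually issues a refresh token only the first time access is granted. An easy way to resolve this is to revoke the app's access in your Google account security settings and try again.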

Run the Code

Tweak the main method invocation syncFolder to match your needs. For instance:

```javascript
syncFolder('C:/tmp/gdrive', 'tmp/test123');
```

This will upload the files and folders found in your local folder c:/tmp/gdrive to your Google Drive account at /tmp/test123/. The remote folders tmp and test123 will be created as needed. If you leave the target remote folder empty, files and folders will be uploaded to the root of your Google Drive account (not recommended).

If the files to be backed up are stored on several hard drives, you can simply invoke the syncFolder function several times:

```javascript
syncFolder('C:/photos', 'photos/c');
syncFolder('D:/photos', 'photos/d');
```

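Important: Do not use backslashes in the path; type 'C:/photos' and not 'C:\photos'. In a JavaScript string literal, a backslash starts an escape sequence, so 'C:\photos' is read as 'C:photos'.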
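This snippet shows what happens to such literals and lists alternatives that do produce a usable path. toForwardSlashes is a hypothetical helper, not part of the app.

```javascript
// '\p' is not a recognized escape sequence, so the backslash is dropped.
const typed = 'C:\photos';
console.log(typed); // C:photos

// '\t' IS an escape sequence: this string contains a tab character.
console.log('C:\tmp'.length); // 5

// Ways to end up with a usable path:
const escaped = 'C:\\photos';      // doubled backslash
const raw = String.raw`C:\photos`; // raw template literal keeps the backslash
const forward = 'C:/photos';       // forward slashes, as recommended

// Hypothetical helper: convert backslashes to forward slashes.
const toForwardSlashes = (p) => p.replace(/\\/g, '/');
console.log(toForwardSlashes(escaped), toForwardSlashes(raw), forward);
// C:/photos C:/photos C:/photos
```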

Future Enhancements

A few things that could be done to improve the code:

- Pass folders as input parameters

- Store API keys/tokens securely

- Batch upload files instead of one-at-a-time

- After the initial run, use a file/folder watcher to only process new or updated files and folders

- Do not retry calls in all cases (for example, non-quota related errors such as a full remote drive)
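As an example of the first item, the folders could be read from the command line instead of being hard-coded. This is a hypothetical sketch; parseFolderPairs and the argument layout are assumptions. In the app, each pair would be passed to syncFolder(pair.local, pair.remote).

```javascript
// Hypothetical usage:
//   node gdrive.app.js C:/photos photos/c D:/photos photos/d
function parseFolderPairs(args) {
  if (args.length === 0 || args.length % 2 !== 0) {
    throw new Error('Usage: node gdrive.app.js <localFolder> <remoteFolder> [<localFolder> <remoteFolder> ...]');
  }
  const pairs = [];
  for (let i = 0; i < args.length; i += 2) {
    // Normalize backslashes, since a path typed in a Windows shell keeps them.
    pairs.push({ local: args[i].replace(/\\/g, '/'), remote: args[i + 1] });
  }
  return pairs;
}

// process.argv[0] is the node binary and process.argv[1] the script path.
const cliArgs = process.argv.slice(2);
if (cliArgs.length > 0) {
  // In the app: parseFolderPairs(cliArgs).forEach((p) => syncFolder(p.local, p.remote));
  parseFolderPairs(cliArgs).forEach((pair) => console.log('would sync', pair.local, '->', pair.remote));
}
```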

Conclusion

With just under 300 lines of code, we wrote a nodeJs app that lets you back up important folders to your free Google Drive account.