Introduction

In this article, I hope to show a very useful architecture for building a network server over TCP/IP. I know there are a lot of TCP and UDP servers you can buy, and some more you can download for free, but the ones you can buy usually give you a "black box". They also cost money (the good ones cost a lot of money), and they were designed to run on heavy, resource-rich machines. The ones you can download tend to commit straight away to a particular architecture which is usually incorrect, or at the very least is not the best one we can use to milk a machine. One of my supervisors once told me that the enemy of the "good" is the "excellent". Well, obviously, he was correct! But I still want the excellent, don't you?

Well, enough chit-chat, let's examine the problem

Sockets can use three modes for communicating:

- Synchronous communication - using blocking mode, which halts execution until something happens on the socket.

- Synchronous communication - without blocking mode - but this means that whenever an operation cannot complete immediately, we get an error which we will have to catch.

- Asynchronous communication - which magically opens a thread per socket activity, thus not halting execution.

And, of course, it is possible to mix synchronous calls with asynchronous calls if you seriously feel you don't have enough problems to sustain a tremendous amount of humor in your life.
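
To make the three modes concrete, here is a minimal sketch written against plain System.Net.Sockets. It is not taken from the article's projects: the class and method names (SocketModesDemo, CreatePair, and so on) are made up for illustration, and the 256-byte buffer is an arbitrary choice.

```csharp
using System;
using System.Net;
using System.Net.Sockets;
using System.Text;

public static class SocketModesDemo
{
    // Helper (hypothetical): builds a connected pair of TCP sockets on loopback.
    public static void CreatePair(out Socket client, out Socket server)
    {
        var listener = new TcpListener(IPAddress.Loopback, 0); // port 0 = any free port
        listener.Start();
        client = new Socket(AddressFamily.InterNetwork, SocketType.Stream, ProtocolType.Tcp);
        client.Connect(listener.LocalEndpoint);
        server = listener.AcceptSocket();
        listener.Stop();
    }

    // Mode 1: synchronous, blocking. The call does not return until data arrives.
    public static string ReceiveBlocking(Socket socket)
    {
        socket.Blocking = true;
        var buffer = new byte[256];
        int count = socket.Receive(buffer);
        return Encoding.ASCII.GetString(buffer, 0, count);
    }

    // Mode 2: synchronous, non-blocking. Returns null when nothing is waiting.
    public static string TryReceiveNonBlocking(Socket socket)
    {
        socket.Blocking = false;
        var buffer = new byte[256];
        try
        {
            int count = socket.Receive(buffer);
            return Encoding.ASCII.GetString(buffer, 0, count);
        }
        catch (SocketException ex)
        {
            // WouldBlock is the error we have to catch: it only means "no data yet".
            if (ex.SocketErrorCode != SocketError.WouldBlock) throw;
            return null;
        }
    }

    // Mode 3: asynchronous. The call returns at once; the callback runs when data arrives.
    public static void ReceiveAsync(Socket socket, Action<string> onData)
    {
        var buffer = new byte[256];
        socket.BeginReceive(buffer, 0, buffer.Length, SocketFlags.None, result =>
        {
            int count = socket.EndReceive(result);
            onData(Encoding.ASCII.GetString(buffer, 0, count));
        }, null);
    }
}
```

With a pair from CreatePair, calling TryReceiveNonBlocking on the server side before the client has sent anything returns null instead of halting, which is the behaviour the second bullet describes.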

The first examination is why and when would we want to use asynchronous communication

Since async calls open a thread for every socket operation, they are a great solution for client programs. They manage the communication without halting other procedures, and they are well tested. They are, however, absolutely not suitable for servers. Why? A server using async calls will open a thread for every socket method, which means our server is going to end up with far more threads than it needs. Worse, adding more threads will at some point make all the other threads run slower and slower, until the hardware requires an upgrade. Now, that will happen no matter what logic we implement; the question is when. The more threads we open, the sooner we need a hardware upgrade, so for now we can determine that async calls are out of the question if we want low resource waste and maximal optimization in our server.

So, our goal is to use the smallest number of threads that still meets the latency (a.k.a. lag) our design requires. Yes, I know some of you would say something like: "What are you talking about? Most servers work with async communication!" They are correct - so if things are as I explained in the previous paragraph, why do so many servers use the async architecture? The answer lies in an assumption that does not hold water, and in an inelegant workaround that brutally forces things to work, as I will explain. Behind this architecture lies the assumption that clients keep communicating with the server, so the threads behind them open and close all the time. An operation like receiving or sending data is short, so it takes resources only for a brief moment before releasing them, and many threads can open and close without tying up resources permanently. That much is actually very true (so far).

However, if, for example, I connect to such a server from my client and decide not to send any data, the server has called BeginReceive and is waiting for my client to send something. As long as I don't send anything, the thread that waits for data remains open and does not close. If we have 2000 clients doing that, the server will be in trouble, and the architect who designed it will be either unemployed or possibly promoted to division manager - that depends on where you work. To work around this problem, the architecture requires a non-idle policy, so that threads can close and malicious clients cannot use that exploit. One such policy is a timer that closes idle clients, another is based on a ping mechanism, and a few would go for even smarter solutions that keep track of resources and disconnect idle clients only when resources are getting low. But all of those are workarounds: they deal with the symptoms and not with the problem that causes them! That is why, as I explained, the async architecture is not suitable for a server.

Another approach would be to use sync calls in blocking mode on our sockets - this means that execution will halt, so to work around this, we can open a thread per client. But again, we would have many threads running in our system, and that is something we already killed as a server solution.

The last option remaining is to use the sync calls in non-blocking mode. The problem is that 2000 clients connected to our machine will need to be scanned repeatedly, and a single pass over all of them would take too long.
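
As a rough illustration of what one such scan looks like, here is a minimal sketch using Socket.Poll with a zero timeout. ClientScanner and ScanOnce are hypothetical names, not part of the article's projects, and the sketch leaves out error handling (a reset connection would make Receive throw).

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Net.Sockets;

public static class ClientScanner
{
    // Visits every socket once, reads whatever is already waiting,
    // and returns how long the whole pass took.
    public static TimeSpan ScanOnce(IList<Socket> clients, Action<Socket, byte[]> onData)
    {
        Stopwatch watch = Stopwatch.StartNew();
        var buffer = new byte[1024];
        foreach (Socket client in clients)
        {
            // A zero timeout never waits: Poll only reports whether a read would return now.
            if (!client.Poll(0, SelectMode.SelectRead)) continue;

            int count = client.Receive(buffer);
            if (count == 0) continue; // remote side closed; a real server would drop it here

            var data = new byte[count];
            Array.Copy(buffer, data, count);
            onData(client, data);
        }
        watch.Stop();
        return watch.Elapsed;
    }
}
```

The returned TimeSpan is the interesting part: it grows with the number of clients in the list, which is exactly why one thread scanning 2000 clients is too slow.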

The remedy

So, the remedy is to use a few threads to scan our clients - for example, every 100 clients will be scanned by one thread. However, we don't have the slightest idea how long it would take to scan 100 clients on different machines. Another problem is that fixing the number at 100 is arbitrary, which means we will not be dynamic.

What I did at that point was create a class which I called ServerNode. The ServerNode holds clients and returns latency information for each scan (how long it took to scan all the clients in the node). In the server base, I created a property which I named RequiredLatencyPerClient. From that point, things became much clearer.

When the server clients list receives a new client, the server will scan all nodes and decide which node to add the new client to. If no node latency meets the RequiredLatencyPerClient, the server will create a new node and will add the new client to that node.

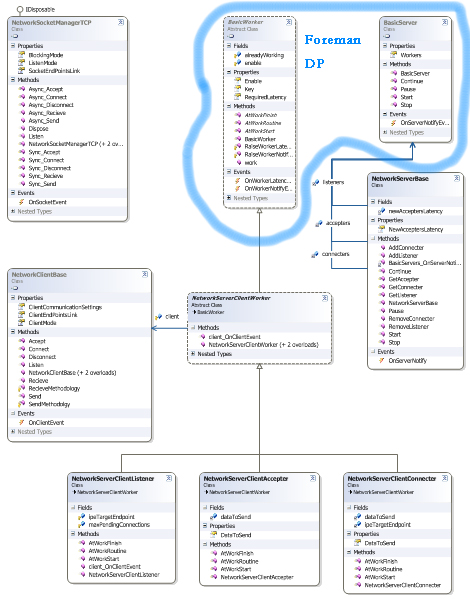
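
The node selection just described can be sketched roughly as follows. This is not the code from the download: ServerNode and RequiredLatencyPerClient are the article's names, but NodeServer, AddClient, LastScanLatency and the use of an Action to stand for "check this client once" are assumptions made for the example. The thread that would call Scan in a loop for each node is left out.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

// One node = one group of clients that is scanned in a single pass.
public class ServerNode
{
    private readonly List<Action> clients = new List<Action>();

    // Duration of the most recent full pass over this node's clients.
    public TimeSpan LastScanLatency { get; private set; }

    public int ClientCount { get { return clients.Count; } }

    public void Add(Action pollClient) { clients.Add(pollClient); }

    // Checks every client once and records how long the pass took.
    public TimeSpan Scan()
    {
        Stopwatch watch = Stopwatch.StartNew();
        foreach (Action pollClient in clients) pollClient();
        watch.Stop();
        LastScanLatency = watch.Elapsed;
        return LastScanLatency;
    }
}

public class NodeServer
{
    private readonly List<ServerNode> nodes = new List<ServerNode>();

    public TimeSpan RequiredLatencyPerClient { get; set; }

    public int NodeCount { get { return nodes.Count; } }

    // Puts the client in the first node that still scans fast enough;
    // opens a new node when none does.
    public ServerNode AddClient(Action pollClient)
    {
        foreach (ServerNode node in nodes)
        {
            if (node.LastScanLatency < RequiredLatencyPerClient)
            {
                node.Add(pollClient);
                return node;
            }
        }
        ServerNode fresh = new ServerNode();
        fresh.Add(pollClient);
        nodes.Add(fresh);
        return fresh;
    }
}
```

Because the decision is based on measured scan time rather than a fixed client count, the same code fills nodes with more clients on a fast machine and fewer on a slow one.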

Foreman Design Pattern - Is that a new design pattern? (If not, I got a new and very generic idea instead - I will call it the "Wheel"!)

Well, so far so good, but while writing the code, I couldn't help thinking that I was doing something which is very generic. The idea of clients that need to be organized in nodes in order to maintain a certain latency can be useful in many situations. So, I decided it would be even better to take that work pattern out of the server and implement it independently, so it can be used dynamically and in other situations as well.

I searched the books and the internet to see if there is a known design pattern that actually does that - and found that there isn't, so if no one objects, I am taking the right to call it the Foreman Design Pattern.

But... there is something that bothers me. What if some clients need a different latency than others? Hmm... and what if I want to be able to change a client's latency dynamically?

So, scratching my head, I finally realized that a simple solution had been staring at me all the time! The Foreman will not have a RequiredLatencyPerClient. Instead, each client, which from now on we shall call a worker (since we are tinkering with a design pattern and not just a communication server), will carry its own latency requirement, and the Foreman will put the worker in a suitable node according to that requirement.
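
A minimal sketch of that idea, assuming a very simple placement rule: a node suits a worker when the node's last measured scan time is below the worker's own requirement. The Worker, WorkerNode and Foreman classes below are illustrative stand-ins, not the ForemanDP classes from the download, and a node that has never been scanned counts as having zero latency.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

public class Worker
{
    // How often this worker needs to be visited at the latest.
    public TimeSpan RequiredLatency { get; set; }

    // The job done on each visit (for a server: check one client socket).
    public Action Work { get; set; }
}

public class WorkerNode
{
    private readonly List<Worker> workers = new List<Worker>();

    // Duration of the most recent pass; zero until the first Scan.
    public TimeSpan LastScanLatency { get; private set; }

    public void Add(Worker worker) { workers.Add(worker); }

    public bool Contains(Worker worker) { return workers.Contains(worker); }

    // Runs every worker once and records how long the pass took.
    public void Scan()
    {
        Stopwatch watch = Stopwatch.StartNew();
        foreach (Worker worker in workers) worker.Work();
        watch.Stop();
        LastScanLatency = watch.Elapsed;
    }
}

public class Foreman
{
    private readonly List<WorkerNode> nodes = new List<WorkerNode>();

    public int NodeCount { get { return nodes.Count; } }

    // The requirement now travels with the worker, not with the Foreman.
    public WorkerNode Place(Worker worker)
    {
        foreach (WorkerNode node in nodes)
        {
            if (node.LastScanLatency < worker.RequiredLatency)
            {
                node.Add(worker);
                return node;
            }
        }
        WorkerNode fresh = new WorkerNode();
        fresh.Add(worker);
        nodes.Add(fresh);
        return fresh;
    }
}
```

Changing a worker's latency dynamically then comes down to updating RequiredLatency and asking the Foreman to place the worker again.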

Based on the Foreman Design Pattern, I can build a very cool communication server.

So, after building the Foreman, I began writing some simple code to wrap the socket communication options. I opened a project and called it NetworkSocketManager - it will be used in two other projects, a server and a client. The NetworkSocketManager lets us choose which socket working mode to use, and it wraps all the synchronous and asynchronous communication options (including blocking mode).

Having finished that part, I coded a simple client that contains the NetworkSocketManager. The project is called NetworkClient.

Now, the client itself is also very nice and friendly, since it already implements basic application-level transmission control. Any data that is about to be sent is wrapped in an envelope which contains: | prefix length = 1 digit | prefix = length of data | data |. So, on the receiving side, the message-received event fires only when the full data has accumulated through the socket. Without application-level transmission control, partial data can arrive. If, for example, the server's out buffer is set to 1024 and the receive buffer is set to 256, a full message would arrive either partially or split across 4 application-level packets. But, as I said, this problem is already solved.
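
Here is a minimal sketch of such an envelope, assuming both prefix fields are written as ASCII decimal digits (the article does not spell out the encoding). Envelope and EnvelopeReader are hypothetical names, not classes from NetworkClient. Under that assumption, the five bytes of "hello" travel as "15hello": one digit of prefix length, the length 5, then the data.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text;

public static class Envelope
{
    // Builds | prefix length (1 digit) | length of data | data |.
    public static byte[] Wrap(byte[] data)
    {
        string length = data.Length.ToString(CultureInfo.InvariantCulture);
        if (length.Length > 9) throw new ArgumentException("data too long for a 1-digit prefix length");

        byte[] header = Encoding.ASCII.GetBytes(
            length.Length.ToString(CultureInfo.InvariantCulture) + length);
        var packet = new byte[header.Length + data.Length];
        Buffer.BlockCopy(header, 0, packet, 0, header.Length);
        Buffer.BlockCopy(data, 0, packet, header.Length, data.Length);
        return packet;
    }
}

public class EnvelopeReader
{
    private readonly List<byte> pending = new List<byte>();

    // Feeds one received chunk and returns every message that chunk completed.
    public List<byte[]> Feed(byte[] chunk, int count)
    {
        for (int i = 0; i < count; i++) pending.Add(chunk[i]);

        var messages = new List<byte[]>();
        while (pending.Count > 0)
        {
            int digits = pending[0] - '0';
            if (digits < 1 || digits > 9) throw new FormatException("bad prefix length");
            if (pending.Count < 1 + digits) break;          // length field still incomplete

            int length = 0;
            for (int i = 1; i <= digits; i++)
            {
                int digit = pending[i] - '0';
                if (digit < 0 || digit > 9) throw new FormatException("bad length digit");
                length = length * 10 + digit;
            }

            int total = 1 + digits + length;
            if (pending.Count < total) break;               // data still incomplete

            messages.Add(pending.GetRange(1 + digits, length).ToArray());
            pending.RemoveRange(0, total);
        }
        return messages;
    }
}
```

The reader keeps leftover bytes between calls, so it does not matter whether the socket delivers a message in one piece, in four pieces, or glued to the start of the next message.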

If you wish to have encryption on your data, make sure to encrypt before you pass the data on to the send call. If you wish to manipulate your message before sending it (for example, if you need integration with another server), you will have to inherit from the communication class and override the communication functions to suit your needs.

The next task was to build a server that uses the already mentioned ForemanDP. So, I opened another project and called it NetworkServer. In it, I derived a CommunicationWorker class from the ForemanDP worker class, and the server class derives from the ForemanDP BasicServer. And voila! If everything so far is clear, you will be able to understand my code and why it is so powerful.

What makes this a powerful implementation is that all the programmer needs to do is derive a worker and a server to have an out-of-the-box, running, open source client/server architecture which uses the correct resource methodology.

Comparing to Classic IOCP

As we can see, the model based on the Foreman will "milk" the machine better, since each worker's (client's) required latency tells the machine who needs more attention. To be even more exact, the clients that require a latency < 100 are treated in a different thread. This is what makes the difference from classic IOCP, where all clients are served from the same thread pool - there is waste there, since a client that requires a latency of 1000 (1 sec) will hold up the other queued clients until it has finished processing.

However, ForemanDP knows how to deal with such a case: if a client's required latency is not met, a new thread (the client's node) will be opened, and that client will be moved to it to make sure it receives the latency it requires.
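
The move itself can be sketched as below. This is only an illustration of the rule, not ForemanDP's implementation: TimedWorker, TimedNode and Rebalancer are hypothetical names, the node's latency is set by the caller instead of being measured, and the thread that would run each new node is omitted.

```csharp
using System;
using System.Collections.Generic;

public class TimedWorker
{
    public string Name;
    public TimeSpan RequiredLatency;
}

public class TimedNode
{
    public readonly List<TimedWorker> Workers = new List<TimedWorker>();

    // A real node would measure this by timing its scan; here the caller sets it.
    public TimeSpan MeasuredLatency;
}

public static class Rebalancer
{
    // Moves every worker whose requirement is stricter than its node's measured
    // latency into a new node of its own. Returns the number of workers moved.
    public static int MoveStarvedWorkers(List<TimedNode> nodes)
    {
        var created = new List<TimedNode>();
        foreach (TimedNode node in nodes)
        {
            // FindAll returns a copy, so removing from node.Workers below is safe.
            List<TimedWorker> starved =
                node.Workers.FindAll(w => w.RequiredLatency < node.MeasuredLatency);
            foreach (TimedWorker worker in starved)
            {
                node.Workers.Remove(worker);
                var fresh = new TimedNode();
                fresh.Workers.Add(worker);
                created.Add(fresh);
            }
        }
        nodes.AddRange(created); // added after the loop, so the enumeration stays valid
        return created.Count;
    }
}
```

For example, if a node measures 300 ms per scan and holds one worker that needs 100 ms and one that tolerates 1000 ms, only the 100 ms worker is moved; the tolerant one stays where it is.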

Using the Code

OK, so how do we get started?

Step 1

The first thing is to add either references or the projects themselves to your solution. For those who need servers, you will need the NetworkSocketManager, ForemanDP, and NetworkServer. For those who need clients, you will need the NetworkSocketManager and NetworkClient. For those who need both, just add it all...

Step 2

Server developers: In your class, write your code to contain the NetworkServerBase (or your own class which derives from it).

    private NetworkServerBase MyServer = null;

The next thing is to instantiate the server. You would want to put it in the Form_Load or a constructor of your class:

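    // TimeSpan(long) counts 100-nanosecond ticks, so the unit is stated explicitly
    // (assuming the original 1000 and 2000 were meant as milliseconds).
    MyServer = new NetworkServerBase(TimeSpan.FromMilliseconds(1000));
    MyServer.OnServerNotify +=
        new ForemanDP.BasicServer.DelegateServerNotification(MyServer_OnServerNotify);
    MyServer.AddListener(
        new NetworkServerClientListener(TimeSpan.FromMilliseconds(2000),
            // IPEndPoint takes an IPAddress, not a string, so parse the address first.
            new System.Net.IPEndPoint(
                System.Net.IPAddress.Parse("replace this with the ip you want"), 5500), 100));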

The last line is interesting because what it actually does is add a listener with a required latency of 2 seconds to the server.

The NetworkServerClientListener is derived from NetworkClientWorker, which derives from the ForemanDP worker. The line of code sets port 5500 to be a listening port, and reserves a backlog of 100 for queued clients waiting to connect. You can add more listeners - as many as you want! Run a loop on that line or just copy and paste it, whatever you need.

From that point forward, every communication event to that port is piped to MyServer_OnServerNotify:

    void MyServer_OnServerNotify(ForemanDP.BasicWorker worker, object data)
    {
        // Every notification carries the type of the event that raised it.
        switch (((NetworkServerClientWorker.NetworkClientNotification)data).EventType)
        {
            case NetworkClient.NetworkClientBase.ClientEventTypeEnum.Accepted:
                connectedUsers++;   // a new client was accepted
                break;
            case NetworkClient.NetworkClientBase.ClientEventTypeEnum.Disconnected:
                connectedUsers--;   // a client disconnected
                break;
        }
    }

You can catch messages and anything else you need from that event.

Clients: In your class, write your code to contain NetworkClient:

    private NetworkClientBase clientCommunication = null;

    // ---- in your constructor or Form_Load ----
    clientCommunication = new NetworkClientBase(
        new CommunicationSettings(
            CommunicationSettings.SocketOperationFlow.Asynchronic,
            CommunicationSettings.SocketOperationFlow.Asynchronic,
            CommunicationSettings.SocketOperationFlow.Asynchronic,
            CommunicationSettings.SocketOperationFlow.Asynchronic,
            CommunicationSettings.SocketOperationFlow.Asynchronic, false, 64));
    clientCommunication.OnClientEvent +=
        new NetworkClientBase.DelegateClientEventMethod(clientCommunication_OnClientEvent);
    // ...

    private void clientCommunication_OnClientEvent(
        NetworkClientBase.ClientEventTypeEnum EventType, object EventData)
    {
        switch (EventType)
        {
            case NetworkClientBase.ClientEventTypeEnum.None:
                break;
            case NetworkClientBase.ClientEventTypeEnum.Accepted:
                break;
            case NetworkClientBase.ClientEventTypeEnum.Connected:
                break;
            case NetworkClientBase.ClientEventTypeEnum.RawDataRecieved:
                break;
            case NetworkClientBase.ClientEventTypeEnum.MessageRecieved:
                break;
            // ...
        }
    }

And so on, to catch whatever you need or want.

Latest Version Additional Info

As an example, I added a simple chat implementation. In order to run it:

- From Visual Studio, click the green triangle button (F5) - this will run the chat server.

- While the chat server is running, go back to Visual Studio and right click on the chat client project - a popup menu will appear. From the popup-menu, click Debug -> Start New Instance.

You can repeat the second step to create and run as many client instances as you want.

Note: The chat-client/server is just an example of how to use the entire architecture. It is not the focus of this article, and it is not intended to be bug-free.

Benchmark Program Addition

I have repaired much of the TCPSocket layer, which was causing problems in certain situations. If more bugs arise, please let me know so I can fix them as well (on my machine, it works well).

The benchmark opens a server and multiple async clients to communicate with the server. The server will echo each incoming packet back to the client who sent it (much like ping!).
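
For readers who want to try the echo idea without the full solution, here is a minimal stand-alone sketch built on TcpListener and TcpClient. It is not the benchmark program from the download: EchoBenchmark and MeasureRoundTrips are made-up names, it uses one blocking client rather than multiple async clients, and it runs over loopback only.

```csharp
using System;
using System.Diagnostics;
using System.IO;
using System.Net;
using System.Net.Sockets;
using System.Threading;

public static class EchoBenchmark
{
    // Sends `count` packets of `payloadSize` bytes to a local echo server and
    // returns the total time spent waiting for all the echoes.
    public static TimeSpan MeasureRoundTrips(int count, int payloadSize)
    {
        var listener = new TcpListener(IPAddress.Loopback, 0); // port 0 = any free port
        listener.Start();
        int port = ((IPEndPoint)listener.LocalEndpoint).Port;

        // Server side: echo every incoming packet back to the client that sent it.
        var serverThread = new Thread(() =>
        {
            try
            {
                using (TcpClient peer = listener.AcceptTcpClient())
                {
                    NetworkStream stream = peer.GetStream();
                    var buffer = new byte[payloadSize];
                    int read;
                    while ((read = stream.Read(buffer, 0, buffer.Length)) > 0)
                        stream.Write(buffer, 0, read);
                }
            }
            catch (IOException) { /* client vanished; nothing left to echo */ }
        });
        serverThread.IsBackground = true;
        serverThread.Start();

        var watch = new Stopwatch();
        using (var client = new TcpClient())
        {
            client.Connect(IPAddress.Loopback, port);
            NetworkStream stream = client.GetStream();
            var payload = new byte[payloadSize];
            var reply = new byte[payloadSize];

            watch.Start();
            for (int i = 0; i < count; i++)
            {
                stream.Write(payload, 0, payload.Length);
                int got = 0;
                while (got < reply.Length) // an echo may come back in several pieces
                {
                    int read = stream.Read(reply, got, reply.Length - got);
                    if (read == 0) throw new InvalidOperationException("server closed the connection");
                    got += read;
                }
            }
            watch.Stop();
        }

        serverThread.Join();
        listener.Stop();
        return watch.Elapsed;
    }
}
```

Dividing the returned time by the packet count gives an average round trip, which is the same kind of number a ping would report.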

Since it was necessary to identify each async client, I created another client which contains a NetworkClientBase and adds identity to it. Normally, you will not need such a client unless you want to work with multiple clients without a server or a main node to manage them inside it.

Bug fix (28.10.2008)

Thanks to BeAsT[Ru] from this forum, a bug was found and repaired in a queue which is used inside the accepter to send data. An asymmetric handling was found in the queue and a critical section was implemented in the code. After several tests, I can conclude now that this bug is no more.

Bug fix (20.12.2008)

Thanks to NapCrisis, who fixed a minor bug in the chat example.

Finally

Most programmers believe that they have the best code, which only God could match in perfection. And I am no different! But, in order to really learn something, we must put our ego very far away. So, please let me know what you think, and feel free to criticize.

The connecter area in the server is not in use - I didn't finish it - but, unless you want a server that can initiate communication to other servers like a proxy, for example, it will not bother you. I promise to finish it if you CodeProject folks want it.

Last thing: Some might ask why not use WCF instead of all this? WCF is a very advanced request-response architecture, and as advanced as it is, it will not give you "the always online in real time connection". Check this out: MSDN, if you don't believe me.