Introduction

This post discusses bringing speech recognition to the web. In short, speech recognition is a technology that converts spoken words into text. Voice or speech recognition has been popular in desktop software. Well-known examples include the speech recognition system in Windows XP, Vista and 7, used for giving voice commands and controlling the system, and the speech recognition feature in Microsoft Office, which lets users write text simply by dictating it to the computer.

The new draft specification of HTML5 Speech Input will make this facility available on the web, so that speech recognition can be carried out in web pages with ease.

Note: For the full details, read the HTML5 Speech Input API draft specification.

Applications

Refer to the Wikipedia page on speech recognition for its applications.

Technology

The API itself is agnostic of the underlying speech recognition implementation and can support both server-based and embedded recognizers. With an embedded recognizer, the browser itself is capable of speech recognition, much like current desktop speech recognition software: it records the voice from the microphone, performs recognition on that input locally and generates the resulting text.

This approach is fast and can also work offline. In the second, server-based approach, the browser records the voice from the microphone and streams the audio data to a server, which performs the speech recognition and sends the resulting text back to the browser.

The advantage of the server-based approach is that recognition can be more accurate than with a local recognizer, because the large amount of training data collected at central servers helps improve accuracy. The API is designed to support both one-off and continuous speech input requests. Recognition results are provided to the web page as a list of hypotheses, along with other relevant information for each hypothesis.
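Since results arrive as a list of hypotheses, a page usually has to choose one. The sketch below assumes each hypothesis is an object with utterance and confidence fields (an assumption for illustration, not taken from the draft), and pickBestHypothesis() and its minConfidence threshold are hypothetical names.

```javascript
// Hypothetical helper: pick the most confident recognition hypothesis.
// Assumes each hypothesis looks like { utterance: string, confidence: number },
// with confidence between 0 and 1.
function pickBestHypothesis(hypotheses, minConfidence = 0) {
  let best = null;
  for (const h of hypotheses) {
    // Keep the highest-confidence hypothesis seen so far.
    if (best === null || h.confidence > best.confidence) best = h;
  }
  // Reject the result entirely if even the best guess is too uncertain.
  if (best === null || best.confidence < minConfidence) return null;
  return best.utterance;
}

// Example: two hypotheses for one spoken word.
const results = [
  { utterance: "chat", confidence: 0.82 },
  { utterance: "cat", confidence: 0.11 }
];
console.log(pickBestHypothesis(results, 0.5)); // prints: chat
```

Returning null below the threshold lets the page treat a low-confidence result the same way as an unrecognized command.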

In my demonstration, Chrome captures the audio and streams it to Google's servers for speech recognition; the servers return the recognized text to the browser. The component responsible for capturing and streaming the audio is built directly into the Chrome web browser.

- For further research, you can look at the speech-related parts of the Chrome source code. Audio is collected from the microphone and sent to a Google server (a Google web service) in an HTTPS POST request, which returns a JSON object with the results. Check out the source code or read "Accessing Google Speech API / Chrome 11" for a little more information.

- Unless you have your own browser product, as Google has Chrome, you will have to build a browser extension that handles audio capture and streaming, and you will also need servers that perform the speech recognition for you. Alternatively, you can opt for the first (embedded) approach and bundle a recognizer in the extension you build.
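The format of the JSON object returned by that service is not part of the Speech Input draft. As an illustration only, the sketch below assumes a body shaped like {"status":0,"hypotheses":[{"utterance":...,"confidence":...}]}; these field names and the extractTranscript() function are assumptions, not a documented API. It shows how a client might turn such a response into text.

```javascript
// Hypothetical parser for a recognition response. The response shape is an
// assumption used for illustration:
//   {"status": 0, "hypotheses": [{"utterance": "...", "confidence": 0.93}]}
// Here status 0 is treated as success and anything else as failure.
function extractTranscript(responseBody) {
  let data;
  try {
    data = JSON.parse(responseBody);
  } catch (e) {
    return null; // body was not valid JSON
  }
  if (!data || data.status !== 0 || !Array.isArray(data.hypotheses) || data.hypotheses.length === 0) {
    return null; // no usable result
  }
  // Assume the most likely hypothesis is listed first.
  return data.hypotheses[0].utterance;
}

const body = '{"status":0,"id":"abc","hypotheses":[{"utterance":"video","confidence":0.93}]}';
console.log(extractTranscript(body)); // prints: video
```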

Other Approaches

There are also approaches that have nothing to do with HTML5 or the Speech Input API. They implement speech recognition for the web differently, but rely on the same server-based technique discussed above: a Flash-based component on the web page captures the audio, streams it to the provider's servers and receives the result back.

Note: There are, and will be, many more ways to use speech recognition on the web. These are the ones I came across.

Prerequisites

HTML5

HTML5 is a language for structuring and presenting content for the World Wide Web, a core technology of the Internet, and the fifth revision of the HTML standard. It adds many new syntactical features, including new elements and attributes that reflect typical usage on modern websites, and in addition to markup it specifies scripting application programming interfaces (APIs). Many HTML5 features are not achievable without CSS and JS, so, bluntly, HTML5 = HTML + CSS + JS. A brief understanding of HTML will help us better understand the details of Speech Input.

The HTML <input> element (for example, <input type="text" name="text 1">) is extended in the HTML5 Speech Input specification to allow speech recognition and input. The input element is the one extended because the aim of the API is to let users enter data by voice, which explains the name "Speech Input API".

- Basic knowledge of the input element is needed. The practical details are covered later in this post.

JavaScript

Web page authoring is beautifully separated into three layers, which give the World Wide Web the flexibility and extensibility it enjoys today. This three-layer pattern grew out of past experience and mistakes during the evolution of the web and web authoring. The layers are content, presentation and behavior: content and structure are controlled by HTML, presentation and styling by CSS, and the behavior and responsiveness of elements by JavaScript. In brief, every element structured by HTML is represented in the DOM (Document Object Model) as an object, and JS is the language that interacts with those DOM objects. JS can access these objects and their properties, subscribe to the events associated with them, and respond when those events fire.

To understand the events fired by Speech Input and to respond to them, basic knowledge of JavaScript is required.

Implementation

For a working demonstration of the Speech API, visit http://www.robinrizvi.info/speechapidemo/.

In this demonstration, I present an example of navigating a website with voice commands: the user speaks the name of a link into the microphone to navigate to that link.

HTML

```html
<div id="speechinput">
  <input id="speech" type="text"
         speech="speech" x-webkit-speech="x-webkit-speech"
         onspeechchange="processspeech();" onwebkitspeechchange="processspeech();" />
  <img src="image/mic_disabled.png"/>
</div>
```

The main line in the above HTML code that does the magic is:

```html
<input id="speech" type="text" speech="speech" x-webkit-speech="x-webkit-speech"
       onspeechchange="processspeech();" onwebkitspeechchange="processspeech();" />
```

Here the <input> HTML element is extended with additional attributes and event handlers that provide the speech functionality. The new attributes and event handler attributes added are:

- speech="speech"
- x-webkit-speech="x-webkit-speech"
- onspeechchange="processspeech();"
- onwebkitspeechchange="processspeech();"

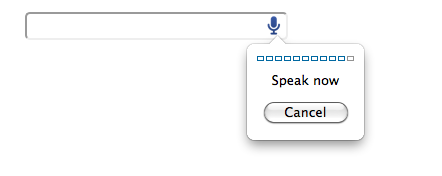

speech="speech" tells the browser that this is not a normal <input> element but one that can take input by speech or voice. It adds a small microphone icon to the right of the <input> element; clicking it makes the browser capture voice from the microphone.

x-webkit-speech="x-webkit-speech" is a redundant attribute that will possibly be removed; it is not in the draft specification. It is, however, necessary for the demonstration to work, because Google Chrome recognizes the x-webkit-speech attribute instead of the speech attribute. It is simply speech prefixed with x-webkit, a naming difference in the browser's engine and nothing more.
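When the input element is created from script instead of written in markup, the same two attributes can be set with setAttribute(). The sketch below uses a hypothetical helper, enableSpeechInput(); the attribute values simply mirror the markup in this demonstration. Setting the attributes before the element is inserted into the page is the safer order, since this post does not establish whether a browser reacts to them being added later.

```javascript
// Hypothetical helper: mark an <input> element as speech-enabled.
// Sets both the draft-spec attribute (speech) and Chrome's prefixed one
// (x-webkit-speech); a browser ignores the attribute it does not recognize.
function enableSpeechInput(input) {
  input.setAttribute("speech", "speech");
  input.setAttribute("x-webkit-speech", "x-webkit-speech");
  return input;
}

// In a browser:
//   const box = enableSpeechInput(document.createElement("input"));
//   document.getElementById("speechinput").appendChild(box);
```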

For extra knowledge: WebKit is the web browser engine (also called a layout engine or rendering engine) of the Google Chrome web browser. Every browser has an underlying engine that interprets HTML, CSS and JS and lays out the elements on the browser screen. For instance, Gecko is the layout engine of Firefox, Trident is the layout engine of Internet Explorer and Presto is the engine of Opera. These layout engines are the core of any web browser, and several of them, including Gecko and WebKit, are open source.

onspeechchange="processspeech();"

This subscribes the processspeech() event handler to the speechchange event, which fires when speech or voice input changes the value of the <input> element. processspeech is just a function name and could be anything else.

onwebkitspeechchange="processspeech();"

Like the redundant attribute discussed above, this is a redundant, prefixed event handler. It is nevertheless necessary for the demonstration to work, because Google Chrome recognizes the onwebkitspeechchange event instead of the onspeechchange event.

This pattern of redundant, prefixed attributes and events may seem familiar if you have worked with CSS properties that are prefixed with -moz and work only in Mozilla/Gecko browsers, such as -moz-transform.
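The demonstration subscribes through inline on... attributes, but the same subscription can be made from script. The sketch below, with the hypothetical helper onSpeechChange(), assumes the event types are named speechchange and webkitspeechchange (the handler attribute names without the on prefix, which is the usual mapping) and registers one handler for both. Only one of the two is expected to fire in a given browser; a browser that fired both would run the handler twice.

```javascript
// Hypothetical helper: subscribe one handler to both the draft-spec event
// (speechchange) and Chrome's prefixed event (webkitspeechchange),
// without inline on... attributes in the markup.
function onSpeechChange(target, handler) {
  ["speechchange", "webkitspeechchange"].forEach(function (type) {
    target.addEventListener(type, handler);
  });
}

// In a browser:
//   onSpeechChange(document.getElementById("speech"), processspeech);
```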

JavaScript

```javascript
$(document).ready(function () {
    var d = document.getElementById("speech");

    // Check whether the element exposes either speech change event handler.
    // This works here because the handlers are assigned inline in the markup.
    if (!d.onwebkitspeechchange && !d.onspeechchange) {
        // Not supported: highlight the widget, hide the input, show the disabled mic.
        $("#speechinput").css("border-color", "#900");
        $("#speechinput input").css("display", "none");
        $("#speechinput img").css("display", "block");

        // A JavaScript string literal cannot span lines, so the message is concatenated.
        var notification = "Voice input functionality is currently not supported in your browser. " +
            "Please install the latest version (11+) of Google Chrome to access this functionality";
        notify(notification, 3000);

        // Show the same message again whenever the disabled mic is clicked.
        $("#speechinput").click(function () {
            notify(notification, 3000);
        });
    } else {
        notify("Voice input functionality is supported in your browser. " +
               "*Valid voice commands are: CHAT, VIDEO, PICTURE, LIVE, CONTACT", 3000);
    }
});

// Handler for the speechchange / webkitspeechchange event: runs after the
// recognized text has been written into the input box.
function processspeech() {
    var speechtext = $("#speech").val();
    var flag = 1; // 1 = valid command, 0 = invalid command
    var i;        // declared locally so the loops do not create a global

    switch (speechtext) {
        case "chat":
            $("#chat").click();
            break;
        case "video":
            $("#video").click();
            break;
        case "picture":
            $("#picture").click();
            break;
        case "live":
            $("#live").click();
            break;
        case "contact":
            $("#contact").click();
            break;
        default:
            flag = 0;
            // Flash the border red three times.
            // (Animating colors requires jQuery UI or the jQuery Color plugin.)
            for (i = 1; i <= 3; i++) {
                $("#speechinput").animate({"border-color": "#900"}, 500)
                                 .animate({"border-color": "#fff"}, 500);
            }
    }

    if (flag == 1) {
        // Flash the border green three times for a valid command.
        for (i = 1; i <= 3; i++) {
            $("#speechinput").animate({"border-color": "#060"}, 500)
                             .animate({"border-color": "#fff"}, 500);
        }
    } else {
        var notification = "\"<span>" + speechtext + "</span>\" is an invalid voice command. " +
            "*Valid voice commands are: CHAT, VIDEO, PICTURE, LIVE, CONTACT";
        notify(notification);
    }
}

// Slide the notification bar in, keep it visible for `time` ms (default 2000),
// then slide it back out of view.
function notify(notification, time) {
    if (typeof time == "undefined") time = 2000;
    var bar = $("#speechnotification");
    bar.html(notification);
    // Use the bar's own width: inside a plain function call `this` is the
    // window, not the notification element.
    bar.animate({"left": 0}, 1500)
       .delay(time)
       .animate({"left": -(bar.outerWidth() + 5)}, 1500);
}
```

The first section of the code runs when the document is ready. It checks whether the speech input element exposes either of the two event handlers. Because DOM elements are JavaScript objects, testing whether an event handler property is set is an intuitive way to detect support. For example, d.onwebkitspeechchange evaluates to undefined in Firefox, whereas in Chrome it holds the handler function assigned by the inline attribute. After the check, the code notifies the user of the result.
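That check works only because the markup assigns the handlers inline: in a browser that supports the event but has no handler assigned, the property would hold null, which is falsy, and the page would wrongly report no support. A sturdier sketch, still assuming (as the check above does) that a supporting browser exposes onspeechchange or onwebkitspeechchange as a property of input elements, uses the in operator to ask whether the property exists at all. supportsSpeechInput() is a hypothetical name.

```javascript
// Feature detection with the in operator: checks whether the element exposes
// the event handler property at all, not whether a handler is assigned
// (an existing but unassigned handler property holds null).
function supportsSpeechInput(input) {
  return "onspeechchange" in input || "onwebkitspeechchange" in input;
}

// In a browser:
//   supportsSpeechInput(document.createElement("input"));
```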

The second section of the code is the processspeech() event handler for the speechchange event. It runs after the speech has been converted to text and placed in the input box. The rest of this code is easy to follow, so I will not discuss it.
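The switch in processspeech() compares the recognized text exactly, so "Chat" or " chat" would count as invalid. A possible alternative, sketched under the assumption that the recognizer may vary case or add whitespace, is a lookup table keyed by the normalized command; COMMAND_TARGETS and resolveCommand() are hypothetical names, and the selectors are the ones the demonstration already clicks.

```javascript
// Hypothetical lookup table: spoken command -> selector of the link to click.
const COMMAND_TARGETS = {
  chat: "#chat",
  video: "#video",
  picture: "#picture",
  live: "#live",
  contact: "#contact"
};

// Normalize the recognized text (trim, lower-case) and look it up.
// Returns the selector, or null for an invalid command.
function resolveCommand(speechText) {
  const key = String(speechText).trim().toLowerCase();
  // hasOwnProperty avoids matching inherited keys such as "constructor".
  return Object.prototype.hasOwnProperty.call(COMMAND_TARGETS, key)
    ? COMMAND_TARGETS[key]
    : null;
}

// With jQuery, as in the demonstration:
//   const target = resolveCommand($("#speech").val());
//   if (target) { $(target).click(); } else { /* show the invalid-command notice */ }
```

Adding a command then means adding one table entry instead of another case block.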

- To keep the content concise, I will not discuss the various interface animations I built.

CSS

CSS does not play any significant role in the Speech Input API. Speech input is all about the HTML <input> element and the JavaScript handling of the events it triggers. I have used CSS here only to hide the textbox of the <input> element and show just the microphone icon to its right. The CSS also scales the microphone up so that it looks bigger, and replaces the text cursor shown when hovering over the microphone with a hand (pointer) cursor.

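```css
#speechinput input {
    cursor: pointer;               /* hand cursor instead of the text cursor */
    margin: 15px;
    color: transparent;            /* hide the recognized text */
    background-color: transparent; /* hide the textbox background */
    border: none;                  /* no visible textbox border */
    width: 15px;                   /* shrink the box so mainly the mic icon shows */
    /* enlarge the mic icon; prefixed versions for older browsers */
    -webkit-transform: scale(3.0, 3.0);
    -moz-transform: scale(3.0, 3.0);
    -ms-transform: scale(3.0, 3.0);
    transform: scale(3.0, 3.0);
}
```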

- I have not discussed all the CSS used to style and position the speech input element; view the page source of the demonstration for the rest.