1. Why Is Search So Important?

In the 1970s, information stayed in certain places, with certain people, in a certain format. In the 2010s, information is at your fingertips thanks to the revolution in the IT industry. When you have tons of detail to sift through, search plays a vital role in extracting the right information at the right time.

Let's look at the two analyses below.

Analysis 1

66% of respondents state that they average fewer than 2 page views per visit on their developer-oriented blog. The remaining 33% state that they get more than 2 pages per visit (24% even report more than 3), but this is probably due to different metrics or different ways of counting pages per visit.

In a nutshell, developer-focused blogs don't retain the reader for more than one or two pages.

Analysis 2

In the book The Two-Second Advantage, Vivek Ranadivé and Kevin Maney argue that the hockey players, musicians and CEOs who can predict what will happen are the ones who win. Over the past fifteen years, scientists have found that what distinguishes the greatest musicians, athletes, and performers from the rest of us isn't just their motor skills or athletic abilities – it is the ability to anticipate events before they happen. A great musician knows how notes will sound before they're played; a great CEO can predict how a business decision will turn out before it's made.

The inferred message is that information and timing are key to success, so complex, free-flow search plays a vital role in a fast-growing industry.

2. Performance Matters

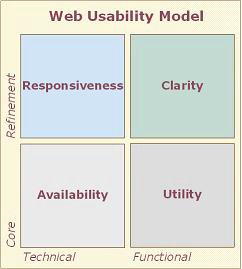

With technology moving this fast, Web performance really matters. Responsive sites can make the online experience effective, even enjoyable. A slow site can be unusable. To satisfy customers, a Web site must fulfill four distinct needs:

- Availability: A site that's unreachable, for any reason, is useless.

- Responsiveness: Having reached the site, pages that download slowly are likely to drive customers to try an alternate site.

- Clarity: If the site is sufficiently responsive to keep the customer's attention, other design qualities come into play. It must be simple and natural to use – easy to learn, predictable, and consistent.

- Utility: Last comes utility -- does the site actually deliver the information or service the customer was looking for in the first place?

These four dimensions are not alternative functional goals to be weighed against one another and prioritized; a site has to meet all of them.

3. What is Lucene?

Apache Lucene is a high-performance, full-featured text search engine library. It is written in Java and released as open source.

4. Architecture of Lucene

In a nutshell, Lucene is defined as:

- simple document model
  - document is a sequence of fields
  - fields are name/value pairs
  - values can be strings or Readers
    - clients must strip markup first
  - field names can be repeated
  - string-valued fields can be stored in index

- plug-in analysis model
  - grammar-based tokenizer included
  - tokenizers piped through filters: stop-list, lowercase, stem, etc.

- storage API: named, random-access blobs

- index library

- search library

- query parsers

5. Core Lucene APIs

Lucene has four core packages (APIs):

- org.apache.lucene.document

- org.apache.lucene.analysis

- org.apache.lucene.index

- org.apache.lucene.search

document

- A Document is a sequence of Fields.

- A Field is a <name, value> pair. name is the name of the field, e.g., title, body, subject, date, etc. value is text.

- Field values may be stored, indexed or analyzed (and, now, vectored).
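The document model above can be pictured with a few plain Java classes. This is a minimal sketch, not Lucene's own `Document` and `Field` classes: the names `SimpleDocument` and `SimpleField` and the two boolean flags are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative stand-in for the document model; these are not Lucene classes. */
final class DocumentModelSketch {

    /** A field is a name/value pair plus flags describing how it is handled. */
    static final class SimpleField {
        final String name;
        final String value;
        final boolean stored;   // keep the original value so it can be returned with a hit
        final boolean analyzed; // break the value into tokens before indexing

        SimpleField(String name, String value, boolean stored, boolean analyzed) {
            this.name = name;
            this.value = value;
            this.stored = stored;
            this.analyzed = analyzed;
        }
    }

    /** A document is an ordered sequence of fields; field names may repeat. */
    static final class SimpleDocument {
        private final List<SimpleField> fields = new ArrayList<>();

        SimpleDocument add(SimpleField field) {
            fields.add(field);
            return this;
        }

        /** Returns every value added under the given name, in insertion order. */
        List<String> values(String name) {
            List<String> out = new ArrayList<>();
            for (SimpleField f : fields) {
                if (f.name.equals(name)) {
                    out.add(f.value);
                }
            }
            return out;
        }

        int size() {
            return fields.size();
        }
    }

    public static void main(String[] args) {
        SimpleDocument doc = new SimpleDocument()
                .add(new SimpleField("title", "Lucene in brief", true, true))
                .add(new SimpleField("subject", "search", true, false))
                .add(new SimpleField("subject", "indexing", true, false));
        System.out.println(doc.values("subject"));
    }
}
```

Keeping fields in a list rather than a map is what allows the same field name to appear more than once in a document.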

analysis

- An Analyzer is a TokenStream factory.

- A TokenStream is an iterator over Tokens. Its input is a character iterator (Reader).

- A Token is a tuple of:
  - text (e.g., “pisa”)
  - type (e.g., “word”, “sent”, “para”)
  - start & length offsets, in characters (e.g., <5,4>)
  - positionIncrement (normally 1)

- Standard TokenStream implementations are Tokenizers, which divide characters into tokens, and TokenFilters, e.g., stop lists, stemmers, etc.
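The tokenizer-plus-filters idea can be sketched in plain Java as below. This is only an illustration of the pipeline shape, not Lucene's `Analyzer` or `TokenStream` API: the class and method names, the splitting rule and the tiny stop list are all assumptions, and token type and positionIncrement are left out.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

/** Illustrative analysis pipeline: tokenizer, then lowercase filter, then stop filter. */
final class AnalysisSketch {

    /** A token carries its text plus start and length offsets in characters. */
    static final class Token {
        final String text;
        final int start;
        final int length;

        Token(String text, int start, int length) {
            this.text = text;
            this.start = start;
            this.length = length;
        }

        @Override
        public String toString() {
            return text + "<" + start + "," + length + ">";
        }
    }

    /** Tokenizer step: split on anything that is not a letter or digit. */
    static List<Token> tokenize(String input) {
        List<Token> tokens = new ArrayList<>();
        int start = -1;
        for (int i = 0; i <= input.length(); i++) {
            boolean inWord = i < input.length() && Character.isLetterOrDigit(input.charAt(i));
            if (inWord && start < 0) {
                start = i;                      // a new token begins here
            } else if (!inWord && start >= 0) {
                tokens.add(new Token(input.substring(start, i), start, i - start));
                start = -1;                     // the token has ended
            }
        }
        return tokens;
    }

    /** Filter step: lowercase the text, keep the offsets. */
    static List<Token> lowercase(List<Token> in) {
        List<Token> out = new ArrayList<>();
        for (Token t : in) {
            out.add(new Token(t.text.toLowerCase(Locale.ROOT), t.start, t.length));
        }
        return out;
    }

    /** Filter step: drop tokens that appear in the stop list. */
    static List<Token> removeStopWords(List<Token> in, Set<String> stopWords) {
        List<Token> out = new ArrayList<>();
        for (Token t : in) {
            if (!stopWords.contains(t.text)) {
                out.add(t);
            }
        }
        return out;
    }

    /** The "analyzer": wires the tokenizer and the filters together. */
    static List<Token> analyze(String input) {
        Set<String> stopWords = new HashSet<>(Arrays.asList("a", "an", "the", "of", "in"));
        return removeStopWords(lowercase(tokenize(input)), stopWords);
    }

    public static void main(String[] args) {
        System.out.println(analyze("The Tower of Pisa"));
    }
}
```

For the input "The Tower of Pisa", the surviving tokens are `tower<4,5>` and `pisa<13,4>`; the offsets still point into the original string even though the stop words were removed.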

index

- batch-based: use file-sorting algorithms (textbook). Pros: fastest to build, fastest to search. Cons: slow to update.

- b-tree based: update in place (http://lucene.sf.net/papers/sigir90.ps). Pros: fast to search. Cons: update/build does not scale, complex implementation.

- segment based: lots of small indexes (Verity). Pros: fast to build, fast to update. Cons: slower to search.

The indexing diagram has the following parameters:

- M = 3

- 11 documents indexed

- stack has four indexes

- grayed indexes have been deleted

- 5 merges have occurred
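To give a feel for how a segment-based index keeps the number of small indexes under control, here is a minimal simulation of a stack of segments with a merge factor. It is a sketch under stated assumptions, not Lucene's actual merge policy: every added document becomes a one-document segment, and whenever the top `mergeFactor` segments on the stack have the same size they are merged into one. Because that trigger rule is an assumption, the counts it produces need not match the numbers in the diagram.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative simulation of segment merging; the merge trigger is an assumption. */
final class SegmentMergeSketch {
    private final int mergeFactor;
    // Document count of each segment; the last element is the top of the stack.
    private final List<Integer> stack = new ArrayList<>();
    private int merges = 0;

    SegmentMergeSketch(int mergeFactor) {
        if (mergeFactor < 2) {
            throw new IllegalArgumentException("mergeFactor must be at least 2");
        }
        this.mergeFactor = mergeFactor;
    }

    /** Adds one document as a new single-document segment, then merges while possible. */
    void addDocument() {
        stack.add(1);
        while (topSegmentsHaveEqualSize()) {
            int size = stack.get(stack.size() - 1);
            for (int i = 0; i < mergeFactor; i++) {
                stack.remove(stack.size() - 1);   // pop the segments being merged
            }
            stack.add(size * mergeFactor);        // push the merged segment
            merges++;
        }
    }

    /** True when the top mergeFactor segments all hold the same number of documents. */
    private boolean topSegmentsHaveEqualSize() {
        if (stack.size() < mergeFactor) {
            return false;
        }
        int top = stack.get(stack.size() - 1);
        for (int i = stack.size() - mergeFactor; i < stack.size(); i++) {
            if (stack.get(i) != top) {
                return false;
            }
        }
        return true;
    }

    List<Integer> segmentSizes() {
        return new ArrayList<>(stack);
    }

    int mergeCount() {
        return merges;
    }

    public static void main(String[] args) {
        SegmentMergeSketch index = new SegmentMergeSketch(3);
        for (int i = 0; i < 11; i++) {
            index.addDocument();
        }
        System.out.println(index.segmentSizes() + " after " + index.mergeCount() + " merges");
    }
}
```

The trade-off listed for segment-based indexes shows up directly: adding a document is cheap because it only touches the top of the stack, while a search has to consult every segment that is still on it.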

search

Primitive queries in Lucene search are:

- TermQuery: match docs containing a Term

- PhraseQuery: match docs with a sequence of Terms

- BooleanQuery: match docs matching other queries, e.g., +path:pisa +content:“Doug Cutting” -path:nutch

- new: SpanQuery

Derived queries include PrefixQuery, WildcardQuery, etc.
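The way the primitive queries combine can be sketched without Lucene at all. The example below evaluates the sample query `+path:pisa +content:"Doug Cutting" -path:nutch` against small in-memory documents. It is an assumption-laden illustration: documents are plain maps from field name to token list, the tokenizer is a simple split on non-alphanumeric characters, and no scoring is done.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

/** Illustrative term, phrase and boolean matching over in-memory documents. */
final class BooleanQuerySketch {

    /** Lowercases the text and splits it on anything that is not a letter or digit. */
    static List<String> tokens(String text) {
        List<String> out = new ArrayList<>();
        for (String t : text.toLowerCase(Locale.ROOT).split("[^a-z0-9]+")) {
            if (!t.isEmpty()) {
                out.add(t);
            }
        }
        return out;
    }

    /** Builds a two-field document: field name mapped to its token list. */
    static Map<String, List<String>> doc(String path, String content) {
        Map<String, List<String>> d = new HashMap<>();
        d.put("path", tokens(path));
        d.put("content", tokens(content));
        return d;
    }

    /** Term query: the field contains the given term. */
    static boolean containsTerm(Map<String, List<String>> doc, String field, String term) {
        List<String> fieldTokens = doc.get(field);
        return fieldTokens != null && fieldTokens.contains(term);
    }

    /** Phrase query: the field contains the terms next to each other, in order. */
    static boolean containsPhrase(Map<String, List<String>> doc, String field, List<String> phrase) {
        List<String> fieldTokens = doc.get(field);
        if (fieldTokens == null || phrase.isEmpty()) {
            return false;
        }
        return Collections.indexOfSubList(fieldTokens, phrase) >= 0;
    }

    /** Boolean query: every '+' clause must match and no '-' clause may match. */
    static boolean matches(Map<String, List<String>> doc) {
        boolean required = containsTerm(doc, "path", "pisa")
                && containsPhrase(doc, "content", Arrays.asList("doug", "cutting"));
        boolean prohibited = containsTerm(doc, "path", "nutch");
        return required && !prohibited;
    }

    public static void main(String[] args) {
        System.out.println(matches(doc("/docs/pisa/tower.txt", "Notes by Doug Cutting")));
        System.out.println(matches(doc("/docs/nutch/pisa.txt", "Notes by Doug Cutting")));
    }
}
```

The first document matches; the second is rejected because its path also contains the prohibited term.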

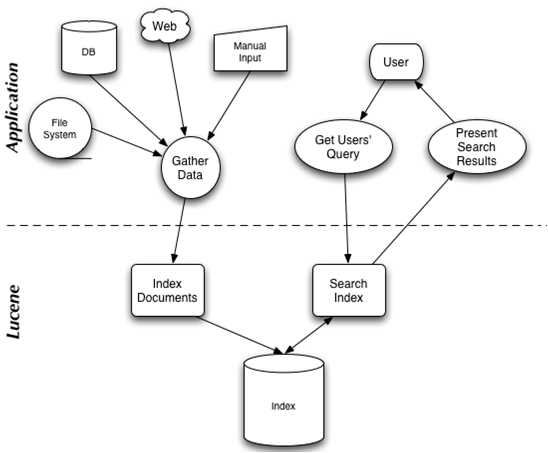

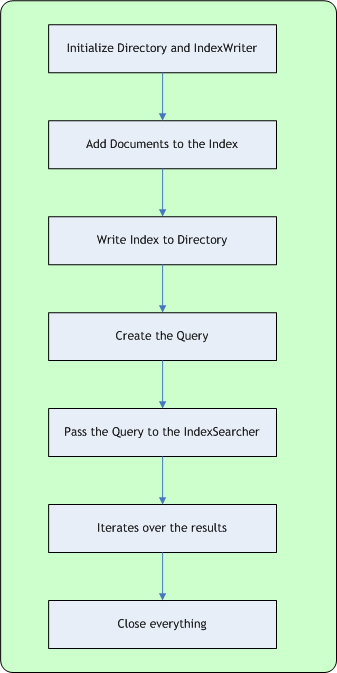

6. Methodology to Implement

7. Application Development

Initially, I wrote a simple console application with two methods:

- Indexer

- QuerySearcher

Application layers are drawn as:

Indexer Component

Our Indexer module takes a flat file with 4 columns as input. The file content is read and each line is stored as a Lucene 'document' record. A document is a single entity that is put into the index. It contains fields which, like columns in a database, are the individual pieces of information that make up the document. Different fields can be indexed using different algorithms and analyzers.

The IndexWriter component is responsible for managing the index. It creates the index, adds documents to it and optimizes it. The analyzer contains the policy for extracting index terms from the text. Here we use StandardAnalyzer, which tokenizes the text based on European-language grammars, sets everything to lowercase and removes English stop words. The generated documents are then indexed in Lucene's optimized way and written, in Lucene's own non-human-readable file format, to the directory named by the LUCENE_INDEX_DIRECTORY constant. Code snippet:

    // Imports needed by this method (Lucene 2.9/3.0-era API)
    import java.io.BufferedReader;
    import java.io.File;
    import java.io.FileReader;
    import org.apache.lucene.analysis.standard.StandardAnalyzer;
    import org.apache.lucene.document.Document;
    import org.apache.lucene.document.Field;
    import org.apache.lucene.index.IndexWriter;
    import org.apache.lucene.store.FSDirectory;
    import org.apache.lucene.util.Version;

    public void Indexer() throws Exception {
        File readFile = new File(
                "C:\\Dev\\POC\\Lucene\\ConsoleApp\\datafeed\\employee.csv");
        System.out.println("Start indexing");

        StandardAnalyzer analyzer = new StandardAnalyzer(Version.LUCENE_CURRENT);
        // create == true: build a new index, replacing any existing one
        IndexWriter writer = new IndexWriter(
                FSDirectory.open(new File(LUCENE_INDEX_DIRECTORY)),
                analyzer, true, IndexWriter.MaxFieldLength.LIMITED);
        writer.setUseCompoundFile(false);

        BufferedReader br = new BufferedReader(new FileReader(readFile));
        try {
            String strLine;
            while ((strLine = br.readLine()) != null) {
                // Limit -1 keeps trailing empty columns
                String[] array = strLine.split(",", -1);
                Document document = new Document();

                // Id, join date and team are indexed as single exact-match terms
                document.add(new Field("empIdField", column(array, 0),
                        Field.Store.YES, Field.Index.NOT_ANALYZED));
                document.add(new Field("joinDateField", column(array, 1),
                        Field.Store.YES, Field.Index.NOT_ANALYZED));
                // The name is tokenized by the analyzer so it can be searched as free text
                document.add(new Field("empNameField", column(array, 2),
                        Field.Store.YES, Field.Index.ANALYZED));
                document.add(new Field("empTeamField", column(array, 3),
                        Field.Store.YES, Field.Index.NOT_ANALYZED));

                writer.addDocument(document);
            }
            System.out.println("Optimizing index");
            writer.optimize();
        } catch (Exception e) {
            e.printStackTrace();
            System.err.println("Error: " + e.getMessage());
        } finally {
            // Release the file and the index lock even if indexing failed
            br.close();
            writer.close();
        }
    }

    // Returns the column at position i, or "" when the line has fewer columns
    private static String column(String[] array, int i) {
        return i < array.length ? array[i] : "";
    }

QuerySearcher Component

To run the user's query (held in the userQuery variable), we create an IndexReader that points to LUCENE_INDEX_DIRECTORY. The IndexReader reads the index files, and the IndexSearcher built on top of it returns results for the supplied query.

A StandardAnalyzer object is created for use by the query parser. The query parser is responsible for parsing a string of text to create a Query object. It evaluates Lucene query syntax and uses an analyzer (which should be the same one you used to index the text) to tokenize the individual clauses. When the searcher executes the user query, the result set is stored in the 'hits' array, which is then iterated to fetch the expected result information. Code snippet:

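    // Imports needed by this method (Lucene 2.9/3.0-era API)
    import java.io.File;
    import org.apache.lucene.analysis.standard.StandardAnalyzer;
    import org.apache.lucene.document.Document;
    import org.apache.lucene.index.IndexReader;
    import org.apache.lucene.queryParser.QueryParser;
    import org.apache.lucene.search.IndexSearcher;
    import org.apache.lucene.search.Query;
    import org.apache.lucene.search.ScoreDoc;
    import org.apache.lucene.search.TopScoreDocCollector;
    import org.apache.lucene.store.FSDirectory;
    import org.apache.lucene.util.Version;

    public static void QuerySearcher() throws Exception {
        IndexReader reader = null;
        try {
            String userQuery = "EmployeeName1000109";

            // Rebuild the index before searching
            IndexBuilder builder = new IndexBuilder();
            builder.Indexer();

            // Use the same analyzer that was used at index time
            StandardAnalyzer analyzer = new StandardAnalyzer(Version.LUCENE_CURRENT);
            File file = new File(LUCENE_INDEX_DIRECTORY);
            reader = IndexReader.open(FSDirectory.open(file), true); // true = read-only
            IndexSearcher searcher = new IndexSearcher(reader);

            // Collect up to 1000 top-scoring hits
            TopScoreDocCollector collector = TopScoreDocCollector.create(1000, false);
            // empNameField is the default field for terms without a field prefix
            QueryParser parser = new QueryParser(Version.LUCENE_CURRENT, "empNameField", analyzer);
            Query query = parser.parse(userQuery);
            searcher.search(query, collector);
            ScoreDoc[] hits = collector.topDocs().scoreDocs;

            for (int i = 0; i < hits.length; i++) {
                int scoreId = hits[i].doc;
                // The rest of the loop body is cut off in the source; printing
                // the stored fields is an assumed completion.
                Document document = searcher.doc(scoreId);
                System.out.println(document.get("empIdField") + " "
                        + document.get("empNameField"));
            }
        } finally {
            if (reader != null) {
                reader.close();
            }
        }
    }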
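The role of the query parser described above, turning a string into structured clauses with a default field, can be pictured with a very small parser. This is a rough sketch, not Lucene's `QueryParser`: it only understands whitespace-separated clauses with an optional `+` or `-` prefix and an optional `field:` prefix, and lowercasing stands in for running the analyzer. The class name and the field names used in `main` beyond `empNameField` usage are illustrative.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Illustrative mini query parser; it covers only a tiny part of Lucene's query syntax. */
final class QueryParserSketch {

    /** One parsed clause: which field to search, for which term, and whether it is required or prohibited. */
    static final class Clause {
        final String field;
        final String term;
        final boolean required;
        final boolean prohibited;

        Clause(String field, String term, boolean required, boolean prohibited) {
            this.field = field;
            this.term = term;
            this.required = required;
            this.prohibited = prohibited;
        }

        @Override
        public String toString() {
            String prefix = required ? "+" : (prohibited ? "-" : "");
            return prefix + field + ":" + term;
        }
    }

    /** Splits the query on whitespace and resolves each clause against the default field. */
    static List<Clause> parse(String defaultField, String userQuery) {
        List<Clause> clauses = new ArrayList<>();
        for (String raw : userQuery.trim().split("\\s+")) {
            if (raw.isEmpty()) {
                continue;
            }
            boolean required = raw.startsWith("+");
            boolean prohibited = raw.startsWith("-");
            String body = (required || prohibited) ? raw.substring(1) : raw;

            // "field:term" overrides the default field
            int colon = body.indexOf(':');
            String field = colon > 0 ? body.substring(0, colon) : defaultField;
            String text = colon > 0 ? body.substring(colon + 1) : body;
            if (text.isEmpty()) {
                continue;
            }
            // Lowercasing is a stand-in for analysis of the term text
            clauses.add(new Clause(field, text.toLowerCase(Locale.ROOT), required, prohibited));
        }
        return clauses;
    }

    public static void main(String[] args) {
        System.out.println(parse("empNameField", "EmployeeName1000109"));
        System.out.println(parse("empNameField", "EmployeeName1000109 +empTeamField:TeamA"));
    }
}
```

A term without a field prefix, such as the `EmployeeName1000109` query used in the console application, is resolved against the default field passed to the parser.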

8. Application Architecture

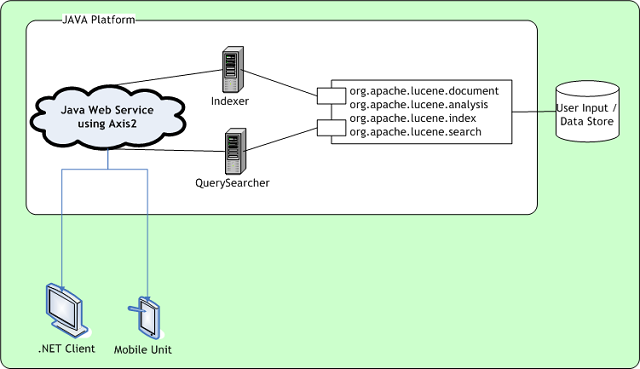

As a .NET developer, my goal is to perform free-text search from a .NET client. So, the application architecture shown above was developed.

The same Java console application is upgraded to a service-based system by exposing its functionality as a Java web service using Axis. The newly developed Java web method can be easily accessed by adding a service reference in the .NET client application, since it follows the standard WSDL pattern. When the user submits a query from a .NET UI control, the code-behind calls the referenced Java web service, which in turn executes the Lucene search. The result set is returned in a Response object and shown in the .NET UI page.

9. Points of Interest

Lucene.NET is a port of the Lucene search engine library, written in C# and targeted at .NET runtime users. The Lucene search library is based on an inverted index. Apache Lucene.Net is an effort undergoing incubation at The Apache Software Foundation (ASF), sponsored by the Apache Incubator. Still, this article shows how to achieve interoperability between a .NET front end and a Java back end.
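For readers new to the term, the inverted index mentioned above can be pictured as a map from each term to the set of documents that contain it. The sketch below is a minimal in-memory version in plain Java, under the assumption of a simple lowercase-and-split tokenizer; Lucene's real index is file-based and far more elaborate, and the class name here is illustrative.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.SortedSet;
import java.util.TreeSet;

/** Illustrative in-memory inverted index: term mapped to the ids of documents containing it. */
final class InvertedIndexSketch {
    private final Map<String, SortedSet<Integer>> postings = new HashMap<>();

    /** Tokenizes the text and records the document id under every term. */
    void add(int docId, String text) {
        for (String term : text.toLowerCase(Locale.ROOT).split("[^a-z0-9]+")) {
            if (!term.isEmpty()) {
                postings.computeIfAbsent(term, k -> new TreeSet<Integer>()).add(docId);
            }
        }
    }

    /** Looks up one term; the cost depends on the term, not on the number of documents scanned. */
    SortedSet<Integer> search(String term) {
        SortedSet<Integer> ids = postings.get(term.toLowerCase(Locale.ROOT));
        SortedSet<Integer> result = new TreeSet<Integer>();
        if (ids != null) {
            result.addAll(ids);
        }
        return result;
    }

    public static void main(String[] args) {
        InvertedIndexSketch index = new InvertedIndexSketch();
        index.add(1, "Lucene is a search library");
        index.add(2, "Search engines use an inverted index");
        System.out.println(index.search("search"));
        System.out.println(index.search("lucene"));
    }
}
```

The point of the structure is that a query looks up terms directly instead of scanning every document's text.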

History

- Version 1.0 - Initial version