Introduction

In recent years, face recognition has attracted considerable attention from engineers and neuroscientists alike, because it has many potential applications in computer vision, communication, and automatic access control systems. Face detection is the first step of automatic face recognition and therefore an important part of it. However, face detection is not straightforward, because faces vary widely in image appearance: pose (frontal, non-frontal), occlusion, image orientation, illumination conditions, and facial expression.

Color Segmentation

Detecting skin color in color images is a popular and useful technique for face detection.

Many techniques have been reported for locating skin color regions in an input image. While the input color image is typically in RGB format, these techniques usually work on color components in another color space, such as HSV or YIQ. The reason is that RGB components depend on the lighting conditions, so face detection may fail if the lighting changes. Among the many color spaces, this project used YCbCr, because the conversion is available as an existing Matlab function, which saves computation time. In the YCbCr color space, the luminance information is contained in the Y component and the chrominance information is in Cb and Cr, so the luminance information can easily be separated out. The RGB components were converted to YCbCr components using the following formulas:

Y = 0.299R + 0.587G + 0.114B

Cb = -0.169R - 0.332G + 0.500B

Cr = 0.500R - 0.419G - 0.081B
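The conversion above can be sketched as a small helper. This is only an illustration: the class name `YCbCrSketch` and the method `FromRgb` are hypothetical, and Cb and Cr are left signed (no offset added), exactly as the formulas are written.

```csharp
using System;

public static class YCbCrSketch
{
    // Converts one 8-bit RGB pixel with the coefficients listed in the article.
    // Cb and Cr stay signed here; no +128 offset is applied (an assumption
    // that follows the formulas as written).
    public static (double Y, double Cb, double Cr) FromRgb(byte r, byte g, byte b)
    {
        double y  =  0.299 * r + 0.587 * g + 0.114 * b;
        double cb = -0.169 * r - 0.332 * g + 0.500 * b;
        double cr =  0.500 * r - 0.419 * g - 0.081 * b;
        return (y, cb, cr);
    }
}
```

For example, `YCbCrSketch.FromRgb(255, 0, 0)` gives a Cr of 127.5 for a pure red pixel, which is the largest Cr value these coefficients can produce.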

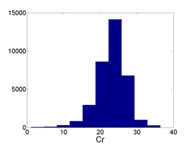

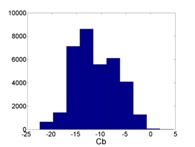

In the skin color detection process, each pixel was classified as skin or non-skin based on its color components. The detection window for skin color was determined from the mean and standard deviation of the Cb and Cr components. Their histogram distribution is shown in the figure below:
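One way to turn the mean and standard deviation into a detection window is sketched below. The article does not state how wide the window is, so the multiplier `k` is a hypothetical tuning parameter, and the names `SkinWindowSketch`, `Stats`, and `IsSkin` are made up for this illustration.

```csharp
using System;
using System.Linq;

public static class SkinWindowSketch
{
    // Mean and population standard deviation of a set of chroma samples.
    public static (double Mean, double Std) Stats(double[] samples)
    {
        double mean = samples.Average();
        double variance = samples.Select(s => (s - mean) * (s - mean)).Average();
        return (mean, Math.Sqrt(variance));
    }

    // A pixel counts as skin when both chroma values lie within
    // mean +/- k * std of the training samples. k = 2.0 is an assumed default.
    public static bool IsSkin(double cb, double cr,
        (double Mean, double Std) cbStats,
        (double Mean, double Std) crStats,
        double k = 2.0)
    {
        return Math.Abs(cb - cbStats.Mean) <= k * cbStats.Std
            && Math.Abs(cr - crStats.Mean) <= k * crStats.Std;
    }
}
```

The statistics would be computed once from hand-labeled skin pixels in the training images and then reused for every pixel of a test image.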

The color segmentation was applied to a training image, and the result is shown in the figure below. Some non-skin objects inevitably appear in the result, because their colors fall within the skin color range.

This is my code for color segmentation:

    public int[] FaceDetection(Form1 Main, PictureBox PicFirst,
        PictureBox Picresult, TextBox TWskin, PictureBox face, TextBox TBskin)
    {
        Testdetection = 0;
        Graphics hh = PicFirst.CreateGraphics();
        // The loops below index every bitmap by the size of PicFirst, so the
        // source image is assumed to be at least as large as the PictureBox.
        Bitmap PreResult = new Bitmap(PicFirst.Image);
        // Both working bitmaps use PicFirst's size, matching the loop bounds.
        Bitmap Result = new Bitmap(PicFirst.Width, PicFirst.Height);
        Bitmap HisResult = new Bitmap(PicFirst.Width, PicFirst.Height);
        Bitmap Template50 = new Bitmap("sample50.bmp");
        Bitmap Template25 = new Bitmap("sample25.bmp");
        Bitmap facee = new Bitmap(face.Width, face.Height);
        for (int i = 0; i < PicFirst.Width; i++)
        {
            for (int j = 0; j < PicFirst.Height; j++)
            {
                // Read the pixel once instead of once per channel.
                Color px = PreResult.GetPixel(i, j);
                R = px.R;
                G = px.G;
                B = px.B;
                // Cr (red-difference chroma), clamped at zero so it is a valid gray level.
                Cr = 0.500 * R - 0.419 * G - 0.081 * B;
                if (Cr < 0)
                    Cr = 0;
                HisResult.SetPixel(i, j, Color.FromArgb((int)Cr, (int)Cr, (int)Cr));

Image Segmentation

The next step is to separate the image blobs in the color-filtered binary image into individual regions. The process consists of three steps. The first step is to fill isolated black holes and to remove isolated white regions that are smaller than the minimum face area in the training images. The threshold (170 pixels) is set conservatively. After this filtering and an initial erosion, only white regions of reasonable area remain, as illustrated in the figure below:
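The removal of small white regions described above can be sketched with a flood fill over a boolean mask. This is an illustration under stated assumptions, not the code used in this article: the class `RegionFilterSketch`, the 4-connected neighborhood, and the `bool[,]` mask layout (`mask[y, x]`) are all choices made for the example.

```csharp
using System.Collections.Generic;

public static class RegionFilterSketch
{
    // Clears every 4-connected white region whose area is below minArea.
    // mask[y, x] == true means "white"; the mask is modified in place.
    public static void RemoveSmallRegions(bool[,] mask, int minArea)
    {
        int h = mask.GetLength(0), w = mask.GetLength(1);
        var visited = new bool[h, w];
        int[] dx = { 1, -1, 0, 0 };
        int[] dy = { 0, 0, 1, -1 };

        for (int y = 0; y < h; y++)
        {
            for (int x = 0; x < w; x++)
            {
                if (!mask[y, x] || visited[y, x]) continue;

                // Flood-fill one region and remember its pixels.
                var region = new List<(int X, int Y)>();
                var stack = new Stack<(int X, int Y)>();
                stack.Push((x, y));
                visited[y, x] = true;
                while (stack.Count > 0)
                {
                    var p = stack.Pop();
                    region.Add(p);
                    for (int d = 0; d < 4; d++)
                    {
                        int nx = p.X + dx[d], ny = p.Y + dy[d];
                        if (nx < 0 || ny < 0 || nx >= w || ny >= h) continue;
                        if (!mask[ny, nx] || visited[ny, nx]) continue;
                        visited[ny, nx] = true;
                        stack.Push((nx, ny));
                    }
                }

                // Regions below the area threshold are erased.
                if (region.Count < minArea)
                    foreach (var p in region) mask[p.Y, p.X] = false;
            }
        }
    }
}
```

With the threshold from the text, the call would be `RegionFilterSketch.RemoveSmallRegions(mask, 170)`. Small black holes could plausibly be filled by running the same routine on the inverted mask and inverting the result back.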

This code is used for image segmentation:

                // Threshold Cr into a binary mask: skin candidates become white,
                // everything else black.
                Cr = (Cr > 10) ? 255 : 0;
                Result.SetPixel(i, j, Color.FromArgb((int)Cr, (int)Cr, (int)Cr));
            }
        }

Secondly, to separate merged regions into individual faces, the Roberts Cross edge detection algorithm is used. The Roberts Cross operator performs a simple, quick-to-compute, 2-D spatial gradient measurement on an image. It thus highlights regions of high spatial gradient, which often correspond to edges. The highlighted region is converted into black lines and eroded to connect crossly separated pixels.

This code is used for edge detection:
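For readers who want to see the operator itself, here is a minimal sketch of the Roberts Cross gradient on a grayscale array. The class name `RobertsCrossSketch`, the `int[,]` layout (`gray[y, x]`), and the clamping to 255 are assumptions made for this example; the magnitude uses the common |Gx| + |Gy| approximation.

```csharp
using System;

public static class RobertsCrossSketch
{
    // Approximates the gradient magnitude as |Gx| + |Gy| using the two
    // 2x2 Roberts kernels. The last row and column are left at 0 because
    // the kernels need a lower-right neighbor.
    public static int[,] Apply(int[,] gray)
    {
        int h = gray.GetLength(0), w = gray.GetLength(1);
        var result = new int[h, w];
        for (int y = 0; y < h - 1; y++)
        {
            for (int x = 0; x < w - 1; x++)
            {
                int gx = gray[y, x] - gray[y + 1, x + 1];   // one diagonal difference
                int gy = gray[y, x + 1] - gray[y + 1, x];   // the other diagonal
                result[y, x] = Math.Min(255, Math.Abs(gx) + Math.Abs(gy));
            }
        }
        return result;
    }
}
```

A flat image produces all zeros, while a vertical step from 0 to 255 produces a saturated response along the step.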

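        // FiltersSequence and Edges come from the AForge.NET imaging library
        // (AForge.Imaging.Filters). Edges is its general-purpose edge filter,
        // not a dedicated Roberts Cross implementation.
        FiltersSequence processingFilter = new FiltersSequence();
        processingFilter.Add(new Edges());
        WPresentdetection = 0;
        BPresentdetection1 = 0;
        Picresult.Image = processingFilter.Apply(Result);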

Edge Extraction Template Face

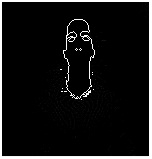

We then turned to a different way of building the face template. Since much of what distinguishes a face lies in its features, we converted all grayscale images to black and white, used edge extraction to extract the important features, and combined the results to form a template face. The template face shown here was constructed from a sample of 25 faces taken from the training set.
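The text does not say how the edge images were combined, so the following is only one plausible approach: a per-pixel vote across equally sized binary edge maps. The class `TemplateBuilderSketch`, the `minVotes` parameter, and the `bool[,]` layout are all hypothetical.

```csharp
using System.Collections.Generic;

public static class TemplateBuilderSketch
{
    // Combines equally sized binary edge maps by voting: a template pixel
    // is set when at least minVotes of the maps have an edge at that position.
    public static bool[,] Combine(IReadOnlyList<bool[,]> edgeMaps, int minVotes)
    {
        int h = edgeMaps[0].GetLength(0), w = edgeMaps[0].GetLength(1);
        var template = new bool[h, w];
        for (int y = 0; y < h; y++)
        {
            for (int x = 0; x < w; x++)
            {
                int votes = 0;
                foreach (var map in edgeMaps)
                    if (map[y, x]) votes++;
                template[y, x] = votes >= minVotes;
            }
        }
        return template;
    }
}
```

With 25 sample faces, a call such as `Combine(edgeMaps, 13)` would keep only the edges present in a majority of the samples; the right vote count is a tuning decision.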

        // Slide the 50x50 template over the binary mask in 20-pixel steps.
        // The loop bounds assume a 150x150 input image.
        for (int d = 0; d < 150 - 50; d += 20)
        {
            for (int c = 0; c < 150 - 50; c += 20)
            {
                Testdetection = 0;
                WPresentdetection = 0;
                BPresentdetection1 = 0;
                // Count template-white pixels that are also white in the mask.
                for (int w = 0; w < Template50.Width; w++)
                {
                    for (int h = 0; h < Template50.Height; h++)
                    {
                        if (Template50.GetPixel(w, h).B == 255 &&
                            Result.GetPixel(d + w, c + h).B == 255)
                        {
                            WPresentdetection++;
                        }
                    }
                }
                TWskin.Text = WPresentdetection.ToString();
                TBskin.Text = BPresentdetection1.ToString();
                // More than 377 matching pixels counts as a face.
                if (WPresentdetection > 377)
                {
                    hh.DrawRectangle(new Pen(Color.Red), d, c, 60, 60);
                    // Copy the detected 60x60 region (red channel as gray) into the preview.
                    for (int i = 0; i < 60; i++)
                    {
                        for (int j = 0; j < 60; j++)
                        {
                            r = PreResult.GetPixel(i + d, j + c).R;
                            facee.SetPixel(i, j, Color.FromArgb((int)r, (int)r, (int)r));
                        }
                    }
                    // Assign the preview once, after the copy is complete.
                    face.Image = facee;
                    Testdetection = 1;
                    break;
                }
            }
            // Stop scanning after the first detection and reset the counters.
            if (Testdetection == 1)
            {
                WPresentdetection = 0;
                BPresentdetection1 = 0;
                Testdetection = 0;
                break;
            }
        }
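The matching loop above depends on WinForms controls and bitmaps, which makes it hard to test in isolation. As a rough sketch, the same idea can be written against plain boolean arrays. The names `TemplateMatchSketch`, `Score`, and `FindFirst` are hypothetical, and arrays are indexed as `[y, x]`.

```csharp
public static class TemplateMatchSketch
{
    // Counts template-white pixels that are also white in the image when the
    // template's top-left corner is placed at (left, top).
    public static int Score(bool[,] image, bool[,] template, int left, int top)
    {
        int th = template.GetLength(0), tw = template.GetLength(1);
        int score = 0;
        for (int y = 0; y < th; y++)
            for (int x = 0; x < tw; x++)
                if (template[y, x] && image[top + y, left + x])
                    score++;
        return score;
    }

    // Slides the template in fixed steps and returns the first offset whose
    // score exceeds the threshold, or null when no position qualifies.
    public static (int Left, int Top)? FindFirst(
        bool[,] image, bool[,] template, int step, int threshold)
    {
        int h = image.GetLength(0), w = image.GetLength(1);
        int th = template.GetLength(0), tw = template.GetLength(1);
        for (int top = 0; top + th <= h; top += step)
            for (int left = 0; left + tw <= w; left += step)
                if (Score(image, template, left, top) > threshold)
                    return (left, top);
        return null;
    }
}
```

With the values used in this article, the call would look like `FindFirst(mask, template, 20, 377)`. Deriving the loop bounds from the array sizes avoids hard-coding a 150x150 image.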

Reference

http://stanford.edu

"I Rely Solely On God."

History

- 18th August, 2009: Initial post

- 20th February, 2012: Article update