Introduction

Some time ago, I had to build a project that determined the shortest path inside a matrix. I thought to myself, "There's nothing better than path-finding for this." There are a huge number of links and explanations about path-finding, but I didn't find a version written in C# that met my needs. So, I decided to write an A* implementation in C#. This code was really useful for me and I bet it can be useful for many other people, too. I won't explain the algorithm in much detail, because typing "pathfinding algorithm A*" into Google brings up plenty of documents that cover every detail.

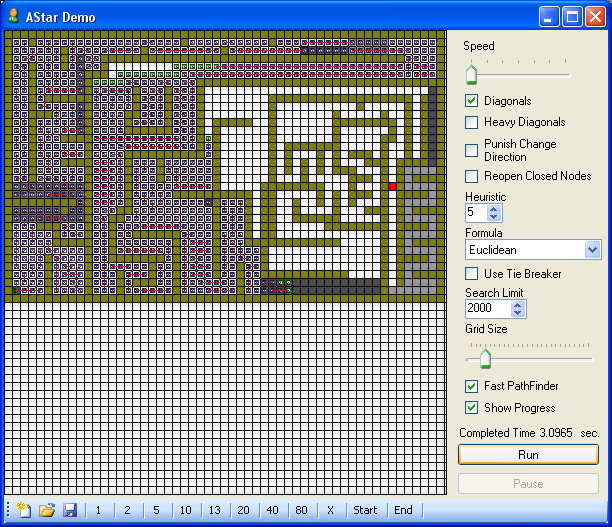

About the application

A* is a generic algorithm and there are no perfect parameters to be set. The algorithm has many settings, so the best way to determine the appropriate parameters for your project is to test different combinations. As a note: a good A* implementation usually does not use a standard ArrayList or List for the open nodes. If a standard List is used, the algorithm will spend a huge amount of time searching for nodes in that list. Instead, a priority queue should be used. I borrowed code from BenDi for the priority queue implementation. I changed it a little and converted it to use generics instead of ArrayList, which makes it run faster. This project is really useful for two reasons:

- I followed the A* implementation and I tried to implement it to run with good performance, so the algorithm itself can be easily reused in any project.

- The front-end gives a full chance to experiment with many variables where you can really watch and learn how it works, changing the heuristic, formula or options to analyze what could be the best setting for your project.

The call in the source code that does the entire job is the following:

public List<PathFinderNode> FindPath(Point start, Point end, byte[,] grid);

This method takes a start point, an end point and the grid as parameters. It returns the path as a list of nodes with their coordinates. I make this call on a separate thread, which keeps the application responsive while PathFinder is working.

Algorithm settings

Speed

This is the rendering speed; reducing speed permits detailed examination of how the algorithm opens and closes the nodes in real-time.

Diagonals

This hint will tell the algorithm if it is allowed to process diagonals as a valid path. If this check box is not set, the algorithm will process just 4 directions instead of 8.

Heavy Diagonals

If this check box is set, the cost of the diagonals will be bigger; this will make the algorithm avoid using diagonals.
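A minimal sketch of how the Diagonals and Heavy Diagonals options might look in code. The names `NeighborSketch`, `Directions` and `StepCost`, and the default factor of 2.41, are assumptions made for illustration and are not quoted from the article's source.

```csharp
using System;

public static class NeighborSketch
{
    // Straight moves only: up, right, down, left.
    private static readonly (int dx, int dy)[] Four =
    {
        (0, -1), (1, 0), (0, 1), (-1, 0)
    };

    // Straight moves followed by the four diagonal moves.
    private static readonly (int dx, int dy)[] Eight =
    {
        (0, -1), (1, 0), (0, 1), (-1, 0),
        (1, -1), (1, 1), (-1, 1), (-1, -1)
    };

    // Returns 8 offsets when diagonals are allowed, otherwise 4.
    public static (int dx, int dy)[] Directions(bool diagonals)
    {
        return diagonals ? Eight : Four;
    }

    // Cost of stepping onto a cell. With heavy diagonals enabled,
    // a diagonal step is multiplied by an assumed factor so that
    // the search prefers straight moves.
    public static int StepCost(int cellCost, int dx, int dy,
                               bool heavyDiagonals, double diagonalFactor = 2.41)
    {
        bool diagonal = dx != 0 && dy != 0;
        return (heavyDiagonals && diagonal)
            ? (int)(cellCost * diagonalFactor)
            : cellCost;
    }
}
```

Keeping the offsets in a table means the main loop does not need to branch on the Diagonals option for every node; it simply iterates over whichever table was selected.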

Punish Change Direction

If this check box is set, every change of direction adds a small cost. The end result is that, if a path is found, it will be comparatively smooth without too many direction changes, thus looking more natural. The downside is that it will take more time because the algorithm must search extra nodes.
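The idea can be sketched as a small helper that compares the incoming step with the outgoing step. The class name `DirectionPenaltySketch` and the penalty value of 20 are hypothetical; the article does not state the actual value.

```csharp
public static class DirectionPenaltySketch
{
    // Returns the extra cost for the move current -> next, given that
    // the node was reached through the move parent -> current.
    public static int Penalty(int parentX, int parentY,
                              int currentX, int currentY,
                              int nextX, int nextY,
                              int penalty = 20)
    {
        int inDx = currentX - parentX, inDy = currentY - parentY;
        int outDx = nextX - currentX, outDy = nextY - currentY;

        // The start node has no incoming direction, so nothing to punish.
        if (inDx == 0 && inDy == 0)
            return 0;

        bool sameDirection = inDx == outDx && inDy == outDy;
        return sameDirection ? 0 : penalty;
    }
}
```

The returned value would be added to the cost (G) of the neighbor before it is pushed onto the open list.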

Reopen Closed Nodes

If this is checked, the algorithm is allowed to reopen nodes that were already closed when the new cost is less than the previous value. Reopening nodes produces a better and smoother path, but it takes more time.

Heuristic

This is a constant that will affect the estimated distance from the current position to the goal destination. A heuristic function is used to create an estimate of how long it will take to reach the goal state. The better the estimate, the shorter the found path.

Formula

This is the equation used to calculate the heuristic. Different formulas will give different results: some will be faster, others slower, and the resulting path may vary. The formula to be used depends strongly on what the A* algorithm is used for.
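As an illustration, three common grid heuristics can be sketched as follows. The enum and method names are assumptions, and the `weight` parameter stands in for the Heuristic constant described above; the actual set of formulas offered by the front-end may differ.

```csharp
using System;

public enum HeuristicFormulaSketch { Manhattan, MaxDXDY, Euclidean }

public static class HeuristicSketch
{
    // Estimated cost from (x, y) to the goal, scaled by the heuristic weight.
    public static int Estimate(HeuristicFormulaSketch formula, int weight,
                               int x, int y, int goalX, int goalY)
    {
        int dx = Math.Abs(x - goalX);
        int dy = Math.Abs(y - goalY);

        switch (formula)
        {
            case HeuristicFormulaSketch.Manhattan:
                // Sum of the axis distances; suits 4-direction movement.
                return weight * (dx + dy);
            case HeuristicFormulaSketch.MaxDXDY:
                // Largest axis distance; suits 8-direction movement.
                return weight * Math.Max(dx, dy);
            case HeuristicFormulaSketch.Euclidean:
                // Straight-line distance, truncated to int.
                return (int)(weight * Math.Sqrt(dx * dx + dy * dy));
            default:
                throw new ArgumentOutOfRangeException(nameof(formula));
        }
    }
}
```

For the same pair of points, Manhattan gives the largest estimate and MaxDXDY the smallest, which is one reason the number of nodes opened changes when the formula is switched.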

Use Tie Breaker

Sometimes when A* is finding the path, it will find many possible choices with the same cost and destination. The tie breaker setting tells the algorithm that, when it has multiple equal choices to search, it should pick one and keep going instead of exploring them all. As it goes, the changing costs are fed into a second formula to determine the "best guess" to follow. Usually, this formula increments the heuristic from the current position to the goal by a value multiplied by a small constant factor:

Heuristic = Heuristic + Abs(CurrentX * GoalY - GoalX * CurrentY) * 0.001
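The formula above translates directly into code. This is a small sketch with hypothetical names (`TieBreakerSketch`, `Apply`); it uses double so that the 0.001 factor is not truncated away.

```csharp
using System;

public static class TieBreakerSketch
{
    // Adds a tiny cross-product term to the heuristic so that nodes
    // with otherwise equal cost are no longer exactly tied.
    public static double Apply(double heuristic,
                               int currentX, int currentY,
                               int goalX, int goalY)
    {
        int cross = Math.Abs(currentX * goalY - goalX * currentY);
        return heuristic + cross * 0.001;
    }
}
```

As the formula is written, the cross product is taken against the grid origin, so the added term is zero for nodes that lie on the straight line through the origin and the goal, and it grows as a node moves away from that line.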

Search Limit

This is the maximum number of closed nodes to be examined before a "Path not found" message is returned. This is a useful parameter because sometimes the grid can be too big and we need to know a path only if the goal is near the current position. It is also useful if the process can't spend too much time calculating the path.

Grid Size

This parameter just affects the front-end. It can change the grid size, where reducing the grid size gives a chance to create a bigger test but will take longer to render.

Fast PathFinder

When unchecked, the implementation used is the algorithm as it usually appears in the books. When checked, it will use my own PathFinder implementation. It requires more memory, but it is about 300 to 1500 times faster depending on the map complexity. See the PathFinder Optimization section below for more details.

Show Progress

This permits observation of the algorithm as it operates in real-time. If this box is checked, the completion time will be the calculation time plus the rendering time.

Completed Time

This is the time that the algorithm took to calculate the path. To know the true value, uncheck "Show Progress."

Path finder optimization

After I implemented the original algorithm, I got frustrated because of the amount of time it took to resolve paths. This was especially the case for bigger grids and when there was no solution to the path. Basically, I noticed that the open and closed lists were killing the algorithm. The first optimization was to replace the open list with a sorted list and the closed list with a hashtable. This improved the calculation time, but was still not what I had hoped for. Later, I changed the open list from a sorted list to a priority queue; it helped, but still did not make a big difference.

The big problem was that when the grid was big (1000x1000), the amount of open and closed nodes in the list was huge. Searching those lists took a long time, whatever method I used. At first, I thought to use classes to keep the node information, but that was going to make the garbage collection crazy between the nodes' memory allocation and releasing the memory of the nodes that were disposed of. Instead of using classes, I decided to use structures and reuse them in the code. I got more improvements from this, but still nothing close to what maybe StarCraft does to handle eight hundred units in real-time.

My current application is like a Visio application where I need to find a path between objects. The objects are not moving, so I didn't need a super-fast algorithm, but I needed something that could react in less than one second. I needed to find a way to get nodes from the open list in O(1) or close to that. I thought the only way to get that was to not have an open list at all. That is when I thought of using a calculation grid. This calculation grid was going to store the nodes. Thus, if I knew the (X, Y) location, I could reach the node immediately, in O(1).

Because of this I could get rid of the closed list; I could mark a closed node directly in the calculation grid. Also, since every node has a link to its parent node, I would not need a closed list at all. However, I could not get rid of the open list because I needed to keep getting the node with the lowest total cost (F), and the second grid didn't help with that. This time, I kept using the open list (priority queue), but only to push nodes and to get the node with the lowest cost. Priority queues are excellent for that: no linear access at all.

The only downside was that keeping a second grid consumed a huge amount of memory, because every cell stored a node and every node was 32 bytes long. For a 1000x1000 map I needed 32MB of RAM. Because I was now accessing the second grid by coordinates (X, Y), I didn't need those values in the node anymore. That removed 8 bytes from each of the 1,000,000 nodes, so I saved 8MB of RAM. Every node had a link to the parent node, and those were int (32 bits). Because the grid can never be too big, I replaced them with ushort (16 bits), which saved another 2 bytes per node. This saved me another 2MB. I also realized that the heuristic (H) is calculated for every node on the fly and does not need to be kept in the node afterwards. So, I removed it from the node structure, too. The heuristic was an int (4 bytes), so I saved another 4MB. That left nodes with a minimum size of 13 bytes, but because of memory alignment they took 16 bytes at run-time. I had to set the struct to pack its fields on 1-byte boundaries:

[StructLayout(LayoutKind.Sequential, Pack=1)]

Finally, I got my 13 bytes for every node. For that reason, when running the sample, you will see that running FastPathFinder takes 13MB of extra memory.

- If the grid is 1024x1024 the calculation grid will take 13MB

- If the grid is 512x512 the calculation grid will take 3.25MB

- If the grid is 256x256 the calculation grid will take 832KB
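The effect of `Pack=1` can be checked with a short sketch. The field layout below (F, G, two ushort parent coordinates and a status byte) is an assumption that happens to add up to the 13 bytes mentioned above; the article does not list the actual fields. `Marshal.SizeOf` reports the marshaled size, which for a struct made only of primitive fields like this one is expected to match the in-memory layout.

```csharp
using System;
using System.Runtime.InteropServices;

// Assumed node layout packed on 1-byte boundaries: 4 + 4 + 2 + 2 + 1 = 13 bytes.
[StructLayout(LayoutKind.Sequential, Pack = 1)]
public struct PackedNodeSketch
{
    public int F;        // total cost (G + H)
    public int G;        // cost from the start node
    public ushort PX;    // parent X
    public ushort PY;    // parent Y
    public byte Status;  // open / closed marker
}

// Same fields with default packing: padded up to a multiple of 4 bytes.
[StructLayout(LayoutKind.Sequential)]
public struct PaddedNodeSketch
{
    public int F;
    public int G;
    public ushort PX;
    public ushort PY;
    public byte Status;
}

public static class NodeSizeSketch
{
    public static int PackedSize => Marshal.SizeOf<PackedNodeSketch>();
    public static int PaddedSize => Marshal.SizeOf<PaddedNodeSketch>();

    // Bytes needed for a calculation grid of packed nodes.
    public static long GridBytes(int width, int height)
    {
        return (long)width * height * PackedSize;
    }
}
```

With this layout, a 1024x1024 grid needs 1024 * 1024 * 13 = 13,631,488 bytes, which matches the 13MB figure in the list above.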

I have to admit that it took me a fair amount of time to make it work because of the complexity of debugging A*. I got my satisfaction when it worked, though. I could not believe that it ran at least 300 times faster for simple grids and more than 1500 times faster for complex grids. Still, I was inserting and removing nodes from the priority queue, which meant memory work and garbage collection coming into play. So, I figured out a way to avoid keeping the nodes inside the priority queue: instead of pushing and popping the node, I pushed and popped the address of the node in the calculation grid. That let me save memory and run faster. To store coordinates in the priority queue, I translated an (X, Y) coordinate into a linear coordinate:

Location = Y * grid width + X;

The problem arising there was that I had to translate back and forth between a linear coordinate and a map coordinate. So, I changed my calculation grid from a fixed map array:

PathFinderNode[,]

To a linear array:

PathFinderNode[]

That made the code a lot clearer because there was no translation between map and linear coordinates. I also added a constraint to the size of the grid: the width and height of the map must be powers of 2. That allowed the use of bit shifts and bitwise operations, which are faster than multiplication and division. Where before it was:

LinearLocation = LocationY * Width + LocationX;

…

LocationY = LinearLocation / Width;

LocationX = LinearLocation - LocationY * Width;

It now became:

LinearLocation = (LocationY << Log(Width, 2)) | LocationX;

…

LocationX = LinearLocation & (Width - 1);

LocationY = LinearLocation >> Log(Width, 2);
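A small sketch of this mapping, assuming a hypothetical `LinearGridSketch` class. The shift and the mask are computed once from the width, and the X coordinate is recovered with the mask `Width - 1`, which keeps only the low bits that hold X.

```csharp
using System;

public sealed class LinearGridSketch
{
    private readonly int shift; // log2(width)
    private readonly int mask;  // width - 1, all low bits set

    public LinearGridSketch(int width)
    {
        // A power of 2 has exactly one bit set.
        if (width <= 0 || (width & (width - 1)) != 0)
            throw new ArgumentException("Width must be a power of 2.", nameof(width));

        int s = 0;
        while ((1 << s) < width)
            s++;

        shift = s;
        mask = width - 1;
    }

    // (X, Y) -> index into the linear calculation grid.
    public int ToLinear(int x, int y)
    {
        return (y << shift) | x;
    }

    // Index -> X: keep only the low bits.
    public int ToX(int linear)
    {
        return linear & mask;
    }

    // Index -> Y: drop the low bits.
    public int ToY(int linear)
    {
        return linear >> shift;
    }
}
```

For a width of 1024, the point (5, 3) maps to 3 * 1024 + 5 = 3077, and decoding 3077 gives back X = 5 and Y = 3.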

After all this, where the standard PathFinder algorithm resolved the path for the map HardToGet.astar in 131 seconds, the optimized PathFinder got the same result in 100 milliseconds. It was a 1300-times improvement.

Another optimization concerned nodes that are already in the open list. In the standard algorithm, if the current node was already in the open list and its new total cost was less than the one stored in the list, the old node would be removed or replaced. Instead, I decided to leave the old open node in the open list and just add the new one. The old node has a higher cost, so it is processed later. By the time it is processed, the node is already closed and so it is ignored. This is a lot faster than going to the open list and removing the old open node.
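The "leave the old entry in place" idea can be sketched in isolation. This example uses the `PriorityQueue<TElement, TPriority>` class from .NET 6 and a `HashSet<int>` for the closed state, both swapped in for brevity; the article's own code uses a priority queue derived from BenDi's and marks closed nodes in the calculation grid.

```csharp
using System;
using System.Collections.Generic;

public static class LazyOpenListSketch
{
    // Pushes every (node, cost) pair, duplicates included, then pops
    // them in cost order and returns the order in which nodes are closed.
    public static List<int> CloseOrder(IEnumerable<(int node, int cost)> pushes)
    {
        var open = new PriorityQueue<int, int>();
        var closed = new HashSet<int>();
        var order = new List<int>();

        foreach (var (id, cost) in pushes)
            open.Enqueue(id, cost); // never search for or remove an old entry

        while (open.TryDequeue(out int current, out _))
        {
            // A node that is already closed was reached earlier through a
            // cheaper entry, so this more expensive duplicate is ignored.
            if (!closed.Add(current))
                continue;

            order.Add(current);
        }

        return order;
    }
}
```

For example, pushing node 7 with cost 10, node 8 with cost 5 and then node 7 again with cost 3 closes node 7 first, then node 8, and silently drops the stale cost-10 entry when it finally reaches the front of the queue.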

Another optimization arose from the fact that, between path finding calls, I had to clear the calculation grid. The calculation grid stores objects of type PathFinderNode and every object has a field Status. This field tells if the node is open, closed or neither. Status could be 0 = not processed, 1 = open or 2 = closed. Zeroing the grid usually took about 30 milliseconds for a 1024x1024 grid and that had to be done between path finding calls. To avoid this, instead of zeroing the grid, I change the values that represent the node status: between calls, the open and closed values are each incremented by 2. So in the first path finding search, we have:

- 1 = open

- 2 = closed

- X = not searched

In the second path finding search we have:

- 3 = open

- 4 = closed

- X = not searched

And so on…
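The scheme above can be sketched with a hypothetical `StatusGridSketch` class. The wrap-around handling in `NextSearch` is an assumption added here for safety, since a byte can only hold values up to 255; the article does not describe how its code deals with that case.

```csharp
using System;

public sealed class StatusGridSketch
{
    private readonly byte[] status;
    private byte openValue = 1;
    private byte closedValue = 2;

    public StatusGridSketch(int cellCount)
    {
        status = new byte[cellCount];
    }

    // Called between searches instead of zeroing the whole grid.
    public void NextSearch()
    {
        if (closedValue >= 254)
        {
            // Assumed safeguard: the values would overflow a byte,
            // so clear once and start over.
            Array.Clear(status, 0, status.Length);
            openValue = 1;
            closedValue = 2;
        }
        else
        {
            openValue += 2;
            closedValue += 2;
        }
    }

    public void MarkOpen(int index) { status[index] = openValue; }
    public void MarkClosed(int index) { status[index] = closedValue; }

    // Any value other than the current pair means "not searched".
    public bool IsOpen(int index) { return status[index] == openValue; }
    public bool IsClosed(int index) { return status[index] == closedValue; }
}
```

After `NextSearch`, cells marked during the previous search still hold the old values, so they read as neither open nor closed without any cell being touched.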

In this way, between path finding calls, it doesn't need to clear the calculation grid. It just uses different values for open and closed nodes; any other value means the node has not been searched.

Another small optimization was to promote all local variables to member variables. This allocates all of them once, together with the PathFinder object, instead of creating and destroying them on the stack for every pathfinder call.

I also tried changing my cost (G) and total cost (F) from int to float in order to obtain more detailed calculations of the total cost. The path produced for complex maps was 99% similar to the one produced when int was used for the cost and total cost. I noticed, however, that although the float arithmetic itself was not much slower than int, the number of nodes re-evaluated was huge. With int, the discarded decimals made the algorithm skip those nodes; with float, the nodes kept their decimal values and were re-evaluated, which made the algorithm roughly 10 times slower. The difference in the resulting path was not appreciable, so I kept using int and discarding the decimals in the cost calculation.

In the optimization journey, I basically replaced almost all memory allocation and sequential searches with fixed allocations and minimal searching. It was analogous to replacing all the moving parts in a machine with fixed ones. Still, there are more optimizations that can be done and I'll probably keep working on those. If you have some feedback about the optimization or what can be done to improve it, feel free to add a comment and I'll reply as soon as I can.

Toolbar

The toolbar allows us to load, create and save test scenarios. It also allows us to insert nodes (obstacles) with different costs into the grid. By default, all nodes in the grid have a cost of "1," but this can be changed to another cost. The "X" in the toolbar is cost "0" and represents an impassable node. The "start" and "end" buttons let us put the start and end positions inside the grid before running the algorithm. The costs of the nodes are not limited to the costs defined in the toolbar. The mouse buttons can change the current cost of the nodes, too. While moving the mouse and pressing the:

Left Button --> The current node is set to the cost specified by the active cost button in the toolbar.

Right Button --> The current node cost is incremented with the cost specified by the active cost button in the toolbar.

Left & Right Buttons --> The current node cost is decremented with the cost specified by the active button in the toolbar.

Front-end

When I did the A* implementation, it took me a long time to find the best parameters for my real project. Because of that, I decided to create a front-end independent of the real application to help me find those values. The only reason for the front-end was testing and debugging, so the front-end currently has a poor design. There are a lot of things that could be done to make it better and run with better performance, but the objective of this article is to learn about the A* implementation and not GDI optimization. For that reason, I did not spend too much time on it.

Some time ago, I saw a pretty cool application that implements A*, but I can't remember the name. It was made in Delphi, but no source code was available. I took the main idea from there to create this front-end. I didn't want to make a clone of that application; rather, I thought it looked really interesting and that it could be nice to create something similar. The algorithm and its helper classes live in a separate namespace, the "Algorithms" namespace.

As a note, the real-time rendering of the nodes being searched is not persistent. Basically, the nodes are drawn directly in the window HDC (Device Context). Therefore, if a portion of the window is invalidated, it won't be redrawn. If you move another window over the front-end, the current path will disappear. That can be resolved by using a second grid and keeping the status of every node, but again, the front-end was made just to debug the algorithm and see how the settings affect the path search.

You will probably notice that setting the speed to maximum (minimum value) still doesn't look like good performance. That's not because of the algorithm; that's because the algorithm is doing a lot of callbacks (events) to the front-end to allow the rendering. The first line inside PathFinder.cs is a #define:

#define DEBUGON

If this symbol is defined, then the algorithm will evaluate if the debugging events are defined. If they are, the algorithm will make a callback (event) for every step. When the application is used on a real project, it is strongly recommended that you remove that symbol from PathFinder.cs. Removing the symbol will hugely increase the performance of the algorithm. If the symbol is not defined, then the algorithm won't make any callback and will return just after it has found a path or a path was not found at all.

Conclusion

This application and code can help developers, from beginner to advanced, explore the A* algorithm step by step. Feel free to make any comments or recommendations.

History

- 24 May, 2007 - Article edited and posted to the main CodeProject.com article base

- 29 September, 2006 - First author update

- 24 August, 2006 - Original version posted