Introduction

In some cases, we need to use mathematical tools and equations to solve our real-world problems. Unfortunately, there are many cases when we cannot use the simple pen-and-paper techniques we learned in high school (yes, they lied to us in high school!) to solve our problem and we need to rely on numeric methods. For example, the following simple problem cannot be solved using pen and paper:

e^(-x) + x - 2 = 0

Many numeric methods rely on the ability of their user to compute gradients (partial derivatives) of functions. This is a tedious task that can usually be done automatically by a computer, and we will demonstrate the AutoDiff library achieves this objective. It allows the developer to concentrate on her business logic - modelling the problem she is trying to solve, and saves the time wasted on manually developing the gradients.

Background

Automatic Differentiation (AD) is a very old technique and may refer to quite different things. But all have one thing in common - all methods are given some representation of a function and allow computing the gradient at any given point. More information on various AD techniques can be found here.

Unfortunately many people are not aware of it and worked very hard to efficiently compute derivatives in order to develop gradient-based algorithms. One very well known example is the back-propagation algorithm for neural networks. When using AD, there is absolutely no need to develop a learning algorithm. Any AD library can efficiently compute the gradient for you, and you just need to use its results to update the weights.

The AutoDiff library described in this article takes the operator overloading approach. Expressions (or terms) are represents using objects. Operator overloading allows creating new terms from existing ones. Once a term is defined, the library allows to efficiently compute its value or gradient for a given assignment for the variables.

Using the Code

A Quick Introduction

After you have downloaded the library, you can create a C# project, add a reference and start playing! First, let's give a quick "hello world" introduction. Here is a small program that defines a function and evaluates its value and gradient.

using System;

using AutoDiff;

class Program

{

static void Main(string[] args)

{

Variable x = new Variable();

Variable y = new Variable();

Term func = (x + y) * TermBuilder.Exp(x - y);

Variable[] vars = { x, y };

double[] point = { 1, -2 };

double eval = func.Evaluate(vars, point);

double[] gradient = func.Differentiate(vars, point);

Console.WriteLine("f(1, -2) = " + eval);

Console.WriteLine("Gradient of f at (1, -2) =

({0}, {1})", gradient[0], gradient[1]);

}

}

Here is the output:

f(1, -2) = -20.0855369231877

Gradient of f at (1, -2) = (0, 40.1710738463753)

Here are some key points to note:

- Variables are objects of class

Variable. - More generally, we build our functions using objects of the base class

Term. Variables are terms too. - We create new terms from existing ones using operator overloading and the

TermBuilder class. See the definition of func in the snippet above. - The extension methods

Evaluate and Differentiate are using to compute the value and the gradient, respectively, of a function defined by a given term.

AutoDiff on Steroids

The functions Evaluate and Differentiate are similar to running interpreted code. They work, but they are slow. We can speed up the computation by "compiling" our function and use the compiled version to efficiently evaluate or differentiate functions. Compiling is beneficial when we need to compute gradients of the same function multiple times. Fortunately, this is exactly what happens in most numeric algorithms. Here is another program that does exactly the same, but compiles the function first.

using System;

using AutoDiff;

class Program

{

static void Main(string[] args)

{

Variable x = new Variable();

Variable y = new Variable();

Term func = (x + y) * TermBuilder.Exp(x - y);

ICompiledTerm compiledFunc = func.Compile(x, y);

Tuple<double[], double> gradientAndValue =

compiledFunc.Differentiate(1, -2);

double eval = gradientAndValue.Item2;

double[] gradient = gradientAndValue.Item1;

Console.WriteLine("f(1, -2) = " + eval);

Console.WriteLine("Gradient of f at

(1, -2) = ({0}, {1})", gradient[0], gradient[1]);

}

}

There is one small difference between normal an compiled terms. Their Differentiate method returns a tuple that contains both the value and the gradient. There are two good reasons:

- Computing the gradient of the compiled form also evaluates the function too. So why not return the result?

- Most numeric algorithms require both the value and the gradient at a given point. So it just saves an additional call to

Evaluate.

Equation Solving Sample

Let us create a program that solves the equation given above:

e^(-x) + x - 2 = 0

We will use the well-known Newton-Raphson method of equation solving at our disposal. We will refer to it as the NR method. First, let's create a small method that given a function f, solves the equation f(x) = 0. Our function will be given as a compiled term. The NR method requires an initial value x0, that is close enough to the real solution and also a stopping criterion. In the following example, we will use the number of iterations as the stopping criterion.

static double NewtonRaphson(ICompiledTerm function, double x0, int iterationsCount = 10)

{

double x = x0;

for(int i = 0; i < iterationsCount; ++i)

{

var valueAndGradient = function.Differentiate(x);

var fx = valueAndGradient.Item2;

var dfx = valueAndGradient.Item1[0];

x = x - fx / dfx;

}

return x;

}

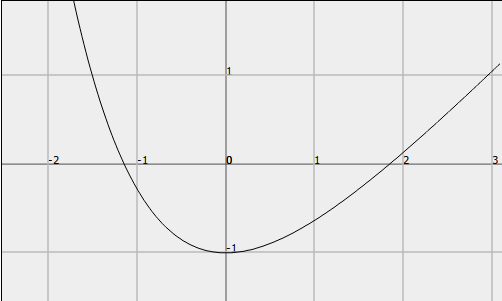

And now we can use this method to solve our equation. First, let us plot the graph of f(x) = e^(-x) + x - 2:

We can see that there are two solutions to our equation - one near x = -1, and another one near x = 2. So, we can write a small problem to solve our equation.

static void Main(string[] args)

{

var x = new Variable();

var func = TermBuilder.Exp(-x) + x - 2;

var compiledFunc = func.Compile(x);

var solution1 = NewtonRaphson(compiledFunc, -1);

var solution2 = NewtonRaphson(compiledFunc, 2);

Console.WriteLine("X1 = {0}, X2 = {1}", solution1, solution2);

}

And here is the output. You can use your favorite calculator to verify the correctness of the solutions:

X1 = -1.14619322062058, X2 = 1.84140566043696

More Information

The library was originally written to be used in numeric optimization algorithms. Most such algorithms rely on the user's ability to provide the function's value and its gradient at any given point. The variety of algorithms is huge (BFGS, L-BFGS, Conjugate Gradient, Gradient Descent) but they all need gradients. It was successfully used in my thesis research project and provided excellent results.

It was created to allow developers to concentrate on modelling the target functions and constraints that solve their real world problems, freeing them from the need to perform tedious mathematical tasks. Using AD in conjunction with an optimization library developers can experiment with many different functions quickly and easily. This library brings this ability to the .NET platform.

You can download the library and play with it for your research projects or real applications and are welcome to leave feedback. We need the feedback!

Some Algorithm Details

The library works using the Reverse-Accumulation algorithm. This algorithm allows computing function gradients in linear time in the size of our function. To be more precise, we can define our function with a DAG (= directed acyclic graph) that describes how it is computed. It's similar to compiler syntax tree. The terminal nodes are variables and constants, and mathematical operators are the internal nodes. It is very similar to .NET expression trees used for LINQ. The algorithm computes the gradient in linear time with respect to the size of this graph (= number of nodes + number of edges).

Surprisingly, linear time gradient computation is actually faster than approximating the gradient numerically. When we approximate, we need to evaluate our function once for each variable (by shifting its value a bit, and then evaluating the function). This means that approximation of the gradient runs in quadratic time - linear time for each evaluations, and we have n such evaluations. You get free lunch - both the accuracy of actually computing the gradient and the speed of the Reverse-Accumulation algorithm.

Unfortunately, Reverse Accumulation is poorly suited for single-variable functions. The NR sample you saw before is not a good usage for this library and a Forward-Mode AD library is a better alternative. However the NR sample is simple enough for this article and therefore was chosen. The real performance and ease of use benefit for this library is for multivariate optimization algorithms.

History

- 8th April, 2011: Initial version

This member has not yet provided a Biography. Assume it's interesting and varied, and probably something to do with programming.

General

General  News

News  Suggestion

Suggestion  Question

Question  Bug

Bug  Answer

Answer  Joke

Joke  Praise

Praise  Rant

Rant  Admin

Admin