Introduction

Strictly speaking, implementing transactions in WCF is easy: you use attributes to control the service and operation behaviors, and you are done!

If that is the case, then why is the scroll bar so tiny? Because, just like almost everything else in programming, you need to understand the architecture, what lies underneath whatever seems to be an easy task. Only then can you architect (design) solutions rather than merely code them…

WCF transactions are built from the ground up on .NET transactions. This is hardly surprising, since WCF is a .NET technology, but it has a clear consequence: you need to understand how .NET does transactions in order to use them in WCF.

What is Covered?

At this point, you might be tempted to think: “OK, I know about the TransactionScope class and I have used it before; what else could I be missing about .NET transactions?” My answer: a lot, if that is really where your knowledge stops. In that case, please keep reading.

This article is targeted at developers who want to truly understand transactions and how they are implemented in .NET in general and in WCF in particular.

The Basics

This section lays down the foundations of .NET transactions. Grasping the information in this section is crucial, so let’s start.

Resource Managers

A Resource Manager (RM) is a system, product, or any component that manages data participating in transactions. An RM can store changes to its resources and either commit or roll back these changes depending on the outcome of the transaction. For example, a DBMS is an RM, and so is a message queuing service. To name a few specific ones, SQL Server 2005, Oracle, and MSMQ are all RMs.

RMs come in two flavors:

- Durable

- Volatile

Durable RMs can have transactions that survive system outages such as machine restarts. For example, SQL Server 2005 is a durable RM because it persists the state of a transaction to its log; if the machine restarts, then once it is up again, the transaction picks up from where it stopped.

Volatile RMs can only have in-memory transactions. They cannot survive system outages. For example, we can use the System.Transactions namespace introduced in .NET Framework 2.0 to implement in-memory RMs to manage types such as integers, strings, and even collections.
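To make the idea of a volatile RM concrete, here is a minimal sketch of an in-memory int that enlists in the ambient transaction through the System.Transactions IEnlistmentNotification interface. It is not the VRM library used later in this article; the class name VolatileInt is hypothetical, and the sketch handles one transaction at a time and is not thread-safe.

```csharp
using System;
using System.Transactions;

// Hypothetical volatile RM: an int whose changes are undone if the
// ambient transaction aborts. One transaction at a time; not thread-safe.
public sealed class VolatileInt : IEnlistmentNotification
{
    private int _value;
    private int _backup;              // value before the first write in the transaction
    private Transaction _enlistedIn;  // transaction we are currently enlisted in, if any

    public VolatileInt(int initial)
    {
        _value = initial;
    }

    public int Value
    {
        get { return _value; }
        set
        {
            Transaction current = Transaction.Current;
            if (current != null && _enlistedIn == null)
            {
                // First write inside a transaction: remember the old value and enlist.
                _backup = _value;
                _enlistedIn = current;
                current.EnlistVolatile(this, EnlistmentOptions.None);
            }
            _value = value;
        }
    }

    // Phase 1: an in-memory value can always be kept, so vote to commit.
    public void Prepare(PreparingEnlistment preparingEnlistment)
    {
        preparingEnlistment.Prepared();
    }

    // Phase 2 (commit): the new value is already in place.
    public void Commit(Enlistment enlistment)
    {
        _enlistedIn = null;
        enlistment.Done();
    }

    // Phase 2 (abort): restore the value seen before the transaction.
    public void Rollback(Enlistment enlistment)
    {
        _value = _backup;
        _enlistedIn = null;
        enlistment.Done();
    }

    public void InDoubt(Enlistment enlistment)
    {
        _enlistedIn = null;
        enlistment.Done();
    }
}
```

Nothing here survives a process restart, which is exactly what makes it volatile: a durable RM would also have to write its prepared state to stable storage before voting to commit.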

Transaction types

There are two transaction types: Atomic and Long Running (business transactions).

Atomic transactions usually take little time to finish. A typical example would be updating related tables in a database; you want all operations to complete or fail as a transaction. Atomic transactions are the ones that this article addresses.

Long Running – also called Business – transactions take a long time to complete. A typical example would be sending a request to a Web Service and expecting the reply over a course of days or even months. Obviously, you do not want to lock down resources for all that time, so this is not a case to be handled by Atomic transactions. Instead, a technique known as Compensation is used to manually (i.e., manual programming) detect business problems (such as detecting that the sent message has an incorrect customer ID) and “compensate” by a corresponding business action (such as sending a cancellation message with the same customer ID).
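Compensation is ordinary application code rather than a System.Transactions feature. A minimal sketch of the idea, using a hypothetical CompensationLog class: every completed step registers the business action that undoes it, and a later business failure runs those actions in reverse order, without any locks being held between steps.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical compensation log: records an "undo" business action for each
// completed step and runs them most-recent-first when compensation is needed.
public sealed class CompensationLog
{
    private readonly Stack<Action> _compensations = new Stack<Action>();

    // Runs a step and, only if it succeeds, records how to compensate for it.
    public void Do(Action step, Action compensate)
    {
        step();
        _compensations.Push(compensate);
    }

    // Runs the recorded compensations in reverse order of the steps.
    public void Compensate()
    {
        while (_compensations.Count > 0)
        {
            Action undo = _compensations.Pop();
            undo();
        }
    }
}
```

In a real long-running process the log would have to be persisted, since the compensations may run days later, possibly after a restart; this sketch keeps it in memory for brevity.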


Quick test: Consider a scenario where a Web Service (a WCF service, for example) accepts requests from clients to be enlisted as potential customers. The service has to update its database with the name of the client that sent the request; the client, once it is guaranteed that the call succeeded, updates its own database with the service name as a potential service provider (contrived, but it suffices!). So, is this an Atomic or a Long Running transaction? The answer is Atomic: even though the operation involves calling a service on another machine, and possibly another environment, the scenario indicates that the operation will take only seconds to complete. Moreover, both RMs in the scenario are databases, which can participate in transactions. Since the operation takes only seconds, locking down resources in the RMs for that long costs little.


Quick test: When would the above scenario be considered a Long Running transaction? Easy: say the service has to send the client request to a certain employee for review before authorizing it. This could clearly take hours or days, so it makes no sense to hold an Atomic transaction open and lock down the RM resources all that time. Instead, such cases are handled by compensation code…


Transaction Protocols

Transaction protocols dictate the type and scope of communication between the applications participating in a transaction; which protocol applies depends on the scenario. The following transaction protocols are available:

- Lightweight Protocol: this protocol is used when a single application inside a single AppDomain works with at most one durable RM and/or multiple volatile RMs. Calls across AppDomain boundaries are not allowed, so, logically, client-service calls are not allowed either.

- OleTx Protocol: this protocol allows calls across AppDomains and even across machines, and it allows multiple durable RMs. All this, however, happens within the Windows environment through Remote Procedure Calls (RPCs). As such, it cannot pass through firewalls, and it does not support cross-platform communication. This protocol is typically used in Windows intranet scenarios.

- WSAT Protocol: the WS-AtomicTransaction protocol is one of the Web Service industry standards. It is similar in capabilities to the OleTx protocol, with one major difference: it can go across firewalls and supports interoperable (cross-platform) communication, which makes it ideal for internet scenarios.

I know the above section might look a little dull, so let’s spice it up with some Quick Tests. Which transaction protocol would each of the following scenarios use?


Test 1: An application calls into SQL Server 2005 for multiple updates which are all enlisted in a single transaction.

Answer: Lightweight protocol since the application is calling into a single durable RM within the same application domain.


Test 2: A WCF service calls into SQL Server 2005 for multiple updates which are all enlisted in a single transaction. The service will be called from some clients on the same machine and some clients on other Windows machines. These clients will participate in the transaction along with the service.

Answer: OleTx, since the WCF service will be called from clients that, logically, run in different application domains. Although the service itself fulfills the Lightweight protocol conditions (it executes in a single AppDomain and calls into a single durable RM), the fact that it is called from clients in other AppDomains that participate in the transaction makes this a case for the OleTx protocol.

The fact some clients are on the same machine and others are on other Windows machines does not matter here because the OleTx protocol covers both cases.


Test 3: Same scenario as Test 2 with one big difference: some clients are Java based and they will call into the service and participate in the transaction.

Answer: WSAT protocol since this is a clear case of cross platform interoperability.


Test 4: An application calls into SQL Server 2005 and Oracle for multiple updates within a transaction. The application executes within its own app domain.

Answer: OleTx protocol. Although the application executes in a single AppDomain, it calls into two durable RMs, which rules out the Lightweight protocol.
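The four answers follow a small set of rules of thumb, which can be written down as a hypothetical helper. ProtocolRules.Pick is illustrative only and simplified to the factors used in these tests; .NET selects the protocol for you, as the next section explains.

```csharp
using System;

public enum TxProtocol { Lightweight, OleTx, WSAT }

// Hypothetical helper encoding the rules of thumb from the Quick Tests.
public static class ProtocolRules
{
    public static TxProtocol Pick(int durableRmCount, bool crossesAppDomain, bool nonWindowsParticipant)
    {
        // Cross-platform participants need the interoperable protocol.
        if (nonWindowsParticipant) return TxProtocol.WSAT;

        // Crossing AppDomains/machines, or more than one durable RM: Windows RPC-based OleTx.
        if (crossesAppDomain || durableRmCount > 1) return TxProtocol.OleTx;

        // One AppDomain, at most one durable RM (plus any number of volatile RMs).
        return TxProtocol.Lightweight;
    }
}
```

For example, Test 4 corresponds to Pick(2, false, false), which yields OleTx, while Test 3 corresponds to Pick(1, true, true), which yields WSAT.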


Transaction Managers

Now that we have discussed transaction protocols, we need to discuss how transactions are implemented in practice. After all, a protocol defines communication and boundary rules, but how do we manage these transactions, and how do we specify which protocol to use? Thankfully, we don’t! Transaction managers do exactly that for us.

There are three transaction managers:

- Lightweight Transaction Manager (LTM): introduced in .NET Framework 2.0, it uses the Lightweight Protocol; that is, it manages all transactions that use the Lightweight Protocol.

- Kernel Transaction Manager (KTM): introduced in Windows Vista and Windows Server 2008. It also uses the Lightweight Protocol, but can additionally work with the transactional file system (TxF) and the transactional registry (TxR) on Vista and Windows Server 2008.

- Distributed Transaction Coordinator (DTC): familiar to most developers, this manager can manage transactions across process and machine boundaries. As such, it uses either the OleTx Protocol or the WSAT Protocol.

Now, in case this topic is new to you, it is only logical that a question similar to the following has popped up into your head: “I feel more secure now that I know about the different types of Transaction Managers and the purpose of each; however, how do I instruct my code to use the appropriate manager and how do I change the manager when the scope of my code differs?”

The answer would be: do not worry; this is handled automatically for you. The System.Transactions namespace in .NET Framework 2.0 has a capability called Promotion which automatically selects the appropriate transaction manager. Let’s consider a scenario and track how Promotion happens.

- You have an application that opens a connection to SQL Server 2005 for multiple updates within a TransactionScope (from the System.Transactions namespace). Initially, the LTM manages the transaction, since this is a matching Lightweight Protocol scenario (if this is not clear, re-read the above sections).

- Now, your code tries to open another connection to the same or different SQL Server 2005 instance. This is a case of multiple durable RMs. As such, Promotion kicks in and automatically promotes to DTC which will be using the OleTx protocol in this case.

- Now, say your application calls a Java service and propagates its transaction to it. The transaction manager in use will still be the DTC, but this time using the WSAT protocol.
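You can observe which manager is in charge from code. The sketch below assumes only the public System.Transactions API: it subscribes to the static TransactionManager.DistributedTransactionStarted event and also checks DistributedIdentifier, which stays Guid.Empty while the LTM manages the transaction. The names NoOpParticipant and PromotionProbe are hypothetical.

```csharp
using System;
using System.Transactions;

// Hypothetical volatile participant that always votes to commit.
public sealed class NoOpParticipant : IEnlistmentNotification
{
    public void Prepare(PreparingEnlistment preparingEnlistment) { preparingEnlistment.Prepared(); }
    public void Commit(Enlistment enlistment) { enlistment.Done(); }
    public void Rollback(Enlistment enlistment) { enlistment.Done(); }
    public void InDoubt(Enlistment enlistment) { enlistment.Done(); }
}

public static class PromotionProbe
{
    // Runs 'work' inside a new TransactionScope and reports whether the
    // transaction was promoted from the LTM to a distributed transaction.
    public static bool RunAndDetectPromotion(Action<Transaction> work)
    {
        bool promoted = false;
        TransactionStartedEventHandler onDistributed = (sender, args) => promoted = true;
        TransactionManager.DistributedTransactionStarted += onDistributed;
        try
        {
            using (TransactionScope scope = new TransactionScope())
            {
                work(Transaction.Current);

                // DistributedIdentifier stays Guid.Empty until promotion.
                if (Transaction.Current.TransactionInformation.DistributedIdentifier != Guid.Empty)
                {
                    promoted = true;
                }
                scope.Complete();
            }
        }
        finally
        {
            TransactionManager.DistributedTransactionStarted -= onDistributed;
        }
        return promoted;
    }
}
```

Enlisting any number of NoOpParticipant instances through EnlistVolatile inside work should leave the result false, since the LTM handles volatile RMs on its own. Opening two SqlConnections to SQL Server 2005 inside work would be expected to return true instead, provided MSDTC is running. Because the event is static, a promotion on another thread of a busy process would also set the flag.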

More about RMs and Transaction Managers

As previously explained, RMs come in two flavors: durable and volatile. It was also stated that LTM is used to manage transactions over a single durable RM such as SQL Server 2005.

While this is true, it is worth mentioning that not all durable RMs support the LTM, even for single-instance operations. For example, suppose an application sends multiple messages to an MSMQ queue inside a TransactionScope. Even though this scenario looks similar to the SQL Server 2005 case, and even though MSMQ is a durable RM, the LTM won’t manage the transaction; the DTC will. The reason is that MSMQ does not support the LTM. Another durable RM that does not support the LTM is SQL Server 2000.

But why don’t MSMQ and SQL Server 2000 support the LTM? The idea is that an RM must support promotion in order to use the LTM. Think about it: if an RM does not support promotion, it cannot be promoted to the DTC when needed, which would cause problems for obvious reasons. By design, RMs that support promotion implement the IPromotableSinglePhaseNotification interface so that, when promotion is required, the .NET Framework can call their Promote() method (declared on ITransactionPromoter, which IPromotableSinglePhaseNotification extends). MSMQ and SQL Server 2000 do not implement IPromotableSinglePhaseNotification.
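A skeleton of what such an RM implements, as a sketch: PromotableResourceSketch is hypothetical and cannot actually promote (its Promote() throws), but it records the callbacks the LTM makes when the transaction is never promoted.

```csharp
using System;
using System.Collections.Generic;
using System.Transactions;

// Hypothetical promotable RM skeleton; records which callbacks were made.
public sealed class PromotableResourceSketch : IPromotableSinglePhaseNotification
{
    public readonly List<string> Calls = new List<string>();

    // Called by the LTM when the promotable enlistment is accepted.
    public void Initialize()
    {
        Calls.Add("Initialize");
    }

    // Called when the transaction commits while still managed by the LTM:
    // this RM is the only durable participant, so a single phase suffices.
    public void SinglePhaseCommit(SinglePhaseEnlistment singlePhaseEnlistment)
    {
        Calls.Add("SinglePhaseCommit");
        singlePhaseEnlistment.Committed();
    }

    public void Rollback(SinglePhaseEnlistment singlePhaseEnlistment)
    {
        Calls.Add("Rollback");
        singlePhaseEnlistment.Aborted();
    }

    // Declared on ITransactionPromoter. A real RM would create a distributed
    // transaction here and return its propagation token.
    public byte[] Promote()
    {
        Calls.Add("Promote");
        throw new NotSupportedException("This sketch cannot promote.");
    }
}
```

Enlisting it with Transaction.EnlistPromotableSinglePhase and completing the scope should produce Initialize followed by SinglePhaseCommit; Promote() would only be called if the transaction had to escalate. EnlistPromotableSinglePhase returns false when it cannot accept the promotable enlistment (for example, when another durable RM has already enlisted), in which case a real RM would fall back to a regular enlistment.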

2 Phase Commit Protocol

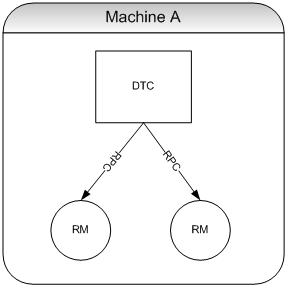

The 2 Phase Commit Protocol (2PC) is the protocol the DTC uses to manage a distributed transaction. This is best explained by an example, so examine the figure above:

In this example, we see a DTC managing a transaction within the boundary of the same machine A. The 2PC protocol works as follows:

- Phase1

- The DTC asks each RM, through an RPC call, to vote to either commit or abort (note: a vote only, no action yet). In the case of SQL Server, for example, this might be a vote on a table update operation.

- Each RM “votes” to either commit if its own operation is successful or to abort if it faced a problem.

- The DTC then collects all votes from all RMs and decides that the transaction is successful if all RMs have voted to commit and failed otherwise.

- Phase2

- The DTC then sends its “command” based on the collected votes to each RM to either commit or abort.

- If the “command” was to commit, then the DTC gets an acknowledgment that each RM has successfully committed.
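System.Transactions exposes the same two phases to every enlisted participant, so the voting can be watched locally without a DTC. In the sketch below, the hypothetical VotingParticipant vote in Prepare (phase 1) and then receive Commit or Rollback (phase 2); here the LTM plays the coordinator's role, whereas with the DTC the same exchange happens across processes.

```csharp
using System;
using System.Collections.Generic;
using System.Transactions;

// Hypothetical volatile participant whose phase-1 vote is fixed up front.
public sealed class VotingParticipant : IEnlistmentNotification
{
    private readonly string _name;
    private readonly bool _canCommit;
    private readonly List<string> _log;

    public VotingParticipant(string name, bool canCommit, List<string> log)
    {
        _name = name;
        _canCommit = canCommit;
        _log = log;
    }

    // Phase 1: vote only, no permanent action yet.
    public void Prepare(PreparingEnlistment preparingEnlistment)
    {
        if (_canCommit)
        {
            _log.Add(_name + ": vote commit");
            preparingEnlistment.Prepared();
        }
        else
        {
            _log.Add(_name + ": vote abort");
            preparingEnlistment.ForceRollback();
        }
    }

    // Phase 2: carry out the coordinator's decision.
    public void Commit(Enlistment enlistment) { _log.Add(_name + ": commit"); enlistment.Done(); }
    public void Rollback(Enlistment enlistment) { _log.Add(_name + ": rollback"); enlistment.Done(); }
    public void InDoubt(Enlistment enlistment) { _log.Add(_name + ": in doubt"); enlistment.Done(); }
}

public static class TwoPhaseDemo
{
    // Runs one transaction with one participant per vote; returns true if it committed.
    public static bool Run(List<string> log, params bool[] votes)
    {
        try
        {
            using (TransactionScope scope = new TransactionScope())
            {
                for (int i = 0; i < votes.Length; i++)
                {
                    Transaction.Current.EnlistVolatile(
                        new VotingParticipant("RM" + (i + 1), votes[i], log),
                        EnlistmentOptions.None);
                }
                scope.Complete();
            } // Dispose drives phase 1 (Prepare) and phase 2 (Commit or Rollback)
            return true;
        }
        catch (TransactionAbortedException)
        {
            return false;   // at least one participant voted to abort
        }
    }
}
```

Run(log, true, true) should return true, while Run(log, true, false) should return false: RM2's abort vote decides the outcome, and no participant ever logs a commit.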

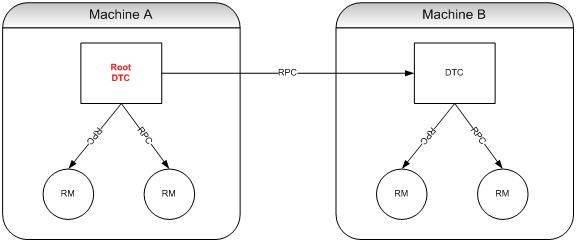

Now, let’s see a second example: what happens if we extend the above scenario to two different machines (for example, a WCF client-service scenario). Examine the image above:

The 2PC protocol works as follows:

- Phase1

- Each DTC on each machine asks the corresponding RMs to vote for commit or abort.

- Each RM votes.

- Each DTC collects its corresponding RMs votes.

- The new step is that the DTC on machine A is now the root DTC, since it is the one that started the transaction. As such, the root DTC collects the votes of all DTCs on other machines (the DTC on machine B, in this case). This is again done through RPC calls, since we are in an all-Windows environment.

- The root DTC then decides on the outcome of the transaction based on the votes it has collected from its own RMs and from the other DTCs (the DTC of machine B).

- Phase2

- The Root DTC sends its command to the participating DTCs.
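The root/subordinate arrangement can be modeled in a few lines. This is an illustrative model only, not the MSDTC API: each hypothetical Coordinator gathers its local RM votes plus the votes of its subordinate coordinators, and only the root decides and pushes the decision back down.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Illustrative model of root and subordinate coordinators (not the MSDTC API).
public sealed class Coordinator
{
    public string Machine { get; }
    public List<Func<bool>> LocalRmVotes { get; } = new List<Func<bool>>();
    public List<Coordinator> Subordinates { get; } = new List<Coordinator>();
    public string Decision { get; private set; } = "none";

    public Coordinator(string machine)
    {
        Machine = machine;
    }

    // Phase 1: local RM votes first, then each subordinate's combined vote.
    // All() stops at the first abort vote; the abort still reaches everyone in phase 2.
    public bool CollectVote()
    {
        return LocalRmVotes.All(vote => vote()) && Subordinates.All(sub => sub.CollectVote());
    }

    // Phase 2: the decision flows from this coordinator to all subordinates.
    public void Deliver(bool commit)
    {
        Decision = commit ? "commit" : "abort";
        foreach (Coordinator sub in Subordinates)
        {
            sub.Deliver(commit);
        }
    }

    // Only the root coordinator (the one that started the transaction) calls this.
    public bool RunAsRoot()
    {
        bool commit = CollectVote();
        Deliver(commit);
        return commit;
    }
}
```

A real coordinator also handles timeouts and must record its decision durably before phase 2 so it can recover after a crash; the model leaves both out.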

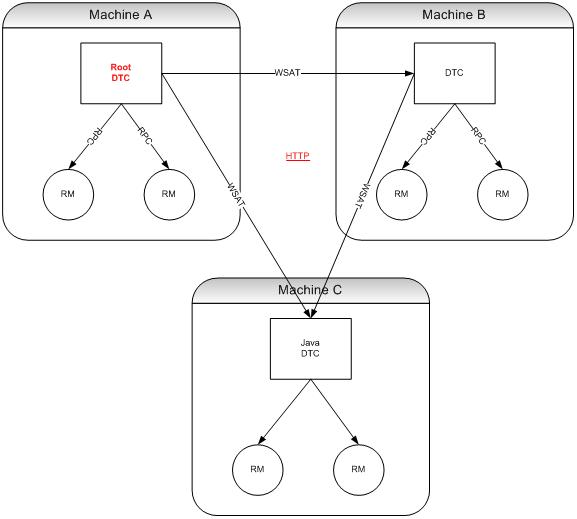

Let’s go one step further: in the previous scenarios, the communication was all-Windows, so the transaction protocol in use was OleTx (again, if this is not clear, read the above sections before you continue). Now, let’s see how the 2PC protocol works when, for example, a Java platform comes into play. Examine the image above:

This is a classic internet scenario where interoperability is a must. HTTP is now used between all machines, and while the 2PC exchange stays pretty much the same, the big difference is that the DTC now uses the WSAT protocol for interoperability.

Machine C uses a Java equivalent of Microsoft DTC, and the calls flying from the root DTC, and from the other DTC in case of multi-party communication, are made through the Web Service standard WS-AtomicTransaction (WSAT).

.NET Transactions

If you are new to the subject, I bet the above was overwhelming. But before you take a break, go through this example, which shows a hands-on approach to how transactions are implemented in .NET.

The example is a Windows application which has these methods: DoDBStuff(), DoMSMQStuff(), and DoVolatileStuff().

DoDBStuff() is a method which opens a single connection to a SQL Server 2005 database and performs multiple operations. This method is shown below:

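```csharp
// Requires: using System.Data.SqlClient;
private void DoDBStuff()
{
    // Sample credentials from the test setup; use only against a test database.
    string connectionString = "Password=sa;Persist Security Info=True;" +
        "User ID=sa;Initial Catalog=TestDB;Data Source=localhost";

    using (SqlConnection cnn = new SqlConnection(connectionString))
    using (SqlCommand cmd = new SqlCommand(
        "insert into testtable values ('test data')", cnn))
    {
        // Opening the connection inside a TransactionScope enlists it
        // in the ambient transaction (Enlist=true is the default).
        cnn.Open();
        cmd.ExecuteNonQuery();   // first insert
        cmd.ExecuteNonQuery();   // second insert on the same connection
    }   // Dispose closes the connection even if an insert throws
}
```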

DoMSMQStuff() sends multiple messages to a transactional private queue called “TestQueue”. This method is shown below:

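```csharp
// Requires: using System.Messaging;
private void DoMSMQStuff()
{
    // ".\Private$\TestQueue" must be an existing transactional private queue.
    using (MessageQueue queue = new MessageQueue(@".\Private$\TestQueue"))
    {
        // Automatic: each send joins the ambient (TransactionScope) transaction.
        queue.Send("Message Body 1", "Message Label",
            MessageQueueTransactionType.Automatic);
        queue.Send("Message Body 2", "Message Label",
            MessageQueueTransactionType.Automatic);
    }
}
```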

Finally, DoVolatileStuff() is a little more interesting. Recall from earlier that the System.Transactions namespace in .NET Framework 2.0 lets us create volatile (in-memory) resources out of .NET types such as integers, strings, and collections. Rather than building one from scratch, I will point you to an excellent library published in MSDN Magazine that provides volatile, transactional versions of .NET types; I use it in my own development whenever I need volatile .NET types. The code can be downloaded from http://download.microsoft.com/download/2/e/9/2e9bde04-3af1-4814-9f1e-733f732369a3/transactions.exe, and the DLL name is “VRM.dll”. The method is shown below:

```csharp
// Transactional<T> comes from the VRM.dll library mentioned above.
Transactional<int[]> numbers = new Transactional<int[]>(new int[3]);

private void DoVolatileStuff()
{
    numbers.Value[0] = 11;
    numbers.Value[1] = 22;
    numbers.Value[2] = 33;
}
```

Now, define a TransactionScope where you will begin testing the code:

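```csharp
// Requires: using System.Transactions; using System.Windows.Forms;
private void button1_Click(object sender, EventArgs e)
{
    // Reset the volatile values before each test run.
    numbers.Value[0] = 1;
    numbers.Value[1] = 2;
    numbers.Value[2] = 3;

    try
    {
        using (TransactionScope scope = new TransactionScope())
        {
            // Call the methods under test here, in any combination:
            // DoDBStuff();
            // DoMSMQStuff();
            // DoVolatileStuff();

            scope.Complete();   // vote to commit; the commit happens on Dispose
        }
    }
    catch (Exception ex)
    {
        // Show the error (e.g. a failed promotion while MSDTC is stopped)
        // instead of silently swallowing it.
        MessageBox.Show(ex.Message);
    }
}
```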

First, start by calling DoDBStuff() inside the scope. Everything should work fine, and you should see two new records in your table. This transaction is managed by the LTM because we are dealing with a single durable RM within the same AppDomain. How can you check this? Easily: disable the DTC on your machine and note that the code still works.

Note: to disable DTC, go to Start -> Programs -> Administrative Tools -> Component Services. Click on Component Services, then click on Computers. Right click My Computer and select Stop MS DTC.

Now, call DoDBStuff() a second time inside the same scope, keeping the DTC disabled. Run the code and you will get an error. The database is unchanged (no records are inserted): even though the first call succeeded, the second one failed and the whole transaction rolled back. Why? This time the code opens a second connection to the database, which the transaction treats as a second durable RM enlistment (with SQL Server 2005, this happens even though the first connection was already closed). That requires promotion to the DTC, which is disabled. Enable the DTC and re-run the code; this time it works.

Now, remove both calls to DoDBStuff(), keep the DTC disabled, and call DoMSMQStuff(). The code will generate an error. Can you remember why? Here we are calling a single durable RM, MSMQ, but MSMQ does not support the LTM because it does not support promotion, so the transaction needs the DTC. Enable the DTC and the code will work again.

Finally, you can check with the volatile RM using the method DoVolatileStuff(). This RM is managed by the LTM. Play with it a little bit and see how values are rolled back in case something wrong happens during the transaction. For example, try the code below and see how in-memory values are rolled back:

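```csharp
// For example, as the body of DoVolatileStuff():
numbers.Value[0] = 11;
// The exception skips scope.Complete(), so the transaction aborts
// and numbers.Value[0] reverts to its previous value.
throw new Exception("custom exception");
numbers.Value[1] = 22;   // unreachable (compiler warning CS0162)
numbers.Value[2] = 33;   // unreachable
```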

You can try any combination you like to check for all cases of when LTM and DTC are used. For example, which transaction manager will be used if you call DoDBStuff() once and DoVolatileStuff() multiple times? You guessed it! It will be LTM because LTM can manage one durable RM and multiple volatile RMs…

WCF Transactions

So far we have done the hard part; hopefully, the concepts are all clear. Next, we turn our attention towards WCF transactions. As we will see, the concepts we have seen are the driving forces behind WCF transactions; after all, WCF is a .NET technology.

Transaction Flow

In the previous paragraphs, I have mentioned many scenarios of clients calling WCF services, with clients and services participating in a single transaction. Well, how do they do that?

The answer is through transaction propagation. Simply put, a WCF service can be configured to join a transaction initiated by a client. Propagation will be explained in detail shortly; for now though, just grasp the concept.

Transaction Flow and Bindings

For a client to be able to propagate its transaction to a WCF service, the binding must support it. Not all bindings do; only the TCP, IPC, and WS bindings support transaction flow.

The IPC (NetNamedPipeBinding) and TCP (NetTcpBinding) bindings support transactions because they connect AppDomains, either on the same machine (IPC) or across machines (TCP). The WS bindings (WSHttpBinding, WSDualHttpBinding, and WSFederationHttpBinding) support transactions because they implement the WS-* specifications, namely WS-AtomicTransaction (WSAT).

Transaction Flow and Transaction Protocols

As you know by now, transaction protocols come in three flavors: Lightweight, OleTx, and WSAT. While what follows will surely be familiar to you if you have followed the article from the start, let’s recap anyway:

When a transaction propagates from a client to a service in a different AppDomain or on a different Windows machine, the protocol in use is OleTx. If different platforms are involved, WSAT is used. The Lightweight protocol is never used for transaction propagation; it applies only within a single AppDomain.

Configuring Bindings for Transaction Flow

Even for the five bindings that support transactions, transaction flow is disabled by default. You have to enable it on both the client and the service, in the configuration file, by setting the “transactionFlow” attribute of the <binding> element to "true".
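The same switch can be flipped in code when bindings are created programmatically. A minimal sketch, assuming a .NET Framework project that references System.ServiceModel; TxBindings is a hypothetical helper name. In the configuration file, the equivalent is a <binding> element with transactionFlow="true" under <netTcpBinding> (or <wsHttpBinding>), referenced from the endpoint's bindingConfiguration attribute.

```csharp
// Requires a reference to System.ServiceModel (.NET Framework 3.0 or later).
using System.ServiceModel;

public static class TxBindings
{
    // Transaction flow is off by default; enable it on the client and the service.
    public static NetTcpBinding CreateTcpBinding()
    {
        return new NetTcpBinding { TransactionFlow = true };
    }

    public static WSHttpBinding CreateWsHttpBinding()
    {
        return new WSHttpBinding { TransactionFlow = true };
    }
}
```

The binding instance would then be passed to ServiceHost.AddServiceEndpoint on the service side and to the generated proxy's constructor on the client side.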

Configuring Transaction Flows for a WCF Service Operation

Transaction flow is configured at the WCF operation level. I will use an example to show how.

Consider the simple service below:

```csharp
[ServiceContract]
public interface ITxService
{
    [OperationContract]
    bool DoTxStuff();
}
```

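In order to configure the DoTxStuff() operation, apply the “TransactionFlow” attribute with one of the values below (all shown commented out; uncomment exactly one):

```csharp
[ServiceContract]
public interface ITxService
{
    [OperationContract]
    //[TransactionFlow(TransactionFlowOption.NotAllowed)]
    //[TransactionFlow(TransactionFlowOption.Allowed)]
    //[TransactionFlow(TransactionFlowOption.Mandatory)]
    bool DoTxStuff();
}
```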

“TransactionFlowOption” can be set to one of the below options:

- NotAllowed: This is the default value. The client cannot propagate its transaction into the service operation. It does not matter whether the binding supports transactions or is configured to allow transaction flow; if the client tries to propagate its transaction, it is simply ignored. No exception is thrown, though.

- Allowed: The operation accepts a propagated transaction if the client wants to send one. That is, if the client has created a transaction, uses a binding that supports transactions, and has enabled transaction flow on that binding, then the transaction is propagated to the service (provided that transaction flow is also enabled at the service). Otherwise, no propagation happens. It is important to note that if the service uses a binding that does not support transactions while the client tries to propagate a transaction, an exception is thrown.

- Mandatory: The client and the service must both use a binding that supports transactions, with transaction flow enabled. Any violation of these conditions results in an exception.

Configuring Transactions for a WCF Service Operation

Now that we have configured transaction flow, it is time to do the actual transaction coding for a WCF operation. Whether a transaction is propagated from a client or the service initializes its own, we need code that starts the transaction and then commits or aborts it.

Continuing with the above example, this is how to configure the operation for transaction support:

```csharp
public class TxService : ITxService
{
    [OperationBehavior(TransactionScopeRequired = true,
        TransactionAutoComplete = true)]
    public bool DoTxStuff()
    {
        // ……
    }
}
```

The “TransactionScopeRequired” property of the “OperationBehavior” attribute is analogous to the “TransactionScope” class we have seen earlier; it wraps the code block with a transaction scope.

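The “TransactionAutoComplete” property instructs WCF to complete the transaction automatically (that is, vote to commit it) when the operation returns without an unhandled exception; if an exception escapes, the transaction is aborted. This is the same as calling the “Complete” method of the “TransactionScope” class, as we did previously.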

It is important to note that the above configuration means the operation will run under a transaction, but it says nothing about where that transaction comes from. If the configuration allows client transaction flow and the client sends a transaction, the client’s transaction is propagated to the operation and used. Otherwise (for example, if transaction flow is not configured), the operation creates its own transaction and uses it.
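To see why the attribute is analogous to TransactionScope, here is a rough, hypothetical analogue in plain System.Transactions; it is not how WCF implements the behavior internally. ScopedOperation.Invoke joins an ambient transaction if one exists (the role a flowed client transaction plays), otherwise creates a new one, and completes the scope only when the body returns normally.

```csharp
using System;
using System.Transactions;

public static class ScopedOperation
{
    // Rough analogue of
    // [OperationBehavior(TransactionScopeRequired = true, TransactionAutoComplete = true)].
    public static T Invoke<T>(Func<T> body)
    {
        // Required: join the ambient transaction if there is one, else create one.
        using (TransactionScope scope = new TransactionScope(TransactionScopeOption.Required))
        {
            T result = body();   // an exception here skips Complete(), so the transaction aborts
            scope.Complete();    // "auto-complete" on normal return
            return result;
        }
    }
}
```

Called inside a caller’s TransactionScope, the body sees the caller’s transaction (same LocalIdentifier); called on its own, it gets a fresh transaction, mirroring the two cases described above.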

Client-Service Transaction Propagation Configuration Modes

Armed with all the knowledge so far, let’s summarize the possibilities when configuring transaction propagation between a client and a service.

Client-Service Propagation

This configuration makes the service use the client transaction if the client has a transaction and propagation is enabled; else the service operation will initialize its own transaction. The steps to configure this mode are as follows:

- Use a binding that supports transactions and set its “transactionFlow” attribute to true on both the client and the service.

- Set “TransactionFlowOption.Allowed” on the operation contract.

- Set the “TransactionScopeRequired” and “TransactionAutoComplete” properties of the “OperationBehavior” attribute.

Client Only

This configuration forces the service to use only the transaction propagated by the client; the service will never initialize its own transaction. The steps to configure this mode are as follows:

- Use a binding that supports transactions and set its “transactionFlow” attribute to true on both the client and the service.

- Set “TransactionFlowOption.Mandatory” on the operation contract.

- Set the “TransactionScopeRequired” and “TransactionAutoComplete” properties of the “OperationBehavior” attribute.

Service Only

This configuration forces the service to initialize its own transaction, regardless of whether the client has a transaction. The steps to configure this mode are as follows:

- You can use any binding. If you use a binding that does not support transactions, nothing else is required. If you use a binding that does support transactions, make sure to set its “transactionFlow” attribute to false.

- Set “TransactionFlowOption.NotAllowed” on the operation contract.

- Set the “TransactionScopeRequired” and “TransactionAutoComplete” properties of the “OperationBehavior” attribute.

No Transaction

This configuration means that the service won’t have a transaction at all. The steps to configure this mode are as follows:

- You can use any binding. If you use a binding that does not support transactions, nothing else is required. If you use a binding that does support transactions, make sure to set its “transactionFlow” attribute to false.

- Set “TransactionFlowOption.NotAllowed” on the operation contract.

- Do not set the “TransactionScopeRequired” property, or set it to false.
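Putting the four modes side by side, here is a sketch of how each looks on a contract and its implementation. IModeExamples and ModeExamples are hypothetical names, and the binding settings listed for each mode still apply.

```csharp
// Requires a reference to System.ServiceModel; all names are hypothetical.
using System.ServiceModel;

[ServiceContract]
public interface IModeExamples
{
    // Client-Service propagation: use the client's transaction if one flows in.
    [OperationContract]
    [TransactionFlow(TransactionFlowOption.Allowed)]
    void ClientOrService();

    // Client only: a flowed client transaction is required.
    [OperationContract]
    [TransactionFlow(TransactionFlowOption.Mandatory)]
    void ClientOnly();

    // Service only: never accept a flowed transaction.
    [OperationContract]
    [TransactionFlow(TransactionFlowOption.NotAllowed)]
    void ServiceOnly();

    // No transaction: NotAllowed is the default, so no attribute is needed.
    [OperationContract]
    void NoTransaction();
}

public class ModeExamples : IModeExamples
{
    [OperationBehavior(TransactionScopeRequired = true, TransactionAutoComplete = true)]
    public void ClientOrService() { }

    [OperationBehavior(TransactionScopeRequired = true, TransactionAutoComplete = true)]
    public void ClientOnly() { }

    [OperationBehavior(TransactionScopeRequired = true, TransactionAutoComplete = true)]
    public void ServiceOnly() { }

    // TransactionScopeRequired defaults to false, so this runs without a transaction.
    public void NoTransaction() { }
}
```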

Local and Distributed Transaction Identifiers

The final concepts we will discuss before showing the sample are local and distributed transaction identifiers.

The local identifier identifies the transaction within the local AppDomain; it is assigned by the LTM and is always available for the current ambient transaction. An ambient transaction is simply the transaction that the code is currently running under. You can get the local identifier as follows:

```csharp
TransactionInformation info =
    Transaction.Current.TransactionInformation;
string localId = info.LocalIdentifier;
```

The distributed identifier is set automatically when a transaction is promoted to the DTC; until then, it is Guid.Empty. You can access it in a similar way:

```csharp
TransactionInformation info =
    Transaction.Current.TransactionInformation;
string distributedId = info.DistributedIdentifier.ToString();
```
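The two identifiers are enough to tell which manager currently owns the ambient transaction. A small sketch, with AmbientTxInfo as a hypothetical helper name, that could be used alongside the Event Log calls in the sample below when experimenting:

```csharp
using System;
using System.Transactions;

public static class AmbientTxInfo
{
    // Describes the ambient transaction, or returns null if there is none.
    public static string Describe()
    {
        Transaction current = Transaction.Current;
        if (current == null)
        {
            return null;   // no ambient transaction
        }

        TransactionInformation info = current.TransactionInformation;
        bool promoted = info.DistributedIdentifier != Guid.Empty;
        return promoted
            ? "DTC, distributed id " + info.DistributedIdentifier + ", local id " + info.LocalIdentifier
            : "LTM, local id " + info.LocalIdentifier;
    }
}
```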

Sample: Client-Service Propagation

The first sample is one where the service and the client are configured for transaction propagation. Recall that in this mode, if the client has a transaction, then it will be propagated, else the service will initialize its own transaction.

The service code is shown below:

```csharp
// Requires: using System.Data.SqlClient; using System.Diagnostics;
//           using System.ServiceModel; using System.Transactions;
[ServiceContract]
public interface ITxService
{
    [OperationContract]
    [TransactionFlow(TransactionFlowOption.Allowed)]
    bool DoTxStuff();
}

public class TxService : ITxService
{
    [OperationBehavior(TransactionScopeRequired = true,
        TransactionAutoComplete = true)]
    public bool DoTxStuff()
    {
        LogTransactionIds("1");   // before any database work
        DoDBStuff();
        LogTransactionIds("2");   // after the first connection
        DoDBStuff();
        LogTransactionIds("3");   // after the second connection
        return true;
    }

    // Writes the ambient transaction's identifiers to the Event Log.
    // As in the original sample, each label doubles as the event source;
    // creating a new event source requires administrative rights.
    private static void LogTransactionIds(string step)
    {
        TransactionInformation info =
            Transaction.Current.TransactionInformation;
        EventLog.WriteEntry("service local id " + step,
            info.LocalIdentifier);
        EventLog.WriteEntry("service distributed id " + step,
            info.DistributedIdentifier.ToString());
    }

    private void DoDBStuff()
    {
        // Sample credentials from the test setup; use only against a test database.
        string connectionString = "Password=sa;Persist Security Info=True;" +
            "User ID=sa;Initial Catalog=TestDB;Data Source=localhost";

        using (SqlConnection cnn = new SqlConnection(connectionString))
        using (SqlCommand cmd = new SqlCommand(
            "insert into testtable values ('test data')", cnn))
        {
            cnn.Open();             // enlists in the ambient transaction
            cmd.ExecuteNonQuery();
            cmd.ExecuteNonQuery();
        }
    }
}
```

The service is configured exactly as described earlier for Client-Service propagation.

Note in this example that I am printing the local and distributed identifiers into the event viewer. We will see why in a moment.

The binding I am using is netTcpBinding with transaction flow enabled.

Now, let’s see the client code:

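```csharp
//using (TransactionScope scope =
//    new TransactionScope(TransactionScopeOption.Required))
{
    TxServiceClient proxy = new TxServiceClient();   // generated proxy
    proxy.DoTxStuff();
    //scope.Complete();
}
```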

For the moment, ignore the commented-out code. The client simply calls the service operation without creating a transaction, so, per our configuration, no transaction is propagated and the service creates its own. But how can we check that?

Run the client application, and then examine the event viewer. You will see the following:

- Service local ID 1 is set; service distributed ID 1 is not set (it is Guid.Empty, which is logged as 00000000-0000-0000-0000-000000000000).

- Reason: since no transaction was propagated, the current transaction is the local one created by the service itself, so the local ID is set while the distributed ID is not.

- Service local ID 2 is set and equal to service local ID 1; service distributed ID 2 is not set.

- Reason: we have made the first database call, which involves a single durable RM, so the LTM is still managing the transaction. The local ID is unchanged, and the distributed ID is still not set.

- Service local ID 3 is set and equal to service local IDs 1 and 2; service distributed ID 3 is set.

- Reason: the second database call opens a new connection, which, as you know by now, promotes the transaction from the LTM to the DTC. As a result, the distributed ID is now set while the local ID stays the same.

That’s cool! Now, let’s do a simple yet meaningful variation: keep the service code as is, and uncomment the client code so that it looks like this:

```csharp
using (TransactionScope scope =
    new TransactionScope(TransactionScopeOption.Required))
{
    TxServiceClient proxy = new TxServiceClient();

    TransactionInformation info =
        Transaction.Current.TransactionInformation;
    System.Diagnostics.EventLog.WriteEntry("client local id 1",
        info.LocalIdentifier);
    System.Diagnostics.EventLog.WriteEntry("client distributed id 1",
        info.DistributedIdentifier.ToString());

    proxy.DoTxStuff();   // the client transaction flows to the service here

    info = Transaction.Current.TransactionInformation;
    System.Diagnostics.EventLog.WriteEntry("client local id 2",
        info.LocalIdentifier);
    System.Diagnostics.EventLog.WriteEntry("client distributed id 2",
        info.DistributedIdentifier.ToString());

    scope.Complete();
}
```

We have simply created a transaction at the client so that it is propagated to the service. Run the client and examine the event viewer:

- Client local ID 1 is set; client distributed ID 1 is not set.

  - Reason: the client has created a transaction, which naturally starts under the LTM. Therefore it has a local ID but no distributed ID.

- Service local ID 1 is set; service distributed ID 1 is set.

  - Reason: unlike the previous run, the distributed ID is set before a single call is made to the database. Why? Because the transaction crossed a boundary, which promoted it from the LTM to the DTC. By the time the request reaches the service, the DTC is managing the transaction, and the transaction has been propagated into the service. As noted before, the local ID is always present for any ambient transaction.

- Service local ID 2 is set and equal to service local ID 1; service distributed ID 2 is set and equal to service distributed ID 1.

  - Reason: the distributed ID stays the same throughout the operation. So does the local ID, because the ambient transaction is the same.

- Service local ID 3 is set and equal to service local ID 2; service distributed ID 3 is set and equal to service distributed ID 2.

  - Reason: same as above.

- Finally, client local ID 2 is set and equal to client local ID 1; client distributed ID 2 is set and equal to the service distributed ID (1, 2, or 3).

  - Reason: we are now back on the client, after the call to the service. The local ID remains the same, which is expected (same ambient transaction). The interesting part is that the distributed ID now has the same value as the service's distributed ID. Because the transaction was propagated from the client to the service, it carries a single distributed ID across all the boundaries that participate in it.
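The rule "same ambient transaction, same local ID" can also be checked in isolation. The sketch below is again a .NET 5+ console program with illustrative names, and it involves no WCF or database. It nests two scopes inside an outer one. A nested scope created with `TransactionScopeOption.Required` joins the ambient transaction and reports the same local ID. A nested scope created with `TransactionScopeOption.RequiresNew` starts a separate transaction with a different one. The sketch cannot show the shared distributed ID, because that only appears once the DTC is involved.

```csharp
using System;
using System.Transactions;

// Returns the local ID of whatever transaction is ambient right now.
static string CurrentLocalId() =>
    Transaction.Current.TransactionInformation.LocalIdentifier;

string outerId;
string joinedId;
string separateId;

using (TransactionScope outer = new TransactionScope(TransactionScopeOption.Required))
{
    outerId = CurrentLocalId();

    // Required: joins the ambient transaction, so the local ID is unchanged.
    using (TransactionScope joined = new TransactionScope(TransactionScopeOption.Required))
    {
        joinedId = CurrentLocalId();
        joined.Complete();
    }

    // RequiresNew: suspends the ambient transaction and starts a new one.
    using (TransactionScope separate = new TransactionScope(TransactionScopeOption.RequiresNew))
    {
        separateId = CurrentLocalId();
        separate.Complete();
    }

    outer.Complete();
}

Console.WriteLine($"outer: {outerId}");
Console.WriteLine($"joined (Required): {joinedId}");
Console.WriteLine($"separate (RequiresNew): {separateId}");
```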

This sample does not cover all possible configuration combinations. However, once you understand it well, you can use the same tests to check the other three possible configurations: Client Only, Service Only, and No Transaction.
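To rerun the tests in another mode, you change where the transaction settings live. The sketch below shows those places, following commonly used mode names. The contract name `ITxService` is hypothetical, inferred from the `TxServiceClient` proxy. The sample's behavior is to join the client's transaction if one flows in and otherwise create its own. That corresponds to `TransactionFlowOption.Allowed` plus `TransactionScopeRequired = true`, with transaction flow enabled on the binding. This is a compile-only fragment that needs a reference to System.ServiceModel.

```csharp
using System.ServiceModel;

[ServiceContract]
public interface ITxService
{
    // This sample's mode: Allowed, so the client's transaction flows in when
    // there is one. Client Only mode uses Mandatory instead; Service Only and
    // No Transaction modes use NotAllowed.
    [OperationContract]
    [TransactionFlow(TransactionFlowOption.Allowed)]
    void DoTxStuff();
}

public class TxService : ITxService
{
    // true in every mode except No Transaction: the operation runs in the
    // flowed transaction, or in a new one if nothing flowed in.
    [OperationBehavior(TransactionScopeRequired = true)]
    public void DoTxStuff()
    {
        // Database work and event log entries, as in the sample service.
    }
}
```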

| Note: Although not related to the sample, updating the service reference did not work after I changed the transaction flow mode. I had to remove and re-add the reference. |

Enabling WSAT

The final topic (I promise) is an interoperable scenario.

The above example was between a .NET client and a .NET WCF service; that is, the transaction protocol in use when crossing app domain boundaries was OleTx. In fact, if you look at the configuration file that SvcUtil generated for the client, you will notice that the transaction protocol in use is OleTx.

What happens, though, if we have, say, a Java participant in the transaction?

Not much, really. This is a well-known virtue of WCF: by design, it turns what were once difficult tasks into a set of configurable items.

Edit the transaction protocol of your service's binding and set it to “WSAtomicTransaction11”. This makes WCF use the interoperable WS-AtomicTransaction protocol, which allows the service to coordinate transactions with other platforms over HTTP. As explained previously, your code does not change, because you do not manage transactions yourself; the transaction managers and WCF do that for you!

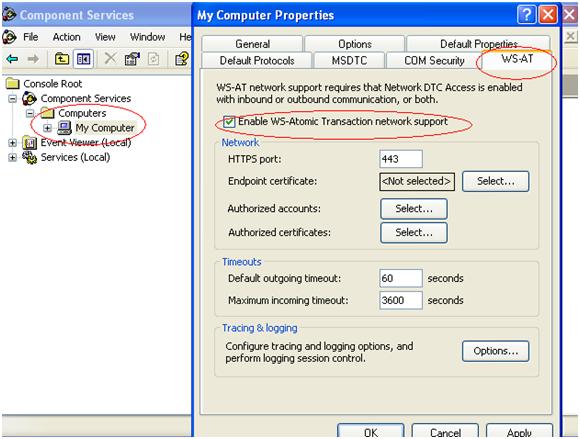
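If you configure the binding in code rather than in the configuration file, the same setting is exposed as binding properties. The sketch below assumes the service is exposed over `NetTcpBinding`, where the transaction protocol is selectable and defaults to OleTransactions. The class and method names are hypothetical. `TransactionProtocol.WSAtomicTransaction11` requires .NET 3.5 or later. This is a compile-only fragment that needs a reference to System.ServiceModel.

```csharp
using System.ServiceModel;

// Hypothetical helper class, for illustration only.
public static class TxBindings
{
    // Returns a binding that flows transactions using WS-AtomicTransaction 1.1
    // instead of NetTcpBinding's default protocol, OleTransactions.
    public static NetTcpBinding CreateWsatBinding()
    {
        NetTcpBinding binding = new NetTcpBinding();
        binding.TransactionFlow = true; // transactions must flow at all
        binding.TransactionProtocol = TransactionProtocol.WSAtomicTransaction11;
        return binding;
    }
}
```

Pass the returned binding to `ServiceHost.AddServiceEndpoint` when adding the service endpoint. The client must use a binding with the same transaction settings.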

To use WSAT, you first need to enable it on your machine. Follow these steps:

- Register the WSAT UI DLL from the VS 2008 command prompt: “regasm.exe /codebase wsatui.dll” (wsatui.dll is found in the .NET 3.0 SDK).

- Install the Windows hotfix that enables WSAT support, available at this URL: http://www.microsoft.com/downloads/details.aspx?displaylang=en&FamilyID=86b93c6d-0174-4e25-9e5d-d949dc92d7e8.

- Finally, open the Component Services properties UI (see earlier in this article for how). You will find a new tab called WS-AT. Enable it as shown in the figure below:

Finally

That was a long, I mean a really long, article. I truly believe it will help you understand many of the inner workings of .NET transactions and how they are implemented in WCF. At least, that's what I hope!