Enough is Enough

Day after day, I help financial analysts, managers, and CEOs troubleshoot their freaking business *quick look behind me* with nice visualizations, great multi-dimensional models, and so deaaaaaar Excel.

And ME, I am stuck troubleshooting this stupid legacy program with plain old raw XML files, text files, CSV files, rows and columns... and this ****** command line.

Enough is enough, dear developers and friends.

This is the day we take power into our own hands, the day we fight with the same weapons as the people we serve.

This is the day I decide to spread the word to you: we are not slaves, and we deserve better than stupid text files and command-line interfaces.

... and don't tell me that you have Notepad++, so you can't complain. You deserve way more.

Let's reclaim the power that is ours. Let's proclaim Technical Intelligence.

From Wikipedia:

Business intelligence (BI) is a set of theories, methodologies, processes, architectures, and technologies that transform raw data into meaningful and useful information for business purpose.

So here and now, both on my blog and on CodeProject, in June 2013, I declare:

Technical intelligence (TI) is a set of theories, methodologies, processes, architectures, and technologies that transform raw data into meaningful and useful information for technical purpose.

How great is this buzzword?

This article is about using BI tools for our own technical needs. There is no reason BI practices should not apply to technical data.

With this hacker mind, let's see how we can play with other people's tools for our own purposes.

This journey is about manipulation of information for developers by developers.

In a more poetic way, we'll eat our own dog food.

This article will have almost no code, except where it helps explain my point. It is about ideas for troubleshooting problems from an original point of view; this article will give you ideas.

Disclaimer: The few lines of code in this article are images. The reason for that is not laziness. The point of this article is to show you how to get a higher-level visualization of technical data quickly, by crafting your own tools for your own problems and leveraging existing user applications as best you can.

The code here is throwaway because it has a very narrow use case, specific to my problem.

Aggregate Technical Data with Pivot Tables

If you don’t know about procmon, it probably means that your main tool for debugging other people’s programs was a mix of the rain dance and Google.

You have wasted your life, but fear not: now that you know procmon, you’ll see a whole new world. And that’s only the beginning.

This excellent program was written by Mark Russinovich a long time ago. He is my coding hero for his contributions to Sysinternals, Windows Internals, and Windows Azure.

As you can see, procmon shows every file system and registry operation happening on your system in real time. How cool is that?

A tabular view is fine for sequential analysis.

But sometimes, you want to know about frequencies: “Which program is spamming my hard drive?” or “Where on the disk is Evernote’s data concentrated?”

In that case, you will prefer an aggregated view of the same data… something like this.

Or maybe you prefer an aggregated view of the results of the operations… Easy enough: just change which dimension you map onto columns.
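If you would rather script the aggregation than drag fields around, here is a minimal Python sketch of the same pivot, working on procmon’s CSV export. It assumes procmon’s default column names ("Process Name", "Result", and so on); the three sample rows are made up for illustration, and `pivot_count` is a hypothetical helper, not part of procmon or Excel.

```python
import csv
import io
from collections import Counter

# Made-up excerpt of a procmon CSV export, assuming procmon's default columns.
SAMPLE = r'''"Time of Day","Process Name","PID","Operation","Path","Result","Detail"
"7:35:21.1000000 PM","Evernote.exe","1234","ReadFile","C:\Users\me\Evernote\notes.exb","SUCCESS",""
"7:35:21.2000000 PM","Evernote.exe","1234","WriteFile","C:\Users\me\Evernote\notes.exb","SUCCESS",""
"7:35:21.3000000 PM","SearchIndexer.exe","880","CreateFile","C:\Windows\missing.dat","NAME NOT FOUND",""
'''


def pivot_count(rows, row_dim, col_dim):
    """Count events per (row_dim, col_dim) pair, like a pivot table with
    row_dim on rows, col_dim on columns and Count as the value."""
    counts = Counter((row[row_dim], row[col_dim]) for row in rows)
    cols = sorted({col for _, col in counts})
    table = {}
    for (row_key, col_key), n in counts.items():
        # Start every row with zeros so missing combinations read as 0.
        table.setdefault(row_key, dict.fromkeys(cols, 0))[col_key] = n
    return cols, table


rows = list(csv.DictReader(io.StringIO(SAMPLE)))
# Map "Result" on columns; use "Operation" or "Path" for another view.
cols, table = pivot_count(rows, "Process Name", "Result")
for process in sorted(table):
    print(process, table[process])
```

Passing `"Operation"` instead of `"Result"` as the column dimension is the scripted equivalent of mapping a different field on columns in the pivot table.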

All of this is possible thanks to the undervalued “Export the trace as CSV” in procmon… You guessed it: CSV is an open bar for Excel.

… you can create your pivot table yourself, but since I do this stuff daily, I wrote my own program to tweak procmon’s CSV and break it into the fields that interest me. (Noticed the nice path hierarchy? :))

You can check it out on CodePlex. There is no release, so download the code and compile it yourself… and, more importantly, tweak it to your needs; the code is not difficult. (Sorry Git fans, I did this stuff before becoming a Git fanboy.)
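The following is not the CodePlex tool mentioned above, only a minimal Python sketch of the path-hierarchy idea: split the Path column on backslashes into Level1 to LevelN columns that a pivot table can nest on rows. The helper names and the fixed depth are assumptions for illustration.

```python
import csv
import io


def path_levels(path, depth=4):
    """Split a Windows or registry path on backslashes into exactly `depth`
    components, padding with empty strings when the path is shorter."""
    parts = [part for part in path.split("\\") if part][:depth]
    return parts + [""] * (depth - len(parts))


def add_hierarchy(in_file, out_file, depth=4):
    """Copy a procmon CSV, appending Level1..LevelN columns built from Path."""
    reader = csv.DictReader(in_file)
    level_names = [f"Level{i + 1}" for i in range(depth)]
    writer = csv.DictWriter(out_file, fieldnames=reader.fieldnames + level_names)
    writer.writeheader()
    for row in reader:
        row.update(zip(level_names, path_levels(row["Path"], depth)))
        writer.writerow(row)


print(path_levels(r"C:\Users\me\Evernote\notes.exb", 3))
print(path_levels(r"HKLM\Software", 4))
```

On real files, open both the input and output with `newline=""`, as the `csv` module recommends. Registry paths split the same way as file paths, so both end up in the same hierarchy.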

Know Thy Time

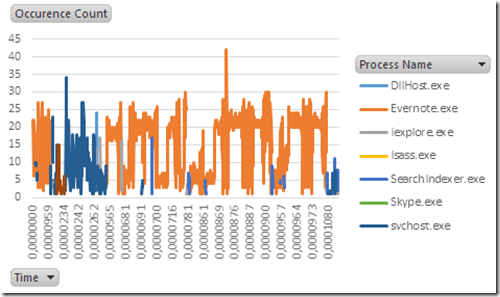

How does disk access evolve over time, per program?

It seems Evernote is continuously hungry!

But hey, that’s cool! It seems that Windows SearchIndexer shuts up as soon as another program gets high data throughput!

To draw this sort of graph, Excel expects a measurement representation of the data rather than an event representation.

Let me show you.

I get this sort of data from procmon…

I created the Time column, which is a numeric representation of the Time of Day column.

This is an event-driven representation of data: one event equals one row.
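The Time column can be derived with an Excel formula or with a small script. Below is a minimal Python sketch that turns a Time of Day value into the fraction of a day Excel uses for times. It assumes a 12-hour "h:mm:ss.fffffff AM/PM" format; the format on your machine may differ (for example with 24-hour regional settings), so adapt the parsing. The function name is hypothetical.

```python
def time_of_day_to_fraction(text):
    """Convert an "h:mm:ss.fffffff AM/PM" time into the fraction of a day
    Excel uses for times (0.0 = midnight, 0.5 = noon)."""
    clock, meridiem = text.strip().split()
    hours, minutes, seconds = clock.split(":")
    hours = int(hours) % 12          # 12 AM and 12 PM both start at 0 here
    if meridiem.upper() == "PM":
        hours += 12                  # shift afternoon hours, so 12 PM becomes 12
    total_seconds = hours * 3600 + int(minutes) * 60 + float(seconds)
    return total_seconds / 86_400    # 86,400 seconds in a day


print(time_of_day_to_fraction("7:35:21.1234567 PM"))
```

Parsing by hand avoids `datetime.strptime`, whose `%f` directive accepts at most six fractional digits, while the sample value has seven.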

On the other hand, if you want to use graphs in Excel, you need this representation: each series in its own column, plus the Time column.

This is a measurement-driven representation of data. You can see each row as one measurement of 10 different properties at the same instant.

So how do you transform an event-driven representation to a measurement-driven representation?

Easy… you just create a pivot table from the event-driven representation of data.

Then put Time on rows and the properties to measure on columns… and just count the occurrences for each Time/Process Name pair.
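The same event-to-measurement pivot can be sketched in a few lines of Python. `to_measurements` (a hypothetical name) takes (time, process) events, where time is the numeric Time column, groups them into buckets of width `resolution`, and emits a Time column plus one count column per process, with zeros where a process had no events in a bucket. The sample events are made up.

```python
from collections import Counter


def to_measurements(events, resolution):
    """Turn (time, process) events into measurement rows: one row per time
    bucket of width `resolution`, one count column per process."""
    counts = Counter()
    for time, process in events:
        bucket = round(time / resolution)  # integer bucket index avoids float keys
        counts[(bucket, process)] += 1
    processes = sorted({process for _, process in counts})
    buckets = sorted({bucket for bucket, _ in counts})
    header = ["Time"] + processes
    rows = [
        [bucket * resolution] + [counts[(bucket, p)] for p in processes]
        for bucket in buckets
    ]
    return header, rows


events = [(0.10, "Evernote.exe"), (0.11, "Evernote.exe"),
          (0.11, "SearchIndexer.exe"), (0.20, "SearchIndexer.exe")]
header, rows = to_measurements(events, resolution=0.1)
print(header)
for row in rows:
    print(row)
```

Like the pivot table, this only emits buckets that contain at least one event, so empty time slots do not show up as rows of zeros.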

The resolution of the Time column is important (i.e., how coarsely you round the Time of Day column).

With a very fine resolution, you get this.

The measurements are noisy and we can’t really see trends, but we can see sharply when each process starts.

High resolution clearly shows that SearchIndexer stops as soon as Evernote needs to do stuff, and resumes as soon as Evernote stops.

On the other hand, if you lower the precision of the time, you can see the trends more clearly, but you lose precision in time.

With a low resolution, you can clearly see two peaks of Evernote activity.

With low resolution, you can deduce when I decided to save a note in Evernote, but you can’t conclude, as you could from the first graph, that SearchIndexer stops as soon as Evernote starts working.

This is a form of the uncertainty principle (think Heisenberg): by sampling, you can’t get both an exact position in time (high resolution) and a clear view of where things are going (low resolution).

But you can sample at multiple resolutions by taking a high-resolution sample and rounding it at different scales, as I have done.

Or use a mathematical tool called wavelets… but I’m just a developer and I don’t know how to use them, so I’ll stop here.

In the first graph, the Time was a numeric representation of the “Time of day” column rounded to 0.00000001, whereas in the second graph, I removed two zeros (0.000001).
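Assuming the Time column holds Excel’s fraction of a day (1.0 = 24 hours), the two rounding steps translate into durations: 0.00000001 day is about 0.86 ms, and 0.000001 day is about 86 ms. A small Python sketch of that arithmetic, plus rounding one hypothetical Time value at both scales to get the multi-resolution view:

```python
SECONDS_PER_DAY = 86_400


def step_in_milliseconds(step):
    """Duration of one rounding step on an Excel time value (a day fraction)."""
    return step * SECONDS_PER_DAY * 1000


for step in (1e-8, 1e-6):
    print(f"rounding to {step:g} day = {step_in_milliseconds(step):.3f} ms")

# Multi-resolution view: round the same high-resolution value at several scales.
t = 0.8162167067  # hypothetical Time value
for digits in (8, 6):
    print(digits, round(t, digits))
```

Keeping the raw high-resolution value and deriving the coarser columns from it means one capture feeds every resolution you want to graph.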

Show Me Who’s Slowing Down My Hard Drive

By transforming procmon’s data, you can easily map the exact same data onto a TreeMap.

Procmon gave me this type of data.

All I need is to transform it into this new form, so that any TreeMap framework will understand it: one column to identify a rectangle (Id), one to identify its parent (Process), one to specify the label (Label), and one to specify the size of the rectangle (Count).

We don’t need a single line of code to do the transformation.

Starting with procmon’s CSV output, create a new calculated column that holds all the information the TreeMap needs, separated by commas.

Next, create a pivot table, put the new column on rows, and count its occurrences.

Copy all the values aside and split the first column on ‘,’ (for example with Excel’s Text to Columns), which leaves us with what we need.

Then merge the columns back into one comma-separated column with Excel’s CONCATENATE function.

Copy that column, and you have your CSV in the right format.
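For those who prefer a script to the Excel round trip, here is a minimal Python sketch producing the same Id, Process, Label, Count layout. Using Path as the Label and building the Id by joining process and label with `|` are assumptions; the function names and sample events are hypothetical.

```python
import csv
import io
from collections import Counter


def treemap_rows(rows, label_field="Path"):
    """Count events per (Process Name, label) and emit Id, Process, Label, Count
    rows: Process names the parent rectangle, Count sizes the child rectangle."""
    counts = Counter((row["Process Name"], row[label_field]) for row in rows)
    table = [["Id", "Process", "Label", "Count"]]
    for (process, label), n in sorted(counts.items()):
        table.append([f"{process}|{label}", process, label, n])
    return table


def to_csv(table):
    """Serialize rows to CSV text; fields containing commas get quoted."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(table)
    return buffer.getvalue()


events = [
    {"Process Name": "Evernote.exe", "Path": r"C:\notes.exb"},
    {"Process Name": "Evernote.exe", "Path": r"C:\notes.exb"},
    {"Process Name": "SearchIndexer.exe", "Path": r"C:\Windows\index.edb"},
]
print(to_csv(treemap_rows(events)))
```

One difference from the CONCATENATE approach: `csv.writer` quotes any field that contains a comma, so paths with commas do not shift the columns.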

Once you have done that, find a simple treemap tool; I ended up with Dojo’s treemap.

Copy their sample and find where it inserts the data.

Replace that data with a placeholder, and adapt the code that refers to columns so it matches your own.

Then hack a quick tool that transforms your CSV into JSON.
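A minimal sketch of such a quick tool in Python follows. The nested `label`/`children`/`size` layout and the `/*DATA*/` placeholder are assumptions, not Dojo’s documented format; reshape the dictionary to whatever the sample you copied actually expects.

```python
import csv
import io
import json


def treemap_csv_to_json(csv_text):
    """Group Id,Process,Label,Count rows under their parent Process and return
    nested JSON in a hypothetical label/children/size layout."""
    parents = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        leaf = {"id": row["Id"], "label": row["Label"], "size": int(row["Count"])}
        parents.setdefault(row["Process"], []).append(leaf)
    tree = {
        "label": "root",
        "children": [{"label": name, "children": kids} for name, kids in parents.items()],
    }
    return json.dumps(tree, indent=2)


def fill_placeholder(template, json_text, placeholder="/*DATA*/"):
    """Swap the placeholder left in the copied sample for the generated JSON."""
    return template.replace(placeholder, json_text)


data = "Id,Process,Label,Count\nEvernote.exe|x,Evernote.exe,x,2\nSearchIndexer.exe|y,SearchIndexer.exe,y,1\n"
page = fill_placeholder("var data = /*DATA*/;", treemap_csv_to_json(data))
print(page)
```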

And enjoy!

The code is not pretty, but it gets to the point quickly, with no over-abstraction.

Conclusion

This was just an introduction; I will probably write about the idea again.

Data never tells any story, your point of view does.

Plain old XML rarely explains anything.

On my blog, ao-sec, I write about the techniques I use to reverse-engineer undocumented stuff, and you don’t need to dive deep into assembler when you know three things: what to measure, how to measure it, and how to see it.

We are developers, so why are we sometimes so afraid to code for ourselves?

The data part is simple: for most of our needs, plain old CSV is enough.

The view part is simple: for most of our needs, we can just use Excel, and there is no shame in that.

Don’t be afraid to use business users’ tools; searching for insight in data is a common need. Don’t let marketing tell you who should use a tool.

Let the hacker mind blossom.