SQL cursor without using real cursor

4.36/5 (7 votes)

Sometimes for processing rowsets we need a cursor, but we may not use standard cursor, we can create our pseudo cursor with much more simple syntax...

Introduction

When we need to process a rowset in the cycle usually we open cursor over it and then make any processing.

For me it is not always fast and convinient in following cases:

1. Selected data stored in table variable in a body of stored procedure

2. Syntax of cursor creation and fetching is difficult (we need create and free cursor, because cursor is a system object)

3. Sometimes we can not use cursor because of changing data used by cursor filter (so cursor can refetch same record again - this can be avoided with additional calculations, but anyway...).

Background

The main Idea is to prepare data for sequential processing without cursor (preparation may be done on client or on server side), after that rowset can be simply processed in WHILE expression.

Preparation of data includes following:

Rowset should have a primary key or some unique field wich can be iterated sequentially - usually INT or BIGINT.

To get that we can use ROW_NUMBER function on SQL server side, or just set some field in CYCLE when preparing on CLIENT side. Or you can use IDENTITY definition in temp variable.

Using the code

Source data table:

CREATE TABLE [SampleData]

(

[Id] [uniqueidentifier] NOT NULL,

[Name] [nvarchar](100) NOT NULL,

CONSTRAINT [PK_SampleData] PRIMARY KEY CLUSTERED ( [Id] ASC )

)

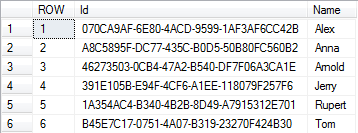

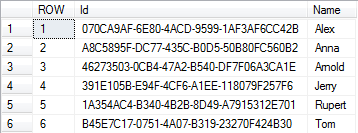

Source data:

INSERT INTO [SampleData]

(Name)

VALUES

('Alex'),

('Anna'),

('Tom'),

('Jerry'),

('Rupert'),

('Arnold')

Prepare data:

Using ROW_NUMBER:

DECLARE @preparedData TABLE (ROW int, Id uniqueidentifier, Name nvarchar(100))

INSERT INTO @preparedData

SELECT ROW_NUMBER() OVER (ORDER BY Name), Id, Name

FROM [SampleData]

The same using IDENTITY:

DECLARE @preparedData TABLE ([ROW] int IDENTITY(1,1), [Id] uniqueidentifier, [Name] nvarchar(100))

INSERT INTO @preparedData

( [Id], [Name] )

SELECT [Id], [Name]

FROM [SampleData]

ORDER BY [Name]

Now we can simply iterate by our variable: @preparedData

DECLARE @row int = 1

DECLARE @id uniqueidentifier = null

DECLARE @name nvarchar(100) = null

SELECT @id = [Id], @name = [Name]

FROM @preparedData

WHERE [ROW] = @row

WHILE @id IS NOT NULL

BEGIN

--Do something with fetched record

PRINT CAST(@id as nvarchar(100)) + ' - ' + @name

--Fetch next record

SET @id = NULL

SET @name = NULL

SET @row = @row + 1

SELECT @id = [Id], @name = [Name]

FROM @preparedData

WHERE [ROW] = @row

END

Results of current processing:

070CA9AF-6E80-4ACD-9599-1AF3AF6CC42B - Alex

A8C5895F-DC77-435C-B0D5-50B80FC560B2 - Anna

46273503-0CB4-47A2-B540-DF7F06A3CA1E - Arnold

391E105B-E94F-4CF6-A1EE-118079F257F6 - Jerry

1A354AC4-B340-4B2B-8D49-A7915312E701 - Rupert

B45E7C17-0751-4A07-B319-23270F424B30 - Tom

Thoughts

Using same technique and possibility to have table variable in stored procedure, we can simply implement some bulk processing of transferred records.

Of course, remember about memory... This should not be used if you whant to process millions of records without partitioning (I meant that you should not to get all records at once, you can use some paging or portions of data).