Analyzing some ‘Big’ Data Using C#, Azure And Apache Hadoop – Analyzing Stack Overflow Data Dumps


Explains how to use Apache Hadoop and Azure to analyze large data sets using Map/Reduce jobs written in C#.

Time to do something meaningful with C#, Azure and Apache Hadoop. In this post, we’ll explore how to create a mapper and a reducer in C# to analyze the popularity of namespaces in Stack Overflow posts. Before we begin, let us briefly go over Hadoop and Map/Reduce concepts.

A Quick Introduction To Map Reduce

Map/Reduce is a programming model for processing insanely large data sets, initially implemented by Google. The Map and Reduce functions are pretty simple to understand.

- Map(list) -> List of Key, Value
  - The Map function processes a data set and splits it into multiple key/value pairs.
- Aggregate, Group
  - The Map/Reduce framework may perform operations such as grouping and sorting on the output of the Map function. Grouping is done by key, and all the values for a given key are passed to the Reduce function.
- Reduce(Key, List of Values for the key) -> Another List of Key, Value
  - The Reduce function typically performs an aggregate function (sum, average, or something more complex) over all the values for a given key.
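To make the three steps concrete, here is a minimal single-machine sketch in C# that maps each input record to key/value pairs, groups the pairs by key, and reduces each group, using LINQ. It only illustrates the model; it is not how Hadoop is implemented, and the names InMemoryMapReduce and Run are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Single-process sketch of the Map -> Group -> Reduce flow.
// Hadoop runs the same three phases distributed across many nodes.
public static class InMemoryMapReduce
{
    public static IEnumerable<TResult> Run<TInput, TKey, TValue, TResult>(
        IEnumerable<TInput> input,
        Func<TInput, IEnumerable<KeyValuePair<TKey, TValue>>> map,
        Func<TKey, IEnumerable<TValue>, TResult> reduce)
    {
        return input
            // Map: each input record produces zero or more key/value pairs
            .SelectMany(map)
            // Group: collect all values that share the same key
            .GroupBy(pair => pair.Key, pair => pair.Value)
            // Reduce: aggregate the values of each key into one result
            .Select(group => reduce(group.Key, group));
    }
}
```

For example, a word count maps each line to (word, 1) pairs and reduces each group by summing the ones: `InMemoryMapReduce.Run(lines, line => line.Split(' ').Select(w => new KeyValuePair<string, int>(w, 1)), (word, ones) => word + " " + ones.Sum())`.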

The interesting part is that you can use a Map/Reduce framework like Apache Hadoop to parallelize Map/Reduce operations over a big data set. The framework takes responsibility for many operations, including deploying the Map and Reduce functions to multiple nodes, aggregating the output of the map tasks and passing it to the reduce tasks, and handling fault tolerance if a node goes down.

There is an excellent visual explanation from Ayende @ Rahien if you are new to the concept.

Apache Hadoop and Hadoop Streaming

Apache Hadoop is a very mature distributed computing framework that provides Map/Reduce functionality.

Hadoop Streaming is a utility that ships with Apache Hadoop and lets you create and run Map/Reduce jobs with any executable as the mapper or reducer. With Hadoop Streaming, your map and reduce executables read their input from the console (standard input) and write their output to the console (standard output), and Hadoop takes care of piping the data to and from your mapper and reducer.
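Under the default Hadoop Streaming settings, each line is one record, and everything before the first tab character is treated as the key; if a line has no tab, the whole line is the key. The mapper and reducer in this post get away with plain lines, but if you later emit key/value pairs, a small helper like the one below keeps that parsing in one place. This is a sketch that assumes the default tab separator; StreamingLine and its methods are hypothetical names.

```csharp
using System.Collections.Generic;
using System.IO;

// Helpers for the default Hadoop Streaming line protocol:
// key = text before the first tab, value = text after it.
public static class StreamingLine
{
    // Splits one record. With no tab, the whole line is the key
    // (this sketch uses an empty string for the missing value).
    public static KeyValuePair<string, string> Parse(string line)
    {
        int tab = line.IndexOf('\t');
        return tab < 0
            ? new KeyValuePair<string, string>(line, string.Empty)
            : new KeyValuePair<string, string>(line.Substring(0, tab), line.Substring(tab + 1));
    }

    // Formats a key/value pair as one tab-separated output record.
    public static string Format(string key, string value)
    {
        return key + "\t" + value;
    }

    // Reads records until end of input, like the Console.ReadLine loops in this post.
    public static IEnumerable<KeyValuePair<string, string>> ReadAll(TextReader reader)
    {
        string line;
        while ((line = reader.ReadLine()) != null)
        {
            yield return Parse(line);
        }
    }
}
```

A mapper or reducer would iterate over `StreamingLine.ReadAll(Console.In)` in its Main method and write results with `Console.WriteLine(StreamingLine.Format(key, value))`.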

From a Hadoop head node, you can run the following command to start your Map/Reduce job. Don’t worry about it too much; later you’ll see that the Hadoop dashboard we are using in Azure provides a decent UI for composing the Map/Reduce command when we create a new job.

$HADOOP_HOME/bin/hadoop jar $HADOOP_HOME/hadoop-streaming.jar -input myInputDirs -output myOutputDir -mapper <yourmapperexecutable> -reducer <yourreducerexecutable>

Presently, a Developer Preview version of Hadoop is available on Windows Azure, so you can head over to https://www.hadooponazure.com/ and sign in to create your own Hadoop clusters and run Map/Reduce jobs. Once you sign up, you can use the Javascript interactive console to interact with your Hadoop cluster.

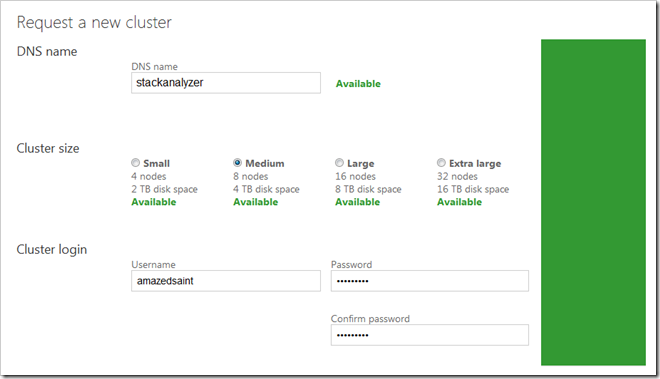

Setting up your Hadoop Cluster in Azure

Once you are signed in to https://www.hadooponazure.com/, you can easily set up your Hadoop cluster in Azure. Request a cluster and give it a name. I’m requesting a cluster named Stackanalyzer, so that I can access it at stackanalyzer.cloudapp.net for remote login and so on.

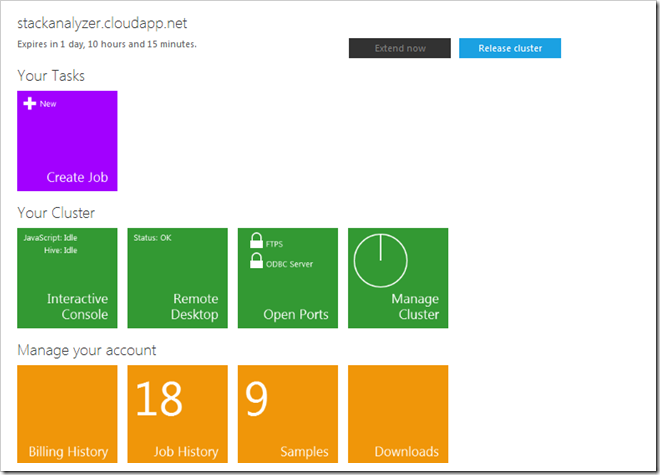

You need to wait a few minutes until your cluster is ready. Once the cluster setup is done, you can access the cluster dashboard. You can work with the cluster through the Javascript/Hive interactive console, through Remote Desktop, and so on. In this example, we’ll use the Javascript console to upload our mapper, reducer and data files to the cluster.

Getting Some Stackoverflow Data To Analyze

Now, let us analyze some real data. You can go to the Stack Exchange Data Explorer, compose a query, and download the results. For example, you can run the following query at http://data.stackexchange.com/stackoverflow/query/new to get some posts data. This is just an example; you can issue any query, or use the posts data from the entire Stack Overflow data dump.

select top 10000 Posts.id, Posts.body from posts where posts.ViewCount>10000

Download the results as a CSV file, save it as “data.csv”, and keep it handy; we’ll upload it to the cluster later.

Creating our Mapper And Reducer applications in C#

Now, let us create our Mapper and Reducer applications in C#. Fire up Visual Studio and create two C# console applications. Make sure you compile both applications in Release mode, since those are the builds we will deploy to the Hadoop cluster. Once we start the job, Hadoop Streaming will push the lines of our Stack Overflow CSV file to the mapper one by one through standard input. So the mapper uses a regular expression to find every C# using directive (such as using System.Text;) in each line and writes each match to the console. The code in our mapper program looks like this:

```csharp
// Simple mapper that extracts C# using directives from each input line
using System;
using System.Text.RegularExpressions;

namespace StackOverflowAnalyzer.Mapper
{
    class Program
    {
        static void Main(string[] args)
        {
            string line;
            // "using", one whitespace character, a (possibly empty) dotted name, then ';'
            Regex reg = new Regex(@"(using)\s[A-Za-z0-9_\.]*\;");

            // Hadoop Streaming feeds the input to stdin, one line at a time
            while ((line = Console.ReadLine()) != null)
            {
                // Emit every using directive found in this line, one per output line
                foreach (Match match in reg.Matches(line))
                {
                    Console.WriteLine("{0}", match.Value);
                }
            }
        }
    }
}
```
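The mapper above emits every using directive it finds, whatever the namespace. If you want to count only namespaces under System, a variation of the matching logic could look like the sketch below. It is not part of the original project: SystemUsingExtractor is a hypothetical name, and the pattern assumes a single whitespace character after using, as in the original regex.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Variation on the mapper's matching logic that keeps only using
// directives for System or System.* namespaces.
public static class SystemUsingExtractor
{
    // "using", one whitespace character, "System", zero or more ".Identifier" parts, then ';'
    private static readonly Regex SystemUsing =
        new Regex(@"\busing\sSystem(\.[A-Za-z0-9_]+)*;");

    // Yields each matching directive in the order it appears in the line.
    public static IEnumerable<string> Extract(string line)
    {
        foreach (Match match in SystemUsing.Matches(line))
        {
            yield return match.Value;
        }
    }
}
```

In the mapper you would replace the `reg.Matches(line)` loop with `foreach (var directive in SystemUsingExtractor.Extract(line)) Console.WriteLine(directive);`.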

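The reducer relies on the fact that Hadoop sorts the map output by key before handing it over, so identical using directives arrive on consecutive lines and the reducer only has to count each run:

```csharp
// Simple reducer that counts how many times each using directive occurs
using System;

namespace StackOverflowAnalyzer.Reducer
{
    class Program
    {
        static void Main(string[] args)
        {
            string ns, prevns = null;
            int nscount = 0;

            // Input is sorted by key, so equal lines are adjacent
            while ((ns = Console.ReadLine()) != null)
            {
                if (prevns != ns)
                {
                    // A new key starts: write out the count of the previous one
                    if (prevns != null)
                    {
                        Console.WriteLine("{0} {1}", prevns, nscount);
                    }
                    prevns = ns;
                    nscount = 1;
                }
                else
                {
                    nscount += 1;
                }
            }

            // Write out the last key (nothing to write if the input was empty)
            if (prevns != null)
            {
                Console.WriteLine("{0} {1}", prevns, nscount);
            }
        }
    }
}
```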

Again, please note that you have to compile both mapper and reducer in Release mode.
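Before deploying, it can save a round trip to the cluster to run the same map, sort and reduce sequence locally. The sketch below chains the mapper's regex and the reducer's run counting in one process, with an ordinal sort standing in for the sort Hadoop performs between the two phases; for ASCII keys like these, an ordinal comparison orders them the same way as Hadoop's byte-wise key comparison. LocalStreamingRun is a hypothetical helper, not something Hadoop provides.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Text.RegularExpressions;

// Local stand-in for the streaming job: map every line, sort the map
// output, then reduce, all in a single process.
public static class LocalStreamingRun
{
    private static readonly Regex UsingDirective = new Regex(@"(using)\s[A-Za-z0-9_\.]*\;");

    // Same logic as the mapper: one output line per using directive found.
    public static IEnumerable<string> Map(TextReader input)
    {
        string line;
        while ((line = input.ReadLine()) != null)
        {
            foreach (Match match in UsingDirective.Matches(line))
            {
                yield return match.Value;
            }
        }
    }

    // Same logic as the reducer: count runs of identical, already sorted lines.
    public static IEnumerable<string> Reduce(IEnumerable<string> sortedKeys)
    {
        string prev = null;
        int count = 0;
        foreach (var key in sortedKeys)
        {
            if (key != prev)
            {
                if (prev != null) yield return prev + " " + count;
                prev = key;
                count = 1;
            }
            else
            {
                count++;
            }
        }
        if (prev != null) yield return prev + " " + count;
    }

    // Map, sort with an ordinal (UTF-16 code unit) comparison, then reduce.
    public static List<string> Run(TextReader input)
    {
        var sorted = Map(input).OrderBy(k => k, StringComparer.Ordinal);
        return Reduce(sorted).ToList();
    }
}
```

For example, `LocalStreamingRun.Run(new StreamReader("data.csv"))` returns lines in the same shape as the job output, so you can compare a local run with what the cluster produces.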

Deploying our Mapper, Reducer and Data Files to the Azure Hadoop Cluster

Go to the Azure Hadoop cluster dashboard, and select the Javascript console.

- The Javascript console provides utility methods such as fs.put() for uploading your files to HDFS. Type help() to see a few of these utility commands.

- You can run Apache Pig commands from the Javascript console. You can think of Pig as almost a distributed LINQ, and you’ll love Pig commands since they are similar to LINQ concepts. We are not using Pig in this example.

- You can execute Hadoop file system commands from the Javascript console using a # prefix, for example #ls to list files or #cat <filepath> to view the contents of a file (brush up your Unix/Linux skills).

Let us upload the mapper, the reducer and our CSV data file to the cluster. In the Javascript console, type fs.put(), browse to your mapper executable, and upload it to the destination /so/bin/mapper.exe.

In the same way, you may

- Upload the Reducer executable to the location /so/bin/reducer.exe

- Upload our Stackoverflow CSV data file to the location /so/data/data.csv

Sometimes I’ve found that the web uploader doesn’t work for large data sets. In that case, you can log in remotely to your node from the dashboard and use the Hadoop Command Shell to copy the files from your local file system to HDFS. If you’ve downloaded your CSV to the local path d:\data.csv on your cluster’s name node, you can issue the following Hadoop FsShell command to copy the file to the desired location:

hadoop fs -put d:\data.csv /so/data/data.csv

You can verify that your files are in place from the Javascript console by issuing the commands #ls /so/bin and #ls /so/data. Now that you’ve got your goodies in the cluster, let us execute the job.

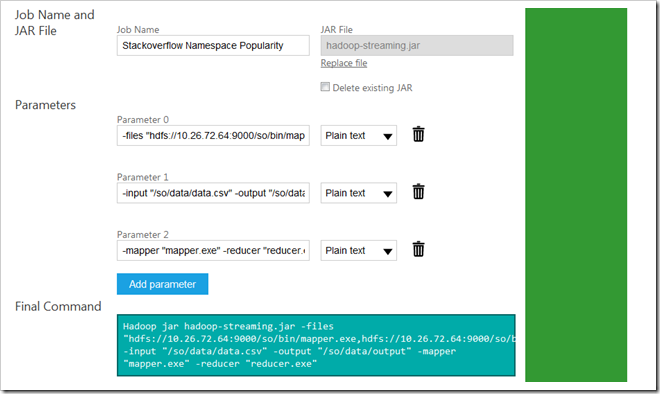

Creating and Executing the Job

You can create and execute a job either from the dashboard or directly from the Hadoop Command Shell. To keep things simple, let us create a new job using the dashboard. You need the hadoop-streaming.jar file to create a new streaming job; you can download it from the C# Streaming example file list under the Samples section of your Hadoop on Azure dashboard. Download it and keep the hadoop-streaming.jar file handy. Also, note down the IP/URL of your cluster’s HDFS name node; you can find it in the core-site.xml file by issuing the following command in the Javascript console:

#cat file:///apps/dist/conf/core-site.xml
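core-site.xml is a plain Hadoop configuration file made of `<property>` elements, each holding a `<name>` and a `<value>`. If you prefer to pull the name node URL out programmatically, for instance from the text printed by the #cat command, a LINQ to XML sketch could look like this. It assumes the address is stored under fs.default.name (the key used by older Hadoop releases) and falls back to fs.defaultFS (the newer key); check which one your cluster uses. HadoopSiteConfig is a hypothetical name.

```csharp
using System.Linq;
using System.Xml.Linq;

// Reads property values from the text of a Hadoop *-site.xml file.
public static class HadoopSiteConfig
{
    // Returns the <value> of the first <property> whose <name> matches, or null.
    public static string GetProperty(string xml, string name)
    {
        var doc = XDocument.Parse(xml);
        return doc.Descendants("property")
            .Where(p => (string)p.Element("name") == name)
            .Select(p => ((string)p.Element("value"))?.Trim())
            .FirstOrDefault();
    }

    // Assumption: older releases use fs.default.name, newer ones fs.defaultFS.
    public static string GetDefaultFileSystem(string xml)
    {
        return GetProperty(xml, "fs.default.name") ?? GetProperty(xml, "fs.defaultFS");
    }
}
```

The host and port in the returned value (for example hdfs://host:9000) are what goes in front of each path in the -files parameter of the job.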

So now, let us go ahead and create the job from the Hadoop for Azure dashboard. In the new job screen, specify values for the files, input, output, mapper and reducer parameters, and choose hadoop-streaming.jar as the JAR file. Please make sure you use the actual IP/URL you obtained in the previous step instead of mine:

hadoop jar hadoop-streaming.jar -files "hdfs://10.26.72.64:9000/so/bin/mapper.exe,hdfs://10.26.72.64:9000/so/bin/reducer.exe" -input "/so/data/data.csv" -output "/so/data/output" -mapper "mapper.exe" -reducer "reducer.exe"
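The fiddly part of that command is the -files value, a comma-separated list of HDFS URLs that must not contain spaces. If you script job submissions, building the arguments in code avoids that mistake. This is a sketch: StreamingJobCommand and its parameters are hypothetical, and the flags are only the ones used in the command above.

```csharp
using System.Collections.Generic;
using System.Linq;

// Builds the argument string for the streaming job used in this post.
public static class StreamingJobCommand
{
    public static string Build(string nameNode, IEnumerable<string> hdfsFiles,
                               string input, string output, string mapper, string reducer)
    {
        // Prefix each path with the name node URL and join with a bare comma (no spaces).
        var files = string.Join(",", hdfsFiles.Select(f => nameNode.TrimEnd('/') + f.Trim()));
        return string.Format(
            "jar hadoop-streaming.jar -files \"{0}\" -input \"{1}\" -output \"{2}\" -mapper \"{3}\" -reducer \"{4}\"",
            files, input, output, mapper, reducer);
    }
}
```

Calling `StreamingJobCommand.Build("hdfs://10.26.72.64:9000", new[] { "/so/bin/mapper.exe", "/so/bin/reducer.exe" }, "/so/data/data.csv", "/so/data/output", "mapper.exe", "reducer.exe")` produces the arguments of the command above, without the leading hadoop.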

If you run into errors, check that all the file paths are correct and that there are no spaces between the URLs in the files parameter. If things go well, you’ll see the job’s result screen in the dashboard.

Now, from the Javascript console, inspect the output of the job:

js> #cat /so/data/output/part-00000

The output below shows how many times each namespace was used in the set of posts we examined. You could run tasks like this over an entire data set, be it Stack Overflow or some other data set, to parallelize your analysis or calculations with the help of Azure and Hadoop.

```
using ; 1
using B; 1
using BerkeleyDB; 1
using Castle.Core.Interceptor; 1
using Castle.DynamicProxy; 1
using ClassLibrary1; 1
using ClassLibrary2; 1
using Dates; 1
using EnvDTE; 2
using G.S.OurAutomation.Constants; 1
using G.S.OurAutomation.Framework; 2
using HookLib; 1
using Ionic.Zip; 1
using Linq; 1
using MakeAggregateGoFaster; 1
using Microsoft.Build.BuildEngine; 1
using Microsoft.Build.Framework; 2
using Microsoft.Build.Utilities; 2
using Microsoft.Office.Interop.Word; 1
using Microsoft.SharePoint.Administration; 1
using Microsoft.SqlServer.Management.Smo; 1
using Microsoft.SqlServer.Server; 1
using Microsoft.TeamFoundation.Build.Client; 1
using Microsoft.TeamFoundation.Build; 1
using Microsoft.TeamFoundation.Client; 1
using Microsoft.TeamFoundation.WorkItemTracking.Client; 1
using Microsoft.Web.Administration; 1
using Microsoft.Web.Management.Server; 1
using Microsoft.Xna.Framework.Graphics; 1
using Microsoft.Xna.Framework; 1
using Mono.Cecil.Cil; 1
using Mono.Cecil.Rocks; 1
using Mono.Cecil; 1
using NHibernate.SqlCommand; 1
using NHibernate; 1
using NUnit.Framework; 1
using Newtonsoft.Json; 1
using Spring.Context.Support; 1
using Spring.Context; 1
using StructureMap; 1
using System.Collections.Generic; 31
using System.Collections.ObjectModel; 2
using System.Collections; 3
using System.ComponentModel.DataAnnotations; 1
using System.ComponentModel.Design; 1
using System.ComponentModel; 7
using System.Configuration; 1
using System.Data.Entity; 1
using System.Data.Linq.Mapping; 1
using System.Data.SqlClient; 3
using System.Data.SqlTypes; 1
using System.Data; 4
using System.Diagnostics.Contracts; 3
using System.Diagnostics; 15
using System.Drawing.Design; 1
using System.Drawing; 3
using System.Dynamic; 3
using System.IO.Compression; 1
using System.IO.IsolatedStorage; 1
using System.IO; 11
using System.Linq.Expressions; 4
using System.Linq; 21
using System.Management; 1
using System.Net; 1
using System.Reflection.Emit; 6
using System.Reflection; 9
using System.Resources; 1
using System.Runtime.Caching; 1
using System.Runtime.CompilerServices; 3
using System.Runtime.ConstrainedExecution; 3
using System.Runtime.InteropServices.ComTypes; 3
using System.Runtime.InteropServices; 14
using System.Runtime.Remoting.Messaging; 1
using System.Runtime.Serialization; 1
using System.Security.Cryptography; 1
using System.Security.Principal; 1
using System.Security; 2
using System.ServiceModel; 1
using System.Text.RegularExpressions; 2
using System.Text; 17
using System.Threading.Tasks; 2
using System.Threading; 8
using System.Web.Caching; 1
using System.Web.Configuration; 1
using System.Web.DynamicData; 1
using System.Web.Mvc; 4
using System.Web.Routing; 1
using System.Web; 4
using System.Windows.Controls.Primitives; 1
using System.Windows.Controls; 4
using System.Windows.Forms.VisualStyles; 1
using System.Windows.Forms; 9
using System.Windows.Input; 3
using System.Windows.Media; 2
using System.Windows.Shapes; 1
using System.Windows.Threading; 1
using System.Windows; 4
using System.Xml.Serialization; 4
using System.Xml; 2
using System; 112
using WeifenLuo.WinFormsUI.Docking; 1
using base64; 1
using confusion; 1
using directives; 1
using mysql; 1
using pkg_ctx; 1
using threads; 1
```
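Once you have copied the contents of part-00000 to your machine, a short C# sketch can rank the namespaces by count. It assumes each line ends with the count as its last whitespace-separated token, as in the output above; UsingCounts is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Parses job output lines such as "using System; 112" and ranks them by count.
public static class UsingCounts
{
    public static Dictionary<string, int> Parse(IEnumerable<string> lines)
    {
        var counts = new Dictionary<string, int>(StringComparer.Ordinal);
        foreach (var raw in lines)
        {
            var line = raw.TrimEnd();
            // The count follows the last space or tab on the line.
            int split = line.LastIndexOfAny(new[] { ' ', '\t' });
            int count;
            if (split <= 0 ||
                !int.TryParse(line.Substring(split + 1), NumberStyles.None, CultureInfo.InvariantCulture, out count))
            {
                continue; // skip blank or malformed lines
            }
            var key = line.Substring(0, split).TrimEnd();
            counts[key] = counts.TryGetValue(key, out var existing) ? existing + count : count;
        }
        return counts;
    }

    // Highest counts first; ties broken by ordinal key order.
    public static List<KeyValuePair<string, int>> Top(Dictionary<string, int> counts, int n)
    {
        return counts.OrderByDescending(kv => kv.Value)
                     .ThenBy(kv => kv.Key, StringComparer.Ordinal)
                     .Take(n)
                     .ToList();
    }
}
```

For example, `UsingCounts.Top(UsingCounts.Parse(File.ReadLines("part-00000")), 10)` would return the ten most used directives; for the run above, using System; comes first with 112.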

Conclusion

In the above post, we examined how to set up a Hadoop cluster in Azure and, more importantly, how to deploy your C# Map/Reduce jobs to Hadoop to do something meaningful. There is huge potential here, and you can start writing your own C# Map/Reduce jobs to solve your organization’s Big Data problems. Enjoy, and happy coding.


Updated: Just extracted the Top 500 MSDN Links from Stackoverflow Posts, details here.